Colour Terms, Syntax and Bayes: - Linguistics and English Language

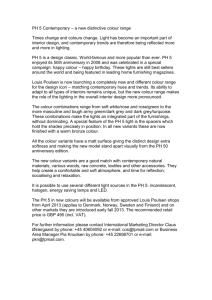
