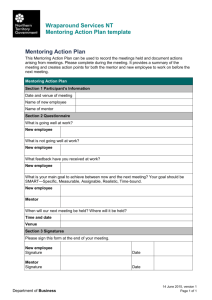

Realist Synthesis: Supplementary reading 6: Digging for nuggets: how ‘bad’ research can yield ‘good’ evidence

Digging for nuggets: how ‘bad’ research can yield ‘good’ evidence

Abstract

A good systematic review is often likened to the pre-flight instrument check ensuring a plane is airworthy before take-off. By analogy, research synthesis follows a disciplined, formalised, transparent and highly routinised sequence of steps in order that its findings can be considered trustworthy - before being launched on the policy community. The most characteristic aspect of that schedule is the appraise-then-analyse sequence. The research quality of the primary studies is checked out and only those deemed to be of high standard may enter the analysis, the remainder being discarded. This paper rejects this logic, arguing that the ‘study’ is not the appropriate unit of analysis for quality appraisal in research synthesis. There are often nuggets of wisdom in methodologically weak studies and systematic review disregards them at its peril. Two evaluations of youth mentoring programmes are appraised at length. A catalogue of doubts is raised about their design and analysis. Their conclusions, which incidentally run counter to each other, are highly questionable. Yet there is a great deal to be learned about the efficacy of mentoring if one digs into the specifics of each study. ‘Bad’ research may yield ‘good’ evidence - but only if the reviewer follows an approach which involves analysis-and-appraisal.

Introduction

Interest in the issue of ‘research quality’ is at an all-time high. Undoubtedly, one of the key spurs to the quest for higher standards in social research is the evidence-based policy movement. The chosen instrument for figuring out best practice for forthcoming interventions in a particular policy domain is the systematic review of all first-rate evidence from bygone studies in that realm.
In trying to piece together the evidence that should carry weight in policy formation, a key step in the logic is to provide an ‘inclusion criterion’ as a means of identifying those studies upon which most reliance should be placed. This paper questions this strategy, beginning with a short rehearsal of the role of quality appraisal in systematic review. This brief history commences with a backward glance at the short-sighted world of meta-analytic reviews in which double-blinded, randomised controlled trials are deemed to provide the source of gold-standard evidence. It then moves to present-day attempts to provide parallel appraisal tools for the full range of social research strategies. In particular, the difficulties of using quality frameworks to appraise qualitative research are discussed. One particular impediment is highlighted in the focal section of the paper. My basic hypothesis is that the appraisal tool has to work in parallel with the nature of the synthesis. If, as in meta-analysis, that synthesis is arithmetic and has no other objective than to calculate the mean effect of a class of programmes then indeed there is no need to look beyond RCTs as the source of primary evidence. If, however, the ambition is to provide an explanatory synthesis then the appraisal tool should be subordinate to the particular explanation being pursued. The wide-ranging, whole-study nature of qualitative appraisal tools means that they cover aspects of the primary inquiries that may be quite irrelevant to the explanatory thesis pursued in a review. The consequence of wielding such a generic quality axe is that credible explanatory messages from otherwise poor studies are lost to the review. The final section of the paper forwards an alternative approach to research appraisal, considering how to make the most of mixed messages from curate’s eggs. Two qualitative studies from the field of mentoring research are used as illustrations.
Quality appraisal in systematic review

Systematic reviews carry supreme influence in the world of evidence-based policy, so argue the disciples, because they are ruthlessly methodical. A set of steps, known as a ‘protocol’, is established through which all reviews have to pass, and in the passing they are deemed to produce a cumulative and objective body of knowledge. I reproduce a template of the classic meta-analytic review in Figure 1. There are any number of such operational diagrams and flowcharts in the literature. Some specify six stages, some seven and more. Some include a preliminary feasibility study. Some include a planning stage. Some make room for periodic updating as new primary studies continue to trickle in. My rather more humble effort below (based on Alderson et al, 2003 and CRD, 2001) is only intended to capture the essentials:

Figure 1: Simplified systematic review template

1. Formulating the review question. Identifying the exact hypothesis to be tested about the particular efficacy of a particular class of interventions.
2. Identifying and collecting the evidence. Searching for and retrieving all the relevant primary studies of the intervention in question. Comprehensive probing of data-banks, bibliographies and websites.
3. Appraising the quality of the evidence. Deciding which of the foregathered studies is valid and serviceable for further analysis by distinguishing rigorous from flawed primary studies.
4. Extracting and processing the data. Presenting the raw evidence on a grid, gathering the relevant information from all primary inquiries.
5. Synthesising the data. Collating and summarising the evidence in order to address the review hypothesis. Using statistical methods to estimate the mean effect of a class of programmes.
6. Disseminating the findings. Reporting the results to the wider policy community in order to influence new programming decisions. Identifying best practice and eliminating dangerous interventions.
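The appraise-then-analyse sequence of steps 3 and 5 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Study` record, the study labels, the numeric `design_level` ranking (1 standing for an RCT) and all effect sizes and variances are hypothetical, and the inverse-variance weighting is one common way of computing a pooled mean effect, not a method specified in the template.

```python
from dataclasses import dataclass


@dataclass
class Study:
    name: str          # hypothetical study label
    design_level: int  # assumed rank in an evidence hierarchy (1 = RCT)
    effect: float      # illustrative effect size, not real data
    variance: float    # illustrative sampling variance of that effect


def appraise(studies, max_level=1):
    """Step 3: keep only the studies that clear the design threshold."""
    return [s for s in studies if s.design_level <= max_level]


def pooled_mean_effect(studies):
    """Step 5: fixed-effect mean, weighting each study by 1 / variance."""
    weights = [1.0 / s.variance for s in studies]
    weighted_sum = sum(w * s.effect for w, s in zip(weights, studies))
    return weighted_sum / sum(weights)


studies = [
    Study("A", 1, 0.30, 0.01),
    Study("B", 1, 0.10, 0.04),
    Study("C", 6, 0.50, 0.02),  # qualitative study: discarded before analysis
]

included = appraise(studies)               # studies A and B only
mean_effect = pooled_mean_effect(included)
print(f"{len(included)} studies pooled, mean effect {mean_effect:.2f}")
```

Whatever study C has to say about why the programme works never reaches the analysis, since the filter operates on the whole study before any synthesis begins.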
Our interest here lies, of course, in step 3 – the ‘critical appraisal’ or ‘quality threshold’ stage in which the primary studies are lined up and inspected for their rigour and trustworthiness. The implication here is that ‘evidence’ comes in all sorts of shapes and sizes, with some of it being distinctly sloppy and untrustworthy. This sentiment is rooted in evidence-based medicine and is fixed upon its bête noire, namely - the ‘expert opinions’ of doctors and clinicians. “The publication of Archie Cochrane’s radical critique, Efficiency and Effectiveness, 25 years ago stimulated a penetrating examination of the degree to which medical practice is based on robust demonstrations of clinical effectiveness... Instead of practice being dominated by opinion (possibly ill-informed) and by consensus formed in poorly understood ways by ‘experts’, the idea is to shift the centre of gravity of health care decision making towards an explicit consideration and incorporation of research evidence.” (Sheldon, 1997). Here is the call for a methodological tribunal at the centre of systematic reviews. The core idea is to exclude entirely from further analysis any research that falls short of acceptable scientific standards. There are a number of such ‘hierarchies of evidence’ designed for evidence-based policy, which use a variety of rankings and subgroupings.
Figure 2, based on Davies et al (2000) and CRD (2001), serves as a rough amalgam, illustrating the standing of different strategies as perceived by the ‘rigorous paradigm’:

Figure 2: Simplified structure of the hierarchy of evidence in meta-analysis

Level 1: Randomised controlled trials (with concealed allocation)
Level 2: Quasi-experimental studies (using matching)
Level 3: Before-and-after comparisons
Level 4: Cross sectional, random sample studies
Level 5: Process evaluation, formative studies and action research
Level 6: Qualitative case study and ethnographic research
Level 7: Descriptive guides and examples of good practice
Level 8: Professional and expert opinion
Level 9: User opinion

The qualitative turn

Needless to say, such a pecking order has proved highly controversial. It is redundant in many areas of policy evaluation in which RCTs are not feasible and it is a strange ‘gold standard’ that is quite unable to peer inside the ‘black box’ of programme implementation. Methodological fisticuffs of this sort are not my topic on this occasion, so it suffices to invoke the stock criticism here, which is that such a hierarchy undervalues the contribution made by other research perspectives. What should be happening, choruses the opposition, is that the pecking order should be replaced by a horses-for-courses approach, which recognises equally the contribution made to programme understanding by other research strategies. Let me quote an example of this sentiment, with no further embellishment other than to encourage the reader into noting the name of its author: “Qualitative knowledge is absolutely essential as a prerequisite foundation for quantification in any science. Without competence at the qualitative level, one’s computer printout is misleading or meaningless.
We failed in our thinking about programme evaluation methods to emphasise the need for a qualitative context that could be depended upon… To rule out plausible hypotheses we need situation specific wisdom. The lack of this knowledge (whether it be called ethnography or program history or gossip) makes us incompetent estimators of programme impacts, turning out conclusions that are not only wrong, but often wrong in socially destructive ways.” (Campbell, 1984) Campbell’s entreaty here has been taken up enthusiastically, if not always by members of the evidence-based policy Collaboration named in his honour, many of whom remain die-hard supporters of the RCT (Farrington and Walsh, 2001). And in respect of critical appraisal, the consequence is that there are now several dozen attempts to set down frameworks and tools to assess the quality of qualitative research.¹ I bring my brief history up to date by describing, arguably, the most significant of these – namely ‘Quality in Qualitative Evaluation’, a report produced for the UK Cabinet Office (Spencer et al, 2003). What hits one in the eye about this particular appraisal checklist is the enormity of its reach; to be sure it is a ‘full kit inspection’. The key reform is to inculcate standards for a much greater range of investigatory activities. In the classic meta-analytic reviews the quality assessment focus goes little beyond design issues (i.e. is it a double-blinded RCT?) as the telling feature of the original studies. The new standards establishment aims to cover all phases of the research cycle. And in many ways this is an entirely reasonable expectation. There is no pronounced emphasis in qualitative research on design. In these circles it is no disgrace to suck-it-and-see, with terms like ‘unstructured’, ‘flexible’, and ‘adaptive’ being the watchwords of a good design.
Accordingly, good qualitative research is widely recognised as multi-faceted (or ‘organic’ or ‘holistic’) and the Cabinet Office framework responds by having something to say under 8 major headings on the following aspects of research: ‘findings’, ‘sample’, ‘data collection’, ‘analysis’, ‘reporting’, ‘reflexivity and neutrality’, ‘ethics’ and ‘auditability’. Each of these features is then subdivided so that, for instance, in considering the quality of ‘findings’ the reviewer is expected to gauge their ‘credibility’, their ‘knowledge extension’, their ‘delivery on objectives’, their ‘wider inferences’ and the ‘basis of evaluative appraisal’. The original subdivision into the 8 research stages thus jumps to 18 major themes or ‘appraisal questions’. I will not list them all here because for each of these questions there are then ‘quality indicators’, usually running to 4 to 5 per theme. This leaves us with a final set of 75 indicators with which to judge the quality of qualitative research. Again, I refrain from attempting a listing, though it is worth reproducing a couple of items (below) for they illustrate the intended mode of application. That is to say, the indicators are not decision points (is it an RCT or not?). Rather they invite the appraiser to examine rather more complex propositions as ‘possible features for consideration’, as for example:

Is there discussion of access and methods of approach and how these might have affected participation/coverage? (a ‘sampling’ indicator)

Is there a clear conceptual link between analytic commentary and presentation of original data? (a ‘reporting’ indicator)

¹ Alas it is impossible to appraise all of these appraisal tools here. For example, another candidate for inspection might be the approach used by the EPPI group, on which there has already been a ferocious barrage of opinion and counter opinion (Oakley 2003; MacLure 2005). I pinpoint the Cabinet Office study for its provenance, because it is a distillation of many previous schemas and, above all, because it is the most clearly, formally and openly articulated.

As if all this impeccable detail were not enough, Spencer et al’s report concludes with some self-reflection on possible omissions from the framework (such as insufficient coverage of ethics and the failure to include different sub-types of qualitative research such as discourse analysis). To summarise, however, one can say that the final product is remarkably comprehensive, covering at least the A-to-Y of qualitative inquiry.

Using the instrument

But now the point is reached for my own critical appraisal. The question I want to concentrate on is the utility of the instrument. Can such a tool be used to support systematic review? Can it act as a quality filter with which to appraise primary studies? Such an expectation undoubtedly fed into the commissioning of the framework. The underlying ambition is to draw a firm parallel with the Cochrane model. In order to upgrade the profile of qualitative research in the policy process, the strategy is to provide a rigorous and defensible ‘inclusion criterion’ as a means of sorting wheat from chaff in a paradigm that feels exposed to charges of subjectivity and bias. I begin my critique by briefly rehearsing five practical impediments to using such an instrument as a quality threshold. My initial aim is to air some of the ongoing discussion about the difficulties of arriving at any quality checklist for qualitative research. In making these points, I do not want to imply that there is any naiveté on this score on the part of the team that devised the model under discussion; indeed they stress its status as a ‘framework’ and call for ‘field testing’ in order to perfect it for usage (Spencer et al 2003:107). The following quintet of pitfalls anticipates some of the grave practical difficulties inherent in qualitative quality assessment.
They should, however, be regarded as a preliminary to a sixth and underlying critique which argues that the very idea of using generic quality frameworks misunderstands the essential nature of research synthesis.

I. Boundless Standards. Broadening the domain of quality standards creates quality registers that are dinosaurian in proportion. Spencer et al’s assessment grid looks rather more like a scholarly overview of methodological requirements than a practicable checklist. A reviewer might well have to put hundreds of primary studies to the microscope. It takes little imagination to see that wading through the totality of evidence in the light of seventy-five general queries (rather than a pointed one on the design employed) can render the exercise unmanageable.

II. Abstract Standards. Broadening the domain of quality standards results in the usage of ‘essentially contested concepts’ (Gallie, 1964) to describe the requisite rules. Amongst the ‘weasel words’ that find their way into the Cabinet Office criteria are the requirements that the research should have ‘clarity’, ‘coherence’ and ‘thoughtfulness’, that it should be ‘structured’ and ‘illuminating’ and so forth. By contrast, it is fairly easy to decipher whether a study has or has not utilised an RCT and thus deliver a pass/fail verdict on its quality. But a concern for rigour, clarity, sensitivity and so forth generates far, far tougher calls.

III. Imperceptible Standards. Broadening the domain of quality standards exacerbates one of the standard predicaments of systematic review, namely the foreshortening of research reportage caused by publishing and reporting conventions. Inevitably, the first victim of ‘word-length’ syndrome, especially in journal formats, is the technical detail on access, design, collection, analysis and so forth. The consequence, of course, is that an appraisal of research standards cast in terms of 75 methodological yardsticks will frequently have no material with which to work.

IV. Composite standards. Broadening the domain of quality standards also raises novel questions about their balance. Should ‘careful exposition of a research hypothesis’ be prized more than ‘discussion of fieldwork setting’? And how do these weigh up alongside ‘clarity and coherence of reporting’? The permutations, of course, increase exponentially when one is faced with quality indicators by the score. By and large the new standards regime has resisted formulating an algorithm to calculate the relative importance of different contributions (Spencer et al 2003: 82). Indeed the tendency is to resist altogether the ‘marking scheme’ approach implicit in the orthodox hierarchies illustrated in Figure 2.

V. Permissive standards. With all of the above problems squarely in mind, most recent standards compendia come with provisos stressing that their application requires ‘judgement’. The following from the Cabinet Office Report is a prime example, ‘We recognise that there will be debate and disagreement about the decisions we have made in shaping the structure, focus and content of the framework. The importance of judgement and discretion in the assessment of quality is strongly emphasised by authors of other frameworks, and it was underlined by participants in our interviews and workshops. We think it is critical that the framework is applied flexibly, and not rigidly or prescriptively: judgement will remain at the heart of assessments of quality.’ (Spencer et al 2003: 91). One would be hard put to find a more sensible statement on the sensitivity needed in quality appraisal. But, for some, such a twist heralds the arrival into research synthesis of a decidedly oxymoronic character, the ‘permissive standard’. Whichever view one holds, it is clear that the application of quality standards is moving further and further away from being an efficient and unproblematic preliminary to systematic review.

VI. Goal-free standards. Broadening the domain of quality standards loses sight of their function within a review. With this little proposition, I arrive at my main critique and so linger longer on this point. As we have seen, there is a self-defeating element in these efforts to build a quality yardstick for qualitative research. The more rigorous the exploration of the conduct of qualitative inquiry, the more unwieldy becomes the appraisal apparatus. The reason is obvious. The standards echo the very nature of qualitative explanation. They capture the way that ethnographic accounts are created. Qualitative explanation has been portrayed in many ways, some of the best-known labels being: ‘thick description’, ‘pattern explanation’, ‘intensive analysis’, ‘explanatory narratives’, and ‘ideographic explanation’. These are different ways of getting at the same thing, namely that qualitative inquiry works by building up many-sided descriptions, the explanatory import of which depends on their overall coherence. Such holistic explanations are, moreover, developed and refined in the field, over time and via the assistance of another set of analytic processes such as ‘reflexivity’, ‘respondent validation’, ‘triangulation’, and ‘analytic induction’. Little wonder then that the evaluative tools needed to check out all this activity have grown exponentially. The end product is a set of desiderata rather than exact rules, a cloak of ambitions rather than a suite of performance indicators, a paradigm gazetteer rather than a decision-making tool, a collection of tribal nostrums rather than critical questions, a methodological charter rather than a quality index. So how can we get qualitative quality appraisal to work? The answer resides in neither technical trick nor quick fix.
There is no point in achieving this fine-grained appreciation of the multi-textured nature of qualitative research only to ditch it via the production of an abridged instrument cropping the 75 carefully identified issues to, say, a rough and ready 7. One needs to go back to square one to appreciate the problem. And the culprit here is that the ambition to create a quality appraisal tool (and one, moreover, that matches the muscularity of the quantitative hierarchies) has run ahead of consideration of its function within a systematic review. Whatever the oversimplifications of the RCT-or-bust approach, it does produce a batch of primary studies up and ready to deliver a consignment of net effects that can be synthesised readily into an overall mean effect. Form fits function. The qualitative quality appraisal tools, by contrast, are functionless; they are generic. Accordingly, the prior question regarding qualitative assessment is – what do we expect the synthesis of qualitative inquiries to deliver? Tougher still, what is the expected outcome of the synthesis of an array of multi-method studies? An initial temptation might be to remain true to the ‘additive’ vision of meta-analysis – but a moment’s thought tells us there is no equivalent of adding and taking the mean in qualitative inquiry. In general, a model of synthesis-as-agglomeration seems doomed to failure. Any single qualitative inquiry, any one-off case study, produces a mass of evidence. As we have seen, one of the marks of good research of this ilk is the ability to fill out the pattern, to capture the totality of stakeholders’ views, to produce thick description. But these are not the qualities that we aspire to in synthesis. We do not want an endless narrative; we do not seek a compendium of viewpoints; we do not crave thicker description!
Thus lurks a paradox – the yardsticks of good qualitative research do not correspond to the hallmarks of good synthesis (and, more especially, the format of practicable policy advice). And with this thought, we arrive at the negative conclusion to the paper. Since the synthetic product is never going to be composed holistically then the full-kit inspection of each component study is not only unwieldy, but also quite unnecessary.

Digging for nuggets in systematic review

In this section I attempt to solve the paradox. Since I have argued that the strategy for quality appraisal is subordinate to the objective of a review, I commence with a brief description of an alternative view of how evidence can be brought together to inform policy. This is no place to introduce a paradigm shift in systematic review, so I refer readers to fuller accounts in Pawson et al (2004) and Pawson (2006). The realist perspective views research synthesis as a process of theory testing and refinement. Policies and programmes are rooted in ideas about why they will solve social problems, why they will change behaviour, why they will work. Realist synthesis thus starts by articulating key assumptions underlying interventions (known as ‘programme theories’) and then uses the existing research as case studies with which to test those theories. The overall expectation about how evidence will shape up is as follows. Some studies may indicate that a particular intervention works and, if they have a strong qualitative component, they may be able to say why the underlying theory works. Since there are no panacea programmes on this earth, other studies will chart instances of intervention failure and may also be able to describe a thing or two about why this is the case. Synthesis, in such instances, is not a case of taking averages or combining descriptions but rests on explanatory conciliation.
That is to say, a refined theory emerges better to explicate the scope of the original programme theory. The aim of working through the primary research is, in short, to provide a more subtle portrait of intervention success and failure. The strategy is to provide a comprehensive explanation of the subjects, circumstances, and respects in which a programme theory works (and in which it fails). And that, in a nutshell, is the basic logic of realist synthesis. Much more could be said about how the preliminary theories are chosen and expressed, how primary studies are located and selected, how evidence is extracted and compared, and so on. But this paper is directed at research quality and it is to some rather uncompromising ramifications on this score that I return. The quality issue is transformed in two ways, both due to the fact that programme theories are the focus of synthesis.

I. The whole study is not the appropriate unit of quality appraisal. Primary studies are unlikely to have been constructed with an exploration of a particular programme theory as their raison d’être. More probably, the extant research will have been conducted across a multiplicity of banners under which evaluation research and policy analysis is organised. However, in so far as they will have a common commitment to understanding an intervention, few of these investigations will have absolutely nothing to say about why programmes work. In the case of qualitative research, there is a reasonable expectation that key programme theories will get an airing – alongside lots of other material on location, stakeholders, meanings, negations, power plays, implementation hitches, etc. This raises a completely revised expectation about research synthesis, namely that evidential fragments rather than entire studies should be the unit of analysis. And in terms of research quality there is a parallel transformation down to the level of the specific proposition.
Because synthesis takes a specific analytic cut through them, it is not a sensible requirement that every one of the many-sided claims in qualitative research must be defensible. What must be secure, however, are those elements that are to be put to use. In short, the implication on research quality goes back to the title of this paper and the idea that an otherwise mediocre study can indeed produce pearls of explanatory wisdom.

II. Research quality can only be determined within the act of synthesis. The notion that research synthesis is the act of developing and refining explanations also has a profound implication for the timing of any quality assessment. Theory development is dynamic. Understanding builds throughout an inquiry. Evidential requirements thus change through time and quality appraisal needs to be sensitive to this expectation. The notion of ‘explanation-sensitive’ standards will ring alarm bells in the homogenised world of meta-analysis (though it does, incidentally, clarify the somewhat oxymoronic idea of ‘permissive standards’ mentioned earlier). However, there is nothing alien to scientific inquiry in such a notion. The iterative relationship between theory and data is a feature of all good inquiry. All inquiry starts with understanding E1 and moves on to more nuanced explanations E2, E3, … EN and in the course of doing so will gobble up and spit out many different kinds of evidence. Applying this model to research synthesis introduces a different primary question for quality appraisal, namely – can this particular study (or fragment thereof) help, and is it of sufficient quality to help in respect of clarifying the particular explanatory challenge that the synthesis has reached? Such a question can only be answered, of course, relative to that point of analysis and, therefore, in the midst of analysis. In short, the worth of a primary study is determined in the synthesis.
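The two transformations can be made concrete with a small Python sketch. It is offered only as an illustration under stated assumptions: the `Fragment` record, the study labels and the `warranted` flag (standing in for the reviewer's judgement on one specific inference) are all hypothetical, and real appraisal is a matter of reasoned judgement, not a boolean lookup.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    study: str       # hypothetical label of the source study
    addresses: str   # the explanation (E1, E2, ...) the fragment speaks to
    warranted: bool  # reviewer's judgement: do the data support this inference?


def usable_fragments(fragments, current_explanation):
    """Appraise within synthesis: relevance to the current stage, then warrant."""
    return [
        f for f in fragments
        if f.addresses == current_explanation and f.warranted
    ]


fragments = [
    Fragment("Study X", "E1", True),   # sound observation inside a weak study
    Fragment("Study X", "E1", False),  # overreaching conclusion, same study
    Fragment("Study Y", "E2", True),   # sound, but only relevant once E2 is reached
]

at_e1 = usable_fragments(fragments, "E1")
at_e2 = usable_fragments(fragments, "E2")
print([f.study for f in at_e1], [f.study for f in at_e2])
```

The unit being filtered is the fragment, so Study X is neither accepted nor rejected wholesale, and the same pool of fragments yields a different usable set as the synthesis moves from E1 to E2.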
Pearls of wisdom – about mentoring

This final section has the task of bringing these abstract methodological musings to life via an illustration. The example is drawn from a review I conducted on ‘mentoring relationships’ (Pawson, 2004). Clearly, I can do little more than give a flavour of a review that provides a hundred-page synthesis of the evidence from 25 key primary studies. What I’ve chosen to reproduce, therefore, is some of the material from the first two cases. These are selected because they confront the reviewer with a severe challenge from the point of view of research quality. Both are qualitative studies. Each carries, quite distinctly and unmistakably, the voice, the preferences and the politics of its author. Arguably, they represent dogma rather than data. Without doubt they would be apportioned to the pile of rejects in a Cochrane or Campbell review. Even more interesting is how they would fare under the Cabinet Office quality appraisal checklist and, in particular, the criterion that there should be ‘a clear conceptual link between analytic commentary and presentation of original data’. As we shall see, these studies make giant and problematic inferential leaps in passing from data to conclusions. In short, this pair of inquiries tests to its limits my thesis about looking for pearls of wisdom rather than acres of orthodoxy. The first stage of realist synthesis is to articulate the theory that will be explored via the secondary analysis of existing studies. Thereafter, there is the hard slog of searching for and assembling the studies appropriate to this task. Eventually, we get down to the synthesis per se. In adhering to the principles developed thus far, it is clear that the reviewer has rather a lot of work to do in melding any study into an explanatory synthesis. For the purposes of this exposition, four tasks are highlighted.
There has, of course, to be some initial orientation for the reader about the purpose of the primary research, its method and its conclusions. One is not, however, reviewing a study in its own terms, so the core requirement for report is a consideration of the implications of the study for the hypothesis under review. Alongside this, according to the argument above, will be an assessment of research quality. To repeat, this is not directed at the entirety of a primary study and all of its conclusions. Rather, what is pursued is a different question about whether the original research warrants the particular inference drawn from it by the reviewer. Finally, the synthesis should take stock of how the review hypothesis has been refined in the encounter with each evidential stepping stone. Let me commence the illustration by putting the review hypothesis in place before we assess the contribution of this brace of studies. The review examines mentoring programmes in which an experienced mentor is partnered with a younger, more junior mentee with the idea of passing on wisdom and individual guidance to help the protégé through life’s hurdles. Such an idea has been used across policy domains and in all walks of life. The review concentrates on so-called ‘engagement mentoring’, that is dealing with ‘disaffected’, ‘high-risk’ youth and helping them move into mainstream education and training. It may help readers to orient themselves to the idea by mentioning the most renowned and longstanding of these programmes, namely Big Brothers and Big Sisters of America. Much more than in any other type of social programme, interpersonal relationships between stakeholders embody the intervention. They are the resource that is intended to bring about change. Accordingly, the theory singled out for review highlighted the intended ‘function’ of mentoring. Youth mentoring programmes carry expectations about a range of such functions, which are summarised in Figure 3.
Figure 3: A basic typology of mentoring mechanisms (‘long-move’ mentoring), listed from the uppermost box to the bottom:
advocacy (positional resources)
coaching (aptitudinal resources)
direction setting (cognitive resources)
affective contacts (emotional resources)

Starting at the bottom, it is apparent that some mentors see their primary role as offering the hand of friendship; they work in the affective domain, trying to make mentees feel differently about themselves. Others provide cognitive resources, offering advice and a guiding hand through the difficult choices confronting the mentee. Still others place hands on the mentees’ shoulders – encouraging and coaxing their protégés into practical gains, skills and qualifications. And in the uppermost box, some mentors grab the mentees’ hands, introducing them to this network, sponsoring them in that opportunity, using the institutional wherewithal at their disposal. In all cases the mentoring relationship takes root and change begins only if the mentee takes willingly the hand that is offered.

Put simply, the basic thesis under review was that successful engagement mentoring involves the ‘long move’ through all of these stages. The disaffected mentee will not suddenly leap into employment, and support within a programme must be provided to engender all of the above stages. This proposition leads to further hypotheses that were tested in the review about limitations on the individual mentor’s capacity to fulfil each and all of these demanding roles. The review, in short, examines the primary evidence with a view to discovering whether this sequence of steps is indeed a requirement of successful programmes. More particularly, it interrogates the evidence in respect of the resources of the mentor and of the intervention, in order to ascertain which practitioners and which delivery arrangements are best placed to provide this extensive apparatus.

Let us now move on to the contribution of the first two primary investigations in exploring this thesis.
The reader will find that each passage of synthesis is made up of the quartet of methodological tasks mentioned above (basic orientation, hypothesis testing, quality appraisal, hypothesis refinement). The aim is to advance the rudimentary conjectures about the ‘long move’ and its contingencies. Given that the focus of the present paper is on research quality, I also emphasise the decisions made by way of ‘appraising’ the studies.

Study 1. de Anda D (2001) A qualitative evaluation of a mentor program for at-risk youth. Child and Adolescent Social Work Journal 18: 97-117.

This is an evaluation of project RESCUE (Reaching Each Students Capacity Utilizing Education). Eighteen mentor-mentee dyads are investigated from a small, incorporated city in Los Angeles, with high rates of youth and violent crime. The aims of the programme are described in classic ‘long move’ terms: ‘The purpose of this relationship is to provide a supportive adult role model, who will encourage the youth’s social and emotional development, help improve his/her academic and career motivation, expand the youth’s life experiences, redirect the youth from at-risk behaviours, and foster improved self-esteem’. A curious, and far from incidental, point is that the volunteer mentors on the RESCUE programme were all fire fighters.

The research takes the form of a ‘qualitative evaluation’ (meaning – an analysis consisting of ‘group interview’ data and of biographical ‘case histories’). There is a major claim in the abstract that the mentees are shown to secure ‘concrete benefits’, but these are mentioned only as part of the case study narratives, there being no attempt to chart inputs, outputs and outcomes. The findings are, in the author’s words, ‘overwhelmingly positive’. The only hint of negativity comes in reportage of the replies to a questionnaire item about whether the mentees would like to ‘change anything about the programme’.
The author reports, ‘All but three mentees answered the question with a “No” response’. Moreover, de Anda indicates that two of these malcontents merely wanted more ‘outings’ and the third, more ‘communication’.

As for critical appraisal, the research could be discounted as soppy, feel-good stuff, especially as all of the key case study claims are in the researcher’s voice (e.g. ‘the once sullen, hostile, defensive young woman now enters the agency office with hugs for staff members, a happy disposition and open communication with adult staff members and the youth she serves in her agency position’). The case studies do, however, provide a very clear account of an unfolding sequence of mentoring mechanisms:

“Joe had been raised in a very chaotic household with his mother as the primary parent, his father’s presence erratic … He was clearly heading towards greater gang involvement … He had, in fact, begun drinking (with a breakfast consisting of a beer), demonstrated little interest in school and was often truant … The Mentor Program and the Captain who became his mentor were ideal for Joe, who had earlier expressed a desire to become a firefighter. The mentor not only served as a professional role model, but provided the nurturing father figure missing from his life. Besides spending time together socially, his mentor helped him train, prepare and discipline himself for the Fire Examiners test. Joe was one of the few who passed the test (which is the same as the physical test given to firefighters). A change in attitude, perception of his life, and attitudes and life goals was evident … [further long, long story omitted] … He also enrolled at the local junior college in classes (e.g. for paramedics) to prepare for the firefighters’ examination and entry into the firefighters academy.
He was subsequently admitted to the fire department as a trainee.”

What we have here is a pretty full account of a successful ‘long move’ and the application of all of the attendant mechanisms – progressing from affective contacts (emotional resources) to direction setting (cognitive resources) to coaching (aptitudinal resources) to advocacy (positional resources). The vital evidence fragment for the review is that this particular mentor (‘many years of experience training the new, young auxiliary firefighters as well as the younger Fire explorers’) was quite uniquely positioned. As Joe climbs life’s ladder, away from his morning beer, the Captain is able to provide all the resources needed to meet all his attitudinal, aptitudinal, and training needs. How frequently such a state of affairs applies in youth mentoring is a moot point and acknowledged only in the final paragraph of the paper.

In its defence, one can point to two more plausible claims of the paper. There is a constant refrain about precise circumstantial triggers and points of interpersonal congruity that provide the seeds of change. ‘It was at this point [end of lovingly described string of bust-ups] that Gina entered the Mentor programme and was paired with a female firefighter. The match was a perfect one in that the firefighter was seen as “tough” and was quickly able to gain Gina’s confidence.’ There is also an emphasis, via the life history format, on the holistic and cumulative nature of the successful encounter. ‘The responses and case descriptions do provide a constellation of concrete and psychosocial factors which the participants felt contributed to their development and success.’ These are the evidence fragments that are taken forward in the review and which, indeed, find further support in subsequent studies.

In short, this example encapsulates the dilemma of incorporating highly descriptive qualitative studies in a review.
We are told about ‘overwhelmingly positive results’, all the ‘evidence’ is wrapped up in the author’s narrative, and there is no attempt at strategies such as respondent validation. Unlike qualitative research of a higher methodological calibre, the study contains no material allowing the reader the opportunity to ‘cross-examine’ its conclusions. Read at face value it tells us that engagement mentoring works. Read critically it screams of bias. Read synthetically, there is nothing in the account to suggest a general panacea and much to suggest a special case. The key point, however, is that some vital explanatory ingredients are unearthed (that the long move is possible given a well-positioned mentor, established community loyalties, specific interpersonal connections and interests, and a multi-objective programme) and not lost to the review.

Study 2. Colley H (2003) Engagement mentoring for socially excluded youth: problematising an ‘holistic’ approach to creating employability through the transformation of habitus. British Journal of Guidance and Counselling 31 (1) pp 77-98.

Here we transfer from American optimism to British pessimism via the use of the same research strategy. The evidence here is drawn from a study of a UK government scheme (‘New Beginnings’) which, in addition to basic skills training and work placement schemes, offered a modest shot of mentoring (one hour per week). This scheme is one of several in the UK mounted out of a realisation that disaffected youth have multiple, deep-seated problems and, accordingly, ‘joined up’ service provision is required to have any hope of dealing with them. Colley’s study takes the form of a series of qualitative ‘stories’ (her term) about flashpoints within the scheme. She selects cases in which the mentor ‘demonstrated an holistic person-centred commitment to put the concerns of the mentor before those of the scheme’ and reports that, ‘sooner or later these relationships break down’.
The following quotations provide typical extracts from ‘Adrian’s story’:

“Adrian spoke about his experience of mentoring with evangelical fervour: ‘To be honest, I think anyone who’s in my position with meeting people, being around people even, I think a mentor is one of the greatest things you can have … [passage omitted]. If I wouldn’t have had Pat, I think I’d still have problems at home … You know, she’s put my life in a whole different perspective.’”

Adrian was sacked from the scheme after 13 weeks. He was placed in an office as a filing clerk and dismissed because of lateness and absence. Colley reports that, despite his profuse excuses, the staff felt he was ‘swinging the lead’. Pat (the mentor) figured otherwise:

“Pat, a former personnel manager and now student teacher, was concerned that Adrian had unidentified learning difficulties that were causing him to miss work through fear of getting things wrong. She tried to advocate on his behalf with New Beginnings staff, to no avail.”

At this point Adrian was removed from the scheme. Another ‘story’ is shown to betray an equivalent pattern, with the mentor supporting the teenage mentee’s aspiration to become a mother and to eschew any interest in work (and thus the programme).

From the point of view of the review theory, there is an elementary ‘fit’ with the idea of the difficulties entrenched in the ‘long move’. Mentors are able to provide emotional support and a raising of aspirations but cannot, and to some extent will not, provide advocacy and coaching. On this particular scheme, the latter are not in the mentor’s gift but the responsibility of other New Beginnings staff (their faltering, bureaucratic efforts also being briefly described).

And what of quality appraisal? Colley displays the ethnographer’s art in being able to bring to life the emotions described above.
She also performs ethnographic science in the way that these sentiments are supported by apt, detailed and verbatim quotations from the key players. Compared to case study one, the empirical material might be judged as more authentically the respondent’s tale rather than the reviewer’s account. But then we come to the author’s interpretations and conclusions. On the basis of these two case illustrations, the inevitability of mentoring not being able to reach further goals on employability is assumed. This proposition is supported in a substantial passage of ‘theorising’ about the ‘dialectical interplay between structure and agency’, via Bourdieu’s concept of ‘habitus’, which Colley explained as follows:

“… a structuring structure, which organises practices and the perceptions of practices, but also a structured structure: the principle of division into logical classes which organizes the perception of the social world is itself the product of internalisation of the division into social classes”.

Put in more downright terms, this means that because of the way capitalist society is organised the best this kind of kid will get is a lousy job and whatever they do will be taken as a sign that they barely deserve that. In Colley’s words, ‘As the case studies illustrate, the task of altering habitus is simply unfeasible in many cases, and certainly not to a set timetable’.

It is arguable that this interpretative overlay derives more from the author’s self-acknowledged Marxist/feminist standpoint than from the empirical case studies presented. There is also a further very awkward methodological aspect for the reviewer in a ‘relativistic’ moment one often sees in qualitative work, when in the introduction to her case studies Colley acknowledges that her reading of them is ‘among many interpretations they offer’. There are huge ambiguities here, normally shoved under the carpet in a systematic review.
Explanation by ‘theorising’ and an underlying ‘constructivism’ in data presentation are not the stuff of study selection and quality appraisal.

Realist synthesis plays by another set of rules, which are about drawing warrantable inferences from the data presented. Thus, sticking just to Colley’s case studies in this paper, they have value to an explanatory review because they exemplify in close relief some of the difficulties of ‘long move’ mentoring. In the stories presented, the mentor is able to make headway in terms of befriending and influencing vision but these gains are thwarted by programme requirements on training and employment, over which the mentor had little control. What they show is the sheer difficulty that an individual mentor faces in trying to compensate for lives scarred by poverty and lack of opportunity. Whether the two instances demonstrate the ‘futility’ or ‘unfeasibility’ of trying to do so and the unfaltering grip of capitalist habitus and social control is a somewhat bolder inference. The jury (and the review at this point) is still out on that question.

Conclusion

These two inquiries, of course, represent only the initial skirmishes of the review. Both of them are flawed but both of them present useable evidence about the circumstances in which mentoring can and cannot flourish. Exploration of further inquiries lends detail to the developing theory, giving a picture both of the likelihood of youth mentoring programmes being able to make the ‘long move’ and a model of the additional processes and resources needed to help in its facilitation. What is discovered, of course, is a further mix of relatively successful and unsuccessful programmes. But a pattern does begin to emerge about the necessary ingredients of mentoring relationships.
Goals can begin to be achieved if the mentor has a similar biography, if she shares the everyday interests of the mentee, if he has the resources to build and rebuild the relationship, and if she is able to forge links with the mentee’s family, peers, community, school and college (for the full model consult Pawson, 2004).

There is one final methodological point to report of the continued journey. The additional studies included in the synthesis employed a variety of research strategies (RCT, survey, mixed-method, path analysis and, indeed, an existing meta-analysis). These tax the poor reviewer in having to make a quality appraisal across each and every one of these domains. My approach remained the same. It is not necessary to draw upon a full, formal and preformulated apparatus to make the judgement on research quality. The only feasible approach is to make the appraisal in relation to the precise usage of each fragment of evidence within the review. The worth of a study is determined in the synthesis.

References

Alderson P, Green S, Higgins J (2003) Cochrane Reviewers’ Handbook. The Cochrane Library, John Wiley: Chichester.

Campbell D (1984) ‘Can we be scientific in applied social science?’ in Conner R, Altman D and Jackson C (eds.) Evaluation Studies: Review Annual (vol. 9). Beverly Hills: Sage.

Colley H (2003) Engagement mentoring for socially excluded youth: problematising an ‘holistic’ approach to creating employability through the transformation of habitus. British Journal of Guidance and Counselling 31 (1) pp 77-98.

Centre for Reviews and Dissemination (2001) Undertaking Systematic Reviews of Research on Effectiveness. CRD Report Number 4: University of York.

Davies H, Nutley S and Smith P (2000) What Works? Evidence based policy and practice in public services. Bristol: Policy Press.

de Anda D (2001) A qualitative evaluation of a mentor program for at-risk youth. Child and Adolescent Social Work Journal 18: 97-117.
Farrington D and Welsh B (2001) What works in preventing crime? Systematic reviews of experimental and quasi-experimental research. Annals of the American Academy of Political and Social Science 578 pp 8-13.

Gallie W (1964) Essentially contested concepts in philosophy and the historical understanding. London: Chatto and Windus.

MacLure M (2005) ‘Clarity bordering on stupidity’: where’s the quality in systematic review? Journal of Education Policy 20 (4) (no page numbers – in press).

Oakley A (2003) Research evidence, knowledge management and educational practice. London Review of Education 1: 21-33.

Pawson R (2004) ‘Mentoring Relationships: an Explanatory Review’, Working Paper 21, ESRC UK Centre for Evidence based Policy and Practice. Available at: www.evidencenetwork.org/Documents/wp21.pdf

Pawson R (2006 forthcoming) Evidence-based Policy: A Realist Perspective. London: Sage.

Pawson R, Greenhalgh T, Harvey G, Walshe K (2004) ‘Realist Synthesis: an Introduction’, ESRC Research Methods Programme Papers (no 2). Available at: www.ccsr.ac.uk/methods/publications/RMPmethods2.pdf

Spencer L, Ritchie J, Lewis J and Dillon L (2003) Assessing Quality in Qualitative Evaluation. Strategy Unit, Cabinet Office.