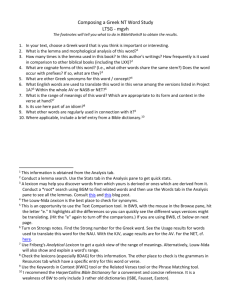

Version identification: a literature review
