Bioinformatics Course Handouts for Biotechnology Students

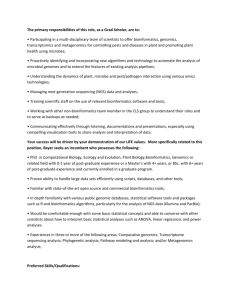

advertisement

Bioinformatics Course Handouts: For the class Third Year B.Sc. (Biotechnology), North Maharashtra University, Jalgaon.
Dhananjay Bhole (M.Sc. Bioinformatics, M.B.A. HR)
Coordinator, Centre for Disability Studies, Department of Education and Extension, University of Pune.
Email: dhananjay.bhole@gmail.com
Cell: 9850123212
Preface:
Understanding the needs of the students, these notes are drawn up according to the syllabus of T.Y. B.Sc. Biotechnology, North Maharashtra University, Jalgaon. Several websites, standard databases and books were referred to while preparing the notes. At several places, pages from websites are copied directly. These introductory notes consist of six chapters. The first chapter gives an overview of bioinformatics. The second chapter provides basic information about computers. The third chapter covers basic database concepts. The fourth chapter describes the types of biological databases in more detail. The fifth chapter gives brief information about comparative genomics and proteomics. The last chapter provides information about the Human Genome Project. A bioinformatics glossary is given at the end of the notes. Teachers can distribute the notes freely among the students in the colleges where my lectures are conducted.
Dhananjay Bhole
Contents
1. Bioinformatics: definition, history, scope and importance
2. Computer fundamentals and their application in biology
3. Basic database concepts
4. Biological databases
5. Genomics and proteomics
6. Human Genome Project
1. Bioinformatics: definition, history, scope and importance
1.0 Introduction
Quantitative tools are indispensable in modern biology. Most biological research involves the application of some type of mathematical, statistical, or computational tool to help synthesize recorded data and integrate various types of information in the process of answering a particular biological question.
For example, enumeration and statistics are required for assessing everyday laboratory experiments, such as making serial dilutions of a solution or counting bacterial colonies, phage plaques, or trees and animals in the natural environment. A classic example in the history of genetics is the work of Gregor Mendel and Thomas Morgan, who, by simply counting genetic variations of plants and fruit flies, were able to discover the principles of genetic inheritance. More dedicated use of quantitative tools may involve using calculus to predict the growth rate of a human population or to establish a kinetic model for enzyme catalysis. For very sophisticated uses of quantitative tools, one may find the application of “game theory” to model animal behavior and evolution, or the use of millions of nonlinear partial differential equations to model cardiac blood flow. Whether the application is simple or complex, subtle or explicit, it is clear that mathematical and computational tools have become an integral part of modern-day biological research. However, none of these examples of quantitative tool use in biology could be considered part of bioinformatics, even though bioinformatics is also quantitative in nature. To help the reader understand the difference between bioinformatics and other elements of quantitative biology, a detailed explanation of bioinformatics is provided in the following sections.
1.1 Definition
What is bioinformatics?
Bioinformatics is an interdisciplinary research area at the interface between computer science and biological science. A variety of definitions exist in the literature and on the World Wide Web; some are more inclusive than others. Luscombe et al. define bioinformatics as a union of biology and informatics: bioinformatics involves the technology that uses computers for storage, retrieval, manipulation, and distribution of information related to biological macromolecules such as DNA, RNA, and proteins.
The emphasis here is on the use of computers because most of the tasks in genomic data analysis are highly repetitive or mathematically complex. The use of computers is absolutely indispensable in mining genomes for information gathering and knowledge building. Bioinformatics differs from a related field known as computational biology. Bioinformatics is limited to sequence, structural, and functional analysis of genes and genomes and their corresponding products, and is often considered computational molecular biology. Computational biology, however, encompasses all biological areas that involve computation. For example, mathematical modeling of ecosystems, population dynamics, application of game theory in behavioral studies, and phylogenetic construction using fossil records all employ computational tools, but do not necessarily involve biological macromolecules. Besides this distinction, it is worth noting that there are other views of how the two terms relate. For example, one version defines bioinformatics as the development and application of computational tools in managing all kinds of biological data, whereas computational biology is more confined to the theoretical development of algorithms used for bioinformatics. The present confusion over definitions may partly reflect the nature of this vibrant and quickly evolving new field.
Explanation: Bioinformatics and computational biology are rooted in the life sciences as well as in computer and information sciences and technologies. Both of these interdisciplinary approaches draw from specific disciplines such as mathematics, physics, computer science and engineering, biology, and behavioral science. Bioinformatics and computational biology each maintain close interactions with the life sciences to realize their full potential. Bioinformatics applies principles of information sciences and technologies to make the vast, diverse, and complex life sciences data more understandable and useful.
Computational biology uses mathematical and computational approaches to address theoretical and experimental questions in biology. Although bioinformatics and computational biology are distinct, there is also significant overlap and activity at their interface.
Definition: The NIH Biomedical Information Science and Technology Initiative Consortium agreed on the following definitions of bioinformatics and computational biology, recognizing that no definition could completely eliminate overlap with other activities or preclude variations in interpretation by different individuals and organizations.
Bioinformatics: Research, development, or application of computational tools and approaches for expanding the use of biological, medical, behavioral or health data, including those to acquire, store, organize, archive, analyze, or visualize such data.
Computational Biology: The development and application of data-analytical and theoretical methods, mathematical modeling and computational simulation techniques to the study of biological, behavioral, and social systems.
1.2 History
Bioinformatics, as defined above, is the discipline of quantitative analysis of information relating to biological macromolecules with the aid of computers. The development of bioinformatics as a field is the result of advances in both molecular biology and computer science over the past 30–40 years. Although these developments are not described in detail here, understanding the history of this discipline is helpful in obtaining a broader insight into current bioinformatics research. A succinct chronological summary of the landmark events that have had major impacts on the development of bioinformatics is presented here to provide context. The earliest bioinformatics efforts can be traced back to the 1960s, although the word bioinformatics did not exist then.
Probably the first major bioinformatics project was undertaken by Margaret Dayhoff in 1965, who developed the first protein sequence database, called the Atlas of Protein Sequence and Structure. Subsequently, in the early 1970s, the Brookhaven National Laboratory established the Protein Data Bank for archiving three-dimensional protein structures. At its onset, the database stored fewer than a dozen protein structures, compared to more than 30,000 structures at the time these notes were written. The first sequence alignment algorithm was developed by Needleman and Wunsch in 1970. This was a fundamental step in the development of the field of bioinformatics, which paved the way for the routine sequence comparisons and database searching practiced by modern biologists. The first protein structure prediction algorithm was developed by Chou and Fasman in 1974. Though it is rather rudimentary by today’s standards, it pioneered a series of developments in protein structure prediction. The 1980s saw the establishment of GenBank and the development of fast database searching algorithms such as FASTA by William Pearson, followed in 1990 by BLAST from Stephen Altschul and coworkers. The start of the Human Genome Project in the late 1980s provided a major boost for the development of bioinformatics. The development and increasingly widespread use of the Internet in the 1990s made instant access to, and exchange and dissemination of, biological data possible. These are only the major milestones in the establishment of this new field. The fundamental reason that bioinformatics gained prominence as a discipline was the advancement of genome studies that produced unprecedented amounts of biological data. The explosion of genomic sequence information generated a sudden demand for efficient computational tools to manage and analyze the data.
The development of these computational tools depended on knowledge generated from a wide range of disciplines including mathematics, statistics, computer science, information technology, and molecular biology. The merger of these disciplines created an information-oriented field in biology, which is now known as bioinformatics.
1.3 Scope
Bioinformatics consists of two subfields: the development of computational tools and databases, and the application of these tools and databases in generating biological knowledge to better understand living systems. These two subfields are complementary to each other. Tool development includes writing software for sequence, structural, and functional analysis, as well as the construction and curation of biological databases. These tools are used in three areas of genomic and molecular biological research: molecular sequence analysis, molecular structural analysis, and molecular functional analysis. The analyses of biological data often generate new problems and challenges that in turn spur the development of new and better computational tools. The areas of sequence analysis include sequence alignment, sequence database searching, motif and pattern discovery, gene and promoter finding, reconstruction of evolutionary relationships, and genome assembly and comparison. Structural analyses include protein and nucleic acid structure analysis, comparison, classification, and prediction. The functional analyses include gene expression profiling, protein–protein interaction prediction, protein subcellular localization prediction, metabolic pathway reconstruction, and simulation (Fig. 1.1).
Figure 1.1: Overview of various subfields of bioinformatics. Biocomputing tool development is at the foundation of all bioinformatics analysis. The applications of the tools fall into three areas: sequence analysis, structure analysis, and function analysis.
There are intrinsic connections between the different areas of analysis, represented by bars between the boxes.
The three aspects of bioinformatics analysis are not isolated but often interact to produce integrated results (see Fig. 1.1). For example, protein structure prediction depends on sequence alignment data; clustering of gene expression profiles requires the use of phylogenetic tree construction methods derived from sequence analysis. Sequence-based promoter prediction is related to functional analysis of coexpressed genes. Gene annotation involves a number of activities, which include distinguishing between coding and noncoding sequences, identifying translated protein sequences, and determining the gene’s evolutionary relationship with other known genes; prediction of its cellular functions employs tools from all three groups of analyses.
1.4 Goals
The ultimate goal of bioinformatics is to better understand a living cell and how it functions at the molecular level. By analyzing raw molecular sequence and structural data, bioinformatics research can generate new insights and provide a “global” perspective of the cell. The functions of a cell can be better understood by analyzing sequence data because the flow of genetic information follows the “central dogma” of biology, in which DNA is transcribed to RNA, which is translated to proteins. Cellular functions are mainly performed by proteins, whose capabilities are ultimately determined by their sequences. Therefore, solving functional problems using sequence and sometimes structural approaches has proved to be a fruitful endeavor.
1.5 Applications
Bioinformatics has not only become essential for basic genomic and molecular biology research, but is also having a major impact on many areas of biotechnology and biomedical sciences. It has applications, for example, in knowledge-based drug design, forensic DNA analysis, and agricultural biotechnology.
Computational studies of protein–ligand interactions provide a rational basis for the rapid identification of novel leads for synthetic drugs. Knowledge of the three-dimensional structures of proteins allows molecules to be designed that are capable of binding to the receptor site of a target protein with great affinity and specificity. Compared with the traditional trial-and-error approach, this informatics-based approach can significantly reduce the time and cost necessary to develop drugs with higher potency, fewer side effects, and less toxicity. In forensics, results from molecular phylogenetic analysis have been accepted as evidence in criminal courts. Sophisticated Bayesian and likelihood-based statistical methods for DNA analysis have been applied to establish forensic identity. It is worth mentioning that genomics and bioinformatics are now poised to revolutionize our healthcare system by developing personalized and customized medicine. High-speed genomic sequencing coupled with sophisticated informatics technology will allow a doctor in a clinic to quickly sequence a patient’s genome, easily detect potentially harmful mutations, and engage in early diagnosis and effective treatment of diseases. Bioinformatics tools are being used in agriculture as well. Plant genome databases and gene expression profile analyses have played an important role in the development of new crop varieties that have higher productivity and more resistance to disease.
1.6 Limitations
Having recognized the power of bioinformatics, it is also important to realize its limitations and avoid over-reliance on and over-expectation of bioinformatics output. In fact, bioinformatics has a number of inherent limitations. In many ways, the role of bioinformatics in genomics and molecular biology research can be likened to the role of intelligence gathering on a battlefield. Intelligence is clearly very important in leading to victory on a battlefield.
Fighting a battle without intelligence is inefficient and dangerous. Having superior information and correct intelligence helps to identify the enemy’s weaknesses and reveal the enemy’s strategy and intentions. The gathered information can then be used in directing the forces to engage the enemy and win the battle. However, completely relying on intelligence can also be dangerous if the intelligence is of limited accuracy. Overreliance on poor-quality intelligence can yield costly mistakes, if not complete failures. It is no stretch of the analogy to say that fighting diseases or other biological problems using bioinformatics is like fighting battles with intelligence.
Bioinformatics and experimental biology are independent, but complementary, activities. Bioinformatics depends on experimental science to produce raw data for analysis. It, in turn, provides useful interpretation of experimental data and important leads for further experimental research. Bioinformatics predictions are not formal proofs of any concepts. They do not replace the traditional experimental research methods of actually testing hypotheses. In addition, the quality of bioinformatics predictions depends on the quality of the data and the sophistication of the algorithms being used. Sequence data from high-throughput analysis often contain errors. If the sequences are wrong or the annotations incorrect, the results of the downstream analysis will be misleading as well. That is why it is so important to maintain a realistic perspective on the role of bioinformatics.
Bioinformatics is by no means a mature field. Most algorithms lack the capability and sophistication to truly reflect reality. They often make incorrect predictions that make no sense when placed in a biological context. Errors in sequence alignment, for example, can affect the outcome of structural or phylogenetic analysis. The outcome of computation also depends on the computing power available.
Many accurate but exhaustive algorithms cannot be used because of the slow rate of computation. Instead, less accurate but faster algorithms have to be used. This is a necessary trade-off between accuracy and computational feasibility. Therefore, it is important to keep in mind the potential for errors produced by bioinformatics programs. Caution should always be exercised when interpreting prediction results. It is good practice to use multiple programs, if they are available, and to perform multiple evaluations. A more accurate prediction can often be obtained by drawing a consensus from the results of different algorithms.
1.7 Future of bioinformatics
Despite the pitfalls, there is no doubt that bioinformatics is a field that holds great potential for revolutionizing biological research in the coming decades. Currently, the field is undergoing major expansion. In addition to providing more reliable and more rigorous computational tools for sequence, structural, and functional analysis, the major challenge for future bioinformatics development is to develop tools for elucidating the functions and interactions of all gene products in a cell. This presents a tremendous challenge because it requires the integration of disparate fields of biological knowledge and a variety of complex mathematical and statistical tools. To gain a deeper understanding of cellular functions, mathematical models are needed to simulate a wide variety of intracellular reactions and interactions at the whole-cell level. This molecular simulation of all the cellular processes is termed systems biology. Achieving this goal will represent a major leap toward fully understanding a living system. That is why system-level simulation and integration are considered the future of bioinformatics. Modeling such complex networks and making predictions about their behavior present tremendous challenges and opportunities for bioinformaticians.
The ultimate goal of this endeavor is to transform biology from a qualitative science to a quantitative and predictive science. This is truly an exciting time for bioinformatics.
2. Computer fundamentals and their application in biology
What is a computer?
A computer is an automatic electronic device used to perform arithmetic and logical operations.
Historical evolution of the computer:
The development of the computer has passed through a number of stages before it reached its present state. In fact, the development of the first calculating device, the ABACUS, dates back to 3000 B.C. From the ABACUS to the microcomputer, computing systems have undergone tremendous changes. The historical stages are given below.
1) ABACUS
2) Analog machines and Napier's Bones
3) Odometer
4) Blaise Pascal and his mechanical calculator
5) Charles Babbage's Difference Engine
6) Herman Hollerith: the punched card
7) Electrical machines
8) Howard Aiken and the IBM Mark I
Types of computer
Technically, computers are of three types:
1) Digital computer
2) Analog computer
3) Hybrid computer
1) Digital computer: In this type of computer, mathematical expressions are ultimately represented as the binary digits 0 and 1, and all operations are carried out on these digits at a very high rate.
2) Analog computer: In this type, similarities are established in the form of current or voltage signals. An analog computer operates by measuring rather than counting: the variable is an electrical signal produced analogous to the variable of the physical system.
3) Hybrid computer: This type of computer is a combination computer that uses the good qualities of both analog and digital computers.
Characteristics of computer
1. SPEED - Computers make calculations at a very fast rate. But how fast? Let us multiply 369 by 514. It takes an expert about 50 to 60 seconds to do this job by hand, but a modern computer can do at least 30,00,000 (three million) such calculations in a minute, and that too without mistakes.
2. ACCURACY - Computers do not make calculation mistakes on their own. If we insert faulty data, we will get faulty results; the error then lies in the data, not in the computer.
3. STORAGE - A computer can store a large amount of data or information, which can be retrieved in microseconds.
4. VERSATILITY - It helps in performing jobs automatically and can also perform audio, video and graphics functions.
5. DILIGENCE - A computer can go on working endlessly and, unlike a human being, does not get tired. It can process a large amount of information at a time.
6. INTEGRITY - It is the ability to take in and store a sequence of instructions for obeying. Such a sequence of instructions is called a PROGRAM. It is also the ability to obey the sequence of the program automatically.
Block diagram of computer
INPUT UNIT: We can give information to the computer through the input unit, e.g. mouse, keyboard.
Keyboard - With the help of the keyboard we provide information or commands to the computer. It is like a typewriter, but with additional keys.
CPU (Central Processing Unit): It is called the heart of the computer. The basic function performed by a computer is program execution. The CPU controls the operation of the computer and performs its data processing functions. The three major components of the CPU are:
A) ALU (Arithmetic Logic Unit) - All calculations and comparisons are made in this unit of the computer. The actual processing of calculations is done in the ALU.
B) CU (Control Unit) - It acts like a supervisor. It controls the transfer of data and information between the various units. It also initiates the appropriate functions of the arithmetic unit.
C) MEMORY - A computer can store a large amount of data. The storage capacity of the computer is called memory. There are two main types of memory.
1. Primary memory: This is of two types.
a) RAM - RAM stands for random access memory. It is volatile or temporary memory, i.e. as soon as the power is off, any information stored in RAM is lost. In this memory we can read the contents as well as write new information.
b) ROM - ROM stands for read only memory. It is permanent or non-volatile memory. In this memory we cannot change or write information; we can only read the information.
2. Secondary memory: Secondary memory is also called external memory. The secondary storage devices are:
a) Floppy disk - It comes in various sizes. Information is written on or read from the floppy along concentric circles called tracks. A floppy disk is comparatively cheap and can be carried from one place to another.
OUTPUT DEVICES: They give us the final result in the desired form.
VDU (Visual Display Unit): It is a common output device. It displays the output from the computer. It is a temporary display: when the computer is off, the display is lost.
Printer - Printers are divided into two main types:
1) Impact printer: These printers produce an impact on the piece of paper on which information is to be printed, i.e. the head of the printer physically touches the paper.
2) Non-impact printer: These are printers in which no impact printing mechanism is used and there is no physical contact between the head and the paper.
Printers are also classified by their printing mechanism, as below:
a) Character printer: prints one character at a time
b) Line printer: prints one line at a time
c) Page printer: prints one page at a time
PROGRAMMING LANGUAGES
A language is a system of communication. A programming language consists of all the symbols and characters that permit people to communicate with the computer.
A) Machine language - A language which uses numeric codes to represent operations and numeric addresses of operands; it is the only language directly understood by the computer. A sequence of such instructions is called a machine language program.
B) Assembly language - In assembly language, mnemonics are used to represent operation codes, and strings of characters are used to represent addresses.
Because an assembly language is designed mainly to replace each machine code with an understandable mnemonic and each address with a simple alphanumeric string, an assembly language program must first be translated into its equivalent machine language program.
C) High-level languages - The development of techniques and micro-instructions led to the development of high-level languages. A number of languages have been developed to process scientific and mathematical problems. A high-level language is an English-like language. Some commonly used high-level languages are PASCAL, COBOL, FORTRAN, etc.
Software
A set of instructions given to the computer to operate and control its activities is called software. Software is the part of the computer which enables the hardware to be used. As a car cannot run without fuel, a computer cannot work without software. Software can be classified as follows:
1) System software
2) Application software
1) System software: Most system software consists of programs which contribute to the control and performance of the computer system. System software consists of:
a) Operating system
b) Utility software
c) Translator
a) Operating system - An integrated set of programs that manages the resources of the computer and schedules its operations is called an operating system. The operating system acts as an interface between the hardware and the programs, e.g. DOS (single user), UNIX (multiuser).
Uses of the operating system:
1. Control and coordination of peripheral devices such as printers, display screens and disk drives.
2. To monitor the use of machine resources.
3. To help the user develop programs.
4. To deal with any faults that may occur in the computer and inform the operator.
b) Utility software
There are many tasks common to a variety of applications. Examples of such tasks are:
2.2 Types of computers according to size
1. Microcomputer
2. Minicomputer
3. Mainframe computer
Microcomputer: a common small computer used for personal purposes, e.g. personal desktop or laptop computers.
Minicomputer: a larger computer or workstation used for commercial purposes, e.g. servers in a small computer lab. It is more powerful than a microcomputer and can serve several users at once. Many minicomputer operating systems and architectures arose in the 1970s and 1980s, but minicomputers are generally not considered mainframes.
Mainframe computer: Mainframes (often colloquially referred to as Big Iron) are computers used mainly by large organizations for critical applications, typically bulk data processing such as census, industry and consumer statistics, ERP (enterprise resource planning), and financial transaction processing. Most large-scale computer system architectures were firmly established in the 1960s.
2.3 Application of computers in biology
1. To store vast, diverse, and complex life sciences data.
2. To provide fast and easy access to biological data.
3. To make biological information more understandable and useful by using various visualization tools.
4. To analyze biological data for addressing theoretical and experimental questions in biology by using mathematical and computational approaches.
3. Basic database concepts
3.1 What is data?
Technically, data are raw facts and figures, such as orders and payments, which are processed into information, such as balance due and quantity on hand. In common usage, however, the terms data and information are used synonymously. The term data is the plural of "datum," which is one item of data. But datum is rarely used, and data is used as both singular and plural in practice. Data is any form of information, whether on paper or in electronic form. Data may refer to any electronic file, no matter what the format: database data, text, images, audio and video. Everything read and written by the computer can be considered data, except for the instructions in a program that are executed (software). A common misconception is that software is also data.
Software is executed, or run, by the computer. Data are "processed." Thus, software causes the computer to process data. The amount of data versus information kept in the computer is a tradeoff. Data can be processed into different forms of information, but it takes time to sort and sum transactions. Up-to-date information can provide instant answers.
3.2 Basic database concepts
What is a database?
A database is a computerized archive used to store and organize data in such a way that information can be retrieved easily via a variety of search criteria. Databases are composed of computer hardware and software for data management. The chief objective of developing a database is to organize data in a set of structured records to enable easy retrieval of information. Each record, also called an entry, should contain a number of fields that hold the actual data items, for example, fields for names, phone numbers, addresses, dates. To retrieve a particular record from the database, a user can specify a particular piece of information, called a value, to be found in a particular field and expect the computer to retrieve the whole data record. This process is called making a query.
Database management systems (DBMS) are collections of tools used to manage databases. Four basic functions performed by all DBMS are:
• Create, modify, and delete data structures, e.g. tables
• Add, modify, and delete data
• Retrieve data selectively
• Generate reports based on data
A short list of database applications would include: inventory, payroll, membership, orders, shipping, reservations, invoicing, accounting, security, catalogues, mailing, medical records, etc.
3.3 Database components
Databases are composed of related tables, while tables are composed of fields and records.
Field: A field is an area (within a record) reserved for a specific piece of data. Examples: customer number, customer name, street address, city, state, phone, current balance.
Fields are defined by:
• Field name
• Data type
  • Character: text, including such things as telephone numbers and zip codes
  • Numeric: numbers which can be manipulated using math operators
  • Date: calendar dates which can be manipulated mathematically
  • Logical: True or False, Yes or No
• Field size: amount of space reserved for storing data
Record: A record is the collection of values for all the fields pertaining to one entity, i.e. a person, product, company, transaction, etc.
Table: A table is a collection of related records, for example, employee, product, customer, and orders tables. In a table, records are represented by rows and fields are represented by columns.
Relationships: There are three types of relationships which can exist between tables:
• One-to-One
• One-to-Many
• Many-to-Many
The most common relationships in relational databases are One-to-Many and Many-to-Many. An example of a One-to-Many relationship would be a Customer table and an Orders table: each order has only one customer, but a customer can make many orders. One-to-Many relationships consist of two tables, the "one" table and the "many" table. An example of a Many-to-Many relationship would be an Orders table and a Products table: an order can contain many products, and a product can be on many orders. A Many-to-Many relationship consists of three tables: two "one" tables, both in a One-to-Many relationship with a third table. The third table is sometimes referred to as the link (or junction) table.
Key fields: In order for two tables to be related, they must share a common field. The common field (key field) in the "one" table of a One-to-Many relationship needs to be a primary key. The same field in the "many" table of a One-to-Many relationship is called the foreign key.
Primary key: A primary key is a field or a combination of two or more fields. The value in the primary key field for each record uniquely identifies that record.
In the Customer/Orders example, customer number is the primary key for the Customer table: a customer number identifies one and only one customer in the Customer table. The primary key for the Orders table would be a field for the order number.

Foreign key: When a "one" table's primary key field is added to a related "many" table in order to create the common field which relates the two tables, it is called a foreign key in the "many" table. In the Customer/Orders example, the primary key (customer number) from the Customer table (the "one" table) is a foreign key in the Orders table (the "many" table). For each record in the Orders table, the foreign key identifies the unique record in the Customer table with which that order is associated.

3.4 Rationalization and Redundancy
Grouping logically related fields into distinct tables, determining key fields, and then relating distinct tables using common key fields is called rationalizing a database. There are two major reasons for designing a database this way:
• To avoid wasting storage space on redundant data
• To eliminate the complication of updating duplicate copies of data

For example, in the Customers/Orders database, we want to be able to identify the customer name, address, and phone number for each order, but we want to avoid repeating that information for each order. Repeating it would take up storage space needlessly and would make updating a customer's address, which would then have to be changed in every one of that customer's orders, difficult and time-consuming. To avoid redundancy:
1. Place all the fields related to customers (name, address, etc.) into a Customer table and create a primary key field which uniquely identifies each customer: Customer ID.
2. Put all the fields related to orders (date, salesperson, total, etc.) into the Orders table.
3. Include the primary key field (Customer ID) from the Customer table in the Orders table. The One-to-Many relationship between Customer and Orders is defined by the common field Customer ID.
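The three steps for avoiding redundancy can be sketched through Python's sqlite3 module (an assumed tool; the table names, field names and data are illustrative only). The customer's address is stored once, so a change of address is a single UPDATE, and a join on the common key field recovers the customer details for each order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
-- Step 1: customer fields in their own table, keyed by customer_id.
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT,
    address     TEXT,
    phone       TEXT
);
-- Steps 2 and 3: order fields, plus customer_id as a foreign key.
CREATE TABLE orders (
    order_number INTEGER PRIMARY KEY,
    order_date   TEXT,
    salesperson  TEXT,
    total        REAL,
    customer_id  INTEGER NOT NULL REFERENCES customers(customer_id)
);
""")

conn.execute("INSERT INTO customers VALUES (1, 'A. Patil', 'Old Road, Jalgaon', '555-0101')")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?, ?)",
    [(100, "2024-01-05", "S1", 250.0, 1), (101, "2024-02-10", "S2", 90.0, 1)],
)

# The address lives in one record, so one update changes it for every order.
conn.execute("UPDATE customers SET address = 'New Road, Jalgaon' WHERE customer_id = 1")

def orders_with_customer():
    # Join on the common key field to recover customer details per order.
    return conn.execute(
        "SELECT o.order_number, c.name, c.address FROM orders o "
        "JOIN customers c ON c.customer_id = o.customer_id "
        "ORDER BY o.order_number"
    ).fetchall()

for row in orders_with_customer():
    print(row)
```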
In the Customers table (the "one" table) Customer ID is a primary key, while in the Orders table (the "many" table) it is a foreign key.

Reference: Bruce Miller, 2005, Database Management Systems.

4. Introduction to Biological Databases

4.1 Need
One of the hallmarks of modern genomic research is the generation of enormous amounts of raw sequence data. As the volume of genomic data grows, sophisticated computational methodologies are required to manage the data deluge. Thus, the very first challenge in the genomics era is to store and handle the staggering volume of information through the establishment and use of computer databases. The development of databases to handle the vast amount of molecular biological data is therefore a fundamental task of bioinformatics. This chapter introduces some basic concepts related to databases, in particular the types, designs, and architectures of biological databases, with emphasis on retrieving data from the main biological databases such as GenBank. Although data retrieval is the main purpose of all databases, biological databases often have a higher level of requirement, known as knowledge discovery, which refers to the identification of connections between pieces of information that were not known when the information was first entered. For example, databases containing raw sequence information can perform extra computational tasks to identify sequence homology or conserved motifs. These features facilitate the discovery of new biological insights from raw data.

4.2 TYPES OF DATABASES
Originally, databases all used a flat file format, which is a long text file that contains many entries separated by a delimiter, a special character such as a vertical bar (|). Within each entry are a number of fields separated by tabs or commas.
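To see why searching a flat file is inefficient, here is a minimal sketch of the layout just described, assuming "|" between entries and a tab between fields; the field names and entries are made up. With no index, the search has to parse and compare every entry in the file.

```python
# Hypothetical field layout for each entry.
FIELDS = ("accession", "organism", "description")

# A tiny made-up flat file: "|" separates entries, a tab separates fields.
flat_text = (
    "ACC001\tHomo sapiens\themoglobin beta"
    "|ACC002\tMus musculus\tinsulin"
    "|ACC003\tHomo sapiens\tinsulin"
)

def parse_entries(text):
    """Yield each entry as a dict of named fields, in file order."""
    for raw_entry in text.split("|"):
        yield dict(zip(FIELDS, raw_entry.split("\t")))

def search(text, field, value):
    """Linear scan: without an index, every entry is read and compared.

    Returns the matching accessions and how many entries were examined.
    """
    hits, examined = [], 0
    for entry in parse_entries(text):
        examined += 1
        if entry.get(field) == value:
            hits.append(entry["accession"])
    return hits, examined

print(search(flat_text, "description", "insulin"))
```

The number of entries examined always equals the number of entries in the file, so search time grows with the size of the database.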
Except for the raw values in each field, the entire text file does not contain any hidden instructions for computers to search for specific information or to create reports based on certain fields from each record. The text file can be considered a single table. Thus, to search a flat file for a particular piece of information, a computer has to read through the entire file, an obviously inefficient process. This is manageable for a small database, but as database size increases or data types become more complex, this database style makes information retrieval very difficult. Indeed, searches through such files can exhaust the computer's memory and even crash the entire system because of the memory-intensive nature of the operation.

There are over 1,000 public and commercial biological databases. These biological databases usually contain genomics and proteomics data, but databases are also used in taxonomy. The data are mostly nucleotide sequences of genes or amino acid sequences of proteins. Furthermore, information about function, structure, localisation on the chromosome, clinical effects of mutations, and similarities of biological sequences can be found.

Most important public databases for molecular biology:
1. Primary sequence databases
2. Meta-databases
3. Genome browsers
4. Specialized databases
5. Expression, regulation & pathways databases
6. Protein sequence databases
7. Protein structure databases
8. Microarray databases
9. Protein-protein interactions

Overview
Biological databases have become an important tool in assisting scientists to understand and explain a host of biological phenomena, from the structure of biomolecules and their interactions, to the whole metabolism of organisms, to the evolution of species. This knowledge helps in the fight against diseases, assists in the development of medications, and helps in discovering basic relationships among species in the history of life.
Biological knowledge is usually distributed among many different specialized databases. This makes it difficult to ensure the consistency of information, which sometimes leads to low data quality.

By far the most important resource for finding biological databases is the special annual Database Issue of the journal Nucleic Acids Research (NAR). The Database Issue is freely available and categorizes all the publicly available online databases related to computational biology (or bioinformatics).

Most important public databases for molecular biology (from http://www.kokocinski.net/bioinformatics/databases.php)

Primary sequence databases
The International Nucleotide Sequence Database (INSD) consists of the following databases:
1. DDBJ (DNA Data Bank of Japan)
2. EMBL Nucleotide DB (European Molecular Biology Laboratory)
3. GenBank [1] (National Center for Biotechnology Information)
These databanks represent the current knowledge about the sequences of all organisms. They exchange the stored information with one another and are the source for many other databases.

Meta-databases
1. MetaDB (MetaDB: A Metadatabase for the Biological Sciences), containing links and descriptions for over 1200 biological databases
2. Entrez [2] (National Center for Biotechnology Information)
3. euGenes (Indiana University)
4. GeneCards (Weizmann Institute)
5. SOURCE (Stanford University)
6. mGen, containing four of the world's biggest databases (GenBank, RefSeq, EMBL and DDBJ), with easy, program-friendly gene extraction
7. Harvester (EMBL Heidelberg), integrating 16 major protein resources
Strictly speaking, a meta-database can be considered a database of databases, rather than any one integration project or technology. It collects information from various other sources and usually makes it available in a new and more convenient form.

Genome Browsers
1. UCSC Genome Bioinformatics: Genome Browser and Tools (UCSC)
2. Ensembl Genome Browser (Sanger Institute and EBI)
3. Integrated Microbial Genomes: Microbial Genome Browser (Joint Genome Institute, Department of Energy)
4. GBrowse: The GMOD GBrowse Project
Genome browsers enable researchers to visualize and browse entire genomes (most have many complete genomes) with annotated data, including gene prediction and structure, proteins, expression, regulation, variation, comparative analysis, etc. The annotated data usually come from multiple diverse sources.

Specialized databases
1. CGAP: Cancer Genes (National Cancer Institute)
2. Clone Registry: Clone Collections (National Center for Biotechnology Information)
3. DBGET: H. sapiens (Univ. of Kyoto)
4. GDB: Hum. Genome Db (Human Genome Organisation)
5. I.M.A.G.E.: Clone Collections (I.M.A.G.E. Consortium)
6. MGI: Mouse Genome (Jackson Lab.)
7. SHMPD: The Singapore Human Mutation and Polymorphism Database
8. NCBI-UniGene (National Center for Biotechnology Information)
9. OMIM: Inherited Diseases (Online Mendelian Inheritance in Man)
10. Off. Hum. Genome Db (HUGO Gene Nomenclature Committee)
11. List with SNP-Databases
12. p53: The p53 Knowledgebase

Expression, regulation & pathways databases
1. KEGG PATHWAY Database [3] (Univ. of Kyoto)
2. Reactome [4] (Cold Spring Harbor Laboratory, EBI, Gene Ontology Consortium)

Protein sequence databases
1. UniProt [5]: Universal Protein Resource (UniProt Consortium: EBI, Expasy, PIR)
2. PIR: Protein Information Resource (Georgetown University Medical Center (GUMC))
3. Swiss-Prot [6]: Protein Knowledgebase (Swiss Institute of Bioinformatics)
4. PEDANT: Protein Extraction, Description and ANalysis Tool (Forschungszentrum f. Umwelt & Gesundheit)
5. PROSITE: Database of Protein Families and Domains
6. DIP: Database of Interacting Proteins (Univ. of California)
7. Pfam: Protein families database of alignments and HMMs (Sanger Institute)
8. ProDom: Comprehensive set of Protein Domain Families (INRA/CNRS)
9. SignalP: Server for signal peptide prediction

Protein structure databases
1. Protein Data Bank [7] (PDB) (Research Collaboratory for Structural Bioinformatics (RCSB))
2. CATH: Protein Structure Classification
3. SCOP: Structural Classification of Proteins
4. SWISS-MODEL: Server and Repository for Protein Structure Models
5. ModBase: Database of Comparative Protein Structure Models (Sali Lab, UCSF)

Microarray databases
1. ArrayExpress (European Bioinformatics Institute)
2. Gene Expression Omnibus (National Center for Biotechnology Information)
3. maxd (Univ. of Manchester)
4. SMD (Stanford University)
5. GPX (Scottish Centre for Genomic Technology and Informatics)

Protein-protein interactions
1. BioGRID [8]: A General Repository for Interaction Datasets (Samuel Lunenfeld Research Institute)
2. STRING: a database of known and predicted protein-protein interactions (EMBL)
• Interactome

4.3 DNA sequence databases
NCBI: National Center for Biotechnology Information
Established in 1988 as a national resource for molecular biology information, NCBI creates public databases, conducts research in computational biology, develops software tools for analyzing genome data, and disseminates biomedical information, all for the better understanding of molecular processes affecting human health and disease.

EMBL: European Molecular Biology Laboratory
The EMBL nucleotide sequence database is maintained by the European Bioinformatics Institute (EBI), part of the European Molecular Biology Laboratory, whose headquarters are in Heidelberg, Germany. It archives up-to-date and detailed information about biological macromolecules such as nucleotide sequences and protein sequences.

DDBJ: DNA Data Bank of Japan
DDBJ began DNA data bank activities in earnest in 1986 at the National Institute of Genetics (NIG) with the endorsement of the Ministry of Education, Science, Sport and Culture.
From the beginning, DDBJ has functioned as one of the International DNA Databases, together with the two other members: EBI (European Bioinformatics Institute; responsible for the EMBL database) in Europe and NCBI (National Center for Biotechnology Information; responsible for the GenBank database) in the USA. Consequently, DDBJ has been collaborating with the two other data banks by exchanging data and information over the Internet and by regularly holding two meetings, the International DNA Data Banks Advisory Meeting and the International DNA Data Banks Collaborative Meeting. The Center for Information Biology at NIG was reorganized as the Center for Information Biology and DNA Data Bank of Japan (CIB-DDBJ) in 2001. The new center is to play a major role in carrying out research in information biology and in running DDBJ operations for the world. It is generally accepted that research in biology today requires computers as much as experimental equipment. In particular, researchers must rely on computers to analyze DNA sequence data, which are accumulating at a remarkably rapid rate; indeed, this need triggered the birth and development of information biology. DDBJ is the only DNA data bank in Japan officially certified to collect DNA sequences from researchers and to issue the internationally recognized accession numbers to data submitters. It collects data mainly from Japanese researchers, but also accepts data from, and issues accession numbers to, researchers in any other country. Since DDBJ exchanges the collected data with EMBL/EBI and GenBank/NCBI on a daily basis, the three data banks share virtually the same data at any given time. DDBJ also provides users worldwide with many tools for data retrieval and analysis, developed at DDBJ and elsewhere.
Database collaboration: NCBI, EMBL and DDBJ collaborate internationally, exchanging data and information over the Internet and regularly holding two meetings, the International DNA Data Banks Advisory Meeting and the International DNA Data Banks Collaborative Meeting. As a result, the three data banks share virtually the same data at any given time.

4.4 Protein sequence databases
Swiss-Prot (Swiss Institute of Bioinformatics)
Swiss-Prot strives to provide reliable protein sequences associated with a high level of annotation (such as the description of the function of a protein, its domain structure, post-translational modifications, variants, etc.), a minimal level of redundancy and a high level of integration with other databases.

In 2002, the UniProt consortium was created: it is a collaboration between the Swiss Institute of Bioinformatics, the European Bioinformatics Institute and the Protein Information Resource (PIR), funded by the National Institutes of Health. Swiss-Prot and its automatically curated supplement TrEMBL have joined with the Protein Information Resource protein database to produce the UniProt Knowledgebase, the world's most comprehensive catalogue of information on proteins. [2] As of 3 April 2007, UniProtKB/Swiss-Prot release 52.2 contained 263,525 entries, and UniProtKB/TrEMBL release 35.2 contained 4,232,122 entries.

The UniProt consortium produced three database components, each optimised for different uses:
• the UniProt Knowledgebase (UniProtKB: Swiss-Prot + TrEMBL);
• the UniProt Non-redundant Reference (UniRef) databases, which combine closely related sequences into a single record to speed similarity searches;
• the UniProt Archive (UniParc), a comprehensive repository of protein sequences, reflecting the history of all protein sequences.

TrEMBL: Translated EMBL, a database of protein sequences translated from the nucleotide sequences of the European Molecular Biology Laboratory database. It archives the same kind of information as Swiss-Prot, but its entries are annotated automatically rather than manually.
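UniRef's idea of combining closely related sequences into one record can be illustrated, in its simplest form, by collapsing identical sequences. This is only a sketch under that simplifying assumption: real UniRef clusters also merge sequence fragments and, at the UniRef90 and UniRef50 levels, sequences above an identity threshold, none of which this code attempts. The identifiers and sequences are made up.

```python
def collapse_identical(records):
    """Group (identifier, sequence) pairs whose sequences are identical.

    Returns one non-redundant record per distinct sequence, in first-seen
    order; the first identifier seen becomes the representative.
    """
    clusters = {}  # dicts keep insertion order (Python 3.7+)
    for identifier, sequence in records:
        clusters.setdefault(sequence.upper(), []).append(identifier)
    return [
        {"representative": members[0], "members": members, "sequence": seq}
        for seq, members in clusters.items()
    ]

# Hypothetical protein entries (identifiers and sequences are invented).
entries = [
    ("P_A", "MKTAYIAKQR"),
    ("P_B", "MKTAYIAKQR"),  # identical to P_A
    ("P_C", "MVLSPADKTN"),
    ("P_D", "mktayiakqr"),  # same sequence, written in lower case
]

nr = collapse_identical(entries)
print(len(entries), "->", len(nr))
for rec in nr:
    print(rec["representative"], rec["members"])
```

A similarity search against the two non-redundant records does half the work of searching all four entries, while the member lists keep track of every original identifier.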
5. Genomics and proteomics

5.1 What are gene, genome and genomics?
Gene: A gene is a segment of DNA (of a chromosome) responsible for coding one or more functional proteins.
Genome: The genome is the gene complement of an organism. A genome sequence comprises the information of the entire genetic material of an organism.
Genomics: Genomics is the science that deals with the study of entire genomes and of gene organization, such as gene order, gene arrangement, gene ontology, etc. The goal of genomics is to determine the complete DNA sequence for all the genetic material contained in an organism's complete genome.

5.2 Structural genomics
Structural genomics is the branch of genomics that determines the three-dimensional structures of proteins. Structural genomics or structural bioinformatics refers to the analysis of macromolecular structure, particularly proteins, using computational tools and theoretical frameworks. One of the goals of structural genomics is to extend the idea of genomics to obtain accurate three-dimensional structural models for all known protein families, protein domains or protein folds. Structural alignment is a tool of structural genomics.

5.3 Functional genomics
Understanding the function of genes and other parts of the genome is known as functional genomics. Functional genomics is a field of molecular biology that attempts to make use of the vast wealth of data produced by genomic projects (such as genome sequencing projects) to describe gene (and protein) functions and interactions. Functional genomics focuses on the dynamic aspects, such as gene transcription, translation, and protein-protein interactions, as opposed to the static aspects of the genomic information, such as DNA sequence or structures.

Fields of Application
Functional genomics includes function-related aspects of the genome itself, such as mutation and polymorphism (e.g. SNP) analysis, as well as measurement of molecular activities.
The latter comprise a number of "-omics", such as transcriptomics (gene expression), proteomics (protein expression), phosphoproteomics and metabolomics. Together, these measurement modalities quantify the various biological processes and support the understanding of gene and protein functions and interactions.

Frequently Used Techniques
Functional genomics mostly uses high-throughput techniques to characterize the abundance of gene products such as mRNA and proteins. Some typical technology platforms are:
• DNA microarrays and SAGE for mRNA
• two-dimensional gel electrophoresis and mass spectrometry for proteins
Because of the large quantity of data produced by these techniques and the desire to find biologically meaningful patterns, bioinformatics is crucial to this type of analysis. Examples of techniques in bioinformatics are data clustering or principal component analysis for unsupervised machine learning (class detection), as well as artificial neural networks or support vector machines for supervised machine learning (class prediction, classification).

5.4 Proteome and proteomics
Proteome: The proteome is the protein complement expressed by a genome. While the genome is static, the proteome continually changes in response to external and internal events.
Proteomics: The study of how the entire set of proteins produced by a particular organism interact. It encompasses the identification and quantification of proteins, and the effect of their modifications, interactions, activities, and function, during disease states and treatment. Other definitions in use include:
• A study of an organism's proteins, including the molecular structure of the proteins; protein structure often determines the roles that proteins play in physiology.
• A term applied to anyone working with proteins, which is almost everyone in the post-genomic age.
• The science of determining protein structure and function.
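The unsupervised "data clustering" mentioned under Frequently Used Techniques can be sketched with plain k-means on gene expression profiles. The expression values are invented, and seeding the centroids with the first k profiles is a simplifying assumption; practical analyses typically use better initialisation, multiple restarts, or other methods such as hierarchical clustering.

```python
import math

def distance(a, b):
    """Euclidean distance between two expression profiles."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(profiles):
    """Element-wise mean of a list of equal-length profiles."""
    return [sum(values) / len(profiles) for values in zip(*profiles)]

def k_means(profiles, k, iterations=20):
    """Plain k-means; centroids are seeded with the first k profiles."""
    centroids = [list(p) for p in profiles[:k]]
    labels = []
    for _ in range(iterations):
        # Assignment step: each profile joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: distance(p, centroids[c])) for p in profiles
        ]
        if new_labels == labels:
            break  # assignments unchanged: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(profiles, labels) if lab == c]
            if members:
                centroids[c] = centroid(members)
    return labels

# Invented expression levels for five genes at four time points.
expression = {
    "geneA": [1.0, 2.0, 4.0, 8.0],
    "geneB": [8.0, 4.0, 2.0, 1.0],
    "geneC": [1.2, 2.1, 3.9, 7.5],
    "geneD": [7.8, 4.2, 2.2, 0.9],
    "geneE": [0.9, 1.8, 4.1, 8.2],
}

names = list(expression)
labels = k_means(list(expression.values()), k=2)
clusters = {}
for name, label in zip(names, labels):
    clusters.setdefault(label, []).append(name)
print(clusters)
```

No gene is labelled in advance; the algorithm discovers two classes (rising and falling expression), which is what "class detection" means here.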
5.5 What is comparative genomics? How does it relate to functional genomics?
Comparative genomics is the analysis and comparison of genomes from different species. The purpose is to gain a better understanding of how species have evolved and to determine the function of genes and noncoding regions of the genome. Researchers have learned a great deal about the function of human genes by examining their counterparts in simpler model organisms such as the mouse. Genome researchers look at many different features when comparing genomes: sequence similarity, gene location, the length and number of coding regions (called exons) within genes, the amount of noncoding DNA in each genome, and highly conserved regions maintained in organisms as simple as bacteria and as complex as humans.

Comparative genomics involves the use of computer programs that can line up multiple genomes and look for regions of similarity among them. Some of these sequence-similarity tools are accessible to the public over the Internet. One of the most widely used is BLAST, which is available from the National Center for Biotechnology Information. BLAST is a set of programs designed to perform similarity searches on all available sequence data. For instructions on how to use BLAST, see the tutorial "Sequence similarity searching using NCBI BLAST", available through Gene Gateway, an online guide for learning about genes, proteins, and genetic disorders.

Why is model organism research important? Why do we care what diseases mice get?
Functional genomics research is conducted using model organisms such as mice. Model organisms offer a cost-effective way to follow the inheritance of genes (that are very similar to human genes) through many generations in a relatively short time.
Some model organisms studied in the HGP were the bacterium Escherichia coli, the yeast Saccharomyces cerevisiae, the roundworm Caenorhabditis elegans, the fruit fly Drosophila melanogaster, and the laboratory mouse. Additionally, HGP spinoffs have led to genetic analysis of other environmentally and industrially important organisms in the United States and abroad. For more information, see "Microbial Genomes Sequenced".

How closely related are mice and humans? How many genes are the same?
Answer provided by Lisa Stubbs of Lawrence Livermore National Laboratory, Livermore, California.
Mice and humans (indeed, most or all mammals including dogs, cats, rabbits, monkeys, and apes) have roughly the same number of nucleotides in their genomes: about 3 billion base pairs. This comparable DNA content implies that all mammals contain more or less the same number of genes, and indeed our work and the work of many others have provided evidence to confirm that notion. I know of only a few cases in which no mouse counterpart can be found for a particular human gene, and for the most part we see essentially a one-to-one correspondence between genes in the two species. The exceptions generally appear to be of a particular type: genes that arise when an existing sequence is duplicated. Gene duplication occurs frequently in complex genomes; sometimes the duplicated copies degenerate to the point where they are no longer capable of encoding a protein. However, many duplicated genes remain active and over time may change enough to perform a new function. Since gene duplication is an ongoing process, mice may have active duplicates that humans do not possess, and vice versa. These appear to make up a small percentage of the total genes. I believe the number of human genes without a clear mouse counterpart, and vice versa, won't be significantly larger than 1% of the total. Nevertheless, these novel genes may play an important role in determining species-specific traits and functions.
However, the most significant differences between mice and humans are not in the number of genes each carries but in the structure of genes and the activities of their protein products. Gene for gene, we are very similar to mice. What really matters is that subtle changes accumulated in each of the approximately 30,000 genes add together to make quite different organisms. Further, genes and proteins interact in complex ways that multiply the functions of each. In addition, a gene can produce more than one protein product through alternative splicing or post-translational modification; these events do not always occur in an identical way in the two species. A gene can produce more or less protein in different cells at various times in response to developmental or environmental cues, and many proteins can express disparate functions in various biological contexts. Thus, subtle distinctions are multiplied by the more than 30,000 estimated genes. The often-quoted statement that we share over 98% of our genes with apes (chimpanzees, gorillas, and orangutans) should actually be put another way: related genes in humans and apes are, in general, 95% to 98% similar. (Just as in the mouse, quite a few genes probably are not common to humans and apes, and these may influence uniquely human or ape traits.) Similarities between mouse and human genes range from about 70% to 90%, with an average of 85% similarity but a lot of variation from gene to gene (e.g., some mouse and human gene products are almost identical, while others are nearly unrecognizable as close relatives). Some nucleotide changes are “neutral” and do not yield a significantly altered protein. Others, but probably only a relatively small percentage, would introduce changes that could substantially alter what the protein does.
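Two ideas from the answer above can be sketched in code: percentage similarity between genes, and why some single nucleotide changes are “neutral”. Percent identity is computed here as matching positions divided by alignment length, which is one common convention (others divide by the shorter sequence or exclude gaps). The sequences are made up, and the codon table is a small subset of the standard genetic code, just large enough for the example.

```python
# Small subset of the standard genetic code (enough for this example only).
CODON_TABLE = {
    "ACT": "T",  # threonine
    "CCT": "P",  # proline
    "GAG": "E",  # glutamate
    "GAA": "E",  # glutamate (synonymous with GAG)
    "GTG": "V",  # valine
}

def percent_identity(aligned1, aligned2):
    """Matching positions / alignment length * 100; gaps ('-') never match."""
    if len(aligned1) != len(aligned2):
        raise ValueError("aligned sequences must have the same length")
    matches = sum(1 for a, b in zip(aligned1, aligned2) if a == b and a != "-")
    return 100.0 * matches / len(aligned1)

def translate(dna):
    """Translate codon by codon (only codons present in CODON_TABLE)."""
    return "".join(CODON_TABLE[dna[i:i + 3]] for i in range(0, len(dna) - 2, 3))

def classify_change(dna, position, new_base):
    """Apply one nucleotide substitution and compare the encoded peptides."""
    mutated = dna[:position] + new_base + dna[position + 1:]
    before, after = translate(dna), translate(mutated)
    kind = "synonymous" if before == after else "amino acid change"
    return kind, before, after

# 17 of 20 aligned positions match.
print(percent_identity("ACGTACGTACGTACGTACGT", "TCGTAGGTACATACGTACGT"))

coding = "ACTCCTGAGGAG"  # made-up fragment: Thr-Pro-Glu-Glu
print(classify_change(coding, 8, "A"))  # GAG -> GAA
print(classify_change(coding, 7, "T"))  # GAG -> GTG
```

The third-position change (GAG to GAA) leaves the peptide unchanged, while the second-position change (GAG to GTG) replaces glutamate with valine, so two single nucleotide changes in the same codon can have very different consequences.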
Put these alterations in the context of known inherited human diseases: a single nucleotide change can lead to inheritance of sickle cell disease, cystic fibrosis, or breast cancer. A single nucleotide difference can alter protein function in such a way that it causes a terrible tissue malfunction. Single nucleotide changes have been linked to hereditary differences in height, brain development, facial structure, pigmentation, and many other striking morphological differences; due to single nucleotide changes, hands can develop structures that look like toes instead of fingers, and a mouse's tail can disappear completely. Single-nucleotide changes in the same genes but at different positions in the coding sequence might do nothing harmful at all. Evolutionary changes are of the same kind as the sequence differences that are linked to person-to-person variation: many of the average 15% nucleotide changes that distinguish human and mouse genes are neutral; some lead to subtle changes, whereas others are associated with dramatic differences. Add them all together, and they can make quite an impact, as evidenced by the huge range of metabolic, morphological, and behavioral differences we see among organisms.

Why are mice used in this research?
Mice are genetically very similar to humans. They also reproduce rapidly, have short life spans, are inexpensive and easy to handle, and can be genetically manipulated at the molecular level.

5.6 What genomes have been sequenced completely?
In addition to the human genome, numerous other genomes have been sequenced. These include the mouse Mus musculus, the fruit fly Drosophila melanogaster, the worm Caenorhabditis elegans, the bacterium Escherichia coli, the yeast Saccharomyces cerevisiae, the plant Arabidopsis thaliana, and several microbes. For a complete listing, see A Quick Guide to Sequenced Genomes from the Genome News Network.
Other resources for information on sequenced genomes:
• GOLD (Genomes Online Database): provides comprehensive access to information regarding complete and ongoing genome projects around the world.
• Comprehensive Microbial Resource: a tool that allows the researcher to access all of the bacterial genome sequences completed to date. From The Institute for Genomic Research (TIGR).
• Entrez Genome: a resource from the National Center for Biotechnology Information (NCBI) for accessing information about completed and in-progress genomes.

5.7 Overview of comparative genomic analysis
Sequencing the genomes of the human, the mouse and a wide variety of other organisms, from yeast to chimpanzees, is driving the development of an exciting new field of biological research called comparative genomics. By comparing the human genome with the genomes of different organisms, researchers can better understand the structure and function of human genes and thereby develop new strategies in the battle against human disease. In addition, comparative genomics provides a powerful new tool for studying evolutionary changes among organisms, helping to identify the genes that are conserved among species along with the genes that give each organism its own unique characteristics.

Using computer-based analysis to zero in on the genomic features that have been preserved in multiple organisms over millions of years, researchers will be able to pinpoint the signals that control gene function, which in turn should translate into innovative approaches for treating human disease and improving human health. In addition, the evolutionary perspective may prove extremely helpful in understanding disease susceptibility. For example, chimpanzees do not suffer from some of the diseases that strike humans, such as malaria and AIDS.
A comparison of the sequence of genes involved in disease susceptibility may reveal the reasons for this species barrier, thereby suggesting new pathways for prevention of human disease.

Although living creatures look and behave in many different ways, all of their genomes consist of DNA, the chemical chain that makes up the genes that code for thousands of different kinds of proteins. Precisely which protein is produced by a given gene is determined by the sequence in which four chemical building blocks, adenine (A), thymine (T), cytosine (C) and guanine (G), are laid out along DNA's double-helix structure. In order for researchers to use an organism's genome most efficiently in comparative studies, data about its DNA must be in large, contiguous segments, anchored to chromosomes and, ideally, fully sequenced. Furthermore, the data need to be organized for easy access and high-speed analysis by sophisticated computer software. The successful sequencing of the human genome, which was completed in April 2003, and the recent draft assemblies of the mouse and rat genomes have demonstrated that large-scale sequencing projects can generate high-quality data at a reasonable cost. As a result, interest in sequencing the genomes of many other organisms has risen dramatically.

The fledgling field of comparative genomics has already yielded some dramatic results. For example, a March 2000 study comparing the fruit fly genome with the human genome found that about 60 percent of genes are conserved between fly and human; to put it more simply, the two organisms appear to share a core set of genes. Researchers have found that two-thirds of human cancer genes have counterparts in the fruit fly.
Even more surprisingly, when scientists inserted a human gene associated with early-onset Parkinson's disease into fruit flies, the flies displayed symptoms similar to those seen in humans with the disorder, raising the possibility that the tiny insects could serve as a new model for testing therapies aimed at Parkinson's.

In September 2002, the cow (Bos taurus), the dog (Canis familiaris) and the ciliate Oxytricha (Oxytricha trifallax) joined the list of "high priority" organisms that the National Human Genome Research Institute (NHGRI) decided to consider for genome sequencing as capacity becomes available. Other high-priority animals include the chimpanzee (Pan troglodytes), the chicken (Gallus gallus), the honey bee (Apis mellifera) and even a sea urchin (Strongylocentrotus purpuratus). With sequencing projects on the human, mouse and rat genomes progressing rapidly and nearing completion, NHGRI-supported sequencing capability is expected to be available soon for work on other organisms. NHGRI created a priority-setting process in 2001 to make rational decisions about the many requests being brought forward by various communities of scientists, each championing the animals used in its own research. The priority-setting process, which does not result in new grants for sequencing the organisms, is based on the medical, agricultural and biological opportunities expected to be created by sequencing a given organism.

In addition to its implications for human health and well-being, comparative genomics may benefit the animal world as well. As sequencing technology grows easier and less expensive, it will likely find wide applications in zoology as a tool to tease apart the often-subtle differences among animal species. Such efforts might lead to the rearrangement of some branches on the evolutionary tree, as well as point to new strategies for conserving or expanding rare and endangered species.

6. Human Genome Project

What was the Human Genome Project?
The Human Genome Project (HGP) was the international, collaborative research program whose goal was the complete mapping and understanding of all the genes of human beings. All our genes together are known as our "genome." The HGP was the natural culmination of the history of genetics research. In 1911, Alfred Sturtevant, then an undergraduate researcher in the laboratory of Thomas Hunt Morgan, realized that he could (and, in order to manage his data, had to) map the locations of the fruit fly (Drosophila melanogaster) genes whose mutations the Morgan laboratory was tracking over generations. Sturtevant's very first gene map can be likened to the Wright brothers' first flight at Kitty Hawk. In turn, the Human Genome Project can be compared to the Apollo program that brought humanity to the moon. The hereditary material of all multicellular organisms is the famous double helix of deoxyribonucleic acid (DNA), which contains all of our genes. DNA, in turn, is made up of four chemical bases, pairs of which form the "rungs" of the twisted, ladder-shaped DNA molecule. All genes are made up of stretches of these four bases, arranged in different ways and in different lengths. HGP researchers have deciphered the human genome in three major ways: determining the order, or "sequence," of all the bases in our genome's DNA; making maps that show the locations of genes for major sections of all our chromosomes; and producing linkage maps, complex versions of the type originated in early Drosophila research, through which inherited traits (such as those for genetic disease) can be tracked over generations. The HGP has revealed that there are probably somewhere between 30,000 and 40,000 human genes, and the completed human sequence now makes it possible to identify their locations. This ultimate product of the HGP has given the world a resource of detailed information about the structure, organization and function of the complete set of human genes.
This information can be thought of as the basic set of inheritable "instructions" for the development and function of a human being. The International Human Genome Sequencing Consortium published the first draft of the human genome in the journal Nature in February 2001, with the sequence of the entire genome's three billion base pairs some 90 percent complete. A startling finding of this first draft was that the number of human genes appeared to be significantly smaller than previous estimates, which ranged from 50,000 genes to as many as 140,000. The full sequence was completed and published in April 2003. Upon publication of the majority of the genome in February 2001, Francis Collins, the director of NHGRI, noted that the genome could be thought of in terms of a book with multiple uses: "It's a history book - a narrative of the journey of our species through time. It's a shop manual, with an incredibly detailed blueprint for building every human cell. And it's a transformative textbook of medicine, with insights that will give health care providers immense new powers to treat, prevent and cure disease." The tools created through the HGP also continue to inform efforts to characterize the entire genomes of several other organisms used extensively in biological research, such as mice, fruit flies and roundworms. These efforts support each other, because most organisms have many similar, or "homologous," genes with similar functions. Therefore, identifying the sequence or function of a gene in a model organism, for example the roundworm C. elegans, has the potential to explain a homologous gene in human beings or in one of the other model organisms. These ambitious goals required, and will continue to demand, a variety of new technologies that have made it possible to construct a first draft of the human genome relatively rapidly and to continue refining that draft.
These techniques include:
• DNA sequencing
• The use of restriction fragment length polymorphisms (RFLPs)
• Yeast artificial chromosomes (YACs)
• Bacterial artificial chromosomes (BACs)
• The polymerase chain reaction (PCR)
• Electrophoresis

Of course, information is only as good as the ability to use it. Therefore, advanced methods for widely disseminating the information generated by the HGP to scientists, physicians and others are necessary to ensure the most rapid application of research results for the benefit of humanity. Biomedical technology and research are particular beneficiaries of the HGP. However, the momentous implications for individuals and society of possessing the detailed genetic information made possible by the HGP were recognized from the outset. Another major component of the HGP, and an ongoing component of NHGRI, is therefore devoted to the analysis of the ethical, legal and social implications (ELSI) of our newfound genetic knowledge and to the subsequent development of policy options for public consideration.

Glossary

Keyword: Analogous protein
Definition: Two proteins with related folds but unrelated sequences are called analogous. During evolution, analogous proteins independently developed the same fold.

Keyword: Databank
Definition: In the biosciences, a databank (or data bank) is a structured set of raw data, most notably DNA sequences from sequencing projects (e.g. the EMBL and GenBank databases).

Keyword: Database
Definition: A database (or data base) is a collection of data that is organized so that its contents can easily be accessed, managed, and modified by a computer. The most prevalent type of database is the relational database, which organizes the data in tables; multiple relations can be mathematically defined between the rows and columns of each table to yield the desired information.
An object-oriented database stores data in the form of objects, which are organized in hierarchical classes that may inherit properties from classes higher in the tree structure. In the biosciences, a database is a curated repository of raw data together with annotations, further analysis and links to other databases. Examples of databases are the SWISSPROT database of annotated protein sequences and the FlyBase database of genetic and molecular data for Drosophila melanogaster.

Keyword: Dynamic Programming
Definition: In general, dynamic programming is an algorithmic scheme for solving discrete optimization problems that have overlapping subproblems. In a dynamic programming algorithm, the definition of the function that is optimized is extended as the computation proceeds. The solution is constructed by progressing from simpler to more complex cases, thereby solving each subproblem before it is needed by any other subproblem. In particular, the algorithm for finding optimal alignments is an example of dynamic programming.

Keyword: Force field
Definition: In molecular dynamics and molecular mechanics calculations, the intra- and intermolecular interactions of a molecule are calculated from a simplified empirical parametrization called a force field. It includes parameters such as atom masses and charges, together with terms for dihedral angles, improper angles, and van der Waals and electrostatic interactions.

Keyword: Genome
Definition: The genome is the gene complement of an organism. A genome sequence comprises the information of the entire genetic material of an organism.

Keyword: Genomics, Functional Genomics, Structural Genomics
Definition: The goal of genomics is to determine the complete DNA sequence of all the genetic material contained in an organism's genome. Functional genomics (sometimes referred to as functional proteomics) aims at determining the function of the proteome (the protein complement encoded by an organism's entire genome).
It expands the scope of biological investigation from studying single genes or proteins to studying all genes or proteins at once in a systematic fashion, using large-scale experimental methodologies combined with statistical analysis of the results. Structural genomics is the systematic effort to gain a complete structural description of a defined set of molecules, ultimately for an organism's entire proteome. Structural genomics projects apply X-ray crystallography and NMR spectroscopy in a high-throughput manner.

Keyword: Hidden Markov Model
Definition: A Hidden Markov Model (HMM) is a general probabilistic model for sequences of symbols. In a (first-order) Markov chain, the probability of each symbol depends only on the preceding one. In a hidden Markov model, the states of the chain are not observed directly; instead, each hidden state emits the observed symbols according to its own probability distribution. Hidden Markov models are widely used in bioinformatics, most notably to replace sequence profiles in the calculation of sequence alignments.

Keyword: Homologous Proteins
Definition: Two proteins with related folds and related sequences are called homologous. Homologous proteins are commonly further divided into orthologous and paralogous proteins: orthologous proteins occur in different species and diverged from a common ancestral gene through speciation, whereas paralogous proteins arose by gene duplication.

Keyword: Neural Network
Definition: A neural network is a computer algorithm for solving non-linear problems such as classification and pattern recognition; its parameters are fitted by non-linear optimisation. Its design was inspired by the way the densely interconnected, parallel structure of the brain processes information.

Keyword: Ontology
Definition: The word ontology has a long history in philosophy, in which it refers to the study of being as such. In information science, an ontology is an explicit formal specification of how to represent the objects, concepts and other entities that are assumed to exist in some area of interest and the relationships among them.

Keyword: Open Reading Frame (ORF)
Definition: An open reading frame contains a series of codons (base triplets) coding for amino acids without any termination (stop) codons.
An uncharacterized double-stranded DNA sequence has six potential reading frames, three on each strand.

Keyword: Protein Folding Problem
Definition: Proteins fold on time scales ranging from milliseconds to seconds. Starting from a random-coil conformation, proteins find their stable fold quickly even though the number of possible conformations is astronomically high. The Protein Folding Problem is to predict the folding pathway and the final structure of a protein solely from its sequence. The related Protein Structure Prediction Problem is the combinatorial problem of calculating the three-dimensional structure of a protein from its sequence alone. It is one of the biggest challenges in structural bioinformatics.

Keyword: Proteome
Definition: The proteome is the protein complement expressed by a genome. While the genome is static, the proteome continually changes in response to external and internal events.

Keyword: Proteomics
Definition: Proteomics aims at quantifying the expression levels of the complete protein complement (the proteome) of a cell at any given time. While proteomics research initially focused on two-dimensional gel electrophoresis for protein separation and identification, proteomics now refers to any procedure that characterizes the function of large sets of proteins. It is thus often used as a synonym for functional genomics.

Keyword: Sequence Contig
Definition: A contig consists of a set of gel readings from a sequencing project that are related to one another by overlap of their sequences. The gel readings of a contig can be combined to form a contiguous consensus sequence, whose length is called the length of the contig.

Keyword: Sequence Profile
Definition: A sequence profile represents certain features in a set of aligned sequences. In particular, it gives position-dependent weights for all 20 amino acids, as well as for insertion and deletion events, at every sequence position.
Keyword: Single Nucleotide Polymorphism
Definition: Single Nucleotide Polymorphisms (SNPs) are single base-pair positions in genomic DNA at which normal individuals in a given population show different sequence alternatives (alleles), with the least frequent allele having an abundance of 1% or greater. SNPs occur roughly once every 100 to 300 bases and are the most common type of genetic variation.

Keyword: Threading
Definition: Threading techniques try to match a target sequence against a library of known three-dimensional structures by "threading" the target sequence over the known coordinates. In this manner, threading tries to predict the three-dimensional structure of a given protein sequence. It is sometimes successful when comparisons based on sequences or sequence profiles alone fail because the sequence similarity is too low.

Keyword: Turing Machine
Definition: The Turing machine is one of the key abstractions used in modern computability theory. It is a mathematical model of a device that changes its internal state and reads from, writes on, and moves a potentially infinite tape, all in accordance with its present state. The model of the Turing machine played an important role in the conception of the modern digital computer.
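The glossary's Dynamic Programming entry names optimal sequence alignment as its main example. The sketch below fills the classic global-alignment table (the Needleman-Wunsch recurrence) and returns only the optimal score, without tracing back the alignment itself. The scoring values (match +1, mismatch -1, gap -2) and the function name are assumptions chosen for illustration; practical tools use substitution matrices such as BLOSUM62 and affine gap penalties.

```python
def global_alignment_score(a: str, b: str, match: int = 1,
                           mismatch: int = -1, gap: int = -2) -> int:
    """Optimal global alignment score using a Needleman-Wunsch style table."""
    rows, cols = len(a) + 1, len(b) + 1
    # score[i][j] = best score for aligning the prefix a[:i] with b[:j]
    score = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        score[i][0] = i * gap  # a[:i] aligned entirely against gaps
    for j in range(1, cols):
        score[0][j] = j * gap  # b[:j] aligned entirely against gaps
    for i in range(1, rows):
        for j in range(1, cols):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = score[i - 1][j] + gap    # a[i-1] aligned to a gap
            left = score[i][j - 1] + gap  # b[j-1] aligned to a gap
            score[i][j] = max(diag, up, left)
    return score[-1][-1]


print(global_alignment_score("GATTACA", "GATCA"))  # 1 (e.g. GATTACA vs GAT--CA)
```

Each cell depends only on three neighbours that have already been filled in, which is exactly the "solve each subproblem before it is needed" property described in the glossary.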
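The Hidden Markov Model entry builds on an ordinary Markov chain, in which each symbol depends only on the one before it. As a minimal sketch of that first step only (this is a plain, fully observed Markov chain, not an HMM: there are no hidden states or emission probabilities), the log-probability of a DNA sequence can be computed as below. The function name and the uniform probabilities are hypothetical.

```python
import math


def markov_log_probability(seq: str, initial: dict[str, float],
                           transition: dict[str, dict[str, float]]) -> float:
    """Natural-log probability of seq under a first-order Markov chain."""
    if not seq:
        raise ValueError("sequence must not be empty")
    log_p = math.log(initial[seq[0]])  # probability of the first symbol
    for prev, curr in zip(seq, seq[1:]):
        # each symbol's probability depends only on the preceding symbol
        log_p += math.log(transition[prev][curr])
    return log_p


# Hypothetical uniform model: every base and every transition has probability 0.25
bases = "ACGT"
uniform_start = {b: 0.25 for b in bases}
uniform_steps = {b: {c: 0.25 for c in bases} for b in bases}
print(markov_log_probability("ACGT", uniform_start, uniform_steps))
```

Log-probabilities are summed instead of multiplying raw probabilities because products of many small numbers quickly underflow for long sequences.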
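The Open Reading Frame entry notes that a DNA sequence has six potential reading frames, three on each strand. A minimal sketch, assuming the standard genetic code's stop codons (TAA, TAG, TGA) and, like the glossary definition, ignoring start codons, reports the longest stop-free run of codons in each frame. The function names are hypothetical.

```python
COMPLEMENT = str.maketrans("ACGT", "TGCA")
STOP_CODONS = {"TAA", "TAG", "TGA"}  # standard genetic code


def reverse_complement(seq: str) -> str:
    """Sequence of the opposite strand, read 5' to 3'."""
    return seq.translate(COMPLEMENT)[::-1]


def longest_orf_per_frame(seq: str) -> dict[str, int]:
    """Length (in codons) of the longest stop-free run in each of the six frames."""
    seq = seq.upper()
    strands = {"+": seq, "-": reverse_complement(seq)}
    result = {}
    for strand, s in strands.items():
        for offset in range(3):  # three frames per strand
            longest = current = 0
            for i in range(offset, len(s) - 2, 3):
                if s[i:i + 3] in STOP_CODONS:
                    current = 0  # a stop codon ends the open stretch
                else:
                    current += 1
                    longest = max(longest, current)
            result[f"{strand}{offset + 1}"] = longest
    return result


print(longest_orf_per_frame("ATGAAATAG"))
# {'+1': 2, '+2': 1, '+3': 2, '-1': 3, '-2': 2, '-3': 2}
```

Real gene finders also require a start codon (usually ATG) and a minimum length before reporting an ORF; those rules are left out here to match the glossary's simpler definition.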