Recommender using K-Nearest Neighbor and

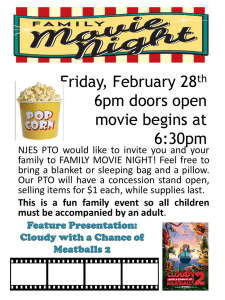


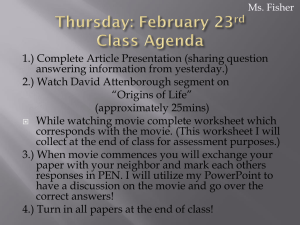

Movie Advisor

David Witherspoon
University of Colorado at Boulder
Boulder, Colorado 80309

ABSTRACT
Develop a recommendation application that predicts new items of interest for a user by combining classifiers to increase accuracy. The design follows the principle of boosting, in which one classifier feeds another so that the system benefits from the strengths of each. In this paper I take algorithms that have worked well in other papers and combine and modify them to maximize the accuracy of the recommendations made to the user. I also make some modifications to address possible shortcomings in the correlation analysis.

Keywords
Collaborative Filtering, Naïve Bayesian Network, Recommender, K-Nearest Neighbor, Data Mining, Knowledge Discovery, Weka.

1. INTRODUCTION

1.1 Motivation
I am currently working on a Research and Development (R&D) project that needs the ability to recommend tasks based on other users' ratings of other tasks. The premise of all recommendation applications is that people who have had similar interests in the past will continue to have similar interests in the future. Most recommendation systems use one algorithm to classify or predict a user's interest in new items, and this seems to be fairly accurate. For the data set that I will work with in the future, prediction accuracy is critical, and I am willing to sacrifice performance for gains in accuracy. This is a fine line to walk, since the system must not perform so slowly that waiting for a recommendation hinders the operator from using it. I will therefore look at using multiple algorithms that complement each other to provide more accurate predictions. Since the R&D project does not yet have a recommendation component, there is no data set to work with. Therefore, I will use the rich data sets provided by Netflix and the Internet Movie Database (IMDB).

1.2 Existing Techniques
The main issue with most recommendation systems is that they are not accurate enough for domains beyond recommending books, movies, music, etc. If the domain is closer to medical, military, or other life-altering situations, then the accuracy of the recommendation is critical. This led me to notice that most recommendation systems use only a single classifier or predictor. After reading about boosting and AdaBoost [2], I realized the benefits of having multiple classifiers that can work together to provide a more accurate prediction or classification.

1.3 Contributions
I plan to use the Locally Weighted Naïve Bayesian Network from section 2.4 to replace the Naïve Bayesian Network in the architecture of the Content-Boosted Collaborative Filtering recommender framework from section 2.1, in order to increase the accuracy of the recommendations. I will keep the standard K-Nearest Neighbor as the second classifier within the framework. I will also use the cosine measure [2], defined in equation (1), when the result of the Pearson correlation coefficient is not conclusive:

$$\mathrm{cosine}(A,B) = \frac{P(A \cup B)}{\sqrt{P(A)\,P(B)}} \tag{1}$$

2. RELATED WORK

2.1 Content-Boosted Collaborative Filtering
The concept behind Content-Boosted Collaborative Filtering (CBCF) is that two classifiers that complement each other will produce a more accurate prediction. The CBCF paper uses a naïve Bayesian network, K-nearest neighbor, the Pearson correlation coefficient, and other components to achieve an MAE of 0.962 [5]. The main techniques that I will use are the pseudo user vector of predictions, the concept of the K-nearest neighbor, and the Pearson correlation coefficient.
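As an illustration of the correlation step, the following is a minimal sketch of a user-to-user similarity that uses the Pearson correlation coefficient and falls back to a cosine measure when the Pearson result is not conclusive. Several things here are assumptions and not taken from the paper: "not conclusive" is read as "undefined, or smaller in magnitude than a hypothetical `threshold`", the function names are made up, and the fallback uses the common vector form of cosine similarity over co-rated movies in place of the probability form in equation (1).

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length rating lists, or None if undefined."""
    n = len(a)
    if n < 2:
        return None
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return None  # a constant rating vector has no defined correlation
    return cov / math.sqrt(var_a * var_b)

def cosine(a, b):
    """Vector cosine similarity; returns 0.0 when either vector is all zeros or empty."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def similarity(ratings_u, ratings_v, threshold=0.1):
    """Similarity of two users given dicts of movie_id -> rating.

    threshold is a hypothetical cut-off below which Pearson is
    treated as not conclusive (an assumption of this sketch).
    """
    common = sorted(set(ratings_u) & set(ratings_v))
    a = [ratings_u[m] for m in common]
    b = [ratings_v[m] for m in common]
    r = pearson(a, b)
    if r is None or abs(r) < threshold:
        return cosine(a, b)
    return r
```

With this design the neighbor search never has to discard a pair of users only because one of them rated every co-rated movie identically; whether that actually improves accuracy would still have to be measured.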
The pseudo user vector of predictions and actual ratings resolves the problem that the user-rating matrix is very sparse when most items have not been rated. One of the issues that I want to expand on is the Pearson correlation coefficient and the cases in which its value is not conclusive. Using another correlation measure in those cases will hopefully increase the accuracy of recommendations that were false negatives. The other issue that I want to improve on is the use of the naïve Bayesian network, which assumes that the attributes are independent. This assumption can cause errors in the prediction, which I am trying to reduce. Therefore I will use the locally weighted naïve Bayesian network described in section 2.4. No existing tool or API implements the framework described in the paper [5].

2.2 Slope One Predictors
The Slope One predictor is an item-based collaborative filtering scheme in which the predictor has the form f(x) = x + b [4]. The idea is that there are fewer item-to-item comparisons than user-to-user rating comparisons, which reduces the number of items to look up when computing a recommendation. The recommendation is calculated as follows. Suppose user A and user B have both rated the same item, and user A has also rated a new item that B is interested in. To predict B's rating of the new item, take B's rating of the common item and add the difference between A's rating of the new item and A's rating of the common item. This is illustrated in figure 1 of the Slope One Predictor paper [4]. The classifier is very simple and easy to implement, but its accuracy is not at an acceptable level for more critical recommendations, such as those in military or hospital settings.
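The calculation described above can be sketched in a few lines. This is a minimal version of the basic (unweighted) Slope One scheme, not the Mahout implementation; the function name, the nested-dict data layout, and the ratings in the example are illustrative assumptions.

```python
def slope_one_predict(ratings, user, target):
    """Predict user's rating of target with unweighted Slope One.

    ratings: dict of user -> dict of item -> rating.
    Returns None when no other user links target to an item the user rated.
    """
    estimates = []
    for item, user_rating in ratings[user].items():
        if item == target:
            continue
        # Differences (target - item) over other users who rated both items.
        diffs = [r[target] - r[item]
                 for other, r in ratings.items()
                 if other != user and target in r and item in r]
        if diffs:
            # f(x) = x + b, where b is the average difference.
            estimates.append(user_rating + sum(diffs) / len(diffs))
    return sum(estimates) / len(estimates) if estimates else None

# Two users, one common item, one new item.
example = {
    "A": {"common": 1.0, "new": 1.5},
    "B": {"common": 2.0},
}
prediction = slope_one_predict(example, "B", "new")  # 2.0 + (1.5 - 1.0) = 2.5
```

Because only average differences between item pairs are needed, those averages could be precomputed once, which is the source of the scheme's speed.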
In the Slope One Predictor paper the reported MAE is 1.90 [4], which is much larger than the MAE of the CBCF design as is. Therefore I will not use the Slope One classifier, but it could be a candidate for an application that is more concerned with performance and still needs good accuracy. The API that can be used to implement it is Taste, which has become Mahout under Apache and is located at http://lucene.apache.org/mahout/.

2.3 Use of K-Nearest Neighbor
The K-nearest neighbor (KNN) classifier is based on the premise that users who have rated movies similarly in the past will most likely rate movies similarly in the future. The main decision when training the K-nearest neighbor is the value of K. The results presented in the paper on the use of KNN [3] show that the Pearson correlation coefficient is the better coefficient to use. There is no real issue with the results of that paper; rather, they could be improved by using multiple classifiers to increase accuracy or, as the authors state, by using clustering [3]. The API that can be used to implement KNN is Taste, which has become Mahout under Apache and is located at http://lucene.apache.org/mahout/.

2.4 Locally Weighted Naïve Bayesian
The locally weighted naïve Bayesian network was developed because naïve Bayes does well when the assumption that the attributes are independent holds, but once that assumption is violated the predictions become less accurate. The authors kept the idea of a lazy learner: for each test instance, a new model is built from a weighted set of training instances in the locale of that test instance, which helps to mitigate the effects of attribute dependence [1]. The weighting idea is borrowed from locally weighted linear regression, where weighting is used to fit relationships that are non-linear.
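To make the idea of a weighted set of training instances in the locale of the test instance more concrete, here is a small sketch of linear distance-based instance weights and of a class prior estimated from weighted counts. It is loosely modelled on the description above and is not the exact procedure of [1] or of Weka: the use of Euclidean distance, the choice of the k-th nearest distance as the bandwidth, the Laplace smoothing, and both function names are assumptions made for illustration. It expects a non-empty training set and k of at least 1.

```python
import math

def local_weights(train_rows, query, k):
    """Linear instance weights: 1 at the query, falling to 0 at the k-th nearest row."""
    distances = [math.dist(row, query) for row in train_rows]
    # Assumption: the distance to the k-th nearest row acts as the bandwidth.
    bandwidth = sorted(distances)[min(k, len(distances)) - 1]
    if bandwidth == 0:
        # Degenerate case: the k nearest rows coincide with the query.
        return [1.0 if d == 0 else 0.0 for d in distances]
    return [max(0.0, 1.0 - d / bandwidth) for d in distances]

def weighted_class_prior(labels, weights):
    """Laplace-smoothed class prior computed from weighted counts."""
    classes = sorted(set(labels))
    total = sum(weights)
    return {
        c: (1.0 + sum(w for lab, w in zip(labels, weights) if lab == c))
           / (len(classes) + total)
        for c in classes
    }

# Four training rows on a line; the query sits on the first row.
rows = [(0, 0), (1, 0), (2, 0), (4, 0)]
weights = local_weights(rows, (0, 0), k=3)
prior = weighted_class_prior([5, 4, 4, 1], weights)
```

A full locally weighted naïve Bayes classifier would apply the same weighted counting to the per-attribute conditional probabilities; only the prior is shown here to keep the sketch short.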
Again there is no real issue with their approach beyond striving for higher accuracy, which leads me to use this version of the naïve Bayesian network in the CBCF framework in place of the original naïve Bayesian network. The API that can be used to implement the locally weighted naïve Bayesian network is Weka, located at http://www.cs.waikato.ac.nz/ml/weka/. This API also supports the naïve Bayesian network, which is the original Bayesian network described in the framework for the CBCF application [5].

3. PROPOSED WORK

3.1 Analyze Data Set
The first step is to download the Netflix and IMDB movie data from their respective web sites. I will then import the data into the database and create a component that attempts to merge the two data sources by movie title and keeps track of the fallout between the two systems. If the fallout of records is too great, I will proceed with the IMDB data source alone, since it contains attributes beyond time, movie, rating, and user. IMDB contains attributes such as genre that could be significant and increase the accuracy of the predictions.

3.2 Database Design and Analysis
Once the actual contents of the data set have been determined, I will look at what design changes can be made to the database to speed up the table scans performed by the classifiers. I will also need to design the table(s) that hold the pseudo user-rating vector described in section 2.1.

3.3 Data Sampling
Develop a component that generates the 10-fold cross-validation sets, where the data is randomly divided into 10 mutually exclusive subsets. This approach is recommended for estimating accuracy [2].

3.4 Develop Recommenders
Develop the locally weighted naïve Bayesian network, which populates the missing ratings in the user's vector of ratings. The K-nearest neighbor algorithm then uses this vector to determine the value of the rating, using the Pearson correlation coefficient in the range [0,1] and the cosine measure when the Pearson result is not conclusive.

3.5 Train and Test the System
I will use the training data sets generated by the component described in section 3.3 to train the locally weighted naïve Bayesian network and the K-Nearest Neighbor, with the aim of reducing the MAE and improving the accuracy of the system. After the system has been trained, I will run it on the testing data sets to see how accurately it provides recommendations.

3.6 Gather Metrics on RMSE and MAE
I will calculate both the Root Mean Squared Error (RMSE) and the Mean Absolute Error (MAE) to compare against results calculated in other research. Both formulas are shown below, where $y_i$ is the actual value, $y'_i$ is the predicted value, and $d$ is the number of test instances:

$$\mathrm{MAE} = \frac{\sum_{i=1}^{d} \lvert y_i - y'_i \rvert}{d} \tag{2}$$

$$\mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^{d} (y_i - y'_i)^2}{d}} \tag{3}$$

4. EVALUATION
The data sets that I will use are the Netflix data source, available at http://www.netflixprize.com/ after registering a team, and the IMDB data source, available at ftp://ftp.fu-berlin.de/pub/misc/movies/database/. After gathering the RMSE and MAE metrics, I will compare the MAE with the results presented by other research [5]. I can then determine whether altering the type of classifiers, increasing the number of significant attributes, and improving the correlation analysis increases the accuracy of the recommendations provided by the application.

5. MILESTONES
- Week of March 8th: Data Analysis and Database Design
- Week of March 15th: Develop Sampling and Create Sample Sets
- Week of March 22nd: Develop Locally Weighted Naïve Bayesian
- Week of March 29th: Project Check Point. Finish up Bayesian network.
- Week of April 5th: Develop K-Nearest Neighbor algorithm
- Week of April 12th: Develop Correlation Analysis
- Week of April 19th: Final Train, Test, and Gather Metrics
- Week of April 26th: Project Presentation and Final Project Report

6. PROGRESS ON WORK

6.1 Data Analysis
While looking through the data, I realized that the IMDB data does not provide a good way of determining individual users' ratings of the movies; instead, its ratings are averages weighted over multiple users' ratings. Since I was not able to determine individual user ratings, I proceeded with the Netflix data and merged in the genre data from IMDB as I had originally planned.

The Netflix movie title data must be loaded because the genre data from the IMDB data source is organized by movie title and associated genre. The Netflix movie title data format is movie id, movie year, and movie title. The IMDB genre data format is movie title and genre. Although both datasets contain a movie title, the IMDB and Netflix titles are defined slightly differently: the IMDB movie title includes the year of the movie, whereas Netflix stores the year as a separate attribute. Matching on movie title was therefore not straightforward and required some string manipulation.

Processing the Netflix movie rating training dataset was more straightforward and easier to implement. The format of the dataset is:

    Movie Id:
    Customer Id, Movie Rating, Movie Rating Date

For example:

    1:
    1488844,3,2005-09-06
    822109,5,2005-05-13

The first rating line states that customer id 1488844 rated movie id 1 as 3 out of 5 on 2005-09-06. The second rating line states that customer id 822109 rated movie id 1 as 5 out of 5 on 2005-05-13. Each training file contains any number of movies rated by any number of customers, and there are multiple rows of customer movie ratings per movie.

This data is loaded into a table that contains movie_id, customer_id, movie_rating, movie_rating_date, training, and is_prediction. The training attribute marks a record as a training record to be used while training the classifier. The is_prediction attribute marks the movie_rating as a prediction when it is true and as an actual value otherwise. This lets me keep track of which movie ratings were predicted by the first classifier and which were actually given by customers.

6.2 Data Pre-Processing
A total of 17,770 distinct Netflix movies were loaded into the database. The IMDB movie genre dataset contains 850,709 records, with multiple genres per distinct movie. While importing the IMDB genre dataset and matching on the movie title, I inserted the records that matched into the database and filtered out the movies that did not match.

Matching the two datasets by movie title, I was able to match 23,516 IMDB records to Netflix, which is only 3% of the IMDB records. This number is low because of the difference in the number of distinct movies between the Netflix dataset and the IMDB dataset. Looking at distinct movies instead, I matched 7,623, which is 43% of the Netflix movies. This is still lower than I had hoped, but it should be enough to see whether using genre as a significant attribute helps the movie rating predictions.

6.3 Data Processing
The data is queried from the database that contains all of the movie ratings. To create the training dataset, I use the training attribute to pull all the records that are marked as training.
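The path from a rating file to the training query can be sketched as follows. This is only an illustration under stated assumptions: the project itself is a Java application and its database is not named, so SQLite is used here as a stand-in; the table name `movie_rating`, the index name, and the function names are hypothetical; and the parser assumes that each movie header (`1:`) and each rating sits on its own line. The column names are the ones listed in section 6.1.

```python
import sqlite3

def parse_ratings(lines):
    """Yield (movie_id, customer_id, rating, date) from 'id:' headers and rating rows."""
    movie_id = None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.endswith(":"):
            movie_id = int(line[:-1])  # a new movie block starts here
        elif movie_id is None:
            raise ValueError("rating row found before any movie header")
        else:
            customer, rating, day = line.split(",")
            yield movie_id, int(customer), int(rating), day

def load_ratings(conn, rows, training=True):
    """Insert actual (non-predicted) ratings, creating the table and index if needed."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS movie_rating (
               movie_id INTEGER, customer_id INTEGER, movie_rating INTEGER,
               movie_rating_date TEXT, training INTEGER, is_prediction INTEGER)"""
    )
    # Index on the flag used to pull the training set.
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_rating_training ON movie_rating (training)"
    )
    conn.executemany(
        "INSERT INTO movie_rating VALUES (?, ?, ?, ?, ?, 0)",
        [(m, c, r, d, int(training)) for m, c, r, d in rows],
    )
    conn.commit()

def training_rows(conn):
    """Return every record marked as training, ordered for reproducibility."""
    cur = conn.execute(
        "SELECT movie_id, customer_id, movie_rating, movie_rating_date "
        "FROM movie_rating WHERE training = 1 "
        "ORDER BY movie_id, customer_id"
    )
    return cur.fetchall()

conn = sqlite3.connect(":memory:")
sample = ["1:", "1488844,3,2005-09-06", "822109,5,2005-05-13"]
load_ratings(conn, parse_ratings(sample))
rows = training_rows(conn)
```

Keeping the training flag in the table, instead of in separate files, means the same rows can later be reassigned to different folds without reloading the data.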
The movie rating table contains indexes to increase the speed at which the data can be read and loaded into the Instances class defined in the Weka API at http://weka.sourceforge.net/doc/. To work with the Weka API from my Java application, I needed to install the Weka application; weka.jar and weka-src.jar are then available in its main directory. Including these two jars on the class path lets the Java application interact with the Weka API and makes the source code of the Weka classes visible when debugging.

6.4 Training
To create the training dataset of Instances, I load only the movie rating records that are marked as training, as stated above. Currently the training set consists of 720,000 records, but as of Friday I was able to load 1,073,342 records into memory for the classifier to train with. I will continue to push the limit in the hope of loading the entire training set provided by Netflix, which includes 17,770 data files, of which I have processed 250. If I reach a point where the training records no longer fit in memory, I will store only the portion of the training data needed to create predictions for the records required to complete the rating matrix. My thought so far is to use the customer id that is provided and load all of that customer's records. I will then load all records associated with any of the movies rated by that customer. This should provide enough information to predict a rating for a movie that the customer has not yet seen and to help fill in the rating matrix for the second classifier. This is the plan for making sure that my current design can scale to large datasets.

When creating and training the classifier, you need to indicate on the Instances object which attribute is the class attribute, that is, the attribute to be predicted.
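The fallback loading strategy described in section 6.4 (all records of the given customer, plus all records for any movie that customer rated) can be sketched as a simple filter. The function name and the tuple layout are illustrative assumptions; in the real system the same selection would more likely be expressed as a database query than applied to rows already in memory.

```python
def select_training_subset(rows, customer_id):
    """Keep the customer's own ratings and all ratings of movies that customer rated.

    rows: iterable of (movie_id, customer_id, rating) tuples.
    """
    rows = list(rows)
    # Step 1: the movies rated by the given customer.
    movies_seen = {movie for movie, customer, _ in rows if customer == customer_id}
    # Step 2: every record for those movies, from any customer.
    return [row for row in rows if row[1] == customer_id or row[0] in movies_seen]

all_rows = [(1, 10, 3), (1, 11, 5), (2, 11, 4), (3, 12, 2)]
subset = select_training_subset(all_rows, customer_id=10)
```

In this example only movie 1 was rated by customer 10, so the subset keeps both ratings of movie 1 and drops the records for movies 2 and 3.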
Setting the class attribute is important; otherwise you will not be able to train the classifier or perform predictions.

6.5 Testing
I created the cross-validation testing model from the Weka API and provided it with the Naïve Bayesian Network and the movie rating dataset to perform the testing. I have been using 10-fold cross-validation, which breaks the training data into a group of training folds and a single fold for testing. This lets me run through the testing cycle multiple times, each time using a different fold as the testing dataset and a different group of folds as the training dataset. Each time I run this test I provide a different seed to a random number generator to select the specific fold used for the testing dataset. The results of running the 10-fold cross-validation multiple times on the 720,000 movie rating records loaded so far are presented in Table 1.

Table 1. Results from 10-Fold Cross Validation

| Run Number | MAE | RMSE |
|---|---|---|
| 1 | 0.2742 | 0.3761 |
| 2 | 0.2742 | 0.3761 |
| 3 | 0.2742 | 0.3761 |
| 4 | 0.2742 | 0.3761 |
| 5 | 0.2742 | 0.3761 |
| 6 | 0.2743 | 0.3762 |
| 7 | 0.2743 | 0.3761 |
| 8 | 0.2742 | 0.3761 |
| 9 | 0.2743 | 0.3761 |
| 10 | 0.2742 | 0.3761 |
| Average | 0.2742 | 0.3761 |

The final testing of the completed system will use the qualifying data provided by Netflix, which has already been loaded into the Movie Advisor database. The qualifying data follows a format similar to the training data, except that it is missing the rating:

    Movie Id:
    Customer Id, Movie Rating Date

For example:

    1:
    1046323,2005-12-19
    1080030,2005-12-23

This example of the qualifying data states that customer id 1046323 needs a rating of movie id 1 with the movie rating date of 2005-12-19.

6.6 Results
After training the Naïve Bayesian Network and classifying the testing records, the initial metrics are as shown in Table 2.

Table 2. Classification Results

| Metric | Value |
|---|---|
| Correctly Classified Instances | 60.1052 % |
| Incorrectly Classified Instances | 39.8948 % |
| Kappa statistic | 0.4351 |
| Mean absolute error | 0.2375 |
| Root mean squared error | 0.3319 |
| Relative absolute error | 80.8571 % |
| Root relative squared error | 86.5997 % |

6.7 Milestones Update
So far I have completed the data analysis and database design for the Movie Advisor. I have also completed the development of the data sampling and created sample sets. The development of the Naïve Bayesian Network as the first-level classifier has been completed. I have also trained and tested using this classifier and presented my results above (see Table 1 and Table 2).

I still have items left to complete, starting with switching over to the Locally Weighted Naïve Bayesian Network as the first classifier, which fills in the incomplete rating matrix. Then I will need to implement the K-nearest neighbor classifier and the correlation analysis. This will lead to the first pass of full testing of the application, gathering metrics on the movie ratings with the movie id, customer id, and rating date as significant attributes. I will then perform a second pass to gather metrics after adding genre as a significant attribute. The dates for completing these items still match the milestones presented in section 5.

6.8 Changes
No changes have been made since this document was created. As I continue with the work on this project, I might have to change direction on some of my ideas, especially around the training of the classifiers and keeping the training data in memory. For scalability, I have already described what I might do to improve on that in section 6.4 on training.

7. REFERENCES
[1] Frank, E., Hall, M., and Pfahringer, B. 2003. Locally weighted naïve Bayes. Proceedings of the Conference on Uncertainty in Artificial Intelligence. http://www.cs.waikato.ac.nz/pubs/wp/2003/uow-cs-wp2003-04.pdf

[2] Han, J. and Kamber, M. 2006. Data Mining: Concepts and Techniques, Second Edition. Morgan Kaufmann Publishers, San Francisco, CA.

[3] Hong, T. and Tsamis, D. 2006. Use of KNN for the Netflix Prize. Stanford University class project. http://www.stanford.edu/class/cs229/proj2006/HongTsamisKNNForNetflix.pdf

[4] Lemire, D. and Maclachlan, D. 2005. Slope One Predictors for Online Rating-Based Collaborative Filtering. In SIAM Data Mining (SDM'05), Newport Beach, California, April 21-23, 2005. Slope One Predictors For Online Rating-Based Collaborative Filtering.pdf

[5] Melville, P., Mooney, R. J., and Nagarajan, R. 2002. Content-boosted collaborative filtering for improved recommendations. Proceedings of the Eighteenth National Conference on Artificial Intelligence (Edmonton, Alberta, Canada, July 28 - August 01, 2002). R. Dechter, M. Kearns, and R. Sutton, Eds. American Association for Artificial Intelligence, Menlo Park, CA, 187-192. http://www.cs.utexas.edu/users/ml/papers/cbcf-aaai-02.pdf

8. HONOR CODE
Honor Code Pledge
The pledge is found in classrooms across campus. The goal of the pledge is to serve as a reminder to students, faculty, and staff that honor in the classroom is of the utmost importance to the University community. If there is a classroom in which the pledge plaque is no longer mounted on the wall, or if a classroom never received a plaque, please email our office at honor@colorado.edu and we will take care of it.

David Witherspoon