
Chapter 4: Probability


Chapter 4

Probability

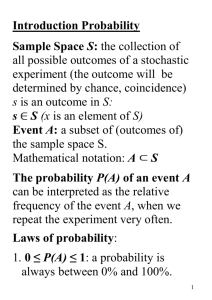

LEARNING OBJECTIVES

The main objective of Chapter 4 is to help you understand the basic principles of probability, specifically enabling you to

1. Comprehend the different ways of assigning probability.

2. Understand and apply marginal, union, joint, and conditional probabilities.

3. Select the appropriate law of probability to use in solving problems.

4. Solve problems using the laws of probability, including the law of addition, the law of multiplication, and the law of conditional probability.

5. Revise probabilities using Bayes' rule.

CHAPTER TEACHING STRATEGY

Students can be motivated to study probability by realizing that the field of probability has some stand-alone applications in their lives, in such applied areas as human resource analysis, actuarial science, and gaming. In addition, students should understand that much of the rest of the course is based on probability, even though they will not be directly applying many of these formulas in other chapters.

This chapter can be frustrating for the learner because probability problems can be approached by using several different techniques. Whereas in many chapters of this text students approach problems by using one standard technique, in Chapter 4 different students will often use different approaches to the same problem. The text emphasizes this point by presenting several different ways to solve probability problems. The probability rules and laws presented in the chapter can virtually always be used to solve probability problems. However, it is sometimes easier to construct a probability matrix or a tree diagram, or to use the sample space, to solve the problem. If students realize that they have an array of tools and techniques at hand, they will be less overwhelmed when approaching a probability problem. An attempt has been made to differentiate the several types of probabilities so that students can sort out the various types of problems.

In teaching students how to construct a probability matrix, emphasize that it is usually best to place only one variable along each of the two dimensions of the matrix. (That is, place Mastercard with yes/no on one axis and Visa with yes/no on the other, instead of trying to place Mastercard and Visa along the same axis.)

This particular chapter is very amenable to the use of visual aids. Students enjoy rolling dice, tossing coins, and drawing cards as part of the class experience.

Of all the chapters in the book, this is the one in which it is most imperative that students work a lot of problems. Probability problems are so varied and individualized that a significant portion of the learning comes in the doing. Experience is an important factor in working probability problems.

Section 4.8 on Bayes’ theorem can be skipped in a one-semester course without losing any continuity. This section is a prerequisite to the Chapter 18 presentation of “revising probabilities in light of sample information” (Section 18.4).

CHAPTER OUTLINE

4.1 Introduction to Probability

4.2 Methods of Assigning Probabilities
    Classical Method of Assigning Probabilities
    Relative Frequency of Occurrence
    Subjective Probability

4.3 Structure of Probability
    Experiment
    Event
    Elementary Events
    Sample Space
    Unions and Intersections
    Mutually Exclusive Events
    Independent Events
    Collectively Exhaustive Events
    Complementary Events
    Counting the Possibilities
        The mn Counting Rule
        Sampling from a Population with Replacement
        Combinations: Sampling from a Population Without Replacement

4.4 Marginal, Union, Joint, and Conditional Probabilities

4.5 Addition Laws
    Probability Matrices
    Complement of a Union
    Special Law of Addition

4.6 Multiplication Laws
    General Law of Multiplication
    Special Law of Multiplication

4.7 Conditional Probability
    Independent Events

4.8 Revision of Probabilities: Bayes' Rule

KEY TERMS

A Priori

Bayes' Rule

Classical Method of Assigning Probabilities

Collectively Exhaustive Events

Combinations

Complement of a Union

Complementary Events

Conditional Probability

Elementary Events

Event

Experiment

Independent Events

Intersection

Joint Probability

Marginal Probability

mn Counting Rule

Mutually Exclusive Events

Probability Matrix

Relative Frequency of Occurrence

Sample Space

Set Notation

Subjective Probability

Union

Union Probability

SOLUTIONS TO PROBLEMS IN CHAPTER 4

4.1

Enumeration of the six parts: D1, D2, D3, A4, A5, A6

D = Defective part

A = Acceptable part

Sample Space:

D1 D2,  D1 D3,  D1 A4,  D1 A5,  D1 A6,
D2 D3,  D2 A4,  D2 A5,  D2 A6,
D3 A4,  D3 A5,  D3 A6,
A4 A5,  A4 A6,  A5 A6

There are 15 members of the sample space, and 9 of them (a D paired with an A) contain exactly one defective part.

The probability of selecting exactly one defect out of two is:

9/15 = .60
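A minimal Python sketch can confirm this enumeration by generating the same unordered pairs with `itertools.combinations` and counting the pairs that contain exactly one defective part. The part labels come from the problem; the helper name `defect_count` is illustrative only.

```python
from fractions import Fraction
from itertools import combinations

# Six parts from problem 4.1: three defective (D) and three acceptable (A).
parts = ["D1", "D2", "D3", "A4", "A5", "A6"]

# Two parts are drawn without replacement, so order does not matter.
sample_space = list(combinations(parts, 2))

def defect_count(outcome):
    """Number of defective parts (labels starting with 'D') in an outcome."""
    return sum(1 for part in outcome if part.startswith("D"))

favorable = [o for o in sample_space if defect_count(o) == 1]
prob_one_defect = Fraction(len(favorable), len(sample_space))

print(len(sample_space), len(favorable), prob_one_defect)  # 15 9 3/5
```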

4.2

X = {1, 3, 5, 7, 8, 9}, Y = {2, 4, 7, 9}, and Z = {1, 2, 3, 4, 7}

a) X ∪ Z = {1, 2, 3, 4, 5, 7, 8, 9}

b) X ∩ Y = {7, 9}

c) X ∩ Z = {1, 3, 7}

d) X ∪ Y ∪ Z = {1, 2, 3, 4, 5, 7, 8, 9}

e) X ∩ Y ∩ Z = {7}

f) (X ∪ Y) ∩ Z = {1, 2, 3, 4, 5, 7, 8, 9} ∩ {1, 2, 3, 4, 7} = {1, 2, 3, 4, 7}

g) (Y ∩ Z) ∪ (X ∩ Y) = {2, 4, 7} ∪ {7, 9} = {2, 4, 7, 9}

h) X or Y = X ∪ Y = {1, 2, 3, 4, 5, 7, 8, 9}

i) Y and Z = Y ∩ Z = {2, 4, 7}
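Python's built-in `set` type supports these operations directly (`|` for union, `&` for intersection), so the answers above can be checked in a few lines. This is only a verification sketch; the dictionary keys simply mirror the part letters.

```python
X = {1, 3, 5, 7, 8, 9}
Y = {2, 4, 7, 9}
Z = {1, 2, 3, 4, 7}

# For Python sets, | is union and & is intersection.
answers = {
    "a": X | Z,
    "b": X & Y,
    "c": X & Z,
    "d": X | Y | Z,
    "e": X & Y & Z,
    "f": (X | Y) & Z,
    "g": (Y & Z) | (X & Y),
    "h": X | Y,
    "i": Y & Z,
}

for part, result in answers.items():
    print(part, sorted(result))
```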

4.3

If A = {2, 6, 12, 24} and the population is the positive even numbers through 30,

A’ = {4, 8, 10, 14, 16, 18, 20, 22, 26, 28, 30}
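Because the complement is taken relative to the stated population, it amounts to a set difference. A short Python check, assuming the population is exactly the even integers 2 through 30:

```python
# Population: positive even numbers through 30 (2, 4, ..., 30).
population = set(range(2, 31, 2))
A = {2, 6, 12, 24}

# The complement of A is everything in the population that is not in A.
A_complement = population - A
print(sorted(A_complement))
```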

4.4

6(4)(3)(3) = 216

4.5

Enumeration of the six parts: D1, D2, A1, A2, A3, A4

D = Defective part

A = Acceptable part

Sample Space:

D1 D2 A1,  D1 D2 A2,  D1 D2 A3,  D1 D2 A4,
D1 A1 A2,  D1 A1 A3,  D1 A1 A4,  D1 A2 A3,  D1 A2 A4,  D1 A3 A4,
D2 A1 A2,  D2 A1 A3,  D2 A1 A4,  D2 A2 A3,  D2 A2 A4,  D2 A3 A4,
A1 A2 A3,  A1 A2 A4,  A1 A3 A4,  A2 A3 A4

Combinations are used to count the sample space because sampling is done without replacement.

6C3 = 6!/(3!3!) = 20

There are 20 members of the sample space, and 12 of them contain exactly one defective part. The probability that exactly one of the three parts is defective is:

12/20 = 3/5 = .60
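The enumeration can be cross-checked in Python. Besides listing the 20 outcomes, the number of favorable outcomes also follows from choosing 1 of the 2 defective parts and 2 of the 4 acceptable parts, 2C1 · 4C2 = 2 · 6 = 12; that product-of-combinations shortcut is an extra check, not the method shown above. `math.comb` requires Python 3.8 or later.

```python
from fractions import Fraction
from itertools import combinations
from math import comb

# Six parts from problem 4.5: two defective (D) and four acceptable (A).
parts = ["D1", "D2", "A1", "A2", "A3", "A4"]

# Three parts are drawn without replacement, so order does not matter.
sample_space = list(combinations(parts, 3))
exactly_one = [o for o in sample_space
               if sum(p.startswith("D") for p in o) == 1]

# The size of the sample space matches 6C3.
assert len(sample_space) == comb(6, 3) == 20
# Choose 1 of the 2 defectives and 2 of the 4 acceptables.
assert len(exactly_one) == comb(2, 1) * comb(4, 2) == 12

print(Fraction(len(exactly_one), len(sample_space)))  # 3/5
```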

4.6

10^7 = 10,000,000 different numbers

4.7

20C6 = 20!/(6!14!) = 38,760

It is assumed here that 6 different employees are to be selected (sampling without replacement).
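Problems 4.4, 4.6, and 4.7 use three counting tools: the mn counting rule, sampling with replacement (N^n), and combinations (NCn). A Python sketch of the same arithmetic follows; the stage sizes in 4.4 and the reading of 4.6 as seven positions with ten choices each are taken from the arithmetic above, since the problem statements are not reproduced here. `math.prod` and `math.comb` require Python 3.8 or later.

```python
from math import comb, prod

# 4.4: mn counting rule, multiply the number of options at each stage.
mn_rule = prod([6, 4, 3, 3])

# 4.6: with replacement, 10 choices at each of 7 positions.
with_replacement = 10 ** 7

# 4.7: without replacement and order irrelevant, so 20C6 = 20!/(6!14!).
employees = comb(20, 6)

print(mn_rule, with_replacement, employees)  # 216 10000000 38760
```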

4.8

P(A) = .10, P(B) = .12, P(C) = .21

P(A ∩ C) = .05, P(B ∩ C) = .03

a) P(A ∪ C) = P(A) + P(C) - P(A ∩ C) = .10 + .21 - .05 = .26

b) P(B ∪ C) = P(B) + P(C) - P(B ∩ C) = .12 + .21 - .03 = .30

c) If A and B are mutually exclusive, P(A ∪ B) = P(A) + P(B) = .10 + .12 = .22
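The general and special laws of addition differ only in whether the intersection is subtracted, so one small function covers both. A Python sketch, with the hypothetical helper name `p_union`:

```python
def p_union(p_a, p_b, p_a_and_b=0.0):
    """General law of addition: P(A ∪ B) = P(A) + P(B) - P(A ∩ B).

    Leaving p_a_and_b at 0 gives the special law of addition,
    which applies only when A and B are mutually exclusive.
    """
    return p_a + p_b - p_a_and_b

part_a = p_union(0.10, 0.21, 0.05)  # P(A ∪ C)
part_b = p_union(0.12, 0.21, 0.03)  # P(B ∪ C)
part_c = p_union(0.10, 0.12)        # P(A ∪ B), A and B mutually exclusive

print(f"{part_a:.2f} {part_b:.2f} {part_c:.2f}")  # 0.26 0.30 0.22
```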

4.9

        D     E     F
  A     5     8    12     25
  B    10     6     4     20
  C     8     2     5     15
       23    16    21     60

a) P(A ∪ D) = P(A) + P(D) - P(A ∩ D) = 25/60 + 23/60 - 5/60 = 43/60 = .7167

b) P(E ∪ B) = P(E) + P(B) - P(E ∩ B) = 16/60 + 20/60 - 6/60 = 30/60 = .5000

c) P(D ∪ E) = P(D) + P(E) = 23/60 + 16/60 = 39/60 = .6500

d) P(C ∪ F) = P(C) + P(F) - P(C ∩ F) = 15/60 + 21/60 - 5/60 = 31/60 = .5167
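The same probability matrix can be encoded in Python with exact fractions, which makes it easy to check each union against the table. The helper names (`p_row`, `p_col`, `p_joint`, `p_row_or_col`) are illustrative, not from the text.

```python
from fractions import Fraction

# Joint frequencies from problem 4.9: rows A, B, C and columns D, E, F.
counts = {
    "A": {"D": 5, "E": 8, "F": 12},
    "B": {"D": 10, "E": 6, "F": 4},
    "C": {"D": 8, "E": 2, "F": 5},
}
total = sum(sum(row.values()) for row in counts.values())  # 60

def p_row(r):
    """Marginal probability of a row event."""
    return Fraction(sum(counts[r].values()), total)

def p_col(c):
    """Marginal probability of a column event."""
    return Fraction(sum(row[c] for row in counts.values()), total)

def p_joint(r, c):
    """Joint probability read from a single cell."""
    return Fraction(counts[r][c], total)

def p_row_or_col(r, c):
    """General law of addition: the shared cell is subtracted once."""
    return p_row(r) + p_col(c) - p_joint(r, c)

print(p_row_or_col("A", "D"))   # a) 43/60
print(p_row_or_col("B", "E"))   # b) 1/2
print(p_col("D") + p_col("E"))  # c) 13/20 (columns are mutually exclusive)
print(p_row_or_col("C", "F"))   # d) 31/60
```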

4.10

          E     F
  A     .10   .03    .13
  B     .04   .12    .16
  C     .27   .06    .33
  D     .31   .07    .38
        .72   .28   1.00

a) P(A ∪ F) = P(A) + P(F) - P(A ∩ F) = .13 + .28 - .03 = .38

b) P(E ∪ B) = P(E) + P(B) - P(E ∩ B) = .72 + .16 - .04 = .84

c) P(B ∪ C) = P(B) + P(C) = .16 + .33 = .49 (B and C are mutually exclusive)

d) P(E ∪ F) = P(E) + P(F) = .72 + .28 = 1.000 (E and F are mutually exclusive)

4.11

A = event: flown in an airplane at least once

T = event: ridden in a train at least once

P(A) = .47

P(T) = .28

P(ridden either a train or an airplane) = P(A ∪ T) = P(A) + P(T) - P(A ∩ T) = .47 + .28 - P(A ∩ T)

This problem cannot be solved without knowing the probability of the intersection of A and T, that is, the proportion who have ridden both.

4.12

P(L) = .75, P(M) = .78, P(M ∩ L) = .61

a) P(M ∪ L) = P(M) + P(L) - P(M ∩ L) = .78 + .75 - .61 = .92

b) P(M ∪ L but not both) = P(M ∪ L) - P(M ∩ L) = .92 - .61 = .31

c) P(NM ∩ NL) = 1 - P(M ∪ L) = 1 - .92 = .08

4.13

Let C = have cable TV

Let T = have 2 or more TV sets

P(C) = .67, P(T) = .74, P(C ∩ T) = .55

a) P(C ∪ T) = P(C) + P(T) - P(C ∩ T) = .67 + .74 - .55 = .86

b) P(C ∪ T but not both) = P(C ∪ T) - P(C ∩ T) = .86 - .55 = .31

c) P(NC ∩ NT) = 1 - P(C ∪ T) = 1 - .86 = .14

d) The special law of addition does not apply because P(C ∩ T) is not .0000. Possession of cable TV and possession of 2 or more TV sets are not mutually exclusive.

4.14

Let T = review transcript

F = consider faculty references

P(T) = .54, P(F) = .44, P(T ∩ F) = .35

a) P(F ∪ T) = P(F) + P(T) - P(F ∩ T) = .44 + .54 - .35 = .63

b) P(F ∪ T) - P(F ∩ T) = .63 - .35 = .28

c) 1 - P(F ∪ T) = 1 - .63 = .37

d)
              F: Yes   F: No
  T: Yes        .35      .19     .54
  T: No         .09      .37     .46
                .44      .56    1.00

4.15

        C     D     E     F
  A     5    11    16     8     40
  B     2     3     5     7     17
        7    14    21    15     57

a) P(A ∩ E) = 16/57 = .2807

b) P(D ∩ B) = 3/57 = .0526

c) P(D ∩ E) = .0000

d) P(A ∩ B) = .0000

4.16

        D     E     F
  A   .12   .13   .08    .33
  B   .18   .09   .04    .31
  C   .06   .24   .06    .36
      .36   .46   .18   1.00

a) P(E ∩ B) = .09

b) P(C ∩ F) = .06

c) P(E ∩ D) = .00

4.17

Let D = defective part

a) (without replacement)

P(D1 ∩ D2) = P(D1) · P(D2 | D1) = (6/50)(5/49) = 30/2450 = .0122

b) (with replacement)

P(D1 ∩ D2) = P(D1) · P(D2) = (6/50)(6/50) = 36/2500 = .0144

4.18

Let U = Urban

I = care for ill relatives

P(U) = .78, P(I) = .15, P(I | U) = .11

a) P(U ∩ I) = P(U) · P(I | U) = (.78)(.11) = .0858

b) P(U ∩ NI) = P(U) · P(NI | U), but P(I | U) = .11

So, P(NI | U) = 1 - .11 = .89 and P(U ∩ NI) = P(U) · P(NI | U) = (.78)(.89) = .6942

c)
              U: Yes   U: No
  I: Yes      .0858    .0642     .15
  I: No       .6942    .1558     .85
              .78      .22      1.00

The answer to a) is found in the Yes-Yes cell. To compute this cell, take 11% (.11) of the .78 proportion of people in urban areas: (.11)(.78) = .0858, which belongs in the Yes-Yes cell. The answer to b) is found in the cell for Yes on U and No on I. It can be determined by taking the marginal, .78, less the answer to a), .0858.

d) P(NU ∩ I) is found in the No column for U and the Yes row for I (1st row, 2nd column). Take the marginal, .15, minus the Yes-Yes cell, .0858, to get .0642.

4.19

Let S = stockholder

Let C = college

P(S) = .43, P(C) = .37, P(C | S) = .75

a) P(NS) = 1 - .43 = .57

b) P(S ∩ C) = P(S) · P(C | S) = (.43)(.75) = .3225

c) P(S ∪ C) = P(S) + P(C) - P(S ∩ C) = .43 + .37 - .3225 = .4775

d) P(NS ∩ NC) = 1 - P(S ∪ C) = 1 - .4775 = .5225

e) P(NS ∪ NC) = P(NS) + P(NC) - P(NS ∩ NC) = .57 + .63 - .5225 = .6775

f) P(C ∩ NS) = P(C) - P(C ∩ S) = .37 - .3225 = .0475

4.20

Let F = fax machine

Let P = personal computer

Given: P(F) = .10, P(P) = .52, P(P | F) = .91

a) P(F ∩ P) = P(F) · P(P | F) = (.10)(.91) = .091

b) P(F ∪ P) = P(F) + P(P) - P(F ∩ P) = .10 + .52 - .091 = .529

c) P(F ∩ NP) = P(F) · P(NP | F)

Since P(P | F) = .91, P(NP | F) = 1 - P(P | F) = 1 - .91 = .09

P(F ∩ NP) = (.10)(.09) = .009

d) P(NF ∩ NP) = 1 - P(F ∪ P) = 1 - .529 = .471

e) P(NF ∩ P) = P(P) - P(F ∩ P) = .52 - .091 = .429

           P      NP
  F      .091   .009     .10
  NF     .429   .471     .90
         .520   .480    1.00

4.21

Let S = safety

Let A = age

P(S) = .30, P(A) = .39, P(A | S) = .87

a) P(S ∩ NA) = P(S) · P(NA | S)

but P(NA | S) = 1 - P(A | S) = 1 - .87 = .13

P(S ∩ NA) = (.30)(.13) = .039

b) P(NS ∩ NA) = 1 - P(S ∪ A) = 1 - [P(S) + P(A) - P(S ∩ A)]

but P(S ∩ A) = P(S) · P(A | S) = (.30)(.87) = .261

P(NS ∩ NA) = 1 - (.30 + .39 - .261) = .571

c) P(NS ∩ A) = P(NS) - P(NS ∩ NA)

but P(NS) = 1 - P(S) = 1 - .30 = .70

P(NS ∩ A) = .70 - .571 = .129

4.22

Let C = ceiling fans

Let O = outdoor grill

P(C) = .60, P(O) = .29, P(C ∩ O) = .13

a) P(C ∪ O) = P(C) + P(O) - P(C ∩ O) = .60 + .29 - .13 = .76

b) P(NC ∩ NO) = 1 - P(C ∪ O) = 1 - .76 = .24

c) P(NC ∩ O) = P(O) - P(C ∩ O) = .29 - .13 = .16

d) P(C ∩ NO) = P(C) - P(C ∩ O) = .60 - .13 = .47

4.23

        E     F     G
  A    15    12     8     35
  B    11    17    19     47
  C    21    32    27     80
  D    18    13    12     43
       65    74    66    205

a) P(G | A) = 8/35 = .2286

b) P(B | F) = 17/74 = .2297

c) P(C | E) = 21/65 = .3231

d) P(E | G) = .0000

4.24

        C     D
  A   .36   .44    .80
  B   .11   .09    .20
      .47   .53   1.00

a) P(C | A) = .36/.80 = .4500

b) P(B | D) = .09/.53 = .1698

c) P(A | B) = .0000

4.25

                   Calculator
                   Yes    No
  Computer  Yes     46     3     49
            No      11    15     26
                    57    18     75

Select a category from each variable and test whether

P(V1 | V2) = P(V1).

For example, is P(Yes Computer | Yes Calculator) = P(Yes Computer)?

46/57 vs. 49/75

.8070 ≠ .6533

The variable Computer is not independent of the variable Calculator.
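The independence test can be scripted directly from the counts. A Python sketch, using exact fractions so the equality comparison is not disturbed by rounding; the dictionary layout keyed by (Computer, Calculator) is an assumption of this sketch.

```python
from fractions import Fraction

# Problem 4.25 counts: keys are (Computer, Calculator).
table = {
    ("Yes", "Yes"): 46, ("Yes", "No"): 3,
    ("No", "Yes"): 11,  ("No", "No"): 15,
}
n = sum(table.values())  # 75

# Marginal: P(Yes Computer) = row total / grand total.
p_computer_yes = Fraction(table[("Yes", "Yes")] + table[("Yes", "No")], n)

# Conditional: restrict attention to the Yes Calculator column.
calc_yes_total = table[("Yes", "Yes")] + table[("No", "Yes")]
p_computer_yes_given_calc_yes = Fraction(table[("Yes", "Yes")], calc_yes_total)

print(f"{float(p_computer_yes_given_calc_yes):.4f}")    # 0.8070
print(f"{float(p_computer_yes):.4f}")                   # 0.6533
print(p_computer_yes_given_calc_yes == p_computer_yes)  # False
```

Showing independence would require every category pair to pass this test; showing dependence needs only one failing pair, as here.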


4.26

Let C = construction

Let S = South Atlantic

83,384 total failures

10,867 failures in construction

8,010 failures in South Atlantic

1,258 failures in construction and South Atlantic

a) P(S) = 8,010/83,384 = .09606

b) P(C ∪ S) = P(C) + P(S) - P(C ∩ S) =

10,867/83,384 + 8,010/83,384 - 1,258/83,384 = 17,619/83,384 = .2113

c) P(C | S) = P(C ∩ S)/P(S) = (1,258/83,384)/(8,010/83,384) = 1,258/8,010 = .15705

d) P(S | C) = P(C ∩ S)/P(C) = (1,258/83,384)/(10,867/83,384) = 1,258/10,867 = .11576

e) P(NS | NC) = P(NS ∩ NC)/P(NC) = [1 - P(C ∪ S)]/P(NC)

but NC = 83,384 - 10,867 = 72,517

and P(NC) = 72,517/83,384 = .869675

Therefore, P(NS | NC) = (1 - .2113)/(.869675) = .9069

f) P(NS | C) = P(NS ∩ C)/P(C) = [P(C) - P(C ∩ S)]/P(C)

but P(C) = 10,867/83,384 = .1303

P(C ∩ S) = 1,258/83,384 = .0151

Therefore, using the unrounded counts, P(NS | C) = (10,867 - 1,258)/10,867 = 9,609/10,867 = .8842
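Because every probability in this problem shares the denominator 83,384, each conditional probability reduces to a ratio of counts. A Python sketch of parts a) through f), with variable names chosen for illustration:

```python
total = 83_384
construction = 10_867
south_atlantic = 8_010
both = 1_258

p_c = construction / total
p_s = south_atlantic / total
p_c_and_s = both / total
p_c_or_s = p_c + p_s - p_c_and_s  # general law of addition

# Conditional probability: P(X | Y) = P(X ∩ Y) / P(Y).
p_c_given_s = p_c_and_s / p_s
p_s_given_c = p_c_and_s / p_c
p_ns_given_nc = (1 - p_c_or_s) / (1 - p_c)  # complement of the union over P(NC)
p_ns_given_c = (p_c - p_c_and_s) / p_c

results = [
    ("a) P(S)", p_s), ("b) P(C ∪ S)", p_c_or_s),
    ("c) P(C | S)", p_c_given_s), ("d) P(S | C)", p_s_given_c),
    ("e) P(NS | NC)", p_ns_given_nc), ("f) P(NS | C)", p_ns_given_c),
]
for label, value in results:
    print(f"{label} = {value:.4f}")
```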

4.27

Let E = Economy

Let Q = Qualified

P(E) = .46, P(Q) = .37, P(E ∩ Q) = .15

a) P(E | Q) = P(E ∩ Q)/P(Q) = .15/.37 = .4054

b) P(Q | E) = P(E ∩ Q)/P(E) = .15/.46 = .3261

c) P(Q | NE) = P(Q ∩ NE)/P(NE)

but P(Q ∩ NE) = P(Q) - P(Q ∩ E) = .37 - .15 = .22

P(NE) = 1 - P(E) = 1 - .46 = .54

P(Q | NE) = .22/.54 = .4074

d) P(NE ∩ NQ) = 1 - P(E ∪ Q) = 1 - [P(E) + P(Q) - P(E ∩ Q)]

= 1 - [.46 + .37 - .15] = 1 - (.68) = .32

4.28

Let A = airline tickets

Let T = transacting loans

P(A) = .47, P(T | A) = .81

a) P(A ∩ T) = P(A) · P(T | A) = (.47)(.81) = .3807

b) P(NT | A) = 1 - P(T | A) = 1 - .81 = .19

c) P(NT ∩ A) = P(A) - P(A ∩ T) = .47 - .3807 = .0893

4.29

Let H = hardware

Let S = software

P(H) = .37, P(S) = .54, P(S | H) = .97

a) P(NS | H) = 1 - P(S | H) = 1 - .97 = .03

b) P(S | NH) = P(S ∩ NH)/P(NH)

but P(H ∩ S) = P(H) · P(S | H) = (.37)(.97) = .3589

so P(NH ∩ S) = P(S) - P(H ∩ S) = .54 - .3589 = .1811

P(NH) = 1 - P(H) = 1 - .37 = .63

P(S | NH) = (.1811)/(.63) = .2875

c) P(NH | S) = P(NH ∩ S)/P(S) = .1811/.54 = .3354

d) P(NH | NS) = P(NH ∩ NS)/P(NS)

but P(NH ∩ NS) = P(NH) - P(NH ∩ S) = .63 - .1811 = .4489

and P(NS) = 1 - P(S) = 1 - .54 = .46

P(NH | NS) = .4489/.46 = .9759

4.30

Let A = product produced on Machine A

B = product produced on Machine B

C = product produced on Machine C

D = defective product

P(A) = .10, P(B) = .40, P(C) = .50

P(D | A) = .05, P(D | B) = .12, P(D | C) = .08

          Prior    Conditional   Joint        Revised
  Event   P(Ei)    P(D | Ei)     P(D ∩ Ei)    P(Ei | D)
  A       .10      .05           .005         .005/.093 = .0538
  B       .40      .12           .048         .048/.093 = .5161
  C       .50      .08           .040         .040/.093 = .4301
                                 P(D) = .093

Revised: P(A | D) = .005/.093 = .0538

P(B | D) = .048/.093 = .5161

P(C | D) = .040/.093 = .4301

4.31

Let A = Alex fills the order

B = Alicia fills the order

C = Juan fills the order

I = order filled incorrectly

K = order filled correctly

P(A) = .30, P(B) = .45, P(C) = .25

P(I | A) = .20, P(I | B) = .12, P(I | C) = .05

P(K | A) = .80, P(K | B) = .88, P(K | C) = .95

a) P(B) = .45

b) P(K | C) = 1 - P(I | C) = 1 - .05 = .95

c)

          Prior    Conditional   Joint        Revised
  Event   P(Ei)    P(I | Ei)     P(I ∩ Ei)    P(Ei | I)
  A       .30      .20           .0600        .0600/.1265 = .4743
  B       .45      .12           .0540        .0540/.1265 = .4269
  C       .25      .05           .0125        .0125/.1265 = .0988
                                 P(I) = .1265

Revised: P(A | I) = .0600/.1265 = .4743

P(B | I) = .0540/.1265 = .4269

P(C | I) = .0125/.1265 = .0988

d)

          Prior    Conditional   Joint        Revised
  Event   P(Ei)    P(K | Ei)     P(K ∩ Ei)    P(Ei | K)
  A       .30      .80           .2400        .2400/.8735 = .2748
  B       .45      .88           .3960        .3960/.8735 = .4533
  C       .25      .95           .2375        .2375/.8735 = .2719
                                 P(K) = .8735

4.32

Let T = lawn treated by Tri-state

G = lawn treated by Green Chem

V = very healthy lawn

N = not very healthy lawn

P(T) = .72, P(G) = .28

P(V | T) = .30, P(V | G) = .20

          Prior    Conditional   Joint        Revised
  Event   P(Ei)    P(V | Ei)     P(V ∩ Ei)    P(Ei | V)
  T       .72      .30           .216         .216/.272 = .7941
  G       .28      .20           .056         .056/.272 = .2059
                                 P(V) = .272

Revised: P(T | V) = .216/.272 = .7941

P(G | V) = .056/.272 = .2059

4.33

Let T = training

Let S = small

P(T) = .65

P(S | T) = .18, P(NS | T) = .82

P(S | NT) = .75, P(NS | NT) = .25

          Prior    Conditional   Joint         Revised
  Event   P(Ei)    P(NS | Ei)    P(NS ∩ Ei)    P(Ei | NS)
  T       .65      .82           .5330         .5330/.6205 = .8590
  NT      .35      .25           .0875         .0875/.6205 = .1410
                                 P(NS) = .6205
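Every Bayes table in Problems 4.30 through 4.33 follows the same three steps: multiply each prior by its conditional probability to get the joint probabilities, sum the joints to get the marginal probability of the observed event, and divide each joint by that sum to get the revised probabilities. A minimal Python sketch of those steps, with the hypothetical helper name `bayes_table`, reproduces the 4.33 table:

```python
def bayes_table(priors, likelihoods):
    """Return (joints, marginal, revised) for a Bayes' rule table.

    priors      -- P(Ei) for each mutually exclusive, exhaustive event
    likelihoods -- P(evidence | Ei) in the same order
    """
    joints = [p * l for p, l in zip(priors, likelihoods)]  # P(evidence ∩ Ei)
    marginal = sum(joints)                                 # P(evidence)
    revised = [j / marginal for j in joints]               # P(Ei | evidence)
    return joints, marginal, revised

# Problem 4.33: events T and NT, evidence NS.
joints, p_ns, revised = bayes_table([0.65, 0.35], [0.82, 0.25])
print(f"P(NS) = {p_ns:.4f}")
print([f"{r:.4f}" for r in revised])  # ['0.8590', '0.1410']
```

Passing the 4.30 priors (.10, .40, .50) and conditionals (.05, .12, .08) to the same function gives P(D) = .093 and the revised values in that table.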

4.34

                  Variable 2
                   D     E
  Variable 1  A   10    20     30
              B   15     5     20
              C   30    15     45
                  55    40     95

a) P(E) = 40/95 = .42105

b) P(B ∪ D) = P(B) + P(D) - P(B ∩ D) = 20/95 + 55/95 - 15/95 = 60/95 = .63158

c) P(A ∩ E) = 20/95 = .21053

d) P(B | E) = 5/40 = .1250

e) P(A ∪ B) = P(A) + P(B) = 30/95 + 20/95 = 50/95 = .52632

f) P(B ∩ C) = .0000 (mutually exclusive)

g) P(D | C) = 30/45 = .66667

h) P(A | B) = P(A ∩ B)/P(B) = .0000/(20/95) = .0000 (mutually exclusive)

i) Is P(A) = P(A | D)?

30/95 vs. 10/55

.31579 ≠ .18182

No, Variables 1 and 2 are not independent.

4.35

        D     E     F     G
  A     3     9     7    12     31
  B     8     4     6     4     22
  C    10     5     3     7     25
       21    18    16    23     78

a) P(F ∩ A) = 7/78 = .08974

b) P(A | B) = P(A ∩ B)/P(B) = .0000/(22/78) = .0000

c) P(B) = 22/78 = .28205

d) P(E ∩ F) = .0000 (mutually exclusive)

e) P(D | B) = 8/22 = .36364

f) P(B | D) = 8/21 = .38095

g) P(D ∪ C) = 21/78 + 25/78 - 10/78 = 36/78 = .4615

h) P(F) = 16/78 = .20513

4.36

                        Age (years)
  Gender     <35   35-44   45-54   55-64   >65
  Male       .11    .20     .19     .12    .16     .78
  Female     .07    .08     .04     .02    .01     .22
             .18    .28     .23     .14    .17    1.00

a) P(35-44) = .28

b) P(Woman ∩ 45-54) = .04

c) P(Man ∪ 35-44) = P(Man) + P(35-44) - P(Man ∩ 35-44) = .78 + .28 - .20 = .86

d) P(<35 ∪ 55-64) = P(<35) + P(55-64) = .18 + .14 = .32

e) P(Woman | 45-54) = P(Woman ∩ 45-54)/P(45-54) = .04/.23 = .1739

f) P(NW ∩ N55-64) = .11 + .20 + .19 + .16 = .66

4.37

Let T = thoroughness

Let K = knowledge

P(T) = .78, P(K) = .40, P(T ∩ K) = .27

a) P(T ∪ K) = P(T) + P(K) - P(T ∩ K) = .78 + .40 - .27 = .91

b) P(NT ∩ NK) = 1 - P(T ∪ K) = 1 - .91 = .09

c) P(K | T) = P(K ∩ T)/P(T) = .27/.78 = .3462

d) P(NT ∩ K) = P(NT) - P(NT ∩ NK)

but P(NT) = 1 - P(T) = .22

P(NT ∩ K) = .22 - .09 = .13

4.38

Let R = retirement

Let L = life insurance

P(R) = .42, P(L) = .61, P(R ∩ L) = .33

a) P(R | L) = P(R ∩ L)/P(L) = .33/.61 = .5410

b) P(L | R) = P(R ∩ L)/P(R) = .33/.42 = .7857

c) P(L ∪ R) = P(L) + P(R) - P(L ∩ R) = .61 + .42 - .33 = .70

d) P(R ∩ NL) = P(R) - P(R ∩ L) = .42 - .33 = .09

e) P(NL | R) = P(NL ∩ R)/P(R) = .09/.42 = .2143

4.39

P(T) = .16, P(T | W) = .20, P(T | NE) = .17

P(W) = .21, P(NE) = .20

a) P(W ∩ T) = P(W) · P(T | W) = (.21)(.20) = .042

b) P(NE ∩ T) = P(NE) · P(T | NE) = (.20)(.17) = .034

c) P(W | T) = P(W ∩ T)/P(T) = (.042)/(.16) = .2625

d) P(NE | NT) = P(NE ∩ NT)/P(NT) = [P(NE) · P(NT | NE)]/P(NT)

but P(NT | NE) = 1 - P(T | NE) = 1 - .17 = .83 and

P(NT) = 1 - P(T) = 1 - .16 = .84

Therefore, P(NE | NT) = [P(NE) · P(NT | NE)]/P(NT) = [(.20)(.83)]/(.84) = .1976

e) P(not W ∩ not NE | T) = P(not W ∩ not NE ∩ T)/P(T)

but P(not W ∩ not NE ∩ T) = .16 - P(W ∩ T) - P(NE ∩ T) = .16 - .042 - .034 = .084

P(not W ∩ not NE | T) = (.084)/(.16) = .525

4.40

Let M = Mastercard

A = American Express

V = Visa

P(M) = .30, P(A) = .20, P(V) = .25

P(M ∩ A) = .08, P(V ∩ M) = .12, P(A ∩ V) = .06

a) P(V ∪ A) = P(V) + P(A) - P(V ∩ A) = .25 + .20 - .06 = .39

b) P(V | M) = P(V ∩ M)/P(M) = .12/.30 = .40

c) P(M | V) = P(V ∩ M)/P(V) = .12/.25 = .48

d) Is P(V) = P(V | M)?

.25 ≠ .40

Possession of Visa is not independent of possession of Mastercard.

e) American Express is not mutually exclusive of Visa because P(A ∩ V) ≠ .0000.

4.41

Let S = believe SS will be secure

N = don't believe SS will be secure

<45 = under 45 years old

≥45 = 45 or more years old

P(N) = .51

Therefore, P(S) = 1 - .51 = .49

P(S | ≥45) = .70

Therefore, P(N | ≥45) = 1 - P(S | ≥45) = 1 - .70 = .30

P(<45) = .57

Therefore, P(≥45) = 1 - P(<45) = 1 - .57 = .43

a) P(≥45 ∩ S) = P(≥45) · P(S | ≥45) = (.43)(.70) = .301

b) P(<45 ∩ S) = P(S) - P(≥45 ∩ S) = .49 - .301 = .189

c) P(≥45 | S) = P(≥45 ∩ S)/P(S) = P(≥45) · P(S | ≥45)/P(S) = (.43)(.70)/.49 = .6143

d) P(<45 ∪ N) = P(<45) + P(N) - P(<45 ∩ N)

but P(<45 ∩ N) = P(<45) - P(<45 ∩ S) = .57 - .189 = .381

so P(<45 ∪ N) = .57 + .51 - .381 = .699

Probability Matrix Solution for Problem 4.41:

           S       N
  <45    .189    .381     .57
  ≥45    .301    .129     .43
         .490    .510    1.00

4.42

Let M = expect to save more

R = expect to reduce debt

NM = don't expect to save more

NR = don't expect to reduce debt

P(M) = .43, P(R) = .45, P(R | M) = .81

P(NR | M) = 1 - P(R | M) = 1 - .81 = .19

P(NM) = 1 - P(M) = 1 - .43 = .57

P(NR) = 1 - P(R) = 1 - .45 = .55

a) P(M ∩ R) = P(M) · P(R | M) = (.43)(.81) = .3483

b) P(M ∪ R) = P(M) + P(R) - P(M ∩ R) = .43 + .45 - .3483 = .5317

c) P(neither save nor reduce debt) = 1 - P(M ∪ R) = 1 - .5317 = .4683

d) P(M ∩ NR) = P(M) · P(NR | M) = (.43)(.19) = .0817

Probability matrix for problem 4.42:

                  Reduce: Yes   Reduce: No
  Save: Yes         .3483         .0817       .43
  Save: No          .1017         .4683       .57
                    .4500         .5500      1.00

4.43

Let R = read

Let B = checked in with the boss

P(R) = .40, P(B) = .34, P(B | R) = .78

a) P(B ∩ R) = P(R) · P(B | R) = (.40)(.78) = .312

b) P(NR ∩ NB) = 1 - P(R ∪ B)

but P(R ∪ B) = P(R) + P(B) - P(R ∩ B) = .40 + .34 - .312 = .428

P(NR ∩ NB) = 1 - .428 = .572

c) P(R | B) = P(R ∩ B)/P(B) = (.312)/(.34) = .9176

d) P(NB | R) = 1 - P(B | R) = 1 - .78 = .22

e) P(NB | NR) = P(NB ∩ NR)/P(NR)

but P(NR) = 1 - P(R) = 1 - .40 = .60

P(NB | NR) = .572/.60 = .9533

f) Probability matrix for problem 4.43:

           B      NB
  R      .312   .088     .40
  NR     .028   .572     .60
         .340   .660    1.00
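The matrices in Problems 4.41 through 4.43 are each filled from two marginal probabilities and one conditional probability: the general law of multiplication gives the Yes-Yes cell, subtraction from the marginals gives the two mixed cells, and the complement of the union gives the remaining cell. A small Python sketch of that procedure, with the hypothetical helper `matrix_from_conditional` (rows A and NA, columns B and NB), rebuilds the 4.43 matrix:

```python
def matrix_from_conditional(p_a, p_b, p_b_given_a):
    """Fill a 2x2 probability matrix for events A and B.

    Rows are A, NA; columns are B, NB.
    """
    a_and_b = p_a * p_b_given_a               # general law of multiplication
    a_and_nb = p_a - a_and_b                  # rest of row A
    na_and_b = p_b - a_and_b                  # rest of column B
    na_and_nb = 1 - (p_a + p_b - a_and_b)     # complement of the union
    return [[a_and_b, a_and_nb], [na_and_b, na_and_nb]]

# Problem 4.43: A = R (read), B = B (checked in with the boss).
m = matrix_from_conditional(0.40, 0.34, 0.78)
for row in m:
    print([f"{x:.3f}" for x in row])  # ['0.312', '0.088'] then ['0.028', '0.572']
```

Called with (.43, .49, .70), taking ≥45 as A and S as B, the same helper reproduces the 4.41 matrix (.301, .129, .189, .381).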

4.44

Let: D = denial

I = inappropriate

C = customer

P = payment dispute

S = specialty

G = delays getting care

R = prescription drugs

P(D) = .17, P(I) = .14, P(C) = .14, P(P) = .11

P(S) = .10, P(G) = .08, P(R) = .07

a) P(P ∪ S) = P(P) + P(S) = .11 + .10 = .21

b) P(R ∩ C) = .0000 (mutually exclusive)

c) P(I | S) = P(I ∩ S)/P(S) = .0000/.10 = .0000

d) P(NG ∩ NP) = 1 - P(G ∪ P) = 1 - [P(G) + P(P)] = 1 - [.08 + .11] = 1 - .19 = .81

4.45

Let R = retention

P = process

P(R) = .56, P(P ∩ R) = .36, P(R | P) = .90

a) P(R ∩ NP) = P(R) - P(P ∩ R) = .56 - .36 = .20

b) P(P | R) = P(P ∩ R)/P(R) = .36/.56 = .6429

c) P(P) = ?

P(R | P) = P(R ∩ P)/P(P)

so P(P) = P(R ∩ P)/P(R | P) = .36/.90 = .40

d) P(R ∪ P) = P(R) + P(P) - P(R ∩ P) = .56 + .40 - .36 = .60

e) P(NR ∩ NP) = 1 - P(R ∪ P) = 1 - .60 = .40

f) P(R | NP) = P(R ∩ NP)/P(NP)

but P(NP) = 1 - P(P) = 1 - .40 = .60

P(R | NP) = .20/.60 = .3333

4.46

Let M = mail

S = sales

P(M) = .38, P(M ∩ S) = .0000, P(NM ∩ NS) = .41

a) P(M ∩ NS) = P(M) - P(M ∩ S) = .38 - .00 = .38

b) P(S):

P(M ∪ S) = 1 - P(NM ∩ NS) = 1 - .41 = .59

P(M ∪ S) = P(M) + P(S) (M and S are mutually exclusive)

Therefore, P(S) = P(M ∪ S) - P(M) = .59 - .38 = .21

c) P(S | M) = P(S ∩ M)/P(M) = .00/.38 = .00

d) P(NM | NS) = P(NM ∩ NS)/P(NS) = .41/.79 = .5190

where P(NS) = 1 - P(S) = 1 - .21 = .79

4.47

Let S = Sarabia

T = Tran

J = Jackson

B = blood test

P(S) = .41, P(T) = .32, P(J) = .27

P(B | S) = .05, P(B | T) = .08, P(B | J) = .06

          Prior    Conditional   Joint        Revised
  Event   P(Ei)    P(B | Ei)     P(B ∩ Ei)    P(Ei | B)
  S       .41      .05           .0205        .329
  T       .32      .08           .0256        .411
  J       .27      .06           .0162        .260
                                 P(B) = .0623

4.48

Let R = regulations

T = tax burden

P(R) = .30, P(T) = .35, P(T | R) = .71

a) P(R ∩ T) = P(R) · P(T | R) = (.30)(.71) = .2130

b) P(R ∪ T) = P(R) + P(T) - P(R ∩ T) = .30 + .35 - .2130 = .4370

c) P(R ∪ T) - P(R ∩ T) = .4370 - .2130 = .2240

d) P(R | T) = P(R ∩ T)/P(T) = .2130/.35 = .6086

e) P(NR | T) = 1 - P(R | T) = 1 - .6086 = .3914

f) P(NR | NT) = P(NR ∩ NT)/P(NT) = [1 - P(R ∪ T)]/P(NT) = (1 - .4370)/.65 = .8662

4.49

                    Prior    Conditional   Joint         Revised
  Event             P(Ei)    P(NS | Ei)    P(NS ∩ Ei)    P(Ei | NS)
  Soup              .60      .73           .4380         .8456
  Breakfast Meats   .35      .17           .0595         .1149
  Hot Dogs          .05      .41           .0205         .0396
                                           P(NS) = .5180

4.50

Let GH = Good health

HM = Happy marriage

FG = Faith in God

P(GH) = .29, P(HM) = .21, P(FG) = .40

a) P(HM ∪ FG) = P(HM) + P(FG) - P(HM ∩ FG)

but P(HM ∩ FG) = .0000

P(HM ∪ FG) = P(HM) + P(FG) = .21 + .40 = .61

b) P(HM ∪ FG ∪ GH) = P(HM) + P(FG) + P(GH) = .21 + .40 + .29 = .9000

c) P(FG ∩ GH) = .0000

The categories are mutually exclusive. The respondent could not select more than one answer.

d) P(neither FG nor GH nor HM) = 1 - P(HM ∪ FG ∪ GH) = 1 - .9000 = .1000