L1: Lecture notes: intro, combinatorics and conditional probability


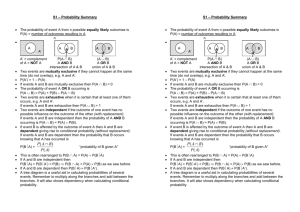

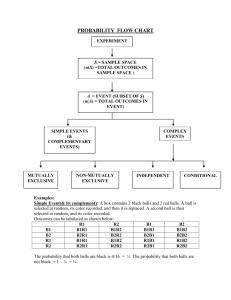

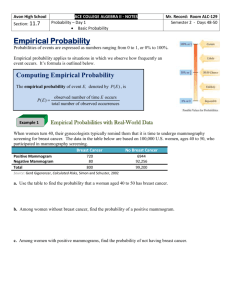

Introduction: probability

Sample space $S$: the collection of all possible outcomes of a stochastic experiment (an experiment whose outcome is determined by chance). If $s$ is an outcome in $S$, we write $s \in S$ ($s$ is an element of $S$).

Event $A$: a subset of (outcomes of) the sample space $S$. Mathematical notation: $A \subseteq S$.

The probability $P(A)$ of an event $A$ can be interpreted as the relative frequency of the event $A$ when we repeat the experiment very often.

Laws of probability:

1. $0 \le P(A) \le 1$: a probability always lies between 0% and 100%.
2. $P(S) = 1$: the probability that one of the outcomes in $S$ occurs is 1.

Finite sample space: if

1. $S$ consists of a finite number of outcomes, and
2. every outcome in $S$ is equally likely to occur,

then the probability of an event $A \subseteq S$ can be computed by counting the number of outcomes in $A$ and in $S$, respectively (the Laplace definition):

$$P(A) = \frac{\text{number of outcomes in } A \ (\text{"favorable"})}{\text{number of outcomes in } S \ (\text{"total"})}$$

Complement $\bar{A}$ (or $A^C$) of an event $A$ (say "not-$A$" or "$A$ does not occur"): $\bar{A}$ is the set of all outcomes in $S$ that are not in $A$.

Complement law: $P(\bar{A}) = 1 - P(A)$.

Intersection ($A$ and $B$): the collection of all outcomes common to $A$ and $B$. Notation: $A \cap B$ or $AB$.

Union ($A$ or $B$): $A$ occurs, or $B$ occurs, or both occur. Notation: $A \cup B$.

General addition law: $P(A \cup B) = P(A) + P(B) - P(A \cap B)$.

Mutually exclusive (disjoint) events $A$ and $B$ have no common outcomes: the intersection is empty, so that $P(A \cap B) = 0$.

Addition law for mutually exclusive events: $P(A \cup B) = P(A) + P(B)$.

The relative frequency as an intuitive definition of probability: if we repeat an experiment $n$ times under the same conditions and the event $A$ occurs $n(A)$ times, then the probability of $A$ can be estimated by the relative frequency $\frac{n(A)}{n}$.

Intuitive property of this relative frequency (frequency ratio): $\frac{n(A)}{n} \to P(A)$ as $n \to \infty$.

This experimental law of large numbers cannot be proven formally, but the relative frequency has the same properties as the probability $P$.
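The following Python sketch illustrates the Laplace definition, the complement law, the general addition law and the relative frequency, using two fair dice. The events $A$ (the sum is 7) and $B$ (the first die shows a 6), the helper names `laplace` and `relative_frequency`, and the random seed are hypothetical choices made only for this illustration. They are not part of the notes.

```python
import random
from fractions import Fraction
from itertools import product

# Sample space S for throwing two fair dice: 36 equally likely outcomes (i, j).
S = list(product(range(1, 7), repeat=2))


def laplace(event):
    """P(A) = #favorable / #total; valid because every outcome in S is equally likely."""
    favorable = sum(1 for s in S if event(s))
    return Fraction(favorable, len(S))


def A(s):
    """Hypothetical event A: the sum of the two dice is 7."""
    return s[0] + s[1] == 7


def B(s):
    """Hypothetical event B: the first die shows a 6."""
    return s[0] == 6


p_A, p_B = laplace(A), laplace(B)
p_not_A = laplace(lambda s: not A(s))
p_A_and_B = laplace(lambda s: A(s) and B(s))
p_A_or_B = laplace(lambda s: A(s) or B(s))

# Complement law and general addition law, checked exactly with fractions.
assert p_not_A == 1 - p_A
assert p_A_or_B == p_A + p_B - p_A_and_B


def relative_frequency(event, n, seed=1):
    """Estimate P(A) by n(A)/n over n simulated repetitions of the experiment."""
    rng = random.Random(seed)
    n_A = sum(1 for _ in range(n) if event((rng.randint(1, 6), rng.randint(1, 6))))
    return n_A / n


print(p_A, p_B, p_A_and_B, p_A_or_B)  # 1/6 1/6 1/36 11/36
for n in (100, 10_000, 100_000):
    # The estimate n(A)/n should settle near P(A) = 1/6 as n grows.
    print(n, relative_frequency(A, n))
```

Exact fractions make the two laws hold with equality. The simulated relative frequencies only approach $P(A)$, as the intuitive property above describes.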
The theoretical background of probabilities: the axioms of Kolmogorov

The probability $P$ can be seen as a function that assigns a real (non-negative) number to every event $A$: $A \mapsto P(A) \in \mathbb{R}$.

Kolmogorov stated the following axioms for this probability measure $P$:

1. $P(A) \ge 0$ for every event $A \subseteq S$;
2. $P(S) = 1$;
3. for every sequence of mutually exclusive events $A_1, A_2, \dots$: $P\left(\bigcup_i A_i\right) = \sum_i P(A_i)$.

All the desirable properties of the probability measure $P$ can be proven using only the axioms, e.g.:

1. $P(\emptyset) = 0$ ($\emptyset$ is the empty set, or empty event);
2. if $A \subseteq B$, then $P(A) \le P(B)$;
3. $P(A \cup B) = P(A) + P(B) - P(A \cap B)$.

Combinatorics, the art of counting

"Laplace": for a finite sample space with equally likely outcomes, $P(A) = \#A / \#S$.

Basic principle of counting: if an experiment has $m$ outcomes and, for each of these outcomes, a second experiment has $n$ outcomes, then together there are $mn$ outcomes.

$n$ (different) elements have $n! = n(n-1)\cdots 3 \cdot 2 \cdot 1$ rankings (orders, permutations).

The number of permutations of $r$ elements chosen out of $n$ elements without replacement is $n(n-1)\cdots(n-r+1) = \frac{n!}{(n-r)!}$.

Permutations with replacement: $n^r$.

The number of combinations of $r$ elements chosen out of $n$ elements without replacement is the binomial coefficient ("$n$ over $r$"):

$$\binom{n}{r} = \frac{n!}{r!\,(n-r)!}$$

Combinatorics of random samples of size $r$ chosen out of $n$ elements:

| Outcomes | Without replacement | With replacement |
|---|---|---|
| Ordered (permutations) | $\frac{n!}{(n-r)!}$ | $n^r$ |
| Not ordered (combinations) | $\binom{n}{r}$ | $\binom{n+r-1}{r}$ |

In the last case (not ordered, with replacement) the outcomes are not equally likely, so the Laplace definition cannot be applied to them.
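For small $n$ and $r$, the entries of the table can be checked by brute force. The Python sketch below enumerates samples with the standard `itertools` functions and compares the counts with the closed forms. The helper names `sample_counts` and `table_formulas` are hypothetical. The last lines show, for $n = r = 2$, why unordered samples drawn with replacement are not equally likely.

```python
import math
from collections import Counter
from itertools import combinations, combinations_with_replacement, permutations, product


def sample_counts(n, r):
    """Hypothetical helper: count samples of size r from n labelled elements by enumeration."""
    elements = range(n)
    return {
        ("ordered", "without"): sum(1 for _ in permutations(elements, r)),
        ("ordered", "with"): sum(1 for _ in product(elements, repeat=r)),
        ("not ordered", "without"): sum(1 for _ in combinations(elements, r)),
        ("not ordered", "with"): sum(1 for _ in combinations_with_replacement(elements, r)),
    }


def table_formulas(n, r):
    """Hypothetical helper: closed-form table entries (math.comb needs Python 3.8+)."""
    return {
        ("ordered", "without"): math.factorial(n) // math.factorial(n - r),  # n!/(n-r)!
        ("ordered", "with"): n ** r,                                         # n^r
        ("not ordered", "without"): math.comb(n, r),                         # n over r
        ("not ordered", "with"): math.comb(n + r - 1, r),                    # n+r-1 over r
    }


# Enumeration and formulas agree; (4, 4) also checks that 4 elements have 4! rankings.
for n, r in [(5, 2), (6, 3), (4, 4)]:
    assert sample_counts(n, r) == table_formulas(n, r)

# Unordered samples with replacement (n = 2, r = 2): map each of the 4 equally
# likely ordered samples to its unordered version and count how often each occurs.
multisets = Counter(tuple(sorted(s)) for s in product(range(2), repeat=2))
print(sorted(multisets.items()))  # [((0, 0), 1), ((0, 1), 2), ((1, 1), 1)]
```

The unordered sample $\{0, 1\}$ arises from two ordered samples, so it has probability $1/2$, while $\{0, 0\}$ and $\{1, 1\}$ each have probability $1/4$.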
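The number of ways to divide $n$ elements into $k$ distinct groups of sizes $n_1, \dots, n_k$ (with $n_1 + \dots + n_k = n$) is the multinomial coefficient:

$$\binom{n}{n_1, n_2, \dots, n_k} = \frac{n!}{n_1!\, n_2! \cdots n_k!}$$

The probability of $r$ red balls, if we choose at random $n$ balls (without replacement) out of a vase containing $R$ red balls and $N-R$ white balls ($N$ balls in total), is given by the hypergeometric formula:

$$P(r \text{ red}) = \frac{\binom{R}{r}\binom{N-R}{n-r}}{\binom{N}{n}}$$

Conditional probabilities

The conditional probability of $A$, given that $B$ has occurred, is defined as

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} \quad (\text{provided that } P(B) > 0).$$

A conditional probability $P(\cdot \mid B)$ is itself a probability measure as defined by Kolmogorov (if $P(B) > 0$).

The multiplication rule follows from this definition:

$$P(A \cap B) = P(A \mid B)\,P(B) \quad\text{and}\quad P(A \cap B) = P(B \mid A)\,P(A)$$

Multiplication rule for 3 events (can be extended to more events):

$$P(A \cap B \cap C) = P(A)\,P(B \mid A)\,P(C \mid A \cap B)$$

Consider the following situation. The population consists of 2 parts, $A$ and $\bar{A}$. We know the probability $P(A)$ (and hence $P(\bar{A}) = 1 - P(A)$), and we know $P(B \mid A)$ and $P(B \mid \bar{A})$.

[Venn diagram: $S$ split into $A$ and $\bar{A}$; the event $B$ overlaps both parts, in $A \cap B$ and $\bar{A} \cap B$.]

Note that it follows from the multiplication rule that $P(A \cap B) = P(B \mid A)\,P(A)$ and $P(\bar{A} \cap B) = P(B \mid \bar{A})\,P(\bar{A})$.

Law of total probability:

$$P(B) = P(A \cap B) + P(\bar{A} \cap B) = P(A)\,P(B \mid A) + P(\bar{A})\,P(B \mid \bar{A})$$

Bayes' rule:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(B \mid A)\,P(A)}{P(B \mid A)\,P(A) + P(B \mid \bar{A})\,P(\bar{A})}$$

In a probability tree:

[Figure: probability tree. First split "A occurs?": Yes with probability $P(A)$, No with $P(\bar{A})$. Second split "B occurs?": after Yes, Yes with $P(B \mid A)$ and No with $P(\bar{B} \mid A)$; after No, Yes with $P(B \mid \bar{A})$ and No with $P(\bar{B} \mid \bar{A})$.]

So: $P(B) = P(A) \times P(B \mid A) + P(\bar{A}) \times P(B \mid \bar{A})$.

Generalization to $n$ mutually exclusive parts $A_1, \dots, A_n$ of $S$ (together making up $S$), giving the law of total probability and Bayes' rule:

$$P(B) = \sum_{i=1}^{n} P(A_i)\,P(B \mid A_i) \quad\text{and}\quad P(A_1 \mid B) = \frac{P(B \mid A_1)\,P(A_1)}{\sum_{i=1}^{n} P(B \mid A_i)\,P(A_i)}$$

[Venn diagram: $S$ partitioned into $A_1, A_2, \dots, A_n$; the event $B$ is split into $A_1 \cap B, A_2 \cap B, \dots, A_n \cap B$.]

$A$ and $B$ are called independent when knowledge about the occurrence of $A$ does not influence the probability of $B$ (and vice versa).

Definition: $A$ and $B$ are independent $\iff P(A \cap B) = P(A) \times P(B)$.

The independence of $A$ and $B$ implies, for example, that $P(B \mid A) = P(B)$ and $P(A \mid B) = P(A)$ (when $P(A) > 0$ and $P(B) > 0$).

Three events $A$, $B$ and $C$ are independent if and only if: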
$$P(A \cap B \cap C) = P(A) \times P(B) \times P(C)$$

and the events are pairwise independent:

$$P(A \cap B) = P(A) \times P(B), \quad P(A \cap C) = P(A) \times P(C), \quad P(B \cap C) = P(B) \times P(C)$$

This definition extends to $n$ independent events: the product rule must then hold for every subcollection of two or more of the events.

Sometimes we use the definition to prove independence, but usually we can assume independence because of the nature of the situation, and then use the formulas above. When 2 experiments can be assumed to be independent, an event concerning the first experiment and an event concerning the second experiment are independent.

Bernoulli trials are repeated independent experiments with only two outcomes, "success" and "failure" (with probabilities $p$ and $1-p$). If we repeat the Bernoulli trials until the first success occurs, we find the geometric formula:

$$P(\text{first success at the } k\text{th trial}) = (1-p)^{k-1}\,p, \quad k = 1, 2, \dots$$
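The multinomial coefficient, the hypergeometric formula, Bayes' rule for a partition $A_1, \dots, A_n$ and the geometric formula each translate into a small function. The sketch below is a minimal Python version using exact fractions. The function names and the example numbers are hypothetical illustrations, not data from the notes: a vase with 4 red and 6 white balls, a prior $P(A) = 1/100$ with $P(B \mid A) = 95/100$ and $P(B \mid \bar{A}) = 5/100$, and a die with $p = 1/6$.

```python
import math
from fractions import Fraction


def multinomial(*sizes):
    """Number of ways to divide n = sum(sizes) elements into groups of the given sizes."""
    result = math.factorial(sum(sizes))
    for size in sizes:
        result //= math.factorial(size)  # each partial quotient is an exact integer
    return result


def hypergeometric(r, n, R, N):
    """P(r red) when drawing n balls without replacement from R red and N - R white balls."""
    if r < 0 or r > n:
        return Fraction(0)
    return Fraction(math.comb(R, r) * math.comb(N - R, n - r), math.comb(N, n))


def bayes(prior, likelihood):
    """Posteriors P(A_i | B) for a partition A_1, ..., A_n of S.

    prior[i] = P(A_i) and likelihood[i] = P(B | A_i).
    """
    p_B = sum(p * lik for p, lik in zip(prior, likelihood))  # law of total probability
    return [p * lik / p_B for p, lik in zip(prior, likelihood)]


def geometric(k, p):
    """P(first success at the k-th Bernoulli trial) = (1 - p)^(k - 1) * p, k = 1, 2, ..."""
    return (1 - p) ** (k - 1) * p


# Hypothetical numbers, chosen only for illustration.
print(multinomial(2, 1, 1))         # 4!/(2! 1! 1!) = 12
print(hypergeometric(2, 3, 4, 10))  # 3/10
print(bayes([Fraction(1, 100), Fraction(99, 100)],
            [Fraction(95, 100), Fraction(5, 100)]))  # [Fraction(19, 118), Fraction(99, 118)]
print(geometric(3, Fraction(1, 6)))  # 25/216
```

With these hypothetical numbers, $P(A \mid B) = 19/118 \approx 0.16$. Even a fairly reliable test leaves the posterior probability small when the prior $P(A)$ is small.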