E78_final_exam_DAVID_LUONG

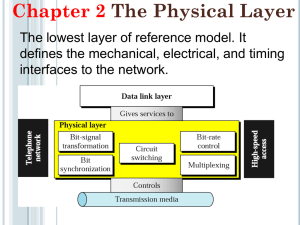


David Luong 2/12/2016 Engineering 78 Communication Systems Final Exam Spring 2005 Page 1

PROBLEM 1:

Part a)

The fundamental limitations of communication systems fall into two categories: technological problems and fundamental physical limitations. The first category consists of constraints such as hardware availability, economic factors, and government regulations. The second category is of more interest because these limits are imposed by the laws of nature, and they ultimately decide whether a given task can be accomplished at all. Specifically, the fundamental limitations of information transmission by electrical signals are bandwidth and noise.

Bandwidth is a measure of how fast a signal can change during transmission. Signals that change rapidly with time have frequency content covering a wide range, and thus a large bandwidth. The ability of a system to follow signal variations is reflected in its transmission bandwidth. The limitation arises because communication systems contain energy-storage elements such as capacitors, and stored energy cannot be changed instantaneously. Because of this, every communication system has a finite bandwidth B that limits the rate of signal variations.

Noise is the other fundamental limitation in communication systems, and it is explained by the kinetic theory of matter. At any temperature above absolute zero, thermal energy causes microscopic particles to move randomly. This random motion produces random currents and voltages, known as thermal noise. The signal-to-noise ratio S/N is the ratio of signal power to noise power. When this ratio becomes small (below 1), the noise power dominates the signal power of interest. It then corrupts analog signals and produces errors in digital ones. With both limitations in mind, Shannon stated that the rate of information transmission cannot exceed the channel capacity given by the following equation.
$$C = B \log_2\left(1 + \frac{S}{N}\right)$$

This is known as the Hartley-Shannon law. It provides an upper limit on the rate of information transmission for a communication system with a given B and S/N. It is discussed further in Problem 2.

Part b)

Linear Modulation: AM and DSB

Merit: the envelope of the modulated carrier has the same shape as the message.

Standard amplitude modulation (AM)

An AM signal is characterized by

$$x_c(t) = A_c[1 + \mu x(t)]\cos(\omega_c t)$$

where the modulation index $\mu$ is a positive constant. The frequency-domain representation is

$$X_c(f) = \frac{1}{2}A_c\,\delta(f - f_c) + \frac{\mu}{2}A_c\,X(f - f_c)$$

for f > 0. The time- and frequency-domain behavior of AM is shown in the following figures.

FIGURES 4.2-1 and 4.2-2

The envelope reproduces the shape of x(t) if $f_c \gg W$ (where W is the message bandwidth) and $\mu \le 1$. The first condition ensures that the carrier oscillates rapidly compared to the time variation of x(t); otherwise, the envelope would be difficult to discern. The second condition ensures that $1 + \mu x(t)$ never goes negative, with x(t) normalized so that $|x(t)| \le 1$. Overmodulation ($\mu > 1$) causes phase reversals and envelope distortion, as seen in the figures. The disadvantage of standard AM is that at least 50 percent of the total transmitted power resides in a carrier term. That term is independent of x(t) and therefore carries no message information.

Suppressed-carrier double-sideband modulation (DSB)

Setting $\mu = 1$ and suppressing the unmodulated carrier term eliminates the "wasted" carrier power in AM. The modulated wave and its spectrum then become

$$x_c(t) = A_c\,x(t)\cos(\omega_c t), \qquad X_c(f) = \frac{1}{2}A_c\,X(f - f_c)$$

for f > 0. This is known as double-sideband suppressed-carrier modulation, or DSB. The DSB spectrum looks exactly like the AM spectrum shown above, but without the unmodulated carrier impulses. DSB behaves differently in the time domain: the envelope takes the shape of |x(t)| rather than x(t). The modulated wave also undergoes a phase reversal whenever x(t) crosses zero.
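The "at least 50 percent" statement about AM carrier power can be checked numerically. The sketch below computes how the total AM power splits between carrier and sidebands. It assumes a zero-mean message normalized so |x(t)| ≤ 1, with average power S_x. Under those assumptions the total power is proportional to 1 + μ²S_x, so the carrier fraction is 1/(1 + μ²S_x). The function name `am_power_split` is hypothetical.

```python
def am_power_split(mu: float, sx: float) -> tuple[float, float]:
    """Return (carrier fraction, sideband fraction) of total AM power.

    Assumes a zero-mean message normalized so |x(t)| <= 1 with average
    power sx; total power is then proportional to 1 + mu**2 * sx
    (the common factor Ac**2 / 2 cancels out of both fractions).
    """
    if not (0 < mu <= 1 and 0 <= sx <= 1):
        raise ValueError("expects 0 < mu <= 1 and 0 <= sx <= 1")
    total = 1.0 + mu**2 * sx   # carrier term 1, sideband term mu**2 * sx
    carrier = 1.0 / total
    return carrier, 1.0 - carrier


# Tone message x(t) = cos(w_m t) has sx = 1/2: the carrier takes about 2/3.
print(am_power_split(1.0, 0.5))
# Best case sx = 1 (e.g. a +/-1 square wave): the carrier still takes 1/2.
print(am_power_split(1.0, 1.0))
```

Under these assumptions μ²S_x ≤ 1, so the carrier fraction can never fall below one half.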
Message recovery is therefore more difficult, since it requires knowing when the phase reversals occur. On the positive side, all of the transmitted power in DSB is concentrated in the information-bearing sidebands, so no power is wasted on the carrier.

Exponential Modulation: Frequency Modulation (FM) and Phase Modulation (PM)

FM and PM are examples of nonlinear exponential modulation. In general, exponential modulation can increase the output S/N without increasing the transmitted power, whereas linear modulation can improve S/N only by increasing transmitted power. However, the transmission bandwidth is usually much greater than twice the message bandwidth. Essentially, exponentially modulated systems trade bandwidth for power.

To study exponential modulation, we first define instantaneous phase and frequency. Suppose a continuous-wave signal has a constant envelope and a time-varying phase:

$$x_c(t) = A_c\cos[\omega_c t + \phi(t)]$$

The total instantaneous angle is defined as

$$\theta_c(t) = \omega_c t + \phi(t)$$

so that $x_c(t)$ can be written as

$$x_c(t) = A_c\cos\theta_c(t) = A_c\,\mathrm{Re}\left[e^{j\theta_c(t)}\right]$$

This expression explains the names exponential modulation and angle modulation: the message is carried in the exponent (angle) of the carrier.

Phase modulation is defined by

$$\phi(t) = \phi_\Delta\, x(t), \qquad \phi_\Delta \le 180^\circ$$

The constant $\phi_\Delta$ is the maximum phase shift produced by x(t) and is called the phase modulation index or phase deviation. The constraint $\phi_\Delta \le 180^\circ$ is analogous to the constraint $\mu \le 1$ in AM.

A rotating-phasor diagram is helpful for interpreting PM and introducing FM. The figure below shows that the total angle $\theta_c(t)$ consists of the rotational term $\omega_c t$ plus $\phi(t)$. The latter is the angle measured relative to the rotating reference.

FIGURE 5.1-1

The phasor's instantaneous rate of rotation in cycles per second is then

$$f(t) = \frac{1}{2\pi}\dot{\theta}_c(t) = f_c + \frac{1}{2\pi}\dot{\phi}(t)$$

In FM, the instantaneous frequency of the modulated wave is defined as

$$f(t) = f_c + f_\Delta\, x(t), \qquad f_\Delta < f_c$$

so f(t) varies in proportion to the modulating signal x(t), scaled by the frequency deviation $f_\Delta$. Comparing the two expressions gives $\dot{\phi}(t) = 2\pi f_\Delta x(t)$. Integrating yields the FM instantaneous phase

$$\phi(t) = 2\pi f_\Delta \int^{t} x(\lambda)\, d\lambda$$

The resulting FM waveform can then be written as

$$x_c(t) = A_c\cos\left[\omega_c t + 2\pi f_\Delta \int^{t} x(\lambda)\, d\lambda\right]$$

The essential difference between PM and FM is that FM phase-modulates the carrier with the integral of the message. Moreover, FM has better noise-reduction properties than PM. An FM demodulator extracts the instantaneous frequency f(t) from $x_c(t)$, so its output is proportional to $f_\Delta$. $f_\Delta$ can be increased without increasing the transmitted power. The demodulated signal therefore grows while the noise stays constant, and the output S/N increases at the cost of more transmission bandwidth.

Tone Modulation

FM and PM produce the same phase function under tone modulation if the two tones are offset by 90 degrees: a sine drives the phase modulator and a cosine drives the frequency modulator. The relevant equations are

$$x(t) = \begin{cases} A_m \sin\omega_m t & \text{PM} \\ A_m \cos\omega_m t & \text{FM} \end{cases}
\qquad
\phi(t) = \beta \sin\omega_m t,
\qquad
\beta = \begin{cases} \phi_\Delta A_m & \text{PM} \\ (A_m/f_m)\, f_\Delta & \text{FM} \end{cases}$$

Suppose that a tone is applied simultaneously to a frequency modulator and a phase modulator, and the two output spectra are identical (Problem 5.1-8).

If the tone amplitude $A_m$ increases (decreases), both indices increase (decrease) proportionally, since $\beta_{PM} = \phi_\Delta A_m$ and $\beta_{FM} = (f_\Delta/f_m)A_m$.

If the tone frequency $f_m$ increases (decreases), the PM index does not change, because it depends only on $\phi_\Delta$ and $A_m$. The FM index decreases (increases), because it is inversely proportional to $f_m$.

If the tone amplitude and frequency increase (decrease) in the same proportion, the PM index increases (decreases) in that proportion. The FM index does not change, because the ratio $A_m/f_m$ is unchanged.
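The FM phase relation and the tone-modulation scaling argument can both be checked numerically. The sketch below integrates a tone x(t) = A_m cos ω_m t with the trapezoid rule to form φ(t) = 2πf_Δ∫x(λ)dλ. It then compares the result with β sin ω_m t, where β = (A_m/f_m)f_Δ. The parameter values and the helper names (`fm_phase`, `beta_pm`, `beta_fm`) are illustrative assumptions, not part of the original solution.

```python
import math


def beta_pm(phi_delta: float, Am: float) -> float:
    """PM tone modulation index: beta = phi_delta * Am."""
    return phi_delta * Am


def beta_fm(f_delta: float, Am: float, fm: float) -> float:
    """FM tone modulation index: beta = (Am / fm) * f_delta."""
    return Am * f_delta / fm


def fm_phase(x: list[float], dt: float, f_delta: float) -> list[float]:
    """phi(t) = 2*pi*f_delta * integral_0^t x(lam) d lam, trapezoid rule."""
    phi = [0.0]
    for k in range(1, len(x)):
        # 2*pi*f_delta * (x[k-1] + x[k]) / 2 * dt
        phi.append(phi[-1] + math.pi * f_delta * (x[k - 1] + x[k]) * dt)
    return phi


# Illustrative (assumed) values: 1 kHz tone, 5 kHz deviation, 0.5 amplitude
Am, fm, f_delta = 0.5, 1e3, 5e3
dt = 1e-7
t = [k * dt for k in range(20000)]                  # two tone periods
x = [Am * math.cos(2 * math.pi * fm * tk) for tk in t]
phi = fm_phase(x, dt, f_delta)
beta = beta_fm(f_delta, Am, fm)                      # expected 2.5 rad
err = max(abs(p - beta * math.sin(2 * math.pi * fm * tk))
          for p, tk in zip(phi, t))
print(beta, err)  # err is only the small trapezoid-rule error

# Problem 5.1-8 scaling: double Am and fm together
print(beta_pm(1.0, 2 * Am) / beta_pm(1.0, Am))                     # PM index doubles
print(beta_fm(f_delta, 2 * Am, 2 * fm) / beta_fm(f_delta, Am, fm))  # FM index unchanged
```

The numerical phase matches β sin ω_m t, which confirms that a cosine tone into FM and a sine tone into PM give the same form of φ(t).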
Part c)

Analog systems can be implemented with relatively few components, whereas digital systems require more hardware. Take, for instance, the implementation of a lowpass filter (LPF). The analog version needs only a resistor and a capacitor in the right configuration. The digital version, however, requires an ADC, a DSP, a DAC, and an LPF for anti-aliasing. Despite this added complexity, digital systems offer the following advantages over analog systems.

- Stability
  - Time invariance: parameters are embedded in algorithms and change only when reprogrammed. Analog signals and parameters, by contrast, drift as components age and degrade and as operating temperature changes.
  - Greater accuracy in signal reproduction
- Flexibility
  - System calibrations are easily modified for different uses.
  - Processing can improve trust in signals:
    - Error detection/correction schemes for accuracy
    - Encryption for privacy and security of information exchange
    - Compression algorithms to reduce redundancy and inefficiency
    - Combining several signals (voice, sound, text) into the desired output
- Reliable reproduction
  - Accurate digital reproduction is extremely important when transmitting signals that need power boosting/amplification. A representative copy of the signal must survive the amplification to preserve the information in the conveyed message.

Part d)

Many types of noise, including thermal noise, are characterized by Gaussian processes and distributions. Thermal noise is the random motion of electrons caused by a temperature above absolute zero. Thermal energy is proportional to KT, where K is Boltzmann's constant (1.38 × 10⁻²³ J/K) and T is the absolute temperature. The mean-square thermal noise voltage of a resistor R can be represented by

$$\overline{v^2} = \frac{2(\pi K T)^2}{3h}\,R \quad \text{V}^2$$

where h is Planck's constant.
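The sketch below evaluates the mean-square formula for an assumed 1 kΩ resistor at T = 290 K, to show the size of the result. Planck's constant is not quoted in the text, so the standard value 6.626 × 10⁻³⁴ J·s is assumed. The function name is hypothetical.

```python
import math

K_BOLTZMANN = 1.38e-23   # J/K, the value quoted in the text
H_PLANCK = 6.626e-34     # J*s, standard value (assumed; not given in the text)


def thermal_noise_ms_voltage(R: float, T: float) -> float:
    """Mean-square thermal noise voltage 2*(pi*K*T)**2 * R / (3*h), in V^2."""
    return 2 * (math.pi * K_BOLTZMANN * T) ** 2 * R / (3 * H_PLANCK)


v2 = thermal_noise_ms_voltage(R=1e3, T=290.0)   # assumed 1 kOhm at room temperature
print(v2, math.sqrt(v2))                        # V^2 and V rms
```

The result is roughly 1.6 × 10⁻⁴ V², or about 13 mV rms. This is the noise integrated over all frequencies; a practical receiver bandwidth captures only a small fraction of it.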
It can be shown that the spectral density of thermal noise in a resistor R is given by

$$G_v(f) \approx 2RKT$$

The amplitude of this noise follows the Normal, or Gaussian, distribution, which is studied closely in statistics. Its density has the shape of a bell specified by a mean and a standard deviation. It gives the probability that the noise takes a value within any given range.

Intersymbol interference (ISI) refers to the spillover, or crosstalk, from other signal pulses during information transmission. Consider a baseband transmission system with the signal-plus-noise waveform

$$y(t) = \sum_k a_k\, p(t - t_d - kD) + n(t)$$

where $t_d$ is the transmission delay and p(t) is the pulse shape including transmission distortion. A regenerator recovers the digital message from y(t) by sampling at the optimum times

$$t_K = KD + t_d$$

If p(0) = 1, then

$$y(t_K) = a_K + \sum_{k \ne K} a_k\, p(KD - kD) + n(t_K)$$

The first term is the wanted message information, the second is the ISI, and the last is the noise contamination at $t_K$.

Power Signal versus Energy Signal:

A signal is usually thought of as a function whose amplitude varies over time. A reasonable measure of a signal's strength is its energy content, found from the area under a curve. The amplitude can be negative at times, but negative excursions should not subtract from the total. The energy is therefore the area under the squared magnitude of the signal:

$$E_f = \int_{-\infty}^{\infty} |f(t)|^2\, dt$$

Some signals do not decay and therefore have infinite energy. For such signals the useful measure is signal power, the time average of energy (energy per unit time):

$$P_f = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} |f(t)|^2\, dt$$

Pulse-code modulation (PCM) is a digital transmission scheme for analog signals, with an ADC at the input and a DAC at the output.
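The split of y(t_K) into wanted term, ISI, and noise can be sketched directly. The example below assumes a hypothetical distorted pulse whose sampled values p(mD) are 1 at m = 0, with small tails at m = ±1. It omits the noise term n(t_K) to isolate the ISI. The helper names are illustrative.

```python
from typing import Callable

# Hypothetical distorted pulse sampled at lags m*D: p(0) = 1 plus small tails
_TAPS = {-1: 0.1, 0: 1.0, 1: 0.2}


def pulse_samples(m: int) -> float:
    """Return p(mD) for the assumed pulse (zero outside the listed lags)."""
    return _TAPS.get(m, 0.0)


def sample_decomposition(a: list[float], p: Callable[[int], float],
                         K: int) -> tuple[float, float]:
    """Split the noise-free sample y(t_K) into (wanted term, ISI term).

    Implements y(t_K) = a_K + sum_{k != K} a_k p(KD - kD), passing the
    integer lag K - k to p (the symbol interval D is factored out).
    """
    wanted = a[K] * p(0)
    isi = sum(a[k] * p(K - k) for k in range(len(a)) if k != K)
    return wanted, isi


a = [1, -1, 1, 1, -1]            # polar symbols a_k (illustrative)
wanted, isi = sample_decomposition(a, pulse_samples, K=2)
print(wanted, isi)               # wanted term 1.0, ISI about -0.1
```

Here a_1 = −1 leaks into the sample at t_2 through p(D) = 0.2, and a_3 = 1 leaks in through p(−D) = 0.1. The regenerator therefore sees about 0.9 instead of 1.0 before any noise is added.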
Its performance as an analog communication system depends heavily on the quantization noise introduced by the ADC. The block diagram of a PCM generation system is shown below.

FIGURE 12.1-1a

The analog input x(t) is lowpass filtered and sampled to obtain $x(kT_s)$. A quantizer rounds each sampled value to the nearest of a set of q discrete quantum levels. The PCM receiver then takes the quantized samples $x_q(kT_s)$ and reconstructs the waveform, as seen below.

FIGURE 12.1-2b

Note that perfect reconstruction is impossible even in the absence of channel noise, because the rounding performed by the quantizer cannot be undone.

Part e)

Oversampling means sampling a signal at several times its Nyquist rate. It is useful because of hardware constraints. Integrated circuits with large R and C values are difficult to fabricate, so we use the best RC LPF that can be built as the anti-aliasing filter and oversample, so that the filter's gradual roll-off does not cause aliasing. Frequency components above the information bandwidth are then attenuated by digital filtering. Downsampling (decimation) then reduces the effective sampling rate to the Nyquist rate. Another reason to oversample is to reduce quantization noise. The noise is spread over a larger bandwidth while the desired signal stays in the same band, so filtering out the excess band removes much of the noise.

However, the attitude toward oversampling in the audio industry is mixed. While it helps to attenuate jitter and quantization noise, whether this reduction is actually audible is difficult to answer and remains debated. Purists believe oversampling should be avoided. They consider it more natural to let the human ear perceive the sound and let its own internal filtering remove the audio "junk."

Undersampling means sampling a signal at a small fraction of its Nyquist rate. One application is the sampling oscilloscope. These instruments use undersampling to display high-speed periodic waveforms that are too fast to be sampled directly at the Nyquist rate.
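The quantization step described above can be sketched as a uniform quantizer. The version below assumes a midrise quantizer with q equally spaced levels over [−1, 1] and a 440 Hz test tone sampled at 8 kHz; these choices and the function name are assumptions for illustration. It shows that the reconstruction error is nonzero but never exceeds half a quantization step.

```python
import math


def quantize(v: float, q: int, vmax: float = 1.0) -> float:
    """Round v to the nearest of q uniform midrise levels spanning [-vmax, vmax]."""
    step = 2 * vmax / q
    # Index of the quantization cell containing v, clipped to 0..q-1.
    idx = min(q - 1, max(0, math.floor((v + vmax) / step)))
    return -vmax + (idx + 0.5) * step   # cell midpoint = nearest level


fs, f0, q = 8000.0, 440.0, 8            # illustrative sampling rate, tone, levels
samples = [math.sin(2 * math.pi * f0 * k / fs) for k in range(800)]  # x(kTs)
xq = [quantize(s, q) for s in samples]                                # x_q(kTs)
errors = [s - s_q for s, s_q in zip(samples, xq)]

step = 2.0 / q
sqnr_db = 10 * math.log10(
    sum(s * s for s in samples) / sum(e * e for e in errors)
)
print(max(abs(e) for e in errors), step / 2)  # max error stays within step / 2
print(round(sqnr_db, 1))                       # signal-to-quantization-noise ratio, dB
```

Increasing q shrinks the step and raises the printed SQNR. The error is never exactly zero for a general input, however, which is why PCM cannot reproduce x(t) perfectly.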
In the frequency domain, a compressed image of the original spectrum is centered at each multiple of the sampling frequency $f_s$. With a LPF having bandwidth $B < f_s/2$, the original waveform shape is reconstructed on a stretched time scale.

Undersampling in data acquisition is problematic because aliasing can occur. Aliasing is the downward frequency translation of spectral components above $f_s/2$. It can produce "ghost" signals in the time domain, that is, components that never existed in the original signal. This is a serious problem because the transmitted signal is then not properly reconstructed.

PROBLEM 2

Continuous Channels and System Comparisons

Hartley and Shannon assumed an AWGN channel with transmission bandwidth B and signal-to-noise ratio S/N. On that basis they developed a mathematical framework for the information-carrying capacity of a transmission system:

$$C = B \log_2\left(1 + \frac{S}{N}\right)$$

This is the benchmark for the general performance of communication systems in terms of two simple parameters, bandwidth and signal-to-noise ratio. These parameters correspond directly to the fundamental limitations of communication systems (bandwidth and noise), which makes the result an important area of study. An ideal communication system is defined as one that achieves nearly error-free information transfer at a rate R equal to C. It is useful to compare real systems with this ideal one to evaluate their performance.

The Hartley-Shannon capacity equation highlights the important roles that bandwidth and signal-to-noise ratio play in communication. As mentioned before, there is a tradeoff between bandwidth and signal power. Noise power grows with bandwidth, $N = N_0 B$. Setting R = C in the capacity equation and rearranging therefore gives

$$\frac{S}{N_0 R} = \frac{B}{R}\left(2^{R/B} - 1\right)$$

The figure below shows $S/(N_0 R)$ in dB versus $B/R$. The regions R > C and R < C correspond to unreliable and inefficient communication, respectively, while R = C represents the ideal case. For bandwidth compression (B/R < 1), a rapidly increasing amount of signal power is needed to maintain R = C.
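The bandwidth-power tradeoff on the R = C curve can be tabulated with a few lines of code. The sketch below evaluates the Hartley-Shannon capacity for an assumed 3 kHz channel at S/N = 30 dB. It also evaluates the required S/(N₀R) for several values of B/R. The function names and the numerical values are illustrative assumptions.

```python
import math


def capacity(b_hz: float, snr: float) -> float:
    """Hartley-Shannon capacity C = B log2(1 + S/N) in bits/s (snr as a ratio)."""
    return b_hz * math.log2(1 + snr)


def required_snr_db(b_over_r: float) -> float:
    """S/(N0 R) in dB needed for R = C: (B/R) * (2**(R/B) - 1)."""
    return 10 * math.log10(b_over_r * (2 ** (1 / b_over_r) - 1))


# Illustrative values: a 3 kHz channel at S/N = 30 dB (assumed)
print(capacity(3000.0, 10 ** (30 / 10)))   # about 29.9 kbit/s

for b_over_r in (0.25, 0.5, 1, 2, 10, 100):
    print(f"B/R = {b_over_r:>6}: S/(N0 R) = {required_snr_db(b_over_r):6.2f} dB")

# Limit of unlimited bandwidth expansion: 10 log10(ln 2), about -1.59 dB
print(10 * math.log10(math.log(2)))
```

The table falls steeply for B/R < 1 and flattens for B/R > 1. This is the compression penalty and the diminishing return of expansion described in the text.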
Conversely, bandwidth expansion (B/R > 1) reduces the required signal-to-noise ratio, but with diminishing returns. As B/R → ∞, the requirement approaches $S/(N_0 R) = \ln 2$, about −1.6 dB.

FIGURE 16.3.2

A system comparison using the above guidelines evaluates communication performance. Consider a binary symmetric channel (BSC). The BSC system capacity and transmission error probability can be shown to be

$$C = 2B_T\left[1 - \Omega(\alpha)\right], \qquad \alpha = Q\left[\sqrt{(S/N)_R}\right]$$

where $\Omega(\alpha)$ is the binary entropy function. The figure below plots $C/B_T$ versus $(S/N)_R$ for the BSC system and the ideal one. At sufficiently high signal-to-noise ratios the two curves depart. The binary system's capacity saturates at $C = 2B_T$, while the ideal capacity keeps growing logarithmically. Thus, M-ary signaling is needed to get closer to ideal performance. The gap between the curves also suggests that more information could be extracted from the received signal. This has led to techniques for doing so, called soft-decision decoding.

FIGURE 16.3.3
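The BSC curve and the ideal curve can be compared numerically. The sketch below computes C/B_T = 2[1 − Ω(α)] with α = Q[√((S/N)_R)] and compares it with log₂(1 + (S/N)_R). It assumes Q(z) is the Gaussian tail probability, evaluated through `math.erfc`, and it measures Ω in bits. The SNR grid and function names are illustrative.

```python
import math


def q_func(z: float) -> float:
    """Gaussian tail probability Q(z) = 0.5 * erfc(z / sqrt(2))."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def binary_entropy(alpha: float) -> float:
    """Omega(alpha) in bits, with Omega(0) = Omega(1) = 0."""
    if alpha in (0.0, 1.0):
        return 0.0
    return -alpha * math.log2(alpha) - (1 - alpha) * math.log2(1 - alpha)


def bsc_capacity_per_hz(snr_r: float) -> float:
    """C / B_T = 2 [1 - Omega(alpha)], alpha = Q(sqrt((S/N)_R))."""
    alpha = q_func(math.sqrt(snr_r))
    return 2 * (1 - binary_entropy(alpha))


def ideal_capacity_per_hz(snr_r: float) -> float:
    """C / B_T = log2(1 + (S/N)_R) for the ideal Hartley-Shannon system."""
    return math.log2(1 + snr_r)


for snr_db in (0, 5, 10, 15, 20):
    snr = 10 ** (snr_db / 10)
    print(snr_db,
          round(bsc_capacity_per_hz(snr), 3),
          round(ideal_capacity_per_hz(snr), 3))
```

The BSC column levels off at 2 while the ideal column keeps rising. This widening gap is what motivates M-ary signaling.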