LIPITAKIS-EV-LIPITAKIS-ALEX-HERCMA-2009

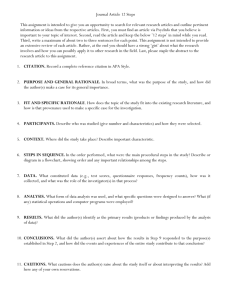

HERCMA 2009

THE USE OF ADAPTIVE ALGORITHMIC PROCEDURES FOR COMPUTING ACADEMIC PERFORMANCE EVALUATION MEASURES BY USING SEARCH ENGINES AND DATA BASES

Evangelia Lipitakis and Alexandra Lipitakis
Kent Business School, University of Kent
Canterbury, Kent CT2 7PE, England

Abstract

In this article a novel adaptive algorithmic approach combined with the singular perturbation concept is proposed for the efficient solution of e-business and strategic management problems. A characteristic e-business case study concerning the computation of academic performance measures by using search engines and data bases is considered and it is shown that an efficient solution can be obtained by using its corresponding adaptive algorithm. These dynamic algorithmic schemes, by using the singular perturbation approach, can be efficiently used for obtaining a (near) optimum solution of the considered e-business case studies. Both the adaptability and compactness of the dynamic algorithms allow their application for solving efficiently a wide class of e-service and strategic management problems.

Key-words and phrases: adaptive algorithms, dynamic algorithmic schemes, e-business problems, e-publishing, e-publishing case studies, e-service strategy management methodologies, strategy management (SM) methodologies

1. Introduction

In recent years the rapid growth of scientific and technical knowledge has allowed scientists, engineers, economists, social scientists and humanists to join in addressing complex problems that must be solved simultaneously with deep appropriate knowledge from different perspectives and multidisciplinary fields. Such problems of global importance include a wide spectrum of applications in economic development, social inequality, disease prevention, global climate change etc., which can be addressed through several interdisciplinary advanced research topics, i.e. nanotechnology, bioinformatics, neuroscience, genomics-proteomics, DNA computing etc.
[9] The fields of Computing, Informatics, Applied Mathematics, Engineering, Economics, e-Business and Strategic Management have a special commitment to interdisciplinary work, with their researchers collaborating in the design and development of new methodologies, exploration of existing methods and practices, formation of theories of on-line business and commerce, discovery of new advanced methods to extract information from enormous data-sets, integration of library and archival sciences etc.

A thorough examination of the existing e-business and e-commerce literature reveals that it relies almost exclusively on selected case studies and conceptual frameworks. Few research studies use empirical data to characterize Internet-based initiatives and indicate their impact on organization performance (Brynjolfsson et al, 2000 [4], Zhu et al, 2004 [51]). Furthermore, there is a lack of theory to guide the empirical work (Wheeler, 2002 [49]), and an increasing number of researchers argue that the literature is weak in making the linkage between theory and measures, apart from subjecting proposed measures to empirical validation for reliability and validity (Straub, 1989 [42]).

Along these lines, and in order to make such a linkage between theory and measures, a new algorithmic treatment of e-business, e-commerce and strategy management in digital information management methodologies, based on an adaptive algorithmic approach, has been proposed by Lipitakis, 2005 [22], 2007a [23], 2007b [24], 2008 [26], Lipitakis and Phillips, 2007 [25]. According to a recent McKinsey Global Institute research study, today’s business leaders need to incorporate algorithmic decision-making techniques to successfully run their organizations [25]. This exploratory study attempts to investigate and bridge the gap between e-business strategy and algorithmic procedures.
In this study we show that suitable adaptive algorithmic procedures can be efficiently used for solving a wide class of complex computational problems, including e-business, e-service and strategic management problems. Furthermore, we point out that the selective usage of both algorithmic and singular perturbation (sp) concepts, i.e. the usage of adaptive algorithms in combination with the dynamical choice of sp-parameters, can lead to their (near) optimized solutions. This research work is based on key-field concepts of three different sciences, i.e. Computer Science (Adaptive Algorithmic Theory), Applied Mathematics (Singular Perturbation Theory and Partial Differential Equations) and Management Science (Strategy Management and e-business). The adapted basic concepts of the first two sciences are applied to problems of e-business and strategy management.

2. The Algorithmic Approach and Singular Perturbation Concept

In this section we will synoptically present the important issues of the adaptive algorithmic approach and the singular perturbation concept for e-business problems and strategic management methodologies in Digital Information Management (DIM) applications.

The Algorithmic Approach

The basic concepts of the adaptive algorithmic approach to e-business problems and strategic management methodologies have been recently presented in [22, 23, 24, 25, 26]. It is known that an algorithm can be simply defined as a finite set of rules which leads to a sequence of operations for solving specific types of problems, with the following five important characteristic features: Finiteness, Definiteness, Input, Output and Effectiveness, as described in [18, 38]. Algorithms can be easily presented in the so-called ‘pseudoalgorithmic’ form, which is also called ‘pseudoalgorithm’, or ‘pseudocode’ in the case of preparing computer programs/codes.
A pseudoalgorithm/pseudocode can be considered as an outline of a program, written in a form that can easily be converted into real programming statements. In fact, anyone can write a pseudocode without even knowing what programming language is going to be used for the final implementation of the algorithm. A pseudocode consists of short English phrases used to explain specific tasks within a program's algorithm; it should not include keywords of any specific computer language and should be written as a list of consecutive phrases. The main purpose of a pseudocode is not only to help the programmer to efficiently write the corresponding code (program); under certain circumstances (by using suitable parameterized forms) it can also be effectively used in solving a wider class of computational problems apart from the originally given problem.

In view of these facts the usage of adaptive algorithms, with their previously mentioned advantages and the additional important features of compactness, adaptiveness and incorporation of singular parameters that allow the computation of (near) optimum solutions, can be extended to the efficient solution of e-business and SM problems and to a wide area of corresponding applications, a part of which is presented in the references and bibliography section [4, 20, 22, 23, 24, 29, 32, 36, 37, 41, 49].

The term ‘adaptive algorithm’ denotes here an algorithm which changes its behavior based on the available resources. The adaptive algorithm functions provide a way to indicate the user’s choice of adaptive algorithm and let the user specify certain properties of the algorithm. The concept of adaptive algorithm is closely related to the corresponding concept of ‘adaptive’ or ‘variable’ choices of the parameters (input elements) of the algorithm.
Adaptive algorithms adapt to contention, often have adjustable steps that repeat (iterate) or require decisions (logic or comparison) until the task is completed, and are simple and easy to program. The general technique of adapting simple algorithmic methods to work efficiently on difficult parts of complex computational problems can be a powerful one in algorithm design, evaluation and practice [23, 24, 25, 36].

The Singular Perturbation Concept

In the last decade research has been directed to the study of a class of initial/boundary-value problems and the behaviour of the approximate solutions of the resulting linear systems by considering a small positive perturbation parameter, affecting the derivative of highest order, cf. [46, 27]. The singular perturbation (sp) parameters were first used by Tikhonov [46] for solving numerically certain classes of initial/boundary value problems. Following this approach, a class of generalized fully parameterized singularly perturbed (sp) non-linear initial and boundary value problems can be considered and the way that the variation of the sp-parameters affects their numerical solution can be studied [27].

In this article we show that suitable adaptive algorithmic procedures can be efficiently used for solving a wide class of complex computational problems, including e-business and strategic management problems. Furthermore, we point out that the selective usage of both algorithmic and sp-concepts, i.e. the usage of adaptive algorithms in combination with the dynamical choice of sp-parameters, can lead to (near) optimized solutions. The applicability of the proposed adaptive algorithmic approach and sp-concept methodology is demonstrated by considering a characteristic case study in E-Publishing Business applications.

3. Web and Scientific Communication, Web Search Engines and Databases, Citation Analysis and Research Performance Evaluation

The evaluation of academic research work, the assessment of the research papers published by faculty members and the overall measurement of research performance in an academic environment are considered to be critical factors of major importance for the improvement of the services of academic institutions and research centers in the scientific community and the society at large. In the last decades several research search engines and scientific data bases have been developed and extensively used for research work assessment and retrieval of bibliographic database elements and reference information. Such data bases and search engines include Google Scholar, Web of Knowledge, Social Science Citation Index (SSCI), Academic Search Elite, AgeLine, ArticleFirst, GEOBASE, POPLINE etc. In this study we will discuss some research performance measuring indicators, the citation, research citation and the citation of research papers for an academic environment using two Internet databases, namely Google Scholar and Web of Knowledge.

It is universally acknowledged that the discovery and usage of the Internet and the World Wide Web (WWW), or simply the Web, are considered to be the major scientific and technological breakthroughs of the last decades, with still unpredictable consequences for the evolution of knowledge and culture of the human race. The Web is a dynamic, distributed and interactive hypermedia environment, where almost anyone can be an information producer and consumer. Members of the scientific community can create their symbolic presence by constructing individual home pages showing their scientific and research work, published research papers, projects and personal information, and by linking efficiently to other selected sites.
The Web can be used as a valuable resource space for scientists and researchers from both the producer and the consumer perspective (Cronin et al, 1995 [7]). It has been said that the Web could result in the emergence of a universal bibliographic and citation database ‘linking every scholarly work ever written-no matter how published- to every work that it cites and every work that cites it’ (Cameron, 1997 [6]). The Web can be used both as a publishing and a conversational medium allowing scholarly interaction and signaling, i.e. scientists can post, publish and review papers on the Web, discuss issues and debate positions, put forward new ideas informally for discussion, exchange ideas and opinions on scientific matters etc. The Web can afford both economies of scale (larger markets, lower distribution costs) and economies of scope (global reach, diversity of audience), and can efficiently use a great variety of digital information objects, such as plain texts, images, data, video contents etc., in an effective, manageable and easily reconfigurable manner which cannot be matched by any traditional print production tools.

The Web is considered to be the largest and richest contemporary information repository. The most common access routes to this valuable source are the search engines. The role of these search engines is often limited to the retrieval of lists of potentially relevant documents. The critical task of analysing the returned documents and identifying the knowledge of interest is usually left to the users.

It should be noted that citations are generally subject to several forms of errors, such as common typographical errors in the source papers, errors due to non-standard reference formats, errors in Google Scholar (GS) parsing of the references etc. Citations of several publication works can be complete, incomplete or completely missing, owing to inconsistent treatment of books, conference proceedings etc.
and that may lead to inaccurate citation counts in certain disciplines (Harzing, 2008 [16]).

Citation analysis is usually performed to evaluate the impact of scientific collections, i.e. research journals and conferences, publications and authors, during promotion, tenure, merit pay decisions, hiring processes etc. The ranking of scientific publications can be performed by using several ranking algorithms. The ranking algorithms that are used in bibliometrics can be classified into the following categories: (i) the collection-based ranking algorithms (ISI Impact Factor algorithm, Garfield, 1972 [12] and 1996 [14]; SCEAS algorithm, Sidiropoulos et al, 2005 [39]) and (ii) the publication-based ranking algorithms (PageRank algorithm, Google Scholar [53, 54]; HITS algorithm, Kleinberg, 1999 [17]). Both ranking algorithm categories use citation graphs and graph nodes in order to represent the total number of citations that point from one collection to another (Sidiropoulos et al, 2006 [40]).

It is reported that citation analysis in conjunction with advanced visualization techniques will help to understand the structure of individual fields and will be particularly useful for emerging and rapidly developing top research areas, such as biotechnology and nanotechnology (Leydesdorff, 2007 [21]). It should be noted that traditional citation analysis can provide indicative information about the intellectual influence of researchers, but it is unable (as the Web can do) to provide any indications about the scientist’s ideas, thinking and general professional presence.

The evaluation of scientific research work is a problem of great theoretical and practical importance. The quality of the published papers is closely related to the analysis of the citations received, which enables the approximate measurement of several academic performance concepts, such as the quality of research work, the academic reputation and the scientific influence of researchers.
It should be pointed out that in all the research performance evaluation models the reception of a citation is considered as a positive factor (whatever the nature of the citation).

A well-known indicator of the importance of a scientific journal is the so-called Impact Factor, computed by the ISI and introduced by E. Garfield, 1972 [12]. Note that the scientific community does not agree unanimously about the effectiveness of this measurement, which is a metric of popularity but does not capture certain evaluation criteria such as importance, prestige etc. (Bollan et al, 2006 [3]). A brief description of the Impact Factor and its computation can be found in [26].

An interesting problem is the evaluation of scientific research journals, authors, papers, fields, academic institutions and research centers. The importance of a paper should be determined by certain factors, such as the quality of the paper and its author, the quality of the citations from other papers, the importance of the journal, and the importance of the author(s) and co-authors. A paper is considered to be important if it is published in an important journal, is written by important author(s) and receives citations from other important papers. An integrated multi-class research evaluation model that considers all the above subjects with appropriate weight coefficients can be efficiently used by taking into account the classes of citations, authors and journals respectively (Bini et al, 2007 [2]). This model for evaluating papers, authors and journals is based on citations, co-authorship and publications.

The rapid development of Web search engines was followed by their evaluation, which aimed mainly to inform Web users in their choice of search engine and to support the development of search algorithms and search engines. Among the most commonly used commercial search engines are Google (www.google.com), Alta Vista (www.altavista.com) and Teoma (www.teoma.com).
Google is the largest publicly available search engine (Notes, 2002 [35]), using the PageRank algorithm, a new generation of link-based ranking algorithm. Alta Vista is one of the oldest Web search engines, the largest search engine by database size (Notes, 2002 [35]) and the most commonly used search engine for data collection in Webmetrics studies (Vaughan et al, 2003 [47]). Teoma is the youngest of these search engines, using the Subject Specific Popularity (SSP) algorithm, a unique ranking algorithm for the determination of the quality and authority of a site’s content (Teoma, 2002 [44]).

Google Scholar (GS) is an Internet-based search engine designed to locate scholarly literature, including peer-reviewed papers, abstracts, books, book chapters, theses, preprints and technical reports from all broad areas of research, available on the Web or occasionally in print format only. The results are presented in a relevance-ranked format (Myhill, 2005 [33]). It should be noted that the great majority of academic literature can be found in the so-called hidden Web. Google Scholar includes a wide range of appropriate resources, but cannot include all the partner publishers’ offerings and often misses a considerable number of quality resources that are usually accessible to academic researchers through institutional subscriptions. Its searchability can be greatly improved by using advanced search pages with standard criteria such as authors, publishers, publication dates etc.

GS offers the options of Simple Search and Advanced Search. The Simple Search is very fast, can be used for searching on authors’ names in several ways and uses stemming technology, which retrieves documents with word variations based on the keywords entered. The GS Advanced Search makes a number of search options for articles available.
The GS keyword searching includes searching by all words, exact phrases, at least one of the words, without the words, and where the words occur in the considered documents (Burright, 2006 [5]). Google Scholar is a free resource with a very basic and familiar interface; it can serve as an alternative to several metasearch engines, such as MetaLib and WebFeat, and to more expensive citation indexes, i.e. Scopus of Elsevier Publishers and Web of Knowledge of Thomson Scientific. Despite the reviews and critiques of GS concerning its content, search engine, interface and citation counts, academic scholars, attracted mainly by the simplicity and familiarity of the GS interface, are increasingly using it and in substantial numbers (Neuhaus et al, 2008 [34]).

It should be noted that Google Scholar is considered to be the largest new multidisciplinary tool that can be used for accessing and measuring hidden citations, especially from non-journal web documents, which cannot be traced by conventional bibliographic and citation databases. GS contains citation information from many publishers, but does not use any quality control procedure for its collection of journal publications and includes substantial coverage of non-journal documents, such as research papers, conference papers, books, book chapters, theses, research/technical reports etc. (Kousha et al, 2008 [19]), (Google Scholar, 2006 [52, 53]).

A universal Internet-based bibliographic and citation database that would link every scholarly work ever written, no matter how published, to every work that it cites and every work that cites it, has been proposed by Cameron, 1997 [6]. The usage of this database could revolutionize several aspects of academic communication, such as literature research, evaluation of research work, keeping current with new literature, choice of publication venue etc. [6].
The commercial Scopus service was introduced in 2004 by Elsevier Publishers, and the open access database Google Scholar followed in beta version. The Web of Science (WoS) database is also a commercial database, based on a family of ISI (Institute for Scientific Information) citation indices. The Scirus database of Elsevier Publishers [55] is reported to have the best science, technology and medicine coverage on the Web, with more than 200 million specific pages indexed (Felter, 2005 [10]). Scirus is considered a good alternative to GS and lists its content sources and 13 million patents from Europe, USA and Japan, while Elsevier tries to make more content available by negotiating with other scientific publishers (Scirus, 2005 [55]).

In the framework of the objective assessment of the research work of a faculty member, the promotion committee members thoroughly examine the impact and quality of his or her scholarly/research publications, the subjective opinions of peers, tenure and several other decisive factors including citation analysis. For this reason faculty members try to identify as many citations to their published research work as possible, in order to provide a comprehensive assessment of the impact of their publications on the academic, scientific and professional communities. The ISI citation database, a well-known source for locating citations, appears to have several limitations in the full coverage of citations to a faculty member’s research work. It should be noted that WoS is the portal used to search the three ISI citation databases, namely the Arts & Humanities Citation Index, the Science Citation Index and the Social Sciences Citation Index.

GS has been mainly introduced as a research-oriented web search machine that provides full text searching of relevant material and extracts formal citations from the material. These capabilities show that GS can act both as a citation index and as a search engine.
Furthermore, GS extracts citations to print materials that have been referenced in web publications. In this case the database provides access to certain material in the conventional print environment and to web-based material. GS has become widely used for searching research-oriented material; it has proved to be a useful reference tool for academic librarians (White, 2006 [50]) and on several occasions contained citations to significant resources that were not covered by the Science Citation Index database (Kousha & Thelwall, 2006 [19]). The cybermetric analysis of research publications can be made, apart from GS, by using Web of Knowledge (the web interface to ISI’s citation databases) and SCOPUS (the Elsevier citation database) [52].

4. Search Engines Literature Review

In this section we synoptically comment on the evaluation and research performance measurements of search engines for the World Wide Web.

In a paper of Bettencourt and Houston, 2001 [1] the impact of paper method type and subject area on paper citations and reference diversity in certain journals has been studied. The authors combine a content analysis of a selected sample of 561 Marketing research papers with citation analysis and reference analysis on article citation rates and reference diversity. Several experimental results for the impact of method type and subject area on the reference diversity of published papers of Marketing journals (Journal of Marketing, Journal of Marketing Research, Journal of Consumer Research) are presented and several limitations (distinct sampling time periods, transfer of knowledge methodologies, usage of other methodologies) of the proposed research methodology are reported [1].

A set of new measurements, adapted from the concepts of recall and precision, for the evaluation of Web search engine performance has been proposed by Vaughan, 2003 [47]. These concepts are commonly used criteria in the evaluation of traditional information retrieval systems.
Precision is the proportion of retrieved documents that are relevant, while recall is the proportion of relevant documents that are retrieved (Voorhees et al, 2001 [48]). The two ranking measurements have been proposed as counterparts of traditional recall and precision and correspond respectively to the quality of result ranking and the ability to retrieve top ranked pages [47]. Some other new measurements have also been derived for the evaluation of search engine performance stability, which is proposed to be used as an evaluation criterion [45]. It is noted that if a search engine is not stable, then the results obtained from the search engine may not represent its general performance. Furthermore, the testing of search engine stability can provide information for Webmetrics research, i.e. for users of Web search engines who collect data [47]. The research study proposed a set of measurements for the evaluation of performance stability, and the commercial search engines Google, Alta Vista and Teoma were selected in order to examine the stability of search engine performance over time. The three stability measurements were: (i) the stability of the number of pages retrieved, (ii) the number of pages among the top 20 retrieved pages that remain the same in two consecutive tests over a predetermined short period of time and (iii) the number of pages among the top 20 retrieved pages that remain in the same ranking order in two consecutive tests over a predetermined short period of time [45].

The concept of the invisible web and its implications for academic librarianship has been presented in Devine et al, 2004 [8]. The majority of Internet and Web users, including both faculty members and students, are often aware of the limitations of standard search engines. A guide to tools that can be used to mine the invisible web and the benefits of using the invisible web to promote interest in certain library services are discussed.
Several suggestions for incorporating the invisible web in reference work and library promotion are given [8]. The authors report several facts associated with the invisible web, such as:

- The size of the Invisible Web (IW) is about 500 times larger than the surface web (SW)
- The IW contains approximately 550 billion individual documents as compared with the SW’s one billion
- The IW is the largest growing category of new information on the web
- The greatest part of the IW (about 95%) is publicly accessible information
- More than 50% of the IW resides in topic specific databases

Several characteristic examples of IW content are given, such as database content, real time information, subscription or fee based services, sites requiring password access or registration for use, sites with no-index protocols etc. [8].

A novel approach aiming at the automatic ranking of scientific conferences using digital libraries, such as the Science Citation Index by the Institute for Scientific Information and CiteSeer by the NEC Research Institute, has been presented by Sidiropoulos et al, 2005 & 2006 [39, 40]. A web-based system that has been constructed by extracting data from the Databases and Logic Programming (DBLP) website of the University of Trier is presented and a new citation metric for ranking scientific collections is used [39].

An extensive overview of the two major technological changes in academic publishing during the last decades, i.e. the development of Bibliometrics and the creation of Webometrics, a modern rapidly growing branch of Bibliometrics, has been presented by Thelwall, 2007 [45]. Both changes are related to the measurement of science. Bibliometrics encompasses the measurement of properties of documents and related processes by using special techniques such as citation analysis, frequency analysis, co-word analysis and document counting, i.e. the number of publications by an author, research group, academic institution or country.
After the development of the Science Citation Index (SCI) by Garfield, 1979 [13], two types of bibliometric application have been created: (i) evaluative bibliometrics, with the main purpose of assessing the impact of scholarly work (comparison of relative research contributions, research funding) and (ii) relational bibliometrics, aiming at the relationships within research (new research fronts, structure of research fields etc.).

In a recent article of Leydesdorff, 2008 [21] some explanations for the use of citation indicators in research and journal evaluation are presented. It is reported that evaluations using publication and citation counts have become standard practice in several research assessment exercises and evaluations in academic environments. The US National Science Foundation (NSF) recently launched new programs for the study of sciences and innovation policies (http://www.nsf.gov/pubs/2007/nsf07547/nsf07547.htm), while the Seventh Research Framework Program (FP7) includes the science indicators in its research announcements [21]. On the basic question ‘can journals and journal rankings be used for the evaluation of research?’, the author points out that Garfield’s original purpose when he created the impact factor and the Journal Citation Reports was to evaluate journals and not to evaluate research work. Impact factors can provide a summary statistic that allows the ranking of research journals. The statistics, however, are based on the means of a highly skewed distribution and the context of the measurement is of great importance [19].

The use of Google Scholar as a cybermetric tool and methodology issues in obtaining citation counts for academic institutions have been presented by Smith, 2008 [41]. GS is used to compare the web citation rate of New Zealand universities with the results of New Zealand’s performance based research funding (PBRF) research assessment exercise. Two methodological problems that occurred with GS have been considered, i.e.
(i) the identification of a work related to a university, since GS does not have an institutional name field, and (ii) the computation of a total citation count, since GS displays only a citation count for each item and allows only the first 1000 hits to be displayed from a search.

5. Adaptive Algorithms for computing Citations

In order to describe analytically the computational procedure that has been used in our experimental research work for the computation of citations of Kent Business School (KBS) faculty members for a certain number of publication types in the period 1980-2007 by using specific search engines [28], we present the corresponding algorithmic procedure in its ‘pseudoalgorithmic form’ or ‘pseudoalgorithm’. This presentation has the advantage that the several stages (step-by-step) of the algorithmic procedure can be easily understood and can also be presented by corresponding flow charts [28].

It should be noted that an algorithm is simply defined as a procedure (a finite set of well-defined instructions) for accomplishing some task which, given an initial state, will terminate in a defined end-state. Alternatively, an equivalent definition can be used, i.e. a step-by-step problem solving procedure, especially an established recursive computational procedure for solving a problem in a finite number of steps. Algorithms possess five important characteristic features, i.e. finiteness, definiteness, input, output and effectiveness, with the additional advantage of adaptivity (Knuth, 1968 [18], Sedgewick, 1984 [38]).

In the following we present the algorithm COCISE, for the COmputation of CItation by using a specific Search Engine under certain hypotheses.
Algorithm COCISE (PT, TI, AU, NMAX, CIT)

Purpose: This algorithm computes the number of citations and related publication information by using a specific search engine and available databases
Input: Publication Type (PT), Publication Title (TI), Author(s) (AU), Max number of publication types (NMAX)
Output: Citation Information Table (CIT)

Computational Procedure
Step 1: Determine the max number of the original publication types
Step 2: Select a group of data (i.e. author(s), title, year of publication etc.)
Step 3: Enter the selected (primary) data to the specific search engine for processing
Step 4: Check the resulting output
    Step 4.1: if there are no resulting records, then manipulate, add or select the proper secondary data in order to determine the required output
        Step 4.1.1: continue the iterative procedure until you get an acceptable result
        Step 4.1.2: if there is no such result, then update the database properly and print a proper message, i.e. ‘no records found’
    Step 4.2: else, check the results for the matching output/record
Step 5: Check the resulting output
    Step 5.1: if the output is an acceptable one, then
        Step 5.1.1: print the required information, i.e. number of citations, related references etc.
        Step 5.1.2: update the database
    Step 5.2: else, make the necessary corrections and update the corresponding correction columns in the database
Step 6: if the maximum number of the original publication types has been exceeded, then
    Step 6.1: print an appropriate message and
    Step 6.2: form the final Citation Information Table (CIT)
Step 7: else, go to step 2 and continue the iterative procedure

The algorithm COCISE describes step-by-step the computational procedure for computing the number of citations and presenting related publication information by using a specific search engine and available databases.
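As an illustration, the steps of COCISE can be sketched in Python. This is a minimal sketch under stated assumptions and not the implementation used in [28]: the names `Publication`, `CitationRow`, `cocise` and `demo_search` are hypothetical, the search engine is modelled as any function that takes a query string and returns a list of records with `title` and `citations` fields, NMAX is read as a cap on the number of items processed, the secondary data of Step 4.1 are assumed to be a title-only query, and the corrections of Step 5.2 are reduced to flagging the row.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Publication:
    pub_type: str                 # PT
    title: str                    # TI
    authors: list                 # AU
    year: Optional[int] = None


@dataclass
class CitationRow:                # one row of the Citation Information Table (CIT)
    title: str
    citations: Optional[int]
    status: str


def _norm(text: str) -> str:
    """Lower-case and drop non-alphanumerics so that titles compare loosely."""
    return re.sub(r"[^a-z0-9]", "", text.lower())


def cocise(publications: list, search: Callable[[str], list], nmax: int) -> list:
    """Build the CIT for at most nmax publications (hypothetical sketch)."""
    cit = []
    for pub in publications[:nmax]:                  # Steps 1, 6, 7: bounded loop
        queries = [
            f"{pub.title} {' '.join(pub.authors)}",  # Steps 2-3: primary data
            pub.title,                               # Step 4.1: assumed secondary data
        ]
        match = None
        for query in queries:                        # Step 4.1.1: iterate over queries
            for hit in search(query):                # Step 4.2: look for the matching record
                if _norm(hit["title"]) == _norm(pub.title):
                    match = hit
                    break
            if match is not None:
                break
        if match is None:                            # Step 4.1.2
            cit.append(CitationRow(pub.title, None, "no records found"))
        elif isinstance(match.get("citations"), int) and match["citations"] >= 0:
            cit.append(CitationRow(pub.title, match["citations"], "ok"))  # Step 5.1
        else:                                        # Step 5.2: flag for correction
            cit.append(CitationRow(pub.title, None, "needs correction"))
    if len(publications) > nmax:                     # Step 6.1
        print(f"NMAX={nmax} reached; {len(publications) - nmax} item(s) not processed")
    return cit                                       # Step 6.2


def demo_search(query: str) -> list:
    """Stand-in engine (assumption): answers only exact title-only queries."""
    index = [{"title": "Adaptive algorithms", "citations": 7}]
    return [hit for hit in index if _norm(hit["title"]) == _norm(query)]
```

Passing the search engine as a function keeps the sketch independent of any particular service; a GS or WoS variant would differ only in the function supplied and in how secondary queries are built.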
It should be noted that the computational steps of the algorithm COCISE can be further refined in order to give a more detailed version of the computational procedure. The corresponding algorithms for computing the number of citations and presenting related publication information by using the Google Scholar and Web of Science search engines can be derived in a similar manner. The proposed adaptive algorithmic procedures, in combination with the singular perturbation approach (i.e. use of sp-parameters), can be efficiently used for the numerical and algorithmic modelling of the computation of Citations, leading to their corresponding (near) optimized solution.

A (near) optimized algorithmic scheme of an Academic Performance Evaluation model problem by using the Citation system and the singular perturbation parameters approach [23] can be derived by using the adaptive algorithm COCISE-OPT, i.e. Algorithm COCISE-OPT-1 (εPT PT, εTI TI, εAU AU, NMAX, εUF OCIT), where the sp-parameters εPT, εTI, εAU affect the input parameters of the algorithm COCISE-1 of the previous section and εUF (the uncertainty factor parameter) affects the output. The algorithm COCISE-OPT-1 describes step-by-step the computational procedure for computing the optimized number of citations and presenting related publication information by using a specific search engine and available databases.

6. Experimentation and Numerical Results

In this section we present some numerical results related to the application of GS and WoS in the KBS academic environment. Following the research methodology used by Meho and Yang, 2007 [30, 31], we present general information about specific elements of the databases and tools used in the research study [28], such as breadth, depth, subject coverage, citation browsing and searching options, analytical tools, and downloading and exporting options of the two sources.
| | Web of Science (WoS) | Google Scholar (GS) |
|---|---|---|
| Breadth of Coverage | 36 million records (1955- ), 8700 titles (190 open access journals & hundreds of conference papers) | Over 30 document types, unknown number of sources, unknown number of records |
| Depth of Coverage | ISI Citation Databases: SSCI (1956- ), AHCI (1975- ), SCI (1900- ) | Unknown |
| Subject Coverage | All Subjects | All Subjects |
| Citation Searching Options | Cited Author, Cited Year, Cited Work (requires Journal, Book, Conf. Paper Title in which the work appeared) | Keyword & Phrase Searching; Limit-Search Options include: Author, Publication, Date, Subject areas etc. |
| Citation Browsing Options | Cited Author, Cited Year | N/A |
| Analytical Tools | Ranking by Author, Publication Year, Source Name, Subject Category, Institution Name, Document Type | N/A |
| Downloading and Exporting options to Citation Management Software | Yes | Yes |

Table 1: Comparisons of GS and WoS Databases and Tools used in this research study. Note that ISI=Institute for Scientific Information, SSCI=Social Science Citation Index, AHCI=Arts & Humanities Citation Index, SCI=Science Citation Index.

The following Table presents a comparison of GS and WoS concerning the citation coverage of journal articles of KBS researchers.

| Publication Types | Number n | n % | No of Pubs found in GS | % GS | No of Citations found in GS | GS Citations Per Output | No of Pubs found in WoK | % WoK | No of Citations found in WoK | WoK Citations Per Output |
|---|---|---|---|---|---|---|---|---|---|---|
| Books | 19 | 1.57 | 11 | 57.89 | 405 | 36.82 | | | | |
| Book Sections/Chapters | 109 | 8.99 | 64 | 58.72 | 479 | 7.48 | | | | |
| Conference Papers | 330 | 27.23 | 154 | 46.67 | 399 | 2.59 | | | | |
| Conference Proceedings | 29 | 2.39 | 14 | 48.28 | 58 | 4.14 | | | | |
| Edited Books | 12 | 0.99 | 8 | 66.67 | 313 | 39.13 | | | | |
| Journal Articles/Papers | 593 | 48.93 | 548 | 92.41 | 5,608 | 10.23 | 292 | 49.24 | 1,519 | 5.20 |
| Reports (research & technical) | 115 | 9.49 | 63 | 54.78 | 319 | 5.06 | | | | |
| Unpublished Work | 4 | 0.33 | 3 | 75.00 | 18 | 6 | | | | |
| Web Pages | 1 | 0.08 | 1 | 100 | 1 | 1 | | | | |
| TOTAL | 1,212 | 100 | 866 | 71.45 | 7,600 | 8.76 | 292 | 49.24 | 1,519 | 5.20 |

Table 2: WoS and GS citation count by KBS Publication type (1980-2008).
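The derived columns of Table 2 (coverage percentage and citations per output) follow from the raw counts by simple ratios, which can be checked with a short calculation. The Python sketch below is illustrative only: the counts are copied from Table 2, while the names `GS_ROWS`, `coverage_pct`, `citations_per_output` and `summarize` are hypothetical helpers introduced here and rounding to two decimals is an assumption about how the table was prepared.

```python
# (publication type, n, pubs found in GS, citations found in GS), copied from Table 2
GS_ROWS = [
    ("Books", 19, 11, 405),
    ("Book Sections/Chapters", 109, 64, 479),
    ("Conference Papers", 330, 154, 399),
    ("Conference Proceedings", 29, 14, 58),
    ("Edited Books", 12, 8, 313),
    ("Journal Articles/Papers", 593, 548, 5608),
    ("Reports (research & technical)", 115, 63, 319),
    ("Unpublished Work", 4, 3, 18),
    ("Web Pages", 1, 1, 1),
]


def coverage_pct(found: int, total: int) -> float:
    """Share of the publications that the source returned, in percent."""
    return round(100.0 * found / total, 2)


def citations_per_output(citations: int, found: int) -> float:
    """Average number of citations per publication found in the source."""
    return round(citations / found, 2)


def summarize(rows: list) -> dict:
    """Map each publication type to (coverage %, citations per output)."""
    return {
        name: (coverage_pct(found, n), citations_per_output(cites, found))
        for name, n, found, cites in rows
    }


if __name__ == "__main__":
    for name, (cov, cpo) in summarize(GS_ROWS).items():
        print(f"{name:32s} {cov:6.2f}% {cpo:6.2f}")
```

The same two functions apply to the Web of Knowledge figures for journal articles, e.g. `coverage_pct(292, 593)` and `citations_per_output(1519, 292)`.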
The results of Table 2 show that, of Google Scholar and Web of Knowledge, the former offers the more comprehensive citation coverage of journal articles. Specifically, GS includes records for 548 of 593 articles, which equals 92.41% coverage of the journal articles that KBS has published, at 10.23 citations per output. Web of Knowledge provides coverage for 292 of 593 articles, which corresponds to 49.24%, at 5.20 citations per output. Figures 1 and 2 present, respectively, the percentages of KBS faculty member publications per document type and the academic ranking of KBS authors.

[Chart: "KBS Member Staff Publications per Document Type in Percentages 1980 - 2008"; categories: Books, Book Section, Conference Papers, Conference Proceedings, Edited Books, Journal Articles, Reports, Unpublished Work, Web Pages]
Figure 1: KBS faculty members’ publications per document type

[Chart: "KBS Author Ranking in Percentages"; categories: Professors, Senior Lecturer, Lecturer, Reader, Other]
Figure 2: KBS Authors academic ranking

Further statistical analysis and numerical results can be found in [28].

7. Conclusions and Future Research Work

The adaptive dynamic algorithmic approach and the singular perturbation concept have been applied for solving efficiently several e-business problems. A characteristic e-business case study concerning the computation of academic performance measures by using search engines and data bases has been studied and its corresponding adaptive algorithmic scheme has been presented. Furthermore, the adaptability and compactness of the proposed algorithms, through the choice of singular perturbation parameters, can lead to a (near) optimum solution of the considered e-business case studies. The choice of the singular perturbation parameters, leading to efficient solutions, is an interesting subject of future research work.
The proper choice can be related to the quantitative and qualitative nature of the input parameters (data) of the given problems, and the corresponding dynamical algorithms can then lead to (near) optimum solutions. The use of adaptive algorithms for academic research performance evaluation, the study of several academic research evaluation models by using advanced search engines and data banks, and the usage of universal Internet based databases for academic communication and transfer of knowledge are some very interesting related topics for future research work.

References and Bibliography

[1] BETTENCOURT L. and HOUSTON M. (2001): The Impact of Article Method and Subject Area on Article Citations and Reference Diversity in JM, JMR, and JCR, Marketing Letters 12, no. 4, pp. 327-340
[2] BINI D.A., DEL CORSO G.M. and ROMANI F.: Evaluating scientific products by means of citation-based models: A first analysis and validation
[3] BOLLEN J., RODRIGUEZ M.A. and VAN DE SOMPEL H. (2006): Journal Status, Scientometrics 69, pp. 669-687
[4] BRYNJOLFSSON E. and SMITH M.D. (2000): Frictionless commerce? A comparison of Internet and conventional retailers, Management Science 46, pp. 563-585
[5] BURRIGHT M. (2006): Google Scholar: science and technology, Issues in Science and Technology Librarianship 45
[6] CAMERON R.D. (2003): A universal citation database as a catalyst for reform in scholarly communication, http://elib.cs.sfu.ca/project/papers/citebase/citebase.html
[7] CRONIN B. (1984): The citation process: The role and significance of citations in scientific communication, Taylor Graham, London
[8] DEVINE J. and EGGER-SIDER F. (2004): Beyond Google: The invisible web in the academic library, Journal of Academic Librarianship 30, pp. 265-269
[9] Facilitating Interdisciplinary Research, The National Academies Press, 2004
[10] FELTER L.M. (2005): Google Scholar, Scirus and the scholarly search revolution, Searcher 13, pp. 43-48, http://www.scirus.com/press/pdf/searcher_reprint.pdf
[11] GARFIELD E. (1954): Association of ideas techniques in documentation: Shepardizing the literature of science, Research Information Center, National Bureau of Standards, http://www.garfield.library.upenn.edu/papers/assocofideasy1954.html
[12] GARFIELD E. (1972): Citation Analysis as a Tool in Journal Evaluation: Journals can be ranked by frequency and impact of citations for science policy studies, Science 178, no. 4060, pp. 471-479
[13] GARFIELD E. (1979): Is citation analysis a legitimate tool? ISI Press, Philadelphia
[14] GARFIELD E. (1996): SCI Journal Citation Reports: A bibliometric analysis of science journals in the ISI Database, Institute for Scientific Information, Philadelphia
[15] GARFIELD E.: History of citation indexing, (online) http://scientific.thomson.com/knowtrend/essays/citationindexing/history/
[16] HARZING A.W. (2008): Google Scholar - a new data source for citation analysis, http://www.harzing.com/pop_gs.htm
[17] KLEINBERG J.M. (1999): Authoritative sources in a hyperlinked environment, Journal of the ACM 46, pp. 604-632
[18] KNUTH D.E. (1968): The Art of Computer Programming, Volume 1: Fundamental Algorithms, Addison-Wesley Publ. Co., Reading, Massachusetts, Amsterdam-London-Tokyo
[19] KOUSHA K. and THELWALL M. (2006): Sources of Google Scholar citations outside the Science Citation Index: a comparison between four science disciplines, 9th International Conference on Science and Technology Indicators, September 2006, Leuven, Belgium
[20] KOUSHA K. and THELWALL M. (2008): Sources of Google Scholar citations outside the Science Citation Index: A comparison between four science disciplines, Scientometrics 74, pp. 273-294
[21] LEYDESDORFF L. (2008): Caveats for the use of Citation Indicators in Research and Journal Evaluations, Journal of the American Society for Information Science and Technology 59, pp. 278-287
[22] LIPITAKIS A. (2005): On certain Strategic Management methodologies of E-Business in contemporary micro-medium sized Publishing firms, HERCMA 2005 Conference Procs, LEA Publishers, Athens, Greece
[23] LIPITAKIS A. (2007): Adaptive Algorithmic Methods and Dynamical Singular Perturbation Techniques for Strategic Management Methodologies, HERCMA 2007 Conference Procs, LEA Publishers, Athens, Greece
[24] LIPITAKIS A. (2007): Adaptive algorithmic schemes for e-service strategic management methodologies: Case studies on Knowledge Management, ICEBE/SOKM 2007 Conference Procs, IEEE Intl. Conf. on E-Business Engng, 2007, Hong Kong, China
[25] LIPITAKIS A. and PHILLIPS P. (2007): E-Business Strategies and Adaptive Algorithmic Schemes, HERCMA 2007 Conference Procs, LEA Publishers, Athens, Greece
[26] LIPITAKIS A. (2008): On the algorithmic treatment of e-business problems and strategy management methodologies, WorldComp’08: World Congress in Computer Science, Computer Engng & Applied Computing, Las Vegas, U.S.A., July 2008
[27] LIPITAKIS E.A.: A universal iterative solver based on the Euclid’s algorithm for the numerical solution of general type Partial Differential Equations, in ‘Computer Mathematics and its Applications’, pp. 597-642, LEA Publishers, Athens, 2006
[28] LIPITAKIS EV.A.E.C.: A Study on the comparison Citation Systems by using the Google Scholar and Web of Knowledge Search Engines and Databases, M.Sc. Thesis, KBS, University of Kent, England, 2008
[29] LYTRAS M., POULOUDI A. and POULIMENAKOU A. (2002): Knowledge Management convergence: Expanding Learning Frontiers, Journal of Knowledge Management 6, pp. 40-51
[30] MEHO L.I. and YANG K. (2007): Impact of data sources on citation counts and ranking of LIS faculty: Web of Science vs. Scopus and Google Scholar, J. Am. Soc. Inform. Sci. Tech. 58, pp. 2105-2125
[31] MEHO L.I. and YANG K. (2007): A new era in citation and bibliometric analyses: Web of Science, Scopus and Google Scholar, Journal of the American Society for Information Science and Technology 58, pp. 2105-2125
[32] MIZOGUCHI R. (1993): Knowledge acquisition and ontology, Procs KB & KS 1993, Tokyo, pp. 121-128
[33] MYHILL M. (2005): Google Scholar review, Charleston Advisor 6, pp. 49-52, (online) http://www.charlestonco.com/review.cfm?id=225
[34] NEUHAUS C., NEUHAUS E. and ASHER A. (2008): Google Scholar goes to School: The presence of Google Scholar on College and University Web Sites, The Journal of Academic Librarianship 34, pp. 39-51
[35] NOTESS G.R. (2002): Search engine statistics: relative size showdown, Sept. 2002
[36] PHILLIPS P.A. (1996): Strategic planning and business performance in the UK Hotel sector: Results of an exploratory study, Intl Journal of Hospitality Management 15, pp. 347-362
[37] PHILLIPS P. and MOUTINHO L. (2000): The Strategic Planning Index (SPI): A tool for measuring strategic planning effectiveness, Journal of Travel Research 32, no. 2, pp. 369-379
[38] SEDGEWICK R. (1984): Algorithms, Addison-Wesley Publishing Co., Massachusetts-London
[39] SIDIROPOULOS A. and MANOLOPOULOS Y. (2005): A new perspective to automatically rank scientific conferences using digital libraries, Information Processing & Management 41, pp. 289-312
[40] SIDIROPOULOS A. and MANOLOPOULOS Y. (2006): Generalized comparison of graph-based ranking algorithms for publications and authors, Journal of Systems and Software 79, Elsevier Science Inc., pp. 1679-1700
[41] SMITH A.G. (2008): Benchmarking Google Scholar with the New Zealand PBRF research assessment exercise, Scientometrics 74, pp. 309-316
[42] STRAUB D. (1989): Validating instruments in MIS research, MIS Quarterly 13, pp. 147-169
[43] SWARTOUT W. et al (1993): Knowledge sharing: Prospects and Challenges, Procs KB & KS 1993, Tokyo, pp. 95-102
[44] TEOMA (2002a): Teoma’s History, Dec. 2002, http://wwwsp.teoma.com/docs/teoma/about/developmentteamhistory.html
[45] THELWALL M. (2007): Bibliometrics to Webometrics, Journal of Information Science 34, pp. 1-18
[46] TIKHONOV A.N. and ARSENIN V.Y. (1963): Methods for solving ill-posed problems, Doklady AN SSSR 153, 1
[47] VAUGHAN L. and THELWALL M. (2003): Scholarly use of the Web: What are the key inducers of links to journal Web sites? Journal of the American Society for Information Science and Technology 54, pp. 29-38
[48] VOORHEES E.M. and HARMAN D. (2001): Overview of TREC 2001, NIST Special Publication 500-250: The 10th Text Retrieval Conference, pp. 1-15, Dec 2002, http://trec.nist.gov/pubs/trec10/papers/overview_10.pdf
[49] WHEELER B.C. (2002): NEBIC: a dynamic capabilities theory for assessing Net-enablement, Information Systems Research 13, pp. 125-146
[50] WHITE B. (2006): Examining the claims of Google Scholar as a serious information source, New Zealand Library & Information Management Journal 50, pp. 11-24
[51] ZHU K., KRAEMER K.L., XU S. and DEDRICK J. (2004): Information technology payoff in e-business environments: an international perspective on value creation of e-business in the financial services industry, Journal of Management Information Systems (JMIS) 21, pp. 17-54
[52] GOOGLE SCHOLAR: http://scholar.google.com/
[53] About Google Scholar: http://scholar.google.com/scholar/about.html
[54] About SCOPUS: http://www.info.scopus.com/aboutscopus/contentcoverage/index.shtml
[55] About Scirus: http://www.scirus.com/srsapp/aboutus/