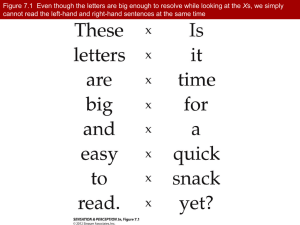

4 A Computational Model of the Attentional Blink
