3. Bigram language model


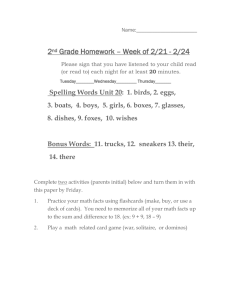

Automatic Tamazight spelling correction using noisy channel model and bigram language model

Said Gounane1, Mohamed Fakir2, Belaid Bouikhalen3

1 Dep. of Computer Sciences – FST Beni Mellal, Morocco. Gounane.said@gmail.com

2 Dep. of Computer Sciences – FST Beni Mellal, Morocco. fakfad@yahoo.fr

3 Dep. of Computer Sciences – Multidisciplinary Faculty, Beni Mellal, Morocco. bouikhalen@yahoo.fr

Abstract—In this work we present an application of the noisy channel model and the bigram language model to Tamazight spelling auto-correction. Texts are written in Tifinagh characters. The Tamazight language is modelled by a bigram language model trained on a small corpus extracted from the IRCAM website. The noisy channel algorithm proposes candidates from the corpus for the misspelled word, then the bigram language model selects the appropriate correction given the two words surrounding the error in the sentence.

Keywords—spelling error auto-correction, noisy channel model, bigram language model, Bayes rule, Tifinagh characters.

1. Introduction

All modern word processors use spelling error detection and correction algorithms. These algorithms are also used in optical character recognition (OCR) and on-line handwriting recognition, where they improve the recognition rate by relying on a language model in addition to the information extracted from text images.

A spelling error can be a non-word error or a real-word error. The first kind is detected easily by looking the word up in a dictionary: if it does not appear, it is a misspelling of an existing (real) word. From that misspelled word one can generate many hypotheses by a single transformation (deletion, insertion, transposition or replacement), then choose the word most likely to have been mistyped as the misspelled word (noisy channel algorithm). The second type of error needs the surrounding words to decide whether a word has been mistyped as another real word.
In that case we use the N-gram language model; a special case is the bigram language model, where we use only the two words surrounding the error.

2. Noisy channel model

The noisy channel algorithm was first proposed by Kernighan et al. (1990) [2]. This algorithm is made up of two stages:

1. Proposing candidate corrections (c) for the observed error (o).
2. Scoring these candidates.

5ème conférence internationale sur les TIC pour l’amazighe

2.1. Minimum edit distance

The minimum edit distance between two words is the minimum number of editing operations (insertion, deletion, substitution) needed to transform one into the other [1]. Each of these editing operations can be assigned a cost. The particular case used in this work is the Levenshtein distance, where an insertion or a deletion has a cost of 1 and, as the examples below show, a substitution is counted as one deletion plus one insertion (cost 2). For example, the Levenshtein distance between ⴰⴰⴰⴰ and ⴰⴰⴰⴰ is 2 (one substitution, that is, one deletion and one insertion), and between ⴰⴰⴰⴰⴰⴰ and ⴰⴰⴰⴰⴰ it is 7 (three substitutions and one deletion).

2.1. Proposing candidates

Since most spelling errors are at a minimum edit distance of one or two [1], we suppose that the correct word differs from the misspelled word by a single transformation (insertion, deletion, substitution or transposition). Any word in the corpus that results from a single transformation of the misspelled word is in the candidate set C. For example, the word ⴰⴰⴰⴰ could be a misspelling of ⴰⴰⴰⴰ, ⴰⴰⴰⴰ or ⴰⴰⴰⴰ. The scoring stage then selects one word, or a list of the most appropriate words.

2.2.
Scoring candidates

The score of a candidate word c is the probability P(c/o), which can be computed using Bayes' rule:

$$P(c/o) = \frac{P(o/c)\,P(c)}{P(o)} \tag{1}$$

The most likely correction is:

$$\hat{c} = \underset{c \in C}{\arg\max}\ \frac{P(o/c)\,P(c)}{P(o)} \tag{2}$$

Since P(o) does not depend on c, equation (2) becomes:

$$\hat{c} = \underset{c \in C}{\arg\max}\ P(o/c)\,P(c) \tag{3}$$

The term P(c) is the probability of drawing the word c from the corpus. It is obtained by counting the occurrences of c in the corpus and normalizing by the total number of tokens N in the same corpus:

$$P(c) = \frac{\mathrm{Count}(c)}{N} \tag{4}$$

To avoid zero probabilities we use the add-one smoothing technique:

$$P(c) = \frac{\mathrm{Count}(c) + 1}{N + V} \tag{5}$$

where V is the size of the vocabulary in our corpus.

P(o/c) is the probability that the word c is misspelled as o. This probability depends on who the typist is and how familiar they are with the keyboard, so it cannot be computed exactly. To get around this problem we use the technique of Kernighan et al. [2] and create a confusion matrix for each editing operation. These matrices record the number of times one letter was incorrectly used instead of another:

Del[x,y]: the number of times xy was typed as x.
Ins[x,y]: the number of times x was typed as xy.
Sub[x,y]: the number of times x was typed as y.
Trans[x,y]: the number of times xy was typed as yx.

Using these matrices one can estimate P(o/c) as:

$$P(o/c) = \begin{cases} \dfrac{\mathrm{del}[c_{i-1}, c_i]}{\mathrm{count}[c_{i-1} c_i]} & \text{if deletion} \\[2ex] \dfrac{\mathrm{ins}[c_{i-1}, o_i]}{\mathrm{count}[c_{i-1}]} & \text{if insertion} \\[2ex] \dfrac{\mathrm{sub}[o_i, c_i]}{\mathrm{count}[c_i]} & \text{if substitution} \\[2ex] \dfrac{\mathrm{trans}[c_i, c_{i+1}]}{\mathrm{count}[c_i c_{i+1}]} & \text{if transposition} \end{cases} \tag{6}$$

where i is the position in the word c of the transformation that produces the error o.

2.3. Noisy channel algorithm

1. Count the number of tokens N and the vocabulary size V in the corpus.
2. If a given word o is not in the vocabulary:
   a. From o, generate all possible words using a single deletion, insertion, substitution or transposition.
   b.
The set C is made up of the generated words that belong to the vocabulary.
   c. For each word c in C, compute P(o/c) using (6).
   d. The proposed correction of the word o is given by (3).

3. Bigram language model

The noisy channel algorithm can fail to return the appropriate word because it uses no information about the other words in the sentence. It deals only with the misspelled word and tries to figure out the correct one using only single-word frequencies in the corpus. For example, the misspelled word ‘ⴰⴰⴰⴰⴰⴰⴰ’ in the sentence ‘ⴰⴰⴰⴰⴰⴰ ⴰⴰⴰⴰⴰⴰ ⴰ ⴰⴰⴰⴰⴰⴰ ⴰⴰⴰⴰⴰⴰⴰ’ is corrected as ‘ⴰⴰⴰⴰⴰⴰ’ instead of ‘ⴰⴰⴰⴰⴰⴰⴰⴰ’, just because the first word is more frequent in the corpus than the second one, and because the insertion of ‘ⴰ’ is more frequent than its deletion.

3.1. N-gram language model

The N-gram approach to spelling error detection and correction was proposed by Mays et al. (1991). As described in [1], the idea is to generate every possible misspelling of each word in a sentence, either by typographical modifications alone (letter insertion, deletion, substitution, transposition) or by including homophones as well (and presumably including the correct spelling), and then to choose the spelling that gives the sentence the highest prior probability. That is, given a sentence $W = w_1, w_2, \ldots, w_k, \ldots, w_n$, where $w_k$ has alternative spellings $w'_k$, $w''_k$, etc., we choose among these possible spellings the one that maximizes P(W). In general, the probability of a sentence (sequence of words) is given by the chain rule as follows:

$$P(w_1, w_2, \ldots, w_n) = P(w_1^n) = \prod_{k=1}^{n} P(w_k / w_1^{k-1}) \tag{7}$$

The terms $P(w_k / w_1^{k-1})$ are approximated using the Markov assumption:

$$P(w_k / w_1^{k-1}) \approx P(w_k / w_{k-N+1}^{k-1}) \tag{8}$$

The N-gram model approximates the probability of a word given all the previous words, $P(w_k / w_1^{k-1})$, by its conditional probability given only the N-1 preceding words, $P(w_k / w_{k-N+1}^{k-1})$.

3.2.
Bigram language model

In the particular case N = 2, the bigram language model assigns a probability to a sentence (a string of words $w_1, w_2, \ldots, w_n$), whether to compute the probability of a whole sentence or to predict the next word in part of a sentence, as follows:

$$P(w_1, w_2, \ldots, w_n) = P(w_1^n) \approx \prod_{k=1}^{n} P(w_k / w_{k-1}) \tag{9}$$

For example:

P(ⴰⴰⴰⴰⴰⴰ ⴰ ⴰⴰⴰⴰⴰⴰⴰⴰⴰ) = P(ⴰⴰⴰⴰⴰⴰ/<s>) P(ⴰ/ⴰⴰⴰⴰⴰⴰ) P(ⴰⴰⴰⴰⴰⴰⴰⴰⴰ/ⴰ)

The symbol <s> indicates the beginning of the sentence.

4. Algorithm

1. Count the number of tokens N and the vocabulary size V in the corpus.
2. For a given sentence S:
3. For each word o in S:
4. If o is not in the vocabulary:
   a. From o, generate all possible words using a single deletion, insertion, substitution or transposition.
   b. The set C is made up of the generated words that belong to the vocabulary.
   c. For each word c in C:
      Compute P(o/c) using (6).
      Replace o by c in S and compute P(S) using (9).
      Score = P(o/c) P(S).
   d. The proposed correction of the word o is the word c with the highest score.

5. Application and Results

The most influential factor in this work is the corpus used to compute all the probabilities. If the corpus used in the training stage is too specific to a domain, the probabilities will not generalize well to new test sentences, and vice versa. As a starting point, the corpus used is extracted from the IRCAM website. This corpus has N = 3322 tokens and a vocabulary of size V = 893. The algorithms are tested using a Java program. As shown in Fig. 1, the algorithm detected the misspelled word ‘ⴰⴰⴰⴰⴰⴰⴰ’ and proposed the right correction ‘ⴰⴰⴰⴰⴰⴰⴰⴰ’. The accuracy is about 32%. This is due to the small corpus and to the language model used (bigram), which cannot adequately model the sentences of the language.

Fig. 1

6.
Conclusion

In this work, we have presented automatic spelling correction applied to the Tamazight language written in Tifinagh. We used the noisy channel algorithm and the bigram language model. The most important issue is the corpus design.

References

[1] Jurafsky D. and Martin J. H. (2000). Speech and Language Processing. Prentice Hall.

[2] Kernighan M. D., Church K. W. and Gale W. A. (1990). A Spelling Correction Program Based on a Noisy Channel Model. AT&T Bell Laboratories.
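
As an illustration of the correction procedure in Section 4, the following is a minimal Python sketch, not the authors' Java program. It rests on several assumptions that are not in the paper: a toy Latin-script corpus stands in for the Tifinagh corpus, the channel probability P(o/c) is taken to be uniform because no confusion matrices are available here, and add-one smoothing is applied to the bigram counts. All function names (`train`, `single_edits`, `bigram_prob`, `sentence_prob`, `correct`) are hypothetical.

```python
from collections import Counter

# Toy Latin-script corpus: an assumption standing in for the Tifinagh corpus.
CORPUS = [
    "the dog sat on the log",
    "the dog saw the cat",
    "the cat sat on the mat",
    "a log fell on the dog",
]
ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def train(sentences):
    """Count unigrams and bigrams; <s> marks the beginning of a sentence."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split()
        unigrams.update(words)
        bigrams.update(zip(words, words[1:]))
    return unigrams, bigrams


def single_edits(word):
    """Strings one deletion, insertion, substitution or transposition away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [a + b[1:] for a, b in splits if b]
    transposes = [a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1]
    substitutes = [a + ch + b[1:] for a, b in splits if b for ch in ALPHABET]
    inserts = [a + ch + b for a, b in splits for ch in ALPHABET]
    return set(deletes + transposes + substitutes + inserts) - {word}


def bigram_prob(prev, word, unigrams, bigrams, vocab_size):
    """P(word / prev) with add-one smoothing (smoothing here is an assumption)."""
    return (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)


def sentence_prob(words, unigrams, bigrams, vocab_size):
    """Equation (9): product of bigram probabilities over the sentence."""
    prob = 1.0
    for prev, word in zip(["<s>"] + words, words):
        prob *= bigram_prob(prev, word, unigrams, bigrams, vocab_size)
    return prob


def correct(sentence, unigrams, bigrams):
    """Replace each out-of-vocabulary word by its best-scoring candidate."""
    vocab = set(unigrams) - {"<s>"}
    words = sentence.split()
    for i, observed in enumerate(words):
        if observed in vocab:
            continue
        candidates = sorted(single_edits(observed) & vocab)
        if not candidates:
            continue  # no in-vocabulary word is a single edit away
        # Score = P(o/c) * P(S); P(o/c) is assumed uniform, so only P(S) matters.
        words[i] = max(
            candidates,
            key=lambda c: sentence_prob(
                words[:i] + [c] + words[i + 1:], unigrams, bigrams, len(vocab)
            ),
        )
    return " ".join(words)


if __name__ == "__main__":
    unigrams, bigrams = train(CORPUS)
    print(correct("a cog fell on the mat", unigrams, bigrams))
```

In this toy corpus "dog" is more frequent than "log", so a score based on single-word frequency alone would prefer "dog" for the error "cog". The bigram context ("a" before, "fell" after) favours "log" instead, which is the behaviour Section 3 motivates. Plugging in estimates of P(o/c) from confusion matrices, as in equation (6), would only change the scoring expression inside `correct`.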