Towards an Open Source Repository

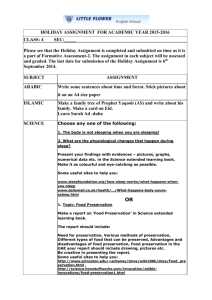
