The Dean Position @ NHA


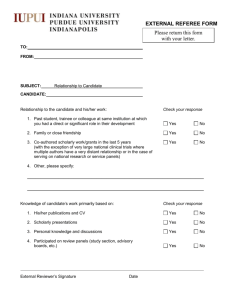

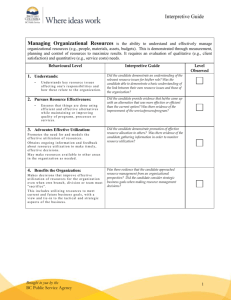

Data Competency Interview Guide

Measurement, Research & Accountability

A second competency interview that you may wish to perform relates to the candidate’s ability to interpret and use data. Data is an important component of our ability to assess the capability of our teachers. In this exercise, candidates can be presented with NWEA data or state test scores. Candidates are asked to make deductions from this information and, in particular, to note the interventions they would propose to address deficiencies.

You will want to go column by column through the data and ask candidates to explain what each piece of information means. They should be able to tell you what the information says about the performance of individual students or of the cohort as a whole. It is often important at this stage to discuss benchmarking and to understand how candidates quantify growth in their current school.

Most candidates will be unfamiliar with NWEA data. In these cases, you may need to give candidates some basic information about RIT scores and the way the information is presented before they can use it accurately. You may find that what you end up assessing is how easily the candidate will learn to read NWEA data given proper instruction. In the end, you want to come out of the competency interview with an assessment of how comfortable the candidate is using data and whether the candidate is likely to embrace NHA’s methods of quantifying student achievement. It is best to use real data from your school so that you can discuss real situations with the candidate.

To further assist with interviewing aspiring Deans, the following three instruments are offered:

Interview Form: The interview form identifies the four (4) areas of interview questioning: 1. Federal and State Accountability, 2. Testing and Assessment, 3. School Improvement Planning, and 4. Educational Statistics.
Data Analysis Rubric: A rubric the interviewer fills in to summarize findings and select a rating level from 1 to 5. Normally, an acceptable Dean candidate would score at least a “3” to be considered viable.

Terms and Definitions: A glossary to help us use consistent language when we talk about measurement, instruction, testing, and assessment.

Purpose: To assess a candidate’s ability to understand, use, and make deductions from student performance data. Not all candidates will be expert users of school and student achievement data, so in these cases time should be spent assessing the candidate’s aptitude for learning and adopting NHA practices given proper instruction. Special attention should be given to the candidate’s current knowledge and skill in the following areas:
- Understanding student and school achievement data
- Observing, monitoring, coaching, and evaluating teachers
- Aligning performance data with the curriculum and helping teachers set improvement goals and identify strategies, including different strategies for high and low achievers
- Knowing the difference between state proficiency and academic growth
- Articulating their understanding of state grade-level content expectations, benchmarks, etc.
- Pointing to previous experiences of supervising others, building team motivation, and expressing accountability in a positive light with sincerity. Do they really believe accountability is a good thing?
- If a candidate does not have a statistical background, do they appear to catch on quickly?

Interviewing can be two-way communication. We can find out the knowledge and skill of the candidate, and the candidate can learn what we think is important and what data NHA will provide to help them be successful. We need to know whether they value using data and analytics to make decisions.
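The difference between state proficiency and academic growth, listed above among the skills to probe, can be illustrated with a minimal sketch. The cut score of 210 and both students’ scores are hypothetical, chosen only to show that proficiency is a status at one point in time while growth is a change between two test events.

```python
from dataclasses import dataclass

# Hypothetical proficiency cut score; real state cut scores vary by
# state, grade, and subject.
PROFICIENCY_CUT = 210


@dataclass
class Student:
    name: str
    fall_score: int    # score at the first test event
    spring_score: int  # score at the second test event


def is_proficient(score: int, cut: int = PROFICIENCY_CUT) -> bool:
    """Proficiency is a status: did a single score reach the cut?"""
    return score >= cut


def growth(student: Student) -> int:
    """Growth is a change: how far did the student move between test events?"""
    return student.spring_score - student.fall_score


students = [
    Student("Student A", fall_score=225, spring_score=227),  # high status, little growth
    Student("Student B", fall_score=185, spring_score=201),  # low status, strong growth
]

for s in students:
    print(f"{s.name}: proficient in spring = {is_proficient(s.spring_score)}, "
          f"growth = {growth(s)} points")
```

Student A is proficient but grew only 2 points; Student B is not yet proficient but grew 16 points. A candidate who looks only at proficiency would miss Student B’s progress and Student A’s stall.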
Measurement, Research & Accountability Dean Interview

Interviewee: Candidate Name:
Interviewed by: Name(s):
Date: Time:

Interview Goal: To determine the prior experiences of the candidate and to understand the candidate’s knowledge base in the areas of Federal and State Accountability Systems, Testing and Assessment, School Improvement Planning, and Educational Statistics.

National & State Accountability Systems
Question: What can you tell me about the Federal No Child Left Behind Act in terms of (A) the subjects it requires to be tested nationally, (B) what it requires by the year 2013-14, and (C) anything else you want to share about the law itself and what it is or is not accomplishing?
(A)
(B)
(C)

Question: What can you tell me about the State Accountability System with which you are familiar?
Notes: “Listen For…”
- State/Accountability System Name
- Subjects/Grades Tested
- State Designations of School Performance (High/Med/Low)
- Knowledge of Sanctions for Schools Not Meeting Criteria for Success
- Acknowledges Political Nature Without Being Overly Critical (Schools should be accountable for performance)

Testing and Assessment
Question: What standardized test(s) are you familiar with or have you created?
Notes:

School Improvement Plans
Question: Express your opinion of the value of school improvement plans, describe the experiences you have had with SIPs, and describe the components of the SIPs you have personally been a part of.
Notes:
Level 1: Has NOT been part of a School Improvement Plan (SIP) at any time or cannot describe one in any detail.
Level 2: Can describe at least one experience using a SIP in some detail and expresses that SIPs are good to have in place, but has clearly not led such an initiative.
Level 3: Can describe at least one experience with a SIP in great detail, including pre- and post-testing and implementation strategies, and expresses high value in using an improvement model.
Level 4: Can describe more than one SIP experience or model.
Can share the specifics of the models, what they learned about student performance, and what intervention strategies were used.
Level 5: Shares a “No Excuses” mentality when it comes to student achievement. Can describe a SIP with each of its components: determine current status; plan for improvement based on student performance data; share data with teachers; create a team of teachers and others to implement and support the initiatives; use formative (intermediate) assessments to check progress along the way; and retest to document improvement, including all grades and content subjects. Can clearly lead a SIP initiative.

Educational Statistics
Question: How would you rank your training in educational quantitative statistics?
Scale: 1 “No Training”, 2, 3, 4 “Equal to Peers”, 5 “PhD Vanderbilt”

Question: Can you explain the following terms? (YES / NO for each)
1. Academic proficiency on state tests
2. Academic growth
3. Measures of central tendency
4. Mean/Median/Mode
5. Random sample
6. Raw vs. scale score
7. Cohort of students over time
8. Percentage
9. Percentile
10. Achievement gap
11. Regression analysis
12. Normal curve/normal distribution
13. Standard deviation
14. Standard error or SEM
Notes:

Data Scorecard
Dean Candidate:
Interviewer:
Date:

Rating & Comments: Analysis of Student Assessment Data (Skill/Discipline)
Skill Level Rating =
Explanation of Rating:
Level 1: Understands standardized testing, but is not able to interpret any descriptive statistics.
Level 2: Understands basic descriptive statistics (mean, percentage, range), but struggles to apply results to drive instruction.
Level 3: Understands more sophisticated descriptive statistics (percentile vs. percentage, median vs. mean).
Level 4: Uses cohort analysis (cross-grade scale), student growth metrics, scaled scores (vs. raw scores), data thresholds for performance goals (e.g., annual growth/proficiency goals), and achievement gap analysis (disaggregated subgroup analysis) to drive improvement, and is able to reconcile conflicting assessment results appropriately (norm-referenced vs. criterion-referenced test scales).
Level 5: In addition to Level 4, understands measurement error (multiple samples are needed to measure performance reliably), understands variation (articulates how they would measure variance in a sample distribution), and understands the difference between standard deviation and standard error (±2 SD vs. a 95% confidence interval).

Rating & Comments: Current Use of Assessment Data (Application & Implementation of Student Assessment Data)
Skill Level Rating =
Explanation of Rating:
Comments:
Level 1: Does not have clear plans for implementing improvements based on student assessment results.
Level 2: Has evaluated school quality by analyzing basic descriptive statistics, but made limited improvement efforts.
Level 3: Has evaluated school quality by analyzing basic descriptive statistics and implemented a school improvement initiative, but struggles to measure the benefit of the initiative (no post-test analysis).
Level 4: Has carried out isolated improvement efforts with a full P-D-S-A cycle, including pre- and post-test analysis.
Level 5: Implements continuous P-D-C-A school improvement efforts and sustains gains; assesses impact across content areas and grade levels; shows a “No Excuses” mentality regarding student assessment results.

Level/Score: Candidate Average/Total Score:

Combined Student Assessment Skill Level = (see the level descriptions below)
Level 1: the candidate clearly does not have the skills required and does not demonstrate the desire or ability to learn.
Level 2: the candidate clearly does not have the skills required but shows some interest in learning; ability and capacity are questionable.
Level 3: the candidate has a basic understanding of the skills required, but cannot demonstrate how to apply or replicate desired results; ability and desire to learn exist at a moderate level, and the candidate is viewed as somewhat “coachable.”
Level 4: the candidate has a good working base for the skills required and in most situations can replicate and apply the skills to a positive result; capacity to learn is good, and the candidate shows a strong desire to learn and be coached.
Level 5: the candidate has clearly demonstrated mastery or near-mastery of the skill and can apply it in any situation with demonstrated positive results; the candidate not only looks for improvement and development opportunities but could also serve as a coach in the specific skill.

General Rule of Thumb: Assistant Principal at Level 2 or higher; Principal at Level 3 or higher.
Comments:

“AT A GLANCE” NWEA/MAP Terminology

NWEA – The Northwest Evaluation Association (NWEA) is a national non-profit organization dedicated to helping all children learn. NWEA provides research-based educational growth measures, professional training, and consulting services to improve teaching and learning.

MAP – MAP is a criterion-referenced, nationally normed, computerized adaptive assessment in reading, math, and language usage for grades 2-8. It is also offered in science as a single adaptive assessment that measures students in two areas: general science, and concepts and processes. MAP is not a “mastery” test, and students are not expected to get every question right. In fact, the best measurement is obtained when a student gets about 50% of the questions right and 50% wrong.

MAP Primary Grade Assessment (PGA) – MAP for Primary Grades consists of diagnostic, computerized adaptive assessments in reading and mathematics, specifically tailored to the needs of early learners (grades K-1).

RIT (Rasch Unit) – A growth measure for students. A RIT score is grade-independent: it is not tied to a student’s grade.
The RIT score is used to determine the instructional level of students. The RIT scale is an equal-interval scale, much like a ruler or meter stick, and all test items are placed on the scale according to their level of difficulty.

Lexile Range – A Lexile is a unit for measuring text difficulty and reader comprehension. Students are considered to be at an appropriate level when they can comprehend approximately 75% of the material they read. This keeps students from being either frustrated or bored, stimulating their learning while rewarding their current reading abilities. The Lexile range is a resource that allows teachers to use a student’s RIT score to find appropriately challenging books, periodicals, and other reading material.

Proficiency – A student is considered “proficient” or “at grade level” on an NWEA MAP test when s/he scores at the 50th percentile or higher on a given test.

Growth – Change in student achievement over time.

Median – The middle score in an ordered list of scores; the point at which half the scores are above and half are below.

Mean – The arithmetic average of a group of scores: their sum divided by the number of scores.

DesCartes – A continuum of learning that translates test scores into skills and concepts students may be ready to learn. It orders specific reading, language usage, mathematics, and science skills and concepts by achievement level, with a separate section for each subject (mathematics, reading, language usage, and science). The goal strands are broken down into ten-point RIT bands. Within each band, sub-categories that further divide the content of the goal area break down the skills and concepts found in the NWEA item banks.
Uses for DesCartes include targeting and individualizing instruction, creating instructional groups, monitoring student progress, sharing resources, conferencing with students and parents, and partnering with parents and support staff for enrichment.

Typical Growth – We can use the norms to define the “typical” amount of growth commonly experienced by students. Typical growth is best thought of as the average amount of growth attained by students with the same starting RIT value, grade, and subject. Students whose growth equals their NWEA typical growth value have grown as much as the average student in the norming sample under the same conditions (starting RIT, grade, subject). The terms “typical” and “average” are preferred over “expected” and “target,” which ignore how typical growth values are calculated. Teachers and students should work together to set growth goals that exceed the typical values in the norms.

Equal Interval – The RIT scale is, in principle, unbounded, but most student scores fall between 140 and 300. Like meters or pounds, the scale is equal-interval, meaning that the distance between 170 and 180 is the same as the distance between 240 and 250.

Norm Group – In 2008, NWEA completed its most recent norming study, designed to describe student achievement status and growth along the RIT scales. The study reports the mean and median achievement values for students at each grade level (grades K-11) for both fall and spring, and it included over 2 million students across the states.

Percent – A number out of 100.

Percentile – How well a student performed compared to others: a student at the 60th percentile scored as well as or better than about 60% of the comparison (norm) group.

Triangulation – Using at least three data points when making critical decisions about students. No single assessment gives educators an overall picture of a student’s achievement or addresses every purpose, so it is imperative that we use data from multiple, appropriate sources when making decisions about students.
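Several of the terms defined above (Mean, Median, Percent, Percentile, Growth, Typical Growth) are simple arithmetic, and working through them is a quick way to check whether a candidate can keep percent and percentile apart. The sketch below uses a hypothetical comparison group of ten RIT scores and a hypothetical typical-growth value of 8 RIT points; neither comes from NWEA norms, and the simple “at or below” percentile formula is an assumption (published norms may compute percentiles differently).

```python
import statistics


def percent_correct(correct: int, total: int) -> float:
    """Percent: a number out of 100, here the share of items answered correctly."""
    return 100 * correct / total


def percentile_rank(score: float, comparison_scores: list[float]) -> float:
    """Percentile: percentage of the comparison group scoring at or below `score`."""
    at_or_below = sum(1 for s in comparison_scores if s <= score)
    return 100 * at_or_below / len(comparison_scores)


def growth_vs_typical(fall_rit: float, spring_rit: float,
                      typical: float) -> tuple[float, float]:
    """Return (observed growth, observed growth minus typical growth).

    `typical` would normally be looked up in the norms for the student's
    starting RIT, grade, and subject; here it is simply passed in.
    """
    observed = spring_rit - fall_rit
    return observed, observed - typical


# Hypothetical comparison group of RIT scores (illustrative only, not NWEA norms).
group = [180, 190, 195, 200, 202, 205, 208, 210, 215, 240]

print("mean:", statistics.mean(group))      # 204.5, pulled up by the 240
print("median:", statistics.median(group))  # 203.5, middle of the sorted list
print("percentile of 205:", percentile_rank(205, group))  # 60.0
print("percent correct:", percent_correct(26, 52))        # 50.0

# Hypothetical typical-growth value of 8 RIT points.
print(growth_vs_typical(198, 207, typical=8))  # (9, 1)
```

Percent correct and percentile answer different questions. Because MAP aims every student at roughly 50% correct, percent correct says little about standing, while the percentile places the score within a comparison group. Subtracting fall from spring RIT to get growth is meaningful only because the RIT scale is equal-interval.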
Rigor – Providing instruction that is above a student’s current level of achievement.

Instructional Level – The items associated with the RIT score a student achieves indicate the student’s instructional level. The RIT score represents items that the student answers correctly about half of the time. Therefore, the data is relative to the student’s current learning and does not represent “mastery” of those items. The instructional level is a starting point that tells the teacher where to begin.

Contact person regarding these materials and interviewing Dean candidates about data:
Ed Richardson, Director of MRA
erichardson@nhamail.com
(616) 717-8192
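The Instructional Level entry, and the note under MAP that the best measurement comes when a student answers about 50% of the questions correctly, both follow from the Rasch model that gives the RIT (Rasch Unit) its name. The sketch below assumes the standard one-parameter Rasch formula and the commonly cited scaling of 10 RIT points per logit; it is an illustration of the idea, not NWEA’s scoring code, and the student and item values are hypothetical.

```python
import math

# Assumption: RIT is a linear rescaling of Rasch logits with 10 RIT points
# per logit. Only the difference between student and item matters, so any
# constant offset in the scale cancels out.
RIT_PER_LOGIT = 10


def p_correct(student_rit: float, item_rit: float) -> float:
    """Rasch-model probability that a student answers an item correctly."""
    logit_gap = (student_rit - item_rit) / RIT_PER_LOGIT
    return 1 / (1 + math.exp(-logit_gap))


student = 210  # hypothetical student RIT score
for item in (190, 200, 210, 220, 230):  # hypothetical item difficulties
    print(f"item at RIT {item}: P(correct) = {p_correct(student, item):.2f}")
```

Items at the student’s own RIT are answered correctly half the time, easier items more often, and harder items less often. An adaptive test that keeps choosing items near this 50% point is measuring the student’s instructional level, which is why a MAP score does not signal mastery of those items.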