survey_final

advertisement

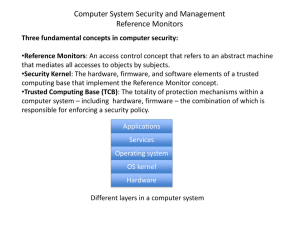

Kernel Synchronization in Linux. Professor: M.Khalil Students: K. Bean and W. Jaffal CSE 8343 1. Abstract This survey paper introduces a kernel synchronization technique implemented by Linux, a very popular and relatively new Unix-like operating system. The presented work considers uniprocessor and multiprocessor environments supported by Linux. 2. Introduction As part of his computer science project at the University of Helsinki (1991), Linus Torvalds developed Linux to serve as an operating system for IBM-compatible personal computers (based upon the Intel 80386 microprocessor) [ME02]. This work was presented in 1997 as a Master’s thesis entitled “Linux, a Portable Operating System” by L. Torvalds [LinTor97]. Modern versions of Linux are now available on other architectures such as Alpha, SPARC, Motorola MC680x0, PowerPC, and IBM System/390. In contrast with the Windowsbased operating systems, Linux is not a commercial operating system. Its source code under GNU General Public License is available to the public and is downloadable via http://www.kernel.org/. In the “Kernel Control Path” section of this paper, problems that could occur during kernel control path interleaving are investigated. In “Synchronization Techniques,” different techniques to avoid race conditions are considered. In “Linux Multiprocessor Architecture,” the SMP 1 multiprocessor architecture is compared with other architectures. At the end of this section, process synchronization on hardware level is considered. In “The Linux/SMP Kernel”, Linux support of multiprocessor environment is described. 3. Kernel Control Path Kernel processes execute due to the following reasons [BC00]: A process executing in User Mode caused some exception (i.e., divide by zero); An external device sent a signal to a Programmable Interrupt Controller using an IRQ, with a corresponding interrupt being enabled. According to [BC00], a kernel control path is the sequence of instructions executed in Kernel Mode to handle a kernel request. A kernel control path can handle various situations: system calls, exceptions or interrupts. When one of the following conditions occurs, the CPU does not execute a kernel control path sequentially: A context switch occurs. An interrupt occurs. CPU starts processing another kernel control path. A race condition (outcome of some computation depends on how two or more processes are scheduled) can occur not only when a user process is preempted by a higher priority process, but when a CPU interleaves a kernel control path. 2 4. Synchronization Techniques 4.1 Non-preemptability of a Process in Kernel Mode According to [BC00], the Linux kernel is not preemptive; a higher-priority process cannot preempt a process running in Kernel Mode. The following assertions always hold true for a running process in Kernel Mode within Linux: The process cannot be replaced by another process, except when the former voluntary relinquish control of the CPU. Interrupt or exception handling can preempt a running process; however, when an interrupt handle terminates, the process resumes. Only a kernel control path can interrupt another kernel control path. 4.2 Atomic Operation As defined in [BC00], an atomic operation is an operation performed by executing a single assembly language instruction; therefore, this instruction cannot be interrupted in the middle. C language operations such as a = a + 1 or a++ could be implemented by a compiler as single atomic operations or it could be compiled into more complicated code. To guarantee that some operations compiled every time as single atomic operations, Linux kernel provides special functions such as: 3 atomic_int(v) v++ atomic_dec(v) v— and so on. For more complete list of these functions, see [BC00]. 3.2 Interrupt Disabling The concept of a critical section - only a single process executes in this section – is introduced. Such a task could be very complicated to code as a single atomic operation. Interrupt disabling is one of the techniques implemented to ensure that a sequence of kernel statements is performed as a critical section. In this case, a kernel control path should not be interleaved while a hardware device issues an IRQ signal. This technique does not always prevent kernel control path interleaving. For example, a program executed in Protected Mode could raise a “Page Fault” exception. Because of its simplicity, interrupt disabling is widely used by kernel functions for implementing a critical region. Interrupts are disabled/enabled by assembly language instructions placed at the beginning and at the end of the section. To obtain a more detail description, one can use [BC00] as a reference. According to the same authors, a critical section should be short since any communication between CPU and I/O is blocked while a kernel control path is running in this section. 3.3 Locking Through Kernel Semaphores This locking technique allows a kernel process to access shared data (entering a critical section) only after acquiring a “lock.” A kernel control path releases the lock after leaving the critical section, allowing another kernel process to access shared data. 4 According to [BC00], Linux offers two kind of locking: Kernel semaphores, used by both uni-processor and multiprocessor systems; Spin Locks, used by only multiprocessor systems. Spin locks will be considered in a later section while introducing multiprocessor environments. Implementation kernel semaphore, described in [BC00], is an object of type structure semaphore, found within the include/asm/semaphore.h file, and has the following fields: count – an integer number. Possible values of count: o If count is greater than zero, the resource is currently available. o If count is less than or equal to zero, the semaphore is busy and a protected resource is currently unavailable. The absolute value of count should equal the number of kernel control paths waiting for resources. o If count is equal to zero, one kernel control path is using the resource and nothing is waiting for it to complete. The count field is decremented when a process acquires the lock and is incremented when the same process releases it. The value of count can be initialized to n, in this case n processes can concurrently access the resource. wait – the address of a wait queue list that contains all blocking processes that are currently waiting for the resource. If count is greater or equal than zero, the wait queue is empty. waking – ensures only one of the sleeping process in the waiting queue can wake up and acquire a resource. 5 When a process wishes to acquire a kernel semaphore lock, it invokes the down() function. The function decrements the count field, then checks if its value is negative. The decrement and test should be atomic operations; otherwise, another kernel control path can access the count value. If count is greater than or equal to zero, the current process enters the critical section; otherwise, the count is negative and the current process must be suspended and is inserted into the wait queue. Because several processes could wait for resource, it is necessary to choose the “winning” process via the waking field. When the releasing process (leaving critical section) wakes up the processes in wait queue, it increments the waking field. Each awakened process then enters a critical section of the down() function and a test to determine whether the waking field is positive is made. If the field is positive, it decrement waiting and acquire the resource, otherwise it goes back to sleep. When a process releases a kernel semaphore lock, it invokes the up() function. The function increments the count value and determines if its value is negative or positive. [These two operations must be atomic.] If the new value is positive (no process is waiting for a semaphore), the function terminates; otherwise, it must wake up the one of the processes that is currently waiting for a semaphore. Because each kernel control path usually needs to acquire just one semaphore at a time, Linux has few problems with deadlocks. To avoid the deadlock problem, semaphore requests are performed in the order given by its address: the semaphore request whose semaphore data structure is locked at the lowest address is issued first. 6 4. Linux Multiprocessor Architecture 4.1 Comparison of Different Multiprocessor Architectures Because multiprocessor computers were rare at the time, Linux was initially developed as a uni-processor operating system. Modern Linux versions support shared memory symmetric multiprocessors (SMP) architecture, executable on machine with a maximum of 32 CPUs. Because of this feature, Linux is a scalable operating system. According to [SGG], scalability is the capability of a system to adapt to an ever-increasing service (work) load. The following diagram (Figure 1) describes the typical organization of this machine. CPU 1 CPU n system bus Graphical card Memory Figure 1 According to [ME02], in a SMP machine, CPUs are connected to the shared system bus. System memory also connects to this bus. Communication between CPUs is accomplished through shared memory. According to [CK01], on SMP machine operating system, application processing and kernel processing are spread amongst all available CPUs. A different arrangement is called asymmetric multiprocessing, see [CK01]. The master CPU (CPU 0 or 1) executes the operating system code and application programs run on the 7 remaining CPUs. Asymmetric multiprocessing systems are easy to build, making efficient use of symmetric processing more difficult, and requiring that the operating system itself be treaded – broken down into separate processes. The benefit of SMP is an operating system subtask can be distributed round-robin style to different processors, increasing system efficiency and making it less likely those CPUs are underutilized. Another way to design the multiprocessor machine [ME02] is to assemble hundreds or thousand of CPUs, each with own system memory. Communication among CPUs is then accomplished through message passing. Linux supports neither this nor the asymmetric multiprocessing system. It is necessary to understand a Pentium dual-processing system to get familiar with Linux multiprocessor architecture. 4.2 Pentium dual-processing system, process synchronization on hardware level Shared memory RAM chips may be accessed concurrently by independent CPUs. Since read/write operations on a RAM chip must be performed serially (according to [BC00]), a hardware circuit called a memory arbiter is inserted between the bus and every RAM chip. It grants access to a CPU if the chip is free and delays access if the chip is busy. Hardware support for cache synchronization Hardware cache is utilized using the locality principle: addresses close to the ones most recently used have a high probability of being used in the near future. To make the communication process between CPU and RAM faster, cache (the smaller and faster memory) is introduced. A line containing a few dozen contiguous bytes are transferred in 8 burst mode between slow DRAM and fast on-chip static SRAM used to implement caches. In multiprocessor environment, each CPU has its one cache. Updating shared data is more cumbersome (according to [BC00]): when one CPU modifies its hardware cache, it must check to see if the same data is contained within other CPUs’ cache; if so, cache within those CPUs are updated with the proper value. This activity is called cache snooping and implements much faster on hardware level without kernel involvement. SMP atomic operations Lock instruction prefixes (a special byte prefixed to an assembly language instruction) have been introduced. Whenever a control unit detects this byte, it locks the memory bus so that no other process can access the memory location specified by the destination operand of any following assembly language instruction. Distributed Interrupt Handling To deliver an interrupt to any CPU, Intel introduced I/O Advanced Programmable Interrupt Controller (APIC). See Figure 2. 9 CPU 1 CPU n Local APIC Local APIC ICC bus I/O APIC Figure 2 Each CPU has its own Local APIC. An Interrupt Controller Communication (ICC) bus connects an I/O APIC to the Local APICs. Device IRQ lines are connected to the I/O APIC, which acts like a router with respect to the Local APICs. 10 5. The Linux/SMP Kernel 5.1 Process Descriptor Modification To accommodate the multiprocessor environment, Linux added new fields to its process descriptor. These fields represent relationships between process and processor, see [BC00]. has_cpu – flag indicates if the process is currently running. processor – logical number of the CPU that is running the process. When a new process is created by fork(), it has has_cpu = 0 and processor = NO_PROC_ID. When a new process is selected to run, it sets its has_cpu = 1 and the processor field to the logical number of the CPU doing the task switch. 5.2 Spin Lock According to [BC00], spin locks are a special mechanism designed to work in the multiprocessor environment. Because a context switch is very expensive, blocked process keeps its own CPU by spinning while waiting for a resource. One example of the spin lock is read/write lock. This lock allows several kernel control paths simultaneously to read the same data structure. However, if kernel control path wishes to write data, it must acquire the write version of read/write lock, which grants exclusive access to resource. 11 Bibliography [BC00] D. P. Bovet, M. Cesati Understanding the Linux Kernel O’Reilly, 2000 [CK01] R. Cowart, B. Knittel. Using Microsoft 2000 Professional QUE 2001 [LinTor97] Linus Torvalds. Linux: a Portable Operating System. Master of Science Thesis, University of Helsinki, Finland, 1997 [ME02] D. Mosberg, S. Eranian IA-64 Linux Kernel Prientice Hall PTR, 2002 12