JLAIGameTermProject

advertisement

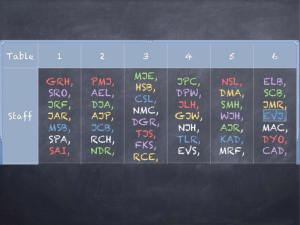

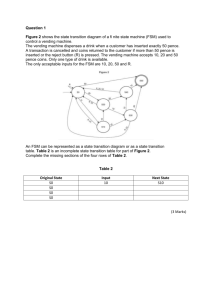

CS510 AI and Games Term Project Design Juncao Li Abstract: Computer games, as entertainment or education media, are becoming more and more popular. The design of AIs in computer games usually focuses on the adversaries that play against the human players. However the ultimate goal of the game design is not to make the AIs win against players, but to entertain or educate the players. As a result, it is important to have AIs that can mimic the behaviors of human players and serve as the benchmark in game designs. In this project, we design player AIs in the game Advanced Protection (AP) trying to mimic the human players and win the game. We first hardcode a static Finite State Machine (FSM) that mimics players’ strategies, and then use the FSM to train a Neural Network (NN) as an adaptive player AI. We also design an approach to evaluate the AIs on both sides, i.e., the Human and the Chaos. The evaluation is based on the win/lose ratio of the AIs given random initial treasury on a fixed map. Introduction: As shown in Fig. 1, the game of Advanced Protection (AP) is played between a human player and a computer opponent (known as Chaos) on a 24 x 24 wraparound grid. The game is split into turns, which are composed of 50 phases. Before each turn begins, the human player is able to buy, distribute, and salvage as many units as his or her treasury allows. When the turn begins, Chaos's minions are randomly placed on squares not occupied by the human's units. During each phase of the turn, every minion is allowed one or two moves and every human farming unit generates money. Minions can 1) Move Forward, 2) Turn Right, 3) Turn Left, and 4) Special Action. (The Special Actions vary between minions. The special action of Scouts is to broadcast. The Special Action of Scavengers is to farm. The Special Action of barbarians is to attack a human unit.) When the turn ends, Chaos's remaining units are removed from the board and then salvaged for the units' full value. Both the human player and Chaos create new units between turns by purchasing them using their respective treasuries. Chaos and the human both start the game with $2000. The human player wins when Chaos surrenders (when the human treasury overwhelms the Chaos treasury). Chaos wins when the human has no units and no money left in his or her treasury. Fig. 1 The UI of Advance Protection AP is an adaptive turn-based strategy game that updates its AI strategy each turn according to the human player’s performance. The difficulty ratio will scale up or down if the player wins or loses. To do this, AP encodes each minion with a brain (namely automaton) by a 128-bit string. Each minion has 250 candidate brains to use, among which 20 brains are hardcoded and 230 brains are generated from genetic algorithms. During the play, brains are rated based on their performance against the player. The brain rating varies on different players because the differences between players’ strategies. AP has a fitness function that dynamically chooses the most fit minion brain to satisfy the player’s need. AI design details: We design two player AIs in this project: a Finite State Machine (FSM) that hardcodes the player’s strategy and a Neural Network that is firstly trained by the FSM and further improved by randomly generated playing cases. The goal of a player AI is to maximize its treasury income each turn comparing to the Chaos’ income. The FSM captures game strategies in AP to play as a human player. It gathers necessary information to make decisions in each turn: (1) the treasury of both Human and Chaos; (2) the Terrain; and (3) current human nodes on the map. The output of the FSM includes: (1) where to place the units; and (2) what units to place. We classify the human units into two types: (1) farming units such as drone and settler, whose main purpose is to make money; (2) aggressive units such as mine and artillery, whose main purpose is to cause damage to the Chaos’ units. The FSM makes unit placement following this two categories to maximize its income and minimize the Chaos’ income. Fig. 2 The strategy of the FSM on the first game turn Fig. 3 The strategy of the FSM on the last game turn (before its victory) Figure 2 shows the strategy of the FSM on the first game turn, where it tries to make money by selecting most human units as farming type. Figure 3 shows the strategy of the FSM on the last game turn before its victory, where it has enough treasuries to sustain its growing and instead of making money its top interest is to suppress the Chaos. The FSM is static, where it hardcodes the human knowledge and never learns by itself. Furthermore, programming is limited to consider all the implications that may contribute the game strategy. For examples, some small templates that contain certain unit placement combinations are complex being hardcoded; game strategies can also depend on map terrain patterns, where the patterns are dynamic generated during the game. These limitations request us to design an adaptive player AI. Fig. 4 The strategy of the NN on the first game turn We use Neural Network (NN) to design an adaptive player AI that can evolve by proper training. The input size of our NN is 578, which contains the map terrain information (24*24) and the initial Human/Chaos treasury (1 respectively). The output is the human unit placement on the map (with the map size: 24*24). To simplify the design, we employ one hidden neural layer that contains the same number of nodes as the input. We normalize the inputs to fall in the range between [0.0, 1.0], so a large-number inputs will not overwhelm other inputs at the starting phase of the training. In development, we borrow two neural network implementations from the “AI Game Engine” and “Tim Jones Book”. The later implementation is simple and proved to be efficient during our practice. We design two steps to train our NN player AI. Firstly, we use the static FSM to train the NN, where the training inputs are randomly generated maps and random initial Human/Chaos treasury, and the outputs are generated by the FSM. The first step can efficiently help the NN recognize different maps and map patterns. Secondly, we use randomly generated strategy to train the NN if the strategy performs better than the NN. By doing this, the NN can keep improving itself against the Chaos’ brains. During the training, the NN player AI adapts to recognize different maps. It is often possible that the outputs of the NN are not as what we expect exactly, so we need to interpret the outputs. We first normalize the outputs to the range between [0.0, 1.0]. Then we match the outputs to the most similar answers. Figure 4 shows the NN player AI’s first-turn output on the same map as the FSM did. The NN has been trained for about 4 hours with 1 million iterations. The picture shows that the NN is adapting to recognize maps. Evaluations: We evaluate the player AIs and Chaos AI based on single turns because only for each turn, the Chaos’ brain is certain. We fix the test map in order to reduce the uncertainty cause by maps. Given certain amount of initial treasury, the player and Chaos AI should run 50 times for statistics in order to minimize the influence caused by the encoded AI fuzzy logics. We consider our AIs perform well or win iff: given random initial treasury and map, they have advantage against most Chaos brains (out of 250) in terms of the money earned. This comes from a simple observation that good performance on each turn can lead to final win of the game. Fig. 5 Statistics on the FSM: wins out of the 250 brains for given initial treasury Figure 5 shows the statistics of the hardcoded FSM against the 250 Chaos brains. We can infer from the picture that the FSM is not smart dealing with the treasury between 4000 and 10000, where it can never win against any Chaos brain. Figure 6 shows the statistics of the NN player AI trained after about 1 million iterations. Although trained by the FSM, the NN performs better than the FSM in many cases, especially when the treasury is between 4000 and 10000. The NN AI does not perform better than the FSM when the initial treasury is larger than 10000, because the selecting of the training set does not favor the cases with large initial treasury. Fig. 5 Statistics on the NN AI: wins out of the 250 brains for given initial treasury Conclusion and Discussion: In this project, we studied the turn-based game Advanced Protection (AP) especially on its adaptive AI strategy. We developed a static FSM to encode the human player’s game strategy against the AP AI in terms of brains. The FSM can well deal with certain brains under certain initial settings, but it does not perform well in general cases, especially when the initial treasury is large. We designed a Neural Network (NN) that hopefully can adapt to perform better than the FSM. We didn’t get enough time to train the NN based on the random generated cases, but the training results of the NN by the FSM already showed us a potentially promising outcome of the second training step. This project shows us an approach to memorize and mimic the game players’ behaviors. This could potentially help game companies improve their games after release. The player AIs can be created by users for free during their game plays and used as training cases to improve the game AIs. Developing NNs could be hard because the unpredictability of AIs hides bugs deeply. We ran into several bugs that make the NN fail to evolve properly. We found those bugs mostly by breakpoint check on data status and code review. An efficient way to check if the NN is implemented correctly is to train the NN by always the same training pair, seeing if it adapts as expected. Link to the source code, this report and associated presentation slides: http://web.cecs.pdx.edu/~juncao/links/src/ Most of my code is in the files listed below: The NN player AI class: NNPlayer.h, NNPlayer.cpp The FSM player AI class: Player.h, Player.cpp The NN code I borrowed and modified: backprop.h, backprop.cpp My code of learning: JLLearning.h, JLLearning.cpp Although I have code in other files, I don’t think it’s interesting. Please search “JL” for my comments and code.