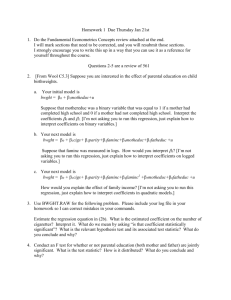

Tips from Allison, 1999, for understanding regression

Learning Objectives:

- Be able to get means, SDs, frequencies, and histograms for any variable.
- Be able to determine the Cronbach’s alpha for any set of items.
- Be able to reverse score a single item and combine items into a single scale:
   1. Check for errors (range, distribution).
   2. Reverse code any relevant items.
   3. Estimate reliability (Cronbach’s alpha).
   4. Combine items.
- Be able to correlate any two variables (each represented as a single item or as a scale).
- Be able to “center” a variable before entering it as a predictor in a multiple regression equation.
- Be able to distinguish between mediators and moderators.
- Be able to draw simple path diagrams.
- Be able to create “product” terms to test interaction questions.
- Be able to enter predictors in multiple steps to test mediation questions.

Two favorite resources:

- http://www.quantpsy.org/medn.htm (Kristopher Preacher’s website)
- http://www.afhayes.com/ (Andrew Hayes’ website)

Words of wisdom (adapted from Allison, P., 1999):

The most popular kind of regression is ordinary least squares, but there are other methods. Ordinary multiple regression is called linear because it can be represented graphically by a straight line. A linear relationship between two variables is usually described by two numbers, slope and intercept. We assume relationships are linear because it is the simplest kind of relationship and there’s usually no good reason to consider something more complicated (principle of parsimony).

You need more cases than variables, ideally a lot more cases. Ordinal variables are not well represented by multiple regressions.

Ordinary least squares chooses the regression coefficients (slopes and intercept) to minimize the sum of the squared prediction errors. The R² is the statistic most often used to measure how well the outcome/criterion variable can be predicted from knowledge of the predictor variables.
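As a rough illustration of the least squares idea, the sketch below fits a single-predictor line by hand and computes R². The data and the function name `fit_line` are made up for this example; a real analysis would use a statistics package and usually more than one predictor.

```python
def fit_line(x, y):
    """Least squares slope, intercept, and R-squared for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Cross-products and squared deviations around the means
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Sum of squared prediction errors (the quantity least squares minimizes)
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    # Total variation in y around its mean
    sst = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - sse / sst
    return slope, intercept, r_squared


# Hypothetical data, chosen only to keep the arithmetic easy to follow
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

slope, intercept, r_squared = fit_line(x, y)
print(round(slope, 3), round(intercept, 3), round(r_squared, 3))  # 0.6 2.2 0.6
```

Here R² of 0.6 means the fitted line leaves 40% of the squared variation in y around its mean unexplained.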
To evaluate the least squares estimates of the regression coefficients, we usually rely on confidence intervals and hypothesis tests. Multiple regression allows us to statistically control for measured variables, but this control will never be as good as a randomized experiment.

To interpret the numerical value of a regression coefficient, it is essential to understand the metrics of the outcome/criterion and predictor variables. Coefficients for dummy (0, 1) variables usually can be interpreted as differences in means on the outcome variable for the two categories of the predictor variable, controlling for other variables in the regression model. Standardized coefficients can be compared across predictor variables with different units of measurement. They tell how many standard deviations the outcome variable changes for an increase of one standard deviation in the predictor variable. The intercept (or constant) in a regression model rarely tells you anything interesting.

Don’t exaggerate the importance of R² in evaluating a regression model. A model can still be worthwhile even if R² is low.

In a multiple regression, there is no distinction among different kinds of predictor variables, nor do the results depend upon the order in which the variables appear in the model. Varying the set of variables in the regression model can be helpful in understanding the causal relationships. If the coefficient for a variable x goes down when other variables are entered, it means that either a) the other variables mediate the effect of x on the outcome variable y, or b) the other variables affect both x and y and therefore the original coefficient of x was partly spurious.

If a dummy predictor variable has nearly all the observations in one of its two categories, even a large effect of this variable may not show up as statistically significant.
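The dummy-variable interpretation can be checked with a small sketch. It assumes the simplest case, a single dummy predictor with no other variables in the model, where the slope is exactly the difference between the two group means. The data and names (`group`, `score`, `fit_line`) are hypothetical.

```python
def fit_line(x, y):
    """Least squares slope and intercept for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data: a 0/1 dummy predictor and an outcome score
group = [0, 0, 0, 1, 1, 1]
score = [3, 4, 5, 6, 8, 10]

slope, intercept = fit_line(group, score)

# Group means computed directly, for comparison
scores_0 = [s for g, s in zip(group, score) if g == 0]
scores_1 = [s for g, s in zip(group, score) if g == 1]
mean_0 = sum(scores_0) / len(scores_0)
mean_1 = sum(scores_1) / len(scores_1)

print(slope, mean_1 - mean_0)  # the slope matches the difference in means
print(intercept, mean_0)       # the intercept matches the mean of the 0 group
```

With other predictors in the model, the dummy coefficient becomes an adjusted difference in means rather than the raw difference shown here.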
If the global F test for all the variables in a multiple regression model is not statistically significant, you should be very cautious about drawing any additional conclusion about the variables and their effects.

When multiple dummy variables are used to represent a categorical variable with more than two categories, it is crucial in interpreting the results to know which is the omitted category.

Leaving important variables out of a regression model can bias the coefficients of other variables and lead to spurious conclusions. Non-experimental data tells you nothing about the direction of a causal relationship. You must decide the direction based on your prior knowledge of the phenomenon you are studying. Time ordering usually gives us the most important clues about the direction of causality.

Measurement error in predictor variables leads to bias in the coefficients. Variables with more measurement error tend to have coefficients that are biased toward 0. Variables with little or no measurement error tend to have coefficients that are biased away from 0. The degree of measurement error in a variable is usually quantified by an estimate of its reliability, a number between 0 and 1. A Cronbach’s alpha of 1 indicates a perfect measure; a Cronbach’s alpha of 0 indicates that the variation in the variable is pure error. Most psychologists prefer alphas to be above .70; anything below .60 is difficult to defend.

With small samples, even large regression coefficients may not be statistically significant. In such cases, you are not justified in concluding that the variable has no effect, because the sample may not have been large enough to detect it. In small samples, the approximations used to calculate p values may not be very accurate, so be cautious in interpreting them. In a large sample, even trivial effects may be statistically significant.
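Returning to reliability, the scale-building steps (check ranges, reverse code, estimate Cronbach’s alpha, combine items) can be sketched as follows. This is a minimal illustration, assuming a made-up three-item scale scored 1 to 5 with one reverse-keyed item; the function names are hypothetical, and alpha is computed with the usual formula k/(k-1) × (1 − sum of item variances / variance of the total score).

```python
from statistics import variance


def reverse_score(value, low, high):
    """Flip a score on a low..high response scale (1 becomes 5 on a 1-5 scale)."""
    return low + high - value


def cronbach_alpha(items):
    """items: one list of scores per item, with respondents in the same order."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses from five people on a 1-5 scale
item1 = [1, 2, 3, 4, 5]
item2 = [2, 2, 3, 5, 5]
item3_raw = [5, 4, 3, 1, 1]  # worded in the opposite direction

# 1. Check for errors: every value must fall inside the response range
assert all(1 <= v <= 5 for item in (item1, item2, item3_raw) for v in item)

# 2. Reverse code the relevant item
item3 = [reverse_score(v, 1, 5) for v in item3_raw]

# 3. Estimate reliability, with and without the reverse coding
alpha_before = cronbach_alpha([item1, item2, item3_raw])
alpha_after = cronbach_alpha([item1, item2, item3])

# 4. Combine items into a single scale score (here, the mean of the items)
scale = [sum(scores) / 3 for scores in zip(item1, item2, item3)]

print(round(alpha_before, 2), round(alpha_after, 2))
```

In this toy data set, forgetting to reverse code the third item produces a negative alpha, which is a common sign that an item is keyed in the wrong direction.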
You need to look carefully at the magnitude of each coefficient to determine whether it is large enough to be substantively interesting. When the measurement scale of the variable is unfamiliar, standardized coefficients can be helpful in evaluating the substantive significance of a regression coefficient.

If you are interested in the effect of x on y, but the regression model also includes intervening (mediating) variables w and z, the coefficient for x may be misleadingly small. You have estimated the direct effect of x on y, but you have missed the indirect effects through w and z. If intervening variables w and z are deleted from the regression model, the coefficient for x represents its total effect on y. The total effect is the sum of the direct and indirect effects.

If two or more predictor variables are highly correlated, it is difficult to get good estimates of the effect of each variable controlling for the others. This problem is known as multicollinearity. When two predictor variables are highly collinear, it is easy to incorrectly conclude that neither has an effect on the outcome variable.

It is important to consider whether the sample is representative of the intended population. Check the data to make sure all values fall within the range of the variable’s response categories. The default method for handling missing data is listwise deletion, that is, deleting any case that has missing data on any variable.

Studentized residuals are useful for finding observations with large discrepancies between the observed and predicted values. Influence statistics tell you how much the regression results would change if a particular observation were deleted from the sample.

The standard error of the regression slope is used to calculate confidence intervals. A large standard error means an unreliable estimate of the coefficient. The standard error goes up with the variance in y. The standard error goes down with the sample size, the variance in x, and the R².
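To make the standard error of the slope concrete, here is a minimal sketch for the one-predictor case, using the textbook formula: the square root of the residual variance divided by the sum of squared deviations of x. The data and the name `slope_and_se` are hypothetical, and the "plus or minus 2" interval is only a rough large-sample approximation.

```python
from math import sqrt


def slope_and_se(x, y):
    """Least squares slope and its standard error for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    residual_variance = sse / (n - 2)  # two estimated coefficients
    return slope, sqrt(residual_variance / sxx)


# Hypothetical data
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

slope, se = slope_and_se(x, y)

# Rough interval: slope plus or minus twice its standard error
# (the factor 2 is an approximation that suits large samples, not n = 5)
low, high = slope - 2 * se, slope + 2 * se

# Same pattern of data with twice as many cases: same slope, smaller standard error
slope_big, se_big = slope_and_se(x * 2, y * 2)

print(round(slope, 3), round(se, 3), round(se_big, 3))
```

Doubling the number of cases leaves the slope unchanged but shrinks its standard error, which is the sample-size effect described above.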
The ratio of the slope coefficient to its standard error is a t statistic, which can be used to test the null hypothesis that the coefficient is 0. With samples larger than 100, a t statistic greater than 2 (or less than -2) means that the coefficient is statistically significant (.05 level, two-tailed test). We can get a confidence interval around a regression coefficient by adding and subtracting twice its standard error.

The regression of y on x produces a different regression line from the regression of x on y; the two lines cross at the intersection of the two means. The two slopes will always have the same sign. The two lines coincide when x and y are perfectly correlated.

Efficient estimation methods have standard errors that are as small as possible. That means that in repeated sampling, they do not fluctuate much around the true value. If we have a probability sample drawn so that every individual in the population has the same chance of being chosen, then the least squares regression in the sample is an unbiased estimate of the least squares regression in the population.

The standard linear model has five assumptions about how the values of the outcome variable are generated from the predictor variables. The assumptions of linearity and mean independence imply that least squares is unbiased. The additional assumptions of homoscedasticity and uncorrelated errors imply that least squares is efficient. The normality assumption implies that a t table gives valid p values for hypothesis tests.

The disturbance U represents all unmeasured causes of the outcome variable y. It is assumed to be a random variable, having an associated probability distribution. Mean independence means that the mean of the random disturbance U does not depend on the values of the x variables. Mean independence of U is the most critical assumption because violations can produce severe bias.
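The earlier point about regressing y on x versus x on y can be checked numerically. The sketch below uses made-up data and a hypothetical helper `slope_intercept`; it relies on the standard result that the product of the two slopes equals the squared correlation, so the two lines coincide only when that product is 1.

```python
def slope_intercept(predictor, outcome):
    """Least squares slope and intercept for predicting outcome from predictor."""
    n = len(predictor)
    mean_p = sum(predictor) / n
    mean_o = sum(outcome) / n
    spo = sum((p - mean_p) * (o - mean_o) for p, o in zip(predictor, outcome))
    spp = sum((p - mean_p) ** 2 for p in predictor)
    slope = spo / spp
    return slope, mean_o - slope * mean_p


# Hypothetical data with an imperfect relationship
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
mean_x = sum(x) / len(x)
mean_y = sum(y) / len(y)

b_yx, a_yx = slope_intercept(x, y)  # regression of y on x
b_xy, a_xy = slope_intercept(y, x)  # regression of x on y

# Both lines pass through the point (mean_x, mean_y)
print(a_yx + b_yx * mean_x, mean_y)
print(a_xy + b_xy * mean_y, mean_x)

# The slopes share a sign, and their product is the squared correlation
print(round(b_yx * b_xy, 3))

# Perfectly correlated data: the product is 1, so the two lines coincide
y_perfect = [3, 5, 7, 9, 11]
p_yx, _ = slope_intercept(x, y_perfect)
p_xy, _ = slope_intercept(y_perfect, x)
print(round(p_yx * p_xy, 3))
```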
Homoscedasticity means the degree of random noise in the relationship between y and x is always the same. You can check for violations of the homoscedasticity assumption by plotting the residuals against the predicted values of y. There should be a uniform degree of scatter, regardless of the predicted values.

If observations are clustered or can interact, correlated errors are more likely. Correlated errors lead to underestimates of standard errors, which inflate test statistics (so you should use generalized least squares). Normality is the least critical assumption, particularly in large samples.

Tolerance is computed by regressing each independent variable on all the other independent variables and then subtracting the R² from that regression from 1. A low tolerance indicates serious multicollinearity. Multicollinearity means that it is hard to get reliable coefficients, and it can suppress effects. To address multicollinearity, delete one or more variables, combine variables into an index, estimate a latent variable model, or perform joint hypothesis tests.

The most common way to represent interaction in a regression model is to add a new variable that is the product of two variables already in the model. Such models implicitly say that the slope for each of the two variables is a linear function of the other variable. In models with a product term, the main effect coefficients represent the effect of that variable when the other variable has a value of 0. The best way to interpret a regression model with a product term is to calculate the effects of each of the two variables for a range of different values of the other variable. When one of the variables is a dummy variable, the model can be interpreted by splitting up the regression into two separate regressions, one for each of the two values of the dummy variable.
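The dummy-variable case can be sketched as follows. The example assumes a model with x, a dummy z, and their product, and uses the standard property that its coefficients can be recovered from two separate regressions of y on x, one per group. It does not fit the joint model directly. All data and names are hypothetical, and the data are artificially noise-free to keep the numbers simple.

```python
def fit_line(x, y):
    """Least squares slope and intercept for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data, split by the dummy variable z
x0, y0 = [1, 2, 3, 4], [2, 3, 4, 5]  # cases with z = 0
x1, y1 = [1, 2, 3, 4], [1, 3, 5, 7]  # cases with z = 1

slope0, intercept0 = fit_line(x0, y0)
slope1, intercept1 = fit_line(x1, y1)

# Coefficients of y = b0 + b1*x + b2*z + b3*(x*z), recovered from the two lines
b0 = intercept0               # intercept when z = 0
b1 = slope0                   # effect of x when z = 0 (the "main effect")
b2 = intercept1 - intercept0  # effect of z when x = 0
b3 = slope1 - slope0          # change in the slope of x per unit of z


def slope_of_x(z):
    """Effect of x at a given value of z: a linear function of z."""
    return b1 + b3 * z


# The product term itself is just x times z for every case
x_all, z_all = x0 + x1, [0] * len(x0) + [1] * len(x1)
product = [xi * zi for xi, zi in zip(x_all, z_all)]
predicted = [b0 + b1 * xi + b2 * zi + b3 * pi
             for xi, zi, pi in zip(x_all, z_all, product)]

print(slope_of_x(0), slope_of_x(1))
```

Because b1 is the effect of x only where z equals 0, centering a continuous moderator before forming the product moves that reference point to the moderator’s mean, which is usually easier to interpret.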