Final Project - Jennifer A. Buxton Portfolio II George Mason


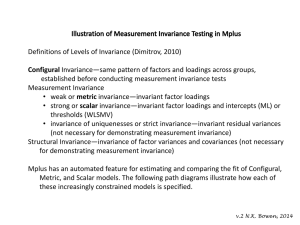

Running Head: READING STRATEGIES, INSTRUCTION, AND ATTITUDES

Structural Equation Modeling Approach to Examine Reading Strategies, Instruction, and Attitudes

Jennifer A. Buxton
George Mason University
For Partial Fulfillment of the Requirements for EDRS 797

Abstract

Confirmatory factor analysis (CFA) was employed to test the hypothesized relations between observed and latent variables related to reading comprehension strategies. Structural equation modeling (SEM) was then used to model the relationships among students’ strategy use, reading attitudes, strategy instruction, and reading performance. Once factorial invariance between the groups was established, MIMIC group comparisons were used to test for gender differences across all variables. The statistically significant results for the relationships among the latent variables, between the latent and observed variables, and for gender across all variables, all made available by the analysis that SEM offers, suggest a range of implications for reading comprehension strategy assessment, instruction, and future research.

Keywords: Reading, Strategies, Confirmatory Factor Analysis, Structural Equation Modeling

Structural Equation Modeling Approach to Examine Reading Strategies, Instruction, and Attitudes

For those fortunate enough to read with ease, it is difficult to imagine life without the ability to recognize and make sense of words. Reading, or the ability to decode and understand the meaning of words, is a fundamental and integral component of our daily functioning. It is a basic necessity for success in today’s society; yet, once it becomes automatic, the complex processes involved in reading and learning to read are often forgotten.
Lower level reading skills involved in developing the basic process of reading tend to receive precedence in the literature over higher level skills involved in developing proficient reading comprehension. Proficient readers are not only successful with basic decoding and other word level skills; they are also able to make sense of text through explicit use of reading comprehension strategies. Comprehension strategies are methods readers employ to support higher level understanding of what they’ve read. Proficient readers use comprehension strategies such as monitoring, evaluating, making connections, and text management to support their comprehension.

Students do not develop reading comprehension strategies implicitly. In order for students to obtain the greatest benefit from reading comprehension strategy instruction, each strategy needs to be taught explicitly, including what the strategy is and when and how students should use it. Additionally, students need to be taught how to integrate each new strategy into their existing repertoire of reading comprehension strategies.

A crucial instructional component, assessment, is often underused prior to instruction of reading comprehension strategies. In order to guide instruction most effectively and meet the needs of all learners, teachers should be aware of each student’s strengths and individualized areas of need. A diagnostic assessment measuring students’ understanding and use of each of the reading comprehension strategies would be extremely useful for both initial and ongoing assessment of students’ progress in using reading comprehension strategies intentionally and appropriately.

The current political platform of education in the United States has created a reality in which, although it is often denied and highly disputed, judgments of teacher and student success are based on standardized test results.
An implication of those judgments is that, despite the array of professionals involved in the process of reading instruction, classroom teachers are ultimately held accountable for student success. With the increasing pressure on teachers to use evidence-based instruction to increase student test scores, teachers need access to research-based practices to effectively support the individualized instruction of all students in the general education classroom.

A significant amount of literature currently exists supporting the benefits of reading comprehension strategy use. However, an extensive review of the reading strategy and reading assessment literature showed that little literature exists about how to assess students’ reading comprehension beyond the overall comprehension level. Various assessments are available that specifically target reading comprehension. However, a majority of those assessments only examine students’ overall comprehension ability. Unfortunately, the few available tools that go beyond students’ overall comprehension and focus on students’ use of specific reading comprehension strategies fall short in that they only assess a few of the many existing strategies.

Therefore, a comprehensive tool that is feasible for classroom teachers to use to assess students’ explicit use of all reading comprehension strategies is needed. Further, due to the vast number of possible reading comprehension deficits that could be identified in a classroom of students, it would benefit classroom teachers even more if the diagnostic assessment were just the first part of a comprehensive assessment/intervention package. It is assumed that many teachers would welcome an assessment/intervention package that offers evidence-based suggestions to guide instruction based on students’ assessment results, including multiple intervention approaches for each of the reading comprehension strategies.
Purpose

The purpose of this study is to examine a recent, large scale public data set, PISA 2009, to obtain relevant support that can be integrated with other data sources to inform the exploratory phase of a Mixed Methods research project aimed at developing and testing a comprehensive reading strategy assessment/intervention package. The PISA 2009 survey was specifically chosen because of its focus on reading. The goal of this investigation is to examine latent and observable reading comprehension related variables, within a structural equation model (SEM) framework, to identify possible significant relationships between the variables that offer information valuable to the assessment/intervention package design process. The following research questions guided the investigation:

1. Are there significant relationships between the three latent constructs, “Reading Strategy Use”, “Reading Strategy Instruction”, and “Reading Attitudes”?
2. Are there significant relationships between the three latent constructs and “Reading Performance”?
3. Are there significant differences by class size or gender in “Reading Strategy Use”, “Reading Attitudes”, “Reading Strategy Instruction”, or “Reading Performance”?

Method

Sample

Sample data for this investigation were selected from the United States subset of a large scale international survey administered in September and November of 2009 across 65 countries by the Program for International Student Assessment (PISA). PISA is an international program to obtain comparable data about 15-year-old students’ achievement in reading, math, and science literacy. PISA is coordinated by the Organization for Economic Cooperation and Development (OECD), an intergovernmental organization of industrialized countries. PISA was first administered in 2000. It has been, and will continue to be, administered every three years.
Each time PISA is administered, it evaluates students’ literacy in reading, math, and science, and depending on the year, a heavier focus is placed on one of the three subject areas. The focus of the 2009 PISA was on reading. The initial set of raw data contained nationally representative sample data from 5,233 15-year-old students selected from 165 public and private schools. After the variables were selected and the data were cleaned, 4,025 students remained in the analyses.

Data Collection Procedure

The raw data and the accompanying SPSS syntax from the 2009 PISA were downloaded from the National Center for Education Statistics website into Notepad. Once an error in the SPSS syntax was corrected, the syntax was used to open the data file in SPSS 19.0. A tentative model, informed by relevant theories and research, was drawn to hypothesize the latent constructs of the problem of interest and how they might relate. The items of the 2009 PISA student survey were reviewed, and those relevant to the latent constructs were selected.

Data for the observed variables (gender, class size, average class time per week, and reading performance) were also obtained from the PISA 2009 data. The data for the 10 indicators and four observable variables were used to create a new SPSS file. The file with 5,233 initial cases was examined and cleaned of missing data for each of the 14 variables. After the cases with missing data were removed, 4,025 cases remained for analysis. The SPSS file was saved as a Fixed ASCII file so it could be analyzed using the Mplus software.

Data Measures

The ten indicators selected from the PISA 2009 US dataset were separated into three scales associated with different aspects of reading, “Reading Strategy Use”, “Reading Attitude”, and “Reading Strategy Instruction”, labeled L, A, and I, respectively.
Reading Strategy Use (L)

The four items selected to explain the latent factor “Reading Strategy Use” (L) were taken from Question 2 of the ‘Reading for School’ questions. Question 2 stated: ‘During the last month, how often did you have to do the following kinds of tasks for school (in the classroom or for homework)?’ The following four of the nine total reading strategy items were selected: b) Explain the causes of events in text (L1), c) Explain the way characters behave in text (L2), e) Explain author’s purpose of the text (L3), and i) Explain text connections (L4). Options for student responses were 1 = Many Times, 2 = Two or Three Times, 3 = Once, or 4 = Not at All, with the lower values indicating higher “Reading Strategy Use”.

Reading Attitude (A)

The three items selected to explain the latent factor “Reading Attitude” (A) were taken from Question 28 of the 2009 PISA ‘Student Questionnaire’. Question 28 stated: ‘How much do you agree with the following statements about reading?’ After close examination of the 11 statements in this question, it was substantively assumed that some of the statements might cross load with the first latent factor. The following three statements were finally selected to explain “Reading Attitude”: b) Reading is one of my favorite hobbies, c) I like talking about books with other people, and g) I enjoy going to a bookstore or library. These three items were chosen because they seem to capture three different aspects of “Reading Attitude”: fun, social/communication, and engagement, respectively. Options for student responses were 1 = Strongly Disagree, 2 = Disagree, 3 = Agree, or 4 = Strongly Agree, with the higher values indicating a more positive “Reading Attitude”.

Reading Strategy Instruction (I)

The three indicators selected to explain the latent factor “Reading Strategy Instruction” (I) were taken from Question 41 of the 2009 PISA ‘Student Questionnaire’.
Question 41 stated: ‘In your English classes, how often do the following occur?’ The three indicators from the list of seven statements were chosen because they related most closely to specific student reading strategies, indicating the teacher’s initiation of student reading strategy practice. The following three indicators were selected: a) The teacher asks students to explain the meaning of a text, e) The teacher encourages students to express their opinions, and g) The teacher shows students how the information in texts builds on what they already know. Options for student responses were 1 = Never or Hardly Ever, 2 = In Some Classes, 3 = In Most Classes, or 4 = In All Classes, with the higher values indicating a higher use of “Reading Strategy Instruction” (I).

Reading Performance (P)

Values for “Reading Performance” were obtained from the “Plausible Value” score for reading that was included in the PISA 2009 data set. The values were left as is, and “Reading Performance” was an observable variable in the structural model.

Reading Instruction Time (T)

Values for “Reading Instruction Time” were also taken directly from the 2009 PISA dataset and used as is.

Class Size (S)

The binary coding for class size (0 = Small, 1 = Large) was based on the median class size (250) indicated by all survey respondents. Class sizes below 250 were recoded as ‘Small’, and class sizes of 250 or more were recoded as ‘Large’. There were 1,799 students in the small class group and 2,226 students in the large class group.

Gender

Each respondent indicated their gender in survey Question 4, “Are you male or female?” In this survey, female = 1 and male = 2. The gender variable was recoded into groups (0 = Female, 1 = Male) to stay consistent with the class size groups. There were 1,966 female students and 2,059 male students.

Reliability

Internal consistency estimates (i.e., Cronbach’s alpha coefficients) were obtained for the three scales using SPSS.
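As an illustration of what the internal consistency estimate measures, the following is a minimal sketch of Cronbach's alpha in plain Python. It is not the SPSS procedure used in this study; the function name `cronbach_alpha` and the response data are hypothetical, and sample (n − 1) variances are assumed.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of equal-length lists, one list of responses per item
    (hypothetical layout chosen for this sketch).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items need the same number of respondents")

    # Total scale score for each respondent.
    totals = [sum(row) for row in zip(*items)]

    # alpha = k/(k-1) * (1 - sum of item variances / variance of total score)
    item_variance_sum = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses from five students to a three-item scale.
example_items = [
    [1, 2, 3, 4, 4],
    [2, 2, 3, 4, 3],
    [1, 3, 3, 3, 4],
]
print(round(cronbach_alpha(example_items), 3))
```

Alpha approaches 1 when the items rise and fall together across respondents, which is why it is read as an index of how consistently the items measure one construct.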
A widely accepted social science cut-off alpha value of .70 was used to assess the reliability of the three scales. Table 1 contains the reliability estimates for the three reading strategy use constructs. Their reliabilities were all relatively high, ranging from α = .71 for the Learning Strategies scale to α = .86 for the Reading Attitude scale, thus indicating strong construct reliability.

Table 1
Reliability Estimates of the Reading Strategy Use Constructs (9 items)
______________________________________________________________________________
Variables                   Number of Items   Reliability Estimates (α)
______________________________________________________________________________
Learning Strategies                3                    .67
Reading Attitude                   3                    .83
Instructional Strategies           3                    .76
______________________________________________________________________________

Statistical Analysis

Model Identification

Prior to analyzing the data, the identification status of the initial Confirmatory Factor Analysis (CFA) model was established. A model where the number of known elements is smaller than the number of freely estimated parameters has negative df and is considered under-identified. A model with the same number of known and unknown elements has df = 0 and is just-identified. The desirable model is the overidentified model, where the number of known elements is greater than the number of unknown elements, resulting in positive df. The number of known elements was computed with the formula [p(p+1)]/2, where p is the number of observed variables in the model. The number of free parameters was obtained from the Mplus output, and the df and identification status of the initial model were determined by subtracting the number of unknown elements from the number of known elements.
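The counting rule above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the Mplus computation: it assumes a simple-structure CFA (no cross-loadings, no correlated residuals), correlated factors, and the first loading of each factor fixed to 1 as the reference indicator. The function names are hypothetical.

```python
def known_elements(p, mean_structure=False):
    """Unique variances and covariances among p indicators: p(p + 1)/2.

    With a mean structure, the p observed means are known elements too.
    """
    known = p * (p + 1) // 2
    return known + p if mean_structure else known


def cfa_free_parameters(indicators_per_factor, mean_structure=False):
    """Free parameters of a simple-structure CFA (assumptions in lead-in)."""
    p = sum(indicators_per_factor)
    m = len(indicators_per_factor)
    loadings = p - m                      # one loading per factor is fixed to 1
    residual_variances = p
    factor_variances = m
    factor_covariances = m * (m - 1) // 2
    intercepts = p if mean_structure else 0
    return (loadings + residual_variances + factor_variances
            + factor_covariances + intercepts)


def model_df(indicators_per_factor, mean_structure=False):
    p = sum(indicators_per_factor)
    return (known_elements(p, mean_structure)
            - cfa_free_parameters(indicators_per_factor, mean_structure))


# Three factors measured by four, three, and three indicators.
layout = [4, 3, 3]
print(known_elements(10), cfa_free_parameters(layout), model_df(layout))
```

When a mean structure is estimated as well, the p item intercepts join the free parameters and the p observed means join the known elements, so the df do not change; `model_df(layout, mean_structure=True)` illustrates this.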
Descriptive Statistics and Multivariate Normality Procedure

Descriptive statistics for preliminary analysis, including minimum and maximum values, frequencies, means, standard deviations, skewness, and kurtosis, were obtained using SPSS. The preliminary analysis first examined the multivariate normality of the data, because testing for CFA data fit is valid under the SEM assumption of multivariate normality. Multivariate normality assumes that each observed variable is normally distributed, that the joint distributions of all combinations of observed variables are normal, and that all bivariate scatter plots are linear and homoscedastic, meaning that all conditional distributions have equal variances. Violation of the multivariate normality assumption can distort the goodness-of-fit indices and affect the results of the chi-square test, resulting in inflated or deflated chi-square values. It can also inflate the Type I error rate when testing for statistical significance of factor loadings, correlations, and other model parameters.

Skewness and kurtosis of each variable were examined first to verify the univariate normality assumptions of the constructs, based on the suggested values of < 2.0 and < 7.0, respectively. SPSS was used to create Q-Q Plots to verify multivariate normality. Mardia’s normalized estimate (< 3) can also be used to verify the assumption of multivariate normality and can be obtained using the TECH13 command in Mplus.

NOTE* When I tried the TECH13 command I received an error message stating that the TECH13 command is only available in the Mplus software that allows for mixture modeling. I unsuccessfully searched all over for other ways to get this estimate, but eventually moved on.

Structural Equation Modeling

Confirmatory Factor Analysis under the SEM framework was used to determine whether or not a hypothesized model fits the sample data.
Once the CFA measurement model was specified, a structural regression model was used to examine the relationships among the constructs and observed variables. Because groups were compared on the latent constructs in SEM, factorial invariance was tested to determine whether the constructs have the same meaning for both groups.

CFA – Measurement Part in Structural Equation Modeling

Confirmatory factor analyses (CFAs) in SEM are analyses of measurement models that specify how latent constructs are measured by observed variables. Based on a combination of theoretical and empirical evidence from existing literature, CFA was used in this investigation to specify a hypothesized model with three latent constructs, “Use of Reading Strategies” (L), “Reading Attitude” (A), and “Reading Strategy Instruction” (I), measured by four, three, and three indicators, respectively. It was hypothesized that the three latent factors were correlated.

Five goodness-of-fit indexes were used to jointly examine the CFA model: the chi-square fit statistic, comparative fit index (CFI), Tucker-Lewis Index (TLI), standardized root-mean-square residual (SRMR), and root-mean-square error of approximation (RMSEA) with a 90% confidence interval (CI). Hu and Bentler’s (1999) recommendations for judging good model fit were used: CFI and TLI greater than .95, SRMR less than .08, and RMSEA less than .05, where the lower limit of the 90% CI is close to or includes zero and the upper limit is less than .08 (Hu & Bentler, 1999). Modification Indices (MIs) were also examined when the initial model did not indicate a good fit. Modification Indices greater than 10 were examined, and a judgment was made to try to improve the model fit either by freeing the parameter with the largest MI or by freeing a parameter based on other substantive considerations.
Finally, unstandardized factor loadings were examined to determine if the parameter estimates were proper solutions and made substantive sense. The first item for each latent factor was the reference indicator for that construct, meaning that the scale of that indicator was used as the scale for all indicators of that construct.

Structural Regression

Once the constructs were assessed, a structural model was developed to test the plausibility of the hypothesized relationships among the three latent constructs, “Reading Strategy Use”, “Reading Attitude”, and “Reading Strategy Instruction”, and an observed variable, “Reading Performance”. The hypothesized structural model is provided in Figure 2. The model hypothesizes that the three latent constructs have an explanatory role in students’ reading performance. Analysis of the structural regression model for direct and indirect effects of the four variables followed the confirmation of a good model fit based on a joint examination of model indices. Once goodness-of-fit was confirmed, the structural coefficients were analyzed to determine if the hypothesized paths were statistically significant, supporting the hypothesized variable relationships.

Factorial Invariance

Factorial invariance must be established before the model can be tested for differences across groups. Testing for factorial invariance requires testing for configural invariance, measurement invariance, and structural invariance.

Configural Invariance

NOTE* I wasn’t sure if I did something wrong, because the results for the groups together and for each group separately were exactly the same. I referred to a number of articles, the various textbooks I have, and the Mplus website and couldn’t find any helpful information about a possible error to correct, or confirmation that this is OK.
I played around with the coding, trying everything I could to see if I got different results, but they were always the same for all three. I wasn’t sure if I should or not, but I included the χ2 contributions from each group in the table, then proceeded as is.

Prior to testing for factorial invariance, it is necessary to test for configural invariance across groups. This involves the identification of a baseline model, which is estimated for each group separately. The most parsimonious, yet substantively most meaningful and best-fitting model to the data for a group is referred to as the baseline model for this group. Configural invariance was tested using the three-factor model identified in the CFA as the baseline model. The baseline model was tested for data fit in each of the two groups separately using Mplus. The goodness-of-fit indexes for both groups together and each group separately should indicate a very good model fit.* Assuming configural invariance was in place across the class size and gender groups, the measurement and structural invariance tests for invariance of the factor loadings, intercepts, item uniqueness, and factor variances/covariances were conducted.

Measurement Invariance

The step-up, or forward, approach was used to test for factorial invariance in this study. This approach started by testing the least constrained solution (Model 0), with no invariance, and then imposed restrictions, creating nested models. The nested models were tested against each other to determine if there were significant chi-square differences. When testing for factorial invariance across gender groups, a significant difference in the chi-square of two models was found, at which point a new model was created to examine partial invariance. The model with partial invariance was used in the remaining invariance tests.
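The nested-model comparison described above can be sketched as a chi-square difference test. This is a minimal illustration in plain Python, assuming ordinary maximum likelihood chi-square values (robust estimators need a scaled difference instead). The helper names and the fit values in the example are hypothetical and are not results from this study.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if df < 1 or x < 0:
        raise ValueError("need df >= 1 and x >= 0")
    y = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-y) * sum_{k=0}^{df/2 - 1} y^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= y / k
            total += term
        return math.exp(-y) * total
    # Odd df: erfc(sqrt(y)) + exp(-y) * sum_{k=1}^{(df-1)/2} y^(k - 1/2) / Gamma(k + 1/2)
    total = math.erfc(math.sqrt(y))
    for k in range(1, (df - 1) // 2 + 1):
        total += math.exp(-y) * y ** (k - 0.5) / math.gamma(k + 0.5)
    return total


def chi_square_difference(chi2_restricted, df_restricted, chi2_free, df_free,
                          alpha=0.05):
    """Compare a more restricted model against the less restricted one."""
    delta_chi2 = chi2_restricted - chi2_free
    delta_df = df_restricted - df_free
    if delta_df <= 0 or delta_chi2 < 0:
        raise ValueError("the restricted model must have more df and a "
                         "chi-square at least as large")
    p_value = chi2_sf(delta_chi2, delta_df)
    # A significant difference argues against the added invariance constraints.
    return delta_chi2, delta_df, p_value, p_value < alpha


# Hypothetical Model 0 (no invariance) versus Model 1 (invariant loadings).
print(chi_square_difference(66.0, 26, 60.0, 22))
```

A non-significant difference means the added equality constraints do not worsen fit noticeably, so the more restricted (invariant) model is retained and the next set of constraints can be imposed.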
Although the chi-square difference was the predominant indicator of invariance, the ΔCFI was also considered as additional evidence, based on the suggested rule of rejecting invariance when ΔCFI < -.01. Using the step-up approach, the restriction of invariant factor loadings was first imposed on the baseline model (Model 1), and a judgment of weak measurement invariance was made based on the Δχ2. Next, the restriction of invariant factor loadings and invariant intercepts was imposed to determine strong measurement invariance (Model 2). This step was followed by imposing invariant factor loadings, invariant intercepts, and invariant item uniqueness to determine strict measurement invariance (Model 3). As mentioned above, if the Δχ2 for any of the nested models was statistically significant, some restrictions were removed to test for partial invariance. Once measurement invariance was tested, the model was tested for structural invariance.

Structural Invariance

Structural invariance involves imposing invariant factor loadings, invariant intercepts, and invariant factor variances and covariances. This model (Model 4) refers to invariance of factor variances and covariances. Model 4 was tested against Model 2 to determine structural invariance. Once factorial invariance was established, comparison of the variables across class size and gender groups was allowed.

MIMIC Group Comparisons Procedure

Multiple-indicator, multiple-cause (MIMIC) group code modeling was used to test hypotheses about differences among the groups on the constructs. Based on the testing for factorial invariance, the assumption of invariant factor loadings, item uniqueness, and factor variances/covariances across the two groups was met. The structural coefficients were examined for statistical significance.
Statistically significant regression coefficients were interpreted based on their sign (+/-) and the group coding. The magnitudes of any statistically significant coefficients, which reflect the differences between the population means for the two groups, were calculated to interpret the effect sizes of the group differences. The unstandardized estimates of the structural coefficients, γ, and the residual variances, VAR(ς), were used in the equation $\hat{d} = |\hat{\gamma}| / \sqrt{\widehat{VAR}(\varsigma)}$ to compute the effect sizes of the mean group differences on “Reading Strategy Instruction”, “Reading Attitude”, and “Reading Performance”. Judgments about the magnitude of the effect sizes were made based on Cohen’s (1988) guidelines: small effect size (d = .2), medium effect size (d = .5), and large effect size (d = .8).

Results

Model Identification

Using the formula [p(p + 1)]/2, where p = number of indicators, the number of known variance and covariance elements in the initial CFA model with 10 indicators was calculated as 55. The number of free parameters reported by Mplus was 33. This count includes the 10 item intercepts, which are estimated from the 10 observed means, so the known elements total 55 + 10 = 65. When the number of unknown elements was subtracted from the number of known elements (65 - 33), it was determined that this model is overidentified with df = 32, the degrees of freedom of the chi-square test reported for the initial CFA model.

Descriptive Statistics and Multivariate Normality Results

The descriptive statistics are presented in Table 2. The table includes distributions for the 10 individual items initially hypothesized to define the three reading strategy use constructs. The item means ranged from 1.90 to 2.91, and standard deviations ranged from .83 to 1.06. The skewness values were all less than 2.0 and kurtosis values were all less than 7.0 in absolute value. The acceptable skewness and kurtosis values, as well as the absence of outliers, indicated acceptable distributions of the variables for univariate normality. Visual inspection of the Q-Q Plots indicated normality, with most of the data points falling on the straight line.
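The univariate screening rule used here (skewness below 2.0 and kurtosis below 7.0 in absolute value) can be sketched as follows. This is an illustration only: it uses simple moment-based estimators with excess kurtosis, whereas SPSS reports bias-adjusted versions, so values will differ slightly in small samples. The function names and the example responses are hypothetical.

```python
def skewness_and_kurtosis(values):
    """Moment-based skewness and excess kurtosis (normal distribution -> 0, 0)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    if m2 == 0:
        raise ValueError("variable has no variance")
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def passes_univariate_screen(values, max_skew=2.0, max_kurtosis=7.0):
    """Apply the |skewness| < 2.0 and |kurtosis| < 7.0 cut-offs."""
    skew, kurt = skewness_and_kurtosis(values)
    return abs(skew) < max_skew and abs(kurt) < max_kurtosis


# Hypothetical responses to a four-point item.
responses = [1, 1, 2, 2, 2, 3, 3, 4, 4, 1, 2, 3]
print(skewness_and_kurtosis(responses), passes_univariate_screen(responses))
```

Four-point Likert-type items rarely exceed these cut-offs, which is consistent with the modest skewness and negative kurtosis values typical of such items.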
Table 2
Distributions of the Initial Items of the Three Reading Strategy Use Constructs (N = 4025)
______________________________________________________________________________
Construct / Item   Strategy                   Mean    SD     Skewness   Kurtosis
______________________________________________________________________________
Learning Strategies
  Item 1           Explain Cause              1.94    .97      .72       -.51
  Item 2           Explain Behavior           1.90    .98      .82       -.43
  Item 3           Explain Purpose            1.91    .98      .77       -.51
  Item 4           Explain Connections        2.28   1.06      .30      -1.14
Reading Attitude
  Item 5           Reading is Fun             2.12    .94      .47       -.66
  Item 6           Social/Communication       2.54    .97     -.13       -.97
  Item 7           Engagement                 2.26    .91      .11       -.91
Instructional Strategies
  Item 8           Explain Text               2.91    .83     -.29       -.61
  Item 9           Think Aloud                2.87    .88     -.29       -.77
  Item 10          Connect Prior Knowledge    2.72    .89     -.12       -.81
______________________________________________________________________________

CFA

The initial CFA model, illustrated in Figure 1, which tested the measurement portion of the structural equation model, indicated adequate model fit. The chi-square was statistically significant, χ2 (32) = 268.86, p < .001, and although the other fit indices were within the suggested range, RMSEA (.043), CFI (.980), and TLI (.971), the large number and values of the modification indices (MIs) suggested the possibility of improving the model fit by modifying the model.

Figure 1
Initial CFA Model
[Path diagram not reproduced.]
Figure 1. Model for three reading constructs: Reading Strategy Use (L), Reading Strategy Instruction (I), and Reading Attitudes (A). x1 through x10 are the indicators for each factor, and e1 through e10 indicate the item residuals.
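For readers who want to see how the RMSEA relates to the chi-square statistic, the following sketch recomputes it from the χ2, df, and sample size reported in Table 3. It assumes the common point-estimate formula with N − 1 in the denominator; some software uses N instead, which makes no difference at three decimals with a sample this large. The function name is hypothetical.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    if df <= 0 or n <= 1:
        raise ValueError("need df > 0 and n > 1")
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Values reported in Table 3 (N = 4025).
print(round(rmsea(268.862, 32, 4025), 3))  # initial CFA model
print(round(rmsea(49.459, 11, 4025), 3))   # reduced CFA model
```

Because the excess of χ2 over its df is divided by the sample size, a chi-square that is statistically significant in a sample of several thousand can still correspond to a small RMSEA.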
The decision about the order in which to remove individual indicators, in an attempt to improve model fit, was substantively based. Three additional tests of model fit were completed, each removing one indicator at a time and improving the overall model fit. As seen in Table 3, after three indicators were removed, the fourth model, which will be referred to as the reduced model, provided a better fit to the data. The chi-square was still statistically significant, χ2 (11) = 49.46, p < .001, but joint examination of the other fit indices indicated improvement in their values from the initial CFA model, RMSEA (.029), CFI (.994), and TLI (.988).

Table 3
Goodness-of-Fit Indexes of the Initial and Reduced CFA Models
______________________________________________________________________________
                                                                      90% CI
Model                  χ2         df    CFI    TLI    SRMR   RMSEA    LL    UL
______________________________________________________________________________
Initial CFA Model      268.862*   32    .980   .971   .028   .043     .04   .05
Reduced CFA Model       49.459*   11    .994   .988   .016   .029     .02   .04
______________________________________________________________________________
Note: CFI = Comparative Fit Index; TLI = Tucker–Lewis index; SRMR = Standardized Root Mean Square Residual; RMSEA = Root Mean Square Error of Approximation; CI = Confidence Interval; LL = Lower Limit; UL = Upper Limit. *p < .001, (n = 4025).

Unstandardized factor loadings were examined to determine if the parameter estimates are proper solutions and make substantive sense. These values can be seen on the one-way arrows from the three latent factors to their indicators in Figure 2.
The statistically significant standardized coefficients associated with the two-way arrows connecting “Reading Strategy Use” and “Reading Strategy Instruction”, “Reading Strategy Use” and “Reading Attitude”, and “Reading Attitude” and “Reading Strategy Instruction” represent the estimates of the correlations between the constructs (r = -.33, p < .001; r = -.26, p < .001; r = .28, p < .001, respectively).

NOTE* I could not figure out how to make the two-way arrows in PowerPoint or Word. The only option I found was a two-headed connector, but I couldn’t get it shaped correctly. So, the model has the correlation estimates but is missing the two-way arrows.

Figure 2
Reduced Three Factor Confirmatory Factor Analysis Model
[Path diagram not reproduced.]
Figure 2. Model for three reading constructs: Reading Strategy Use (L), Reading Strategy Instruction (I), and Reading Attitudes (A). x4, x5, and x8 were eliminated from the initial CFA model to obtain better data fit.

Table 4 provides the parameter estimates for the structural coefficients, including the estimates of the regression slopes, standard errors, squared factor loading values, and residual variances. All parameter estimates were statistically significant (p < .001), with critical ratios ranging from 4.799 to 102.683. No indicator of ill fit was found, and a good overall fit of the CFA model was concluded. The squared values of the factor loadings (R2) were examined to determine the proportion of variance in the indicators explained by their respective factors.
Use of reading strategies explains 33.80% of the variability in students’ reports of explaining the causes of events to better understand what they have read, 58.90% of the variability in students’ reports of explaining characters’ behavior to better understand what they have read, and 41.50% of the variability in students’ reports of explaining the author’s purpose to better understand what they have read. Reading attitude explains 47.10% of the variability in students’ reports of enjoying talking about books, and 72.00% of the variability in students’ reports of enjoying getting new books from the library or bookstore. Reading strategy instruction explains 69.90% of the variability in students’ reports of their teacher’s use of “Think Aloud” to model the use of reading comprehension strategies, and 48.70% of the variability in students’ reports of their teacher modeling and prompting students to connect prior knowledge while reading.

Table 4
Reduced CFA Model Standardized Factor Loadings, Standard Errors, and Residual Variances by Construct (Items 4, 5, and 8 removed)

| Construct/Indicator | Estimation of Regression Slope | Standard Error | Squared Factor Loading Value | Residual Variance |
|---|---|---|---|---|
| Learning Strategies | | | | |
| Explain Cause | .62 | .01 | .338 | .61 |
| Explain Behavior | .77 | .01 | .589 | .41 |
| Explain Purpose | .64 | .01 | .415 | .59 |
| Reading Attitude | | | | |
| Social/Communication | .69 | .02 | .471 | .53 |
| Engagement | .85 | .03 | .720 | .28 |
| Instructional Strategies | | | | |
| Think Aloud | .83 | .02 | .696 | .30 |
| Connect Prior Knowledge | .70 | .02 | .487 | .51 |

Note. All values were statistically significant (p < .001). Critical ratios of the values ranged from 28.741 to 58.98.
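The relationship between a standardized loading and the variance it explains can be sketched in a few lines. This is a minimal illustration, assuming the standard CFA identity that R2 equals the squared standardized loading and that the standardized residual variance equals 1 − R2. The loadings are the two-decimal values reported in Table 4, so the recomputed figures need not match the table's reported entries exactly. The function name is hypothetical.

```python
# Standardized loadings as reported in Table 4 (rounded to two decimals).
loadings = {
    "Explain Cause": 0.62,
    "Explain Behavior": 0.77,
    "Explain Purpose": 0.64,
    "Social/Communication": 0.69,
    "Engagement": 0.85,
    "Think Aloud": 0.83,
    "Connect Prior Knowledge": 0.70,
}


def variance_explained(loading: float) -> tuple[float, float]:
    """Return (R^2, residual variance) for one standardized loading.

    Assumes a standardized solution, where the indicator variance is 1,
    so the residual variance is simply 1 - R^2.
    """
    r_squared = loading ** 2
    return r_squared, 1.0 - r_squared


for indicator, loading in loadings.items():
    r_squared, residual = variance_explained(loading)
    print(f"{indicator:<25} R2 = {r_squared:.3f}  residual = {residual:.3f}")
```

Recomputing from the rounded loadings is a quick consistency check on a reported table; any large gap between a recomputed and a reported value is worth verifying against the original software output.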
The correlation between the Reading Strategy Use and Reading Attitude constructs is r = -.26; the correlation between the Reading Strategy Use and Reading Strategy Instruction constructs is r = -.33; and the correlation between the Reading Attitude and Reading Strategy Instruction constructs is r = .28.

Structural Regression Results

A joint examination of the goodness-of-fit indices suggests a good model fit. The chi-square statistic was significant, χ2 (15) = 137.63, p < .001; however, because of its sensitivity to sample size, additional fit indices were examined. The other fit indices indicated a good model fit: RMSEA (.045) was below the recommended .05, and the CFI (.983) and TLI (.968) values were both above .95. As seen in Figure 3, the structural coefficients for all paths connected to “Reading Performance” were statistically significant, indicating marked relationships between “Reading Strategy Use”, “Reading Attitude”, “Reading Strategy Instruction”, and “Reading Performance”. The expected directions of the parameters and their statistical significance provided additional substantive support for the model fit. Unstandardized estimates of the structural coefficients, residual variances, and effect sizes for the three latent constructs and performance are provided in Table 5.

The structural coefficient for the path from “Reading Strategy Use” to “Reading Performance” (γ1 = -.09, p < .001) is statistically significant. Given the assigned values of the survey response options, the negative sign of this coefficient indicates that students who reported using more reading comprehension strategies had higher reading performance. The structural coefficient for the path from “Reading Strategy Instruction” to “Reading Performance” is also statistically significant (γ2 = .06, p < .01).
Given the assigned values of the survey response options, this coefficient indicates that students who reported a higher frequency of reading comprehension strategy instruction demonstrated higher reading performance. Likewise, the structural coefficient for the path from “Reading Attitude” to “Reading Performance” was statistically significant (γ3 = .43, p < .001). Given the assigned values of the survey response options, this coefficient indicates that students who reported a better attitude toward reading had higher reading performance. Indirect effects were tested in the model; however, none were found to be statistically significant. The overall results of the structural regression support the hypothesized structural equation model and contain valuable information regarding reading comprehension strategies. Further analysis of the structural equation model will examine the data for class size and gender differences in the variables of interest, once factorial invariance is verified.

Table 5
Unstandardized Estimates of the Structural Coefficients, Residual Variances, and Effect Sizes for the Three Latent Constructs and Performance

| Variable | Structural Coefficient | Residual Variance | Effect Size |
|---|---|---|---|
| Reading Attitude | .54 | .48 | .78 |
| Instructional Strategies | .07 | .61 | .09 |
| Reading Performance | 26.89 | 7815.52 | .30 |

Note. The structural coefficients are shown as absolute values.
Configural Invariance

Because the results were identical when the baseline models of class size and gender were tested for both groups together and for each group separately, only one set of goodness-of-fit indices (Table 6) and one set of unstandardized and standardized factor loadings (Tables 7 and 8) can be provided for the class size and gender baseline models. Because no separate model results were obtained for each group, configural invariance cannot be determined. The goodness-of-fit indices for the class size and gender baseline models indicated a good fit for each. Mplus results included the χ2 contributions for each group, which are included with the goodness-of-fit indices.

Table 6
Goodness-of-Fit Indexes for the Baseline Model of Class Size and Gender Groups

| Model | χ2 | df | CFI | TLI | SRMR | RMSEA | 90% CI LL | 90% CI UL |
|---|---|---|---|---|---|---|---|---|
| Class Size | 58.102* | 22 | .994 | .989 | .017 | .029 | .020 | .038 |
| Small | 14.153 | | | | | | | |
| Large | 43.950 | | | | | | | |
| Gender | 53.101* | 22 | .995 | .990 | .016 | .027 | .017 | .036 |
| Female | 35.167 | | | | | | | |
| Male | 17.934 | | | | | | | |

Note: CFI = Comparative Fit Index; TLI = Tucker–Lewis index; SRMR = Standardized Root Mean Square Residual; RMSEA = Root Mean Square Error of Approximation; CI = Confidence Interval; LL = Lower Limit; UL = Upper Limit. *p < .001 (n = 4).
Table 7
Standardized Factor Loadings and Standard Errors by Small and Large Class Size

| Construct/Indicator | Large Class Size SFL | Large Class Size SE | Small Class Size SFL | Small Class Size SE |
|---|---|---|---|---|
| Learning Strategies | | | | |
| Explain Cause | .63 | .02 | .62 | .02 |
| Explain Behavior | .77 | .02 | .77 | .02 |
| Explain Purpose | .65 | .02 | .64 | .02 |
| Reading Attitude | | | | |
| Social/Communication | .65 | .03 | .73 | .03 |
| Engagement | .87 | .04 | .83 | .04 |
| Instructional Strategies | | | | |
| Think Aloud | .83 | .03 | .85 | .03 |
| Connect Prior Knowledge | .68 | .03 | .72 | .03 |

Note. All values were statistically significant (p < .001). Critical ratios of the values ranged from 28.741 to 58.98. The correlations between the Reading Strategy Use and Reading Attitude constructs are -.25 and -.25; the correlations between the Reading Strategy Use and Reading Strategy Instruction constructs are -.33 and -.36; and the correlations between the Reading Attitude and Reading Strategy Instruction constructs are .26 and .29 for the small and large class size groups, respectively.

Table 8
Standardized Factor Loadings and Standard Errors by Gender

| Construct/Indicator | Male SFL | Male SE | Female SFL | Female SE |
|---|---|---|---|---|
| Learning Strategies | | | | |
| Explain Cause | .65 | .02 | .60 | .02 |
| Explain Behavior | .76 | .02 | .78 | .02 |
| Explain Purpose | .65 | .02 | .64 | .02 |
| Reading Attitude | | | | |
| Social/Communication | .70 | .03 | .63 | .04 |
| Engagement | .78 | .04 | .88 | .05 |
| Instructional Strategies | | | | |
| Think Aloud | .84 | .03 | .82 | .03 |
| Connect Prior Knowledge | .70 | .03 | .71 | .03 |

Note. All values were statistically significant (p < .001). Critical ratios of the values ranged from 28.741 to 58.98.
Measurement and Structural Invariance

The results of the tests for measurement and structural invariance among the class size and gender groups are shown in Table 9 and Table 10, respectively. As noted in Table 9, the first test for factorial invariance among the class size groups indicated a nonsignificant chi-square difference between Model 1 and Model 0, Δχ2 (4) = 1.975, p > .05, thus indicating invariant factor loadings across the two groups. The chi-square difference between Model 2 and Model 1 is also not statistically significant, Δχ2 (4) = 5.658, p > .05, indicating that the intercepts are also invariant across the two groups. The statistically nonsignificant chi-square difference between Model 3 and Model 2, Δχ2 (7) = 13.689, p > .05, indicates invariant item uniqueness across the two class size groups. The determination of invariant factor loadings, intercepts, and item uniqueness indicates strict measurement invariance between the two class size groups. Further, structural invariance was identified by the nonsignificant chi-square difference between Model 4 and Model 2, Δχ2 (6) = 6.045, p > .05, verifying the invariance of factor variances and covariances in this model.
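The nested-model comparisons for the class size groups can be reproduced with a short sketch. It takes the model chi-square values and degrees of freedom reported in Table 9, subtracts them, and compares each difference with the standard upper-tail critical value of the chi-square distribution at α = .05. The function and variable names are hypothetical, and the lookup table of critical values stands in for the exact p-values a statistics package would report.

```python
# Upper-tail critical values of the chi-square distribution at alpha = .05,
# keyed by degrees of freedom (standard table values).
CRITICAL_05 = {2: 5.991, 4: 9.488, 6: 12.592, 7: 14.067}

# (chi-square, df) for the class size models, as reported in Table 9.
models = {
    "M0": (58.102, 22),
    "M1": (60.077, 26),
    "M2": (65.735, 30),
    "M3": (79.424, 37),
    "M4": (71.780, 36),
}

# (more restricted model, comparison model) pairs used in the text.
comparisons = [("M1", "M0"), ("M2", "M1"), ("M3", "M2"), ("M4", "M2")]


def chi_square_difference(restricted: str, baseline: str):
    """Return (delta chi-square, delta df, significant at .05)."""
    chi_r, df_r = models[restricted]
    chi_b, df_b = models[baseline]
    delta_chi = round(chi_r - chi_b, 3)
    delta_df = df_r - df_b
    significant = delta_chi > CRITICAL_05[delta_df]
    return delta_chi, delta_df, significant


for restricted, baseline in comparisons:
    delta_chi, delta_df, significant = chi_square_difference(restricted, baseline)
    verdict = "significant" if significant else "not significant"
    print(f"{restricted}-{baseline}: delta chi2({delta_df}) = {delta_chi:.3f}, {verdict}")
```

A nonsignificant difference means the added equality constraints do not worsen fit appreciably, which is the basis for the invariance conclusions drawn in this section.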
Table 9
Testing for Factorial (Measurement and Structural) Invariance Across Class Size Groups

| Model | χ2 | df | Model Comparison | Δχ2 | Δdf | CFI | ΔCFIa | RMSEA |
|---|---|---|---|---|---|---|---|---|
| M0 | 58.102 | 22 | | | | .994 | | .029 |
| M1 | 60.077 | 26 | M1–M0 | 1.975 | 4 | .995 | .001 | .026 |
| M2 | 65.735 | 30 | M2–M1 | 5.658 | 4 | .994 | -.001 | .024 |
| M3 | 79.424 | 37 | M3–M2 | 13.689 | 7 | .993 | -.000 | .024 |
| M4 | 71.780 | 36 | M4–M2 | 6.045 | 6 | .994 | .000 | .022 |

Note: χ2 = conventional chi-square fit statistic (under maximum-likelihood estimation); CFI = comparative fit index; RMSEA = root mean square error of approximation; M0 = baseline model (no invariance imposed); M1 = invariant factor loadings; M2 = invariant factor loadings and invariant intercepts; M3 = invariant factor loadings, partially invariant intercepts, and invariant residual variances; M4 = invariant slopes, partially invariant intercepts, and invariant factor variances and covariances. a. ΔCFI ≤ −0.01 signals lack of invariance targeted by the respective comparison of nested models. *p < .05. **p < .01.

The results of the second set of tests for factorial invariance, in Table 10, indicate strong measurement invariance and structural invariance for the gender groups. The chi-square difference between Model 1 and Model 0 is not statistically significant, Δχ2 (4) = 6.519, p > .05, thus indicating invariance of the factor loadings across the two groups. The chi-square difference between Model 2 and Model 1 is statistically significant, Δχ2 (4) = 31.432, p < .05, which indicates that the intercepts are not completely invariant across genders. After examination of the modification indices (MIs), a decision was made, based on substantive reasoning, to free the parameters with MIs higher than 10 one at a time. Model 2 was modified first by freeing the intercept for Item 6.
Because the Δχ2 was still statistically significant, the intercept for Item 3 was also freed. After freeing the intercept for Item 3, the chi-square value for Model 2P dropped from 90.691 to 63.957, making the chi-square difference between Model 2P and Model 1 no longer statistically significant, Δχ2 (2) = 4.698, p > .05. Therefore, there are invariant factor loadings and invariant intercepts across the two groups, except for the intercepts of two indicators (Item 3 and Item 6). The chi-square difference between Model 3 and Model 2P was not statistically significant, Δχ2 (7) = 12.283, p > .05, thus indicating invariance of item uniqueness across gender. Lastly, the chi-square difference between Model 4 and Model 2P in the test for structural invariance was not statistically significant, Δχ2 (6) = 3.459, p > .05, thus indicating invariant factor variances and covariances.

Table 10
Testing for Factorial (Measurement and Structural) Invariance Across Gender Groups

| Model | χ2 | df | Model Comparison | Δχ2 | Δdf | CFI | ΔCFIa | RMSEA |
|---|---|---|---|---|---|---|---|---|
| M0 | 53.101 | 22 | | | | .995 | | .027 |
| M1 | 59.259 | 26 | M1–M0 | 6.519 | 4 | .995 | .000 | .025 |
| M2 | 90.691 | 30 | M2–M1 | 31.432* | 4 | .990 | -.005 | .032 |
| M2P | 63.957 | 28 | M2P–M1 | 4.698 | 2 | .994 | -.001 | .025 |
| M3 | 76.240 | 35 | M3–M2P | 12.283 | 7 | .993 | -.001 | .024 |
| M4 | 67.416 | 34 | M4–M2P | 3.459 | 6 | .994 | .000 | .022 |

Note: χ2 = conventional chi-square fit statistic (under maximum-likelihood estimation); CFI = comparative fit index; RMSEA = root mean square error of approximation; M0 = baseline model (no invariance imposed); M1 = invariant factor loadings; M2 = invariant factor loadings and invariant intercepts; M3 = invariant factor loadings, partially invariant intercepts, and invariant residual variances; M4 = invariant slopes, partially invariant intercepts, and invariant factor variances and covariances. a. ΔCFI ≤ −0.01 signals lack of invariance targeted by the respective comparison of nested models. *p < .05.

Overall, the results of testing for factorial invariance indicate invariance of form, factor loadings, intercepts, residual variances, and factor variances and covariances for the class size groups. For the gender groups, the results indicate invariance of form, factor loadings, residual variances, and factor variances and covariances, and partial invariance of item intercepts. The overall results offer a dependable framework for testing class size and gender differences on the reading strategy constructs.

MIMIC Group Comparisons

Based on the results of testing for measurement and structural invariance, it is assumed that the MIMIC assumption of invariant factor loadings, item uniqueness, and factor variances and covariances across the two groups has been met. For the model in Figure 3, joint examination of the goodness-of-fit indices suggests a good model fit despite the statistically significant chi-square, χ2(27) = 160.59, p < .001. RMSEA (.035) was below the recommended .05, and the CFI (.983) and TLI (.965) values were both above .95.

The Mplus results again reported the correlations between the constructs, as previously described in the structural regression results. None of the structural coefficients associated with the two class size groups were statistically significant, indicating no differences on any of the factors based on class size. The structural coefficient for the path from gender to “Reading Strategy Use” (γ1 = .03, p = .08) is not statistically significant, indicating that females and males did not differ in their reported use of reading comprehension strategies.
The statistically significant structural coefficient for the path from gender to “Reading Strategy Instruction” (γ2 = -.05, p < .05) indicates that the two groups differ on “Reading Strategy Instruction”. Given the group coding (0 = female, 1 = male), the negative sign of this coefficient indicates that females reported a higher amount of reading strategy instruction than males. The effect size calculated using the equation previously mentioned, .54/.69 = .78, indicates a moderate to high magnitude in the difference between females and males for “Reading Strategy Instruction”. The statistically significant structural coefficient for the path from gender to “Reading Attitude” (γ3 = -.36, p < .001) indicates that the two groups also differ on “Reading Attitude”. Given the group coding (0 = female, 1 = male), the negative sign of this coefficient indicates that females have a better attitude toward reading than males. The effect size calculated using the previously mentioned equation, .07/.78 = .09, indicates a moderate to small magnitude in the difference between females and males for “Reading Attitude”. The statistically significant structural coefficient for the path from gender to “Reading Performance” (γ4 = -.15, p < .001) indicates that the two groups differ on “Reading Performance”. Again, based on the group coding (0 = female, 1 = male), the negative sign of this coefficient indicates that females performed higher on the reading assessment than males. The effect size calculated using the previously mentioned equation, 26.89/88.41 = .30, indicates a small to medium magnitude in the difference between females and males for “Reading Performance”.
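The three ratios quoted above can be checked with a brief sketch. It assumes that the "previously mentioned" equation divides the absolute unstandardized structural coefficient by the square root of the corresponding residual variance in Table 5. That reading is inferred from the numbers (for example, √.48 ≈ .69) rather than stated in this section, and the function name is hypothetical.

```python
import math

# (absolute unstandardized coefficient, residual variance) pairs from Table 5,
# in the order the ratios are quoted in the text.
estimates = [
    (0.54, 0.48),
    (0.07, 0.61),
    (26.89, 7815.52),
]


def effect_size(coefficient: float, residual_variance: float) -> float:
    """Assumed formula: |coefficient| / sqrt(residual variance)."""
    return abs(coefficient) / math.sqrt(residual_variance)


for coefficient, residual_variance in estimates:
    denominator = math.sqrt(residual_variance)
    d = effect_size(coefficient, residual_variance)
    print(f"{coefficient} / {denominator:.2f} = {d:.2f}")
```

Under this assumption the sketch reproduces the reported effect sizes of .78, .09, and .30.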
[Figure 3: path diagram showing the latent constructs L (Reading Strategy Use), A (Reading Attitude), and I (Reading Strategy Instruction) with their indicators X1, X2, X3, X6, X7, X9, and X10 and error terms; the observed variable P (Reading Performance); and the grouping variable G (Gender; 0 = Female, 1 = Male). Path coefficients shown to Reading Performance: γ1 = -.09, γ2 = .06, γ3 = .43. Path coefficients shown from Gender: γ1 = .03, γ2 = -.05, γ3 = -.36, γ4 = -.15.]

Figure 3. Reduced CFA Model for Three Hypothesized Constructs (Reading Strategy Use, Reading Attitude, and Reading Strategy Instruction), One Observable Variable (Reading Performance), and One Grouping Variable (Gender)

Discussion

In response to the first research question, ‘Are there significant relationships between the three latent constructs, “Reading Strategy Use”, “Reading Strategy Instruction”, and “Reading Beliefs”?’, the results indicated statistically significant relationships between all three of the latent constructs. The implications of these results are numerous, and many of them are quite easy to ascertain. For instance, because all three areas are correlated, strategy use, instruction, and beliefs should all be considered when planning changes in one area. Subsequent changes in other areas may be necessary, or changes in one area may also produce changes in another area. If this correlation is accounted for when designing instruction, elements of each construct could be used concurrently to allow for more flexible and interesting instruction.
For example, since reading strategy use and instruction are correlated, teachers who would like to see change in students’ use of reading strategies should focus not only on how they can change the student but also on how they can change their own reading strategy instruction. Making changes in their teaching is likely to produce greater changes in students’ reading strategy use than attempting to change the student alone.

Also in relation to the first research question, when the structural coefficients associated with the specific indicators were examined, the highest correlation between any indicator and its associated factor was for students’ reports of their teacher’s use of the ‘think aloud’ instructional strategy. One possible interpretation of this result is that students are more aware of the teacher’s reading comprehension strategy instruction with the ‘think aloud’ strategy because it is more obvious and explicit than some other strategies, such as helping students make connections in the text. The ‘think aloud’ strategy involves a teacher verbalizing their active use of reading comprehension strategies while reading with the students. This possible interpretation offers additional support for the effectiveness of explicit reading comprehension strategy instruction.

The results related to the second research question, ‘Are there significant relationships between the three latent constructs and “Reading Performance”?’, indicate statistically significant relationships between all three factors and reading performance. The implications of these results are similar to those for the first question: it was already established that the three constructs are all correlated, and “Reading Performance” is now shown to be related to all three factors.
As mentioned previously, one of the benefits of correlation among all of the variables is the possibility of using changes in one factor to complement changes in another factor.

The results related to the third research question, ‘Are there significant differences in class size or gender in “Reading Strategy Use”, “Reading Attitudes”, “Reading Strategy Instruction”, or “Reading Performance”?’, offer a great deal of useful information. Statistically significant differences were found between females and males on all variables except self-reported use of reading strategies. The lack of differences in students’ reported use of reading strategies may also be related to the need for more explicit instruction. Perhaps students use reading comprehension strategies more than they realize, but they are not as aware of this use because they have not received explicit instruction teaching them the nature, benefits, and proper use of each individual reading comprehension strategy.

The gender results of this analysis are interesting in that the significantly higher performance, awareness of reading instruction, and attitudes of females compared to males in a sample this large could possibly be interpreted as support for the age-old belief that reading is a “feminine” activity. This is an area that may need further investigation. If this belief is still commonplace in our society, it may partly explain the statistically significantly lower attitudes of males toward reading found in this investigation. As other results of this study indicated a statistically significant relationship between attitude and performance, it is possible that efforts made to positively impact the attitudes of males toward reading will also positively impact males’ reading performance.

Conclusion

The integrated results of this study are one example of the many benefits of the multifaceted nature of SEM.
The ease of combining the interpretation of results from different elements of the analysis provides a more meaningful and complete picture of the nature of reading strategy use, instruction, and reading attitude, and subsequently allows more meaningful conclusions to be drawn. The results of this study support the logic of the hypothesis even more than was anticipated, offering positive reassurance of the need to continue work on the reading strategy assessment and intervention project. The statistically significant relationship between reading strategy use and reading performance supports the need for an assessment/intervention designed to help teachers become more aware of the strategies students already know, as well as how effectively they are using them.

Another goal of the assessment/intervention project is to help students become more aware of, and engaged in monitoring, their use of reading comprehension strategies. The finding that females and males differed significantly on all variables except the self-reported use of reading strategies may lend some credence to the notion that students need a more explicit understanding of whether and how they are actually using reading comprehension strategies to support their own understanding of text.

As defined by PISA, “Reading is the skill to understand, use and think about written texts. This skill is needed to reach one’s goals, to develop one's knowledge and potential, and to take part in society.” In recent years, the importance of reading comprehension strategy instruction has become more widely recognized in reading instruction at all age and grade levels. By helping students understand how these flexible tools work, teachers enable readers to tackle challenging texts with greater independence. The underlying assumption is that the processes or skills induced by the strategies become more automatic with practice.
Strategies provide the means to tackle complex problems in more efficient ways and, with practice, the strategies lead to skills that eventually become automatic.