classroom research report


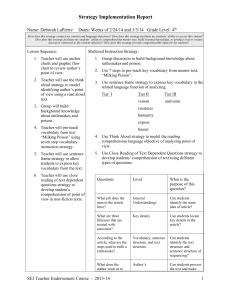

Which Test Works? A Different Assessment for ELL Students

Abby Boughton
Shuford Elementary, Third Grade

Background/Introduction

I chose this study because I was frustrated with my ELL students’ selection test scores after providing reading instruction from our basal series. Throughout the week, my ELL students would be actively involved in discussions and able to demonstrate knowledge of the skills practiced through workbook pages and other activities. At the end of the week, however, they scored below average on, or failed, the selection test given on the story. Throughout instruction I included the same types of questions students would have to answer on the selection test, to expose them to the different question types. Vocabulary activities, background knowledge information, and different methods of instruction were provided each day. Since they were demonstrating comprehension throughout the week, I thought an alternate assessment might allow them to show their ability better than the regular multiple choice selection test. I was also concerned because the ESL teachers informed me that two of my ELL students were causing problems when going to ESL class, and these students also showed a lack of interest during independent reading time in class, which led to another question to research.

One study I reviewed used two specific tests: the Woodcock-Johnson Language Proficiency Battery-Revised (WJLPB-R) Passage Comprehension test and the Diagnostic Assessment of Reading Comprehension (DARC). I found this study interesting not because I gave my students these particular tests, but because their content related to the content of the tests I used to assess my students. The WJLPB-R uses a cloze procedure to examine the ability to understand information read silently.
The examinee reads a sentence or short passage from which individual words have been omitted, then provides the most appropriate word to fill in the blank given the meaning of the sentence or passage. The DARC requires children to read a passage and answer 30 true/false questions about the information provided in the story. The questions are designed to assess the students’ background knowledge, memory for the text, ability to form inferences based on information provided in the text, and ability to form inferences that require integration of information presented in the text with information known from background knowledge (Preciado, Horner, & Baker, 2009). In short, the DARC places a premium on verbal processing of information and not simply on verbal knowledge as reflected in measures of vocabulary (Preciado, Horner, & Baker, 2009). I was interested in this research because I wanted to know whether my assessment tools really allowed my students to show what they know. When interviewing my students, I asked them different types of questions, including true/false and cloze sentences, which they were able to answer correctly. Their lack of vocabulary didn’t interfere with showing their knowledge about the story, which showed me their ability to comprehend it.

Martinez, Aricak, and Jewell (2008) point out that researchers have noted that students develop poor or good attitudes toward reading only after repeated failure or success, respectively, with reading (qtd. in Swanson, 1985). They also point out that, according to social cognitive theory, the feedback students receive from peers and adults as a result of reading well or reading poorly shapes their own attitudes and beliefs about reading (qtd. in Bandura, 1986). In addition, they state that students’ attitudes toward reading develop across time as a result of specific reading experiences and their beliefs about the outcomes of those experiences (qtd. in McKenna, Kear, & Ellsworth, 1995). Their study was conducted with fourth grade students, who were given Curriculum-Based Measurement reading tasks and the Elementary Reading Attitude Survey, and then a high-stakes statewide reading assessment. They reported that although reading attitude and reading achievement were not related in the early elementary grades (grades 1-2), both had causal paths to reading achievement five years later (grade 7) (Martinez, Aricak, & Jewell, 2008). I found this interesting because my students are showing and maintaining an interest in reading, along with average success. One of my ELL students, however, is reading below grade level and is having the most difficulty in reading. I’m fearful that if her achievement level doesn’t increase, she might have a negative attitude toward reading for many years to come.

This research made me ask myself why I don’t do this for my students. Time? Mandated curriculum?

Toohey (2007) conducted a study with a fifth grade student, Aman, who came from India and was in a 4/5 combination classroom. The student was given a passage with multiple choice questions to assess her comprehension. She didn’t answer the questions correctly, which raised many questions for the researcher. Her rating was “not yet within expectations,” which gave the teacher no direction for instruction. The researcher then examined her free writing notebook and reached a different conclusion about this student’s comprehension ability. Children indicated (and created) alliances and conflicts with other children through their writing. Humorous stories were highly valued, and writers who produced such stories were coaxed by others into reading their work often (Toohey, 2007). In this classroom the students wrote to each other and to the teacher. Like other students, Aman was learning to write through guided participation in writing, using the cultural tools of her community: plot lines, characters, dialogue, and so on (Toohey, 2007).
This study shows me that a formal assessment doesn’t necessarily give the best demonstration of a student’s ability. That is why I had my students write me a summary of the story as one type of assessment. I think I have been pulled away from looking at other means of assessing my students because I teach in a testing grade, where students are required to pass a statewide mandated test. This study gave me another idea to explore further in my future writing and reading instruction.

Although the article by Lenski, Ehlers-Zavala, Daniel, and Sun-Irminger (2006) wasn’t a research study, it provided some examples of ways to assess ELL students, and I included it because it gave me useful information when planning my alternate assessments. The authors recommend offering students opportunities to show and practice knowledge in non-language-dependent ways through Venn diagrams, charts, drawings, mind maps, or PowerPoint presentations (Lenski, Ehlers-Zavala, Daniel, & Sun-Irminger, 2006).

My Research Questions

1. What are the effects of different informal assessments on ELL students’ ability to demonstrate their reading comprehension?
2. What are the effects of different informal assessments on ELL students’ ability to demonstrate their achievement?
3. How are ELL students’ attitudes toward reading affected by using different forms of assessment?

Participants

I chose the 4 ELL students out of the 20 students in my third grade class. Three of the four ELL students read on grade level, and one reads one grade level below, according to the Accelerated Reader Program implemented in my school. All four students’ families speak only Spanish at home, and the students receive little help at home. All come from middle- to lower-class families living on one parent’s income. Anai has older siblings who help her at home if needed. None have been retained. Yobany, Cinthia, and Anai are all earning a C in Reading; Beverly is earning a D.

Instruction Procedures

Each week for four weeks, I followed the instruction in the Scott Foresman Reading Street series.
I would have conducted my study longer, but EOG (End of Grade Test) prep took over that block of time (principal mandated). I would have liked more time to try other types of assessments. Each week a new skill and the vocabulary found in the story were taught, and the story was read several times in different ways. I thought the stories were at an appropriate level for the students to read and comprehend. They were leveled according to the Accelerated Reader Program, which I believe levels them accurately by difficulty. I gave all students the same guided instruction during the 45-minute block allotted in the day. During the week, students completed workbook pages that allowed practice of vocabulary and of the specific skill addressed in our Standard Course of Study. At the end of the week, students were given a selection test that assessed vocabulary, comprehension, and the skill addressed. It was a multiple choice test with three free-write questions, for a total of 20 questions. I gave my ELL students a different form of assessment first, and then had them take the basal selection test so I could compare the results.

Data Collection Methods/Data Analysis Procedure

To answer the question about students’ ability to demonstrate comprehension, I collected student samples of the alternate assessment and the basal test to compare. At the beginning of the study, I also gave students a survey asking how well they thought they showed their knowledge of the content through the selection test. At the end, I gave them the same survey so they could rate how well they thought they showed their knowledge after being assessed in different ways. To answer the question about students’ ability to demonstrate achievement, I sent home a parent survey asking what parents thought about their child’s reading ability and interest in reading. I also gave students a rating scale to indicate which assessment they liked taking best.
I recorded students’ test scores from the selection tests and the alternate assessments on a spreadsheet, and organized the survey information in a table. I then graphed the results of each story’s selection test and alternate assessment. To conduct the interviews, I sat at my computer in a quiet place in the room and typed students’ responses while they talked. They all liked that except Yobany.

Results

My students were not consistent in their selection test scores. Overall, their scores show similar results, with no significant increase or decrease. Their achievement levels did not seem to be directly affected by these assessments; they showed about the same level of achievement as before.

Test #1

On the first test, Anai scored the same on both assessments. Yobany, Cinthia, and Beverly showed some improvement in their ability to show their comprehension through the interview compared with the selection test.

[Figure: Test #1, basal test vs. interview scores for Yobany, Anai, Cinthia, and Beverly]

Test #2

Cinthia demonstrated comprehension better through the basal test than through the summary. Yobany and Beverly both demonstrated comprehension of the text better through their written summaries. Anai scored the same on both assessments.

[Figure: Test #2, basal test vs. written summary scores for Yobany, Anai, Cinthia, and Beverly]

Test #3

Here the students scored poorly when using the Venn diagram to show their comprehension (maybe a poor choice on my part).

[Figure: Test #3, basal test vs. Venn diagram scores for Yobany, Anai, Cinthia, and Beverly]

Test #4

Here students were given the choice of which assessment to take: a summary, a Venn diagram, an interview, or cloze sentences. Students already knew what cloze sentences were from the interviews, because I showed them the sentences on the computer while I was typing their responses. Yobany chose the summary, and Beverly, Cinthia, and Anai chose the interview.
Yobany scored well on the written summary, which was a little surprising because, as he indicated on his interest survey, he does not like to write. The girls love to talk and picked the interview, which wasn’t a surprise to me, even though Cinthia and Beverly didn’t score very high.

[Figure: Test #4, basal test vs. chosen assessment scores for Yobany, Anai, Cinthia, and Beverly]

Student Survey

From their surveys, I found it interesting to see which alternate assessment each student preferred. I assumed the Venn diagram wasn’t going to be a favorite, and I knew that since Anai and Cinthia are both a little chatty, they would like the interview best.

[Figure: Student survey ratings of the Venn diagram, summary, interview, and cloze sentences for Yobany, Anai, Cinthia, and Beverly]

Parent Surveys

From the parent surveys, I found that Yobany’s and Anai’s families rated their child’s reading ability as average (2), and that Cinthia’s and Beverly’s families rated it as above average (3). Comparing these ratings with how interested in reading the parents thought their children were was also interesting. Yobany’s interest rating was the same as his ability rating (2), Anai’s interest in reading was rated higher than her ability, and Beverly’s interest was rated lower than her ability. It was interesting to see how these families interpret their child’s reading ability, even after conferences and report cards.

[Figure: Parent survey ratings of reading ability and reading interest for Beverly, Yobany, Anai, and Cinthia]

Discussion

I was quite disappointed in my research. Going into it, I thought I would find the best alternate assessment, one that my ELL students would do great on and that would show their comprehension better than the selection test. I quickly realized that wasn’t the case. Because EOG prep limited my research to four weeks, I didn’t collect enough data to analyze and use as a true demonstration of their ability to comprehend a text. Providing alternate assessments just allowed them to show what they knew in different ways.
Each way had distinct requirements, although I don’t think each was equivalent to the selection test. The basal selection test asks many questions about the story, providing a clear way of grading. I found it difficult to score my students’ work accurately, knowing I was comparing it to the basal selection test. Because I teach in a testing grade, my mind is wired to think about the kinds of questions students will be asked, and I have a hard time separating the two when assessing student understanding. These students are generally performing at the level of an average third grader, except for Beverly, who is performing below grade level (based on reading grades). The ESL teachers shared that they see the same type of performance, and that my results were fairly accurate according to the work the students do with them as well. In an ideal world, these students would receive more specialized instruction according to their needs and abilities. I try to work more with them on what they need, but it is never enough. The additional 45 minutes a day in a small group with the ESL teachers isn’t enough. They aren’t getting the practice, repetition, modeling, and help they need at home to improve. I believe that is a big factor in their learning.

Future Direction

If I find a better and more thorough alternate assessment that shows students’ comprehension ability better, I will definitely include it in my instruction next year. I also think there is never going to be a perfect assessment; it depends on the background knowledge and vocabulary the student possesses. I would also like to spend more than one week on a single selection. Unfortunately, the curriculum is so large that, in order to teach all objectives before the End of Grade Test, we do not have time to spend more than one week on a single story. I feel that really shortchanges our students in their learning.
I know I could pull more into each selection and make it much more applicable to them if I had more time. I might also focus less on achievement levels, because those didn’t change much, and more on self-efficacy. That might be more interesting to track with each story.

References

Francis, D., Snow, C., August, D., Carlson, C., Miller, J., & Iglesias, A. (2006, July). Measures of reading comprehension: A latent variable analysis of the Diagnostic Assessment of Reading Comprehension. Scientific Studies of Reading, 10(3), 301-322. Retrieved March 19, 2009. doi:10.1207/s1532799xssr1003_6

Lenski, S., Ehlers-Zavala, F., Daniel, M., & Sun-Irminger, X. (2006, September). Assessing English-language learners in mainstream classrooms. Reading Teacher, 60(1), 24-34. Retrieved February 20, 2009. doi:10.1598/RT.60.1.3

Martinez, R., Aricak, O., & Jewell, J. (2008, December). Influence of reading attitude on reading achievement: A test of the temporal-interaction model. Psychology in the Schools, 45(10), 1010-1023. Retrieved February 20, 2009, from Education Research Complete database.

Parker, A., & Paradis, E. (1986, May). Attitude development toward reading in grades one through six. Journal of Educational Research, 79(5). Retrieved March 19, 2009, from Education Research Complete database.

Preciado, J., Horner, R., & Baker, S. (2009, February). Using a function-based approach to decrease problem behaviors and increase academic engagement for Latino English language learners. Journal of Special Education, 42(4), 227-240. Retrieved March 19, 2009, from Education Research Complete database.

Toohey, K. (2007, December). Are the lights coming on? How can we tell? English language learners and literacy assessment. Canadian Modern Language Review, 64(2), 249-268. Retrieved March 19, 2009, from Education Research Complete database.