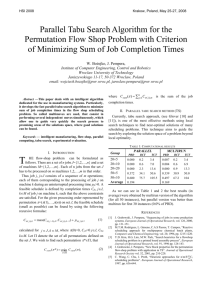

Evaluation of a Hybrid Genetic Tabu Search framework on Job Shop

advertisement

1

Evaluation of a Hybrid Genetic Tabu Search framework on Job

Shop Scheduling Benchmark Problems

S Meeran and M S Morshed1

School of Management, University of Bath, Bath, BA2 7AY, UK

E-mail: s.meeran@bath.ac.uk, Phone: 00 44 1225 383954

1

Mechanical and Manufacturing Engineering, University of Birmingham, Birmingham, B15 2TT, UK

Abstract: It has been well established that to find an optimal or near-optimal solution to job shop

scheduling problems (JSSP), which are NP-hard, one needs to harness different features of many

techniques, such as genetic algorithms (GA) and tabu search (TS). In this paper, we report usage of

such a framework which exploits the diversified global search and the intensified local search

capabilities of GA and TS respectively. The system takes its input directly from the process

information in contrast to having a problem-specific input format, making it versatile in dealing with

different JSSP. This framework has been successfully implemented to solve industrial job shop

scheduling problems (Meeran and Morshed 2011). In this paper, we evaluate its suitability by

applying it on a set of well-known job shop benchmark problems. The results have been variable. The

system did find optimal solutions for moderately hard benchmark problems (40 out of 43 problems

tested). This performance is similar to, and in some cases better than, comparable systems, which also

establishes the versatility of the system. However, for the harder benchmark problems such as those

proposed by Taillard (1993), it had difficulty in finding a new improved solution. We analyse the

possible reasons for such a performance.

Keywords: Makespan, Genetic Algorithms, Tabu Search, Job Shop Scheduling, Heuristics

1. INTRODUCTION

The importance of as well as the requirement for scheduling in human activities are self-evident. In

production and service organisations, scheduling is one of the vital and critical elements in making

these organisations efficient and effective in the usage of scarce resources. When every job going

through a production or service unit is unique in its processing requirement, the scheduling is known

2

as the job shop scheduling problem (JSSP). Many organisations will be able to benefit from a solution

to the JSSP. However, a generic solution to the JSSP has eluded researchers until now, and the

problem is considered to be an NP-hard problem. Over the second half of the last century, many

traditional techniques coming from the traditional Operations Research community, ranging from

simple dispatching rules to complex and sophisticated parallel branch and bound algorithms and

bottleneck-based heuristics, have been employed to find a solution to the JSSP. In spite of such a

concerted effort, an efficient generic solution could not be found. However, in the recent past,

researchers from different fields such as biology, genetics and computing have proposed many new

algorithms and methodologies, such as neural networks and evolutionary computation, increasing the

possibility of finding a generic solution to the JSSP.

Although the conditions associated with the classical deterministic job shop scheduling

problem are well known to most readers, for completeness we reproduce the salient elements of the

problem as follows: The problem consists of a finite set J of n jobs {J i }in1 which need to be processed

m

on a finite set M of m machines{M k } k 1 . Each job Ji consists of a unique chain of mi operations that

must be processed on m machines in a predetermined order, a requirement called a precedence

constraint. The problem is further characterised by capacity constraints (otherwise called disjunctive

constraints) which stipulate that at any one time, a machine can process only one operation of any job.

The objective of the scheduler is to determine starting times for each operation in order to minimise

the makespan while satisfying all the precedence and capacity constraints.

The dimensionality of each problem instance is specified as nm, and each job has to be

processed exactly once on each machine. In a more general statement of the classical job-shop

problem, machine repetitions (or machine absence) are allowed in the given order of the job Ji J,

and thus mi may be greater (or smaller) than m. The main focus of this work relates to the case of

mi m-non pre-emptive operations per job Ji, unless otherwise stated.

The solution methods proposed to solve the JSSP could be broadly categorised into two types:

exact (sometimes called exhaustive methods or optimisation methods) and approximation methods,

although there have been some attempts to combine both exact and approximation methods. Although

the exact methods aim to find an optimal solution, invariably they consume a substantial amount of

time as they explore the solution space systematically, and hence their practical use has been

somewhat limited for large real-life problems. This encouraged the growth of the use of

approximation methods to find the solution to the JSSP. Although the approximation methods, as the

name indicates, do not guarantee an optimal solution, they do provide an approximate but useful

solution in a practicable time. The approximations methods have been generally categorised into four

groups: priority dispatch rules (Baykasoglu and Ozbakir 2010), bottleneck-based heuristics (Adams et

al. 1988, Zhang and Wu 2008), artificial intelligence (Jain and Meeran 1998, Weckman et al. 2008)

and local heuristic search methods, with many sub-techniques within them (Jain and Meeran 1999).

3

Many attempts have been made to find solutions to the JSSP using these sub-techniques, with

varying success. Also, some notable innovative improvements in these techniques, such as beam

search, simulated annealing (SA) and shifting bottleneck, have been implemented, resulting in

substantial enhancement of the quality of the solutions. However, the deviations from optimality are

found to be still high, and most of these methods require high computing effort to achieve their best

results. Hence the search for a solution continues! Out of the four sub-techniques of the

approximation methods mentioned above, the local heuristic search method attracted a healthy interest

among researchers because of its intuitiveness, as well as its ability in finding a good approximate

solution and sometimes optimal solutions reasonably quickly. In the recent past, there have been a

substantial number of publications in the area of applying the heuristic method to the JSSP. Although

there have been successes, the narrowness of the strength of the individual heuristic techniques used

has affected progress in finding a solution that is not problem specific. The natural progression, as is

to be expected, is trying to combine multiple techniques, exploiting different strengths of different

techniques. This paper uses one such framework, which combines GA and TS techniques in

exploiting their global diversification and local intensification strengths respectively. The GA and TS

techniques are fine-tuned to maximise the chance of finding an optimal solution. The rest of the paper

is structured as follows: in the following section, we will discuss the hybrid solution methods

available for solving the makespan JSSP in the literature and explore other existing GA and TS hybrid

systems. In section 3, we will describe the hybrid GA and TS framework that we have used, followed

by a section in which we assess the suitability of this framework for the job shop scheduling

benchmark problems by analysing the results obtained. We also compare the system performance

with other systems including recent ones which exist in the literature. In the last section, we draw a

conclusion from the tests.

2. LITERATURE REVIEW

Within the local search category, many methods have been proposed by various researchers. Satake et

al. (1999) proposed algorithms using simulated annealing (SA) to solve the JSSP, whereas Della

Corce et al (1995), Dorndorf and Pesch (1995), Mattfeld (1996), Park et al. (2003), Goncalves et al.

(2005), Jia et al. (2011) and Pérez et al. (2012) used genetic algorithms (GA) for the same purpose.

Glover (1989), Dell’Amico and Trubian (1993), Taillard (1994), Nowicki and Smutnicki (1996),

Thomsen (1997), Pezzella and Merelli (2000) and Eswarmurthy and Tamilarasi (2009) applied tabu

search (TS) to obtain solutions to JSSP. Techniques such as ant optimisation and genetic local search

(GLS) (Yamada and Nakano 1996, Zhou et al 2009), path re-linking (PR) (Nowicki and Smutnicki

2005), and Greedy Randomised Adaptive Search Procedure (GRASP) (Fernandes and Lourenco

4

2007) have been also tried. Out of these local search methods, tabu search, by virtue of its intensive

local searching capability, has provided impressive results by generating good schedules within

reasonable computing time. However, tabu search, like many other local search methods, has

oneoverwhelming strength, namely intensification, and does not have sufficient capability to explore

the search space globally. Also, TS requires fine-tuning of many parameters to suit each problem,

which is difficult to achieve. Similarly, all other heuristic techniques, as mentioned earlier, are unable

to solve the JSSP satisfactorily as individual techniques, although considerable progress has been

made in recent years. Another important issue is the fact that most of the heuristic methods proposed

do not guarantee a particular performance level for any arbitrary problem. Many in the scheduling

research community therefore postulated that if progress has to be made in breaking the current

barriers in attaining solutions to job shop scheduling problems, merging unique strengths of different

approaches is a candidate worthy of consideration.

One of the popular solution models considered by many researchers has been a hybrid

construction through amalgamating several methods with an over-arching strategy (known as metaheuristics) to guide a myopic local heuristic to the global optimum (Yu and Liang 2001 (NN&GA),

Wang and Zheng 2001 (GA&SA), Park et al. 2003 (parallel GA (PGA), Goncalves et al. 2005

(GA&PDR), Zhang and Gen 2009 (Hybrid Swarm optimisation), Seo and Kim 2010 (Ant colony

optimisation with parametric search), Azizi et al. 2010 (SA with memory), Nasiri and Kianfar 2012a

(GES/TS/PR) (GES is a variant of SA)). Although many of the authors reported improved

performance through hybridisation, it has been found to suffer from the requirement of substantial

computing time; also, simple hybridisation of the techniques in their raw form as such, has not

performed well in many instances. Further, formal methods have not been developed in order to

identify effective ways of finding suitable techniques as well as combining them; hence there is a

need for exploring various combinations of search techniques along with implementing possible

improvements in the individual search methods themselves. The framework used here is one such

attempt, combining the search techniques GA and TS in addition to using intelligent sub-techniques

such as genetic operators, restricted neighbourhood and innovative initial solutions.

There have been only a few systems in the literature that use the combination of GA and TS

to provide a solution to the JSSP (Meeran and Morshed 2007, Tamilselvan and Balasubramanie 2009,

Gonzalez et al. 2009, Chiu et al. 2007, Zhang et al. 2010). Tamilselvan and Balasubramanie (2009)

have used GA as the base search mechanism and TS to improve their search. They have demonstrated

the effectiveness of the combination of GA and TS, which is called GTA, against standalone GA and

TS, using only a limited number of example problems that they have devised. There is no evidence of

their system being tested on established benchmark problems or on real-life practical problems.

Gonzalez et al. (2009) presented a successful hybrid GA and TS system for the job shop scheduling

5

problem with set-up times. Obviously, they have tested their system on a different set of benchmark

problems. Most other systems (Chiu et al. 2007, Zhang et al. 2008b) have shown good progress in

solving a specific set of benchmark problems, albeit in some cases the benchmark problems used are

not from the established sets such as ORB (Applegate and Cook 1991), FT (Fisher and Thompson

1963), LA (Lawrence 1984) and ABZ (Adams et al. 1988). Also, there is no evidence from the

publications that most of these systems work well with real-life practical problems, as well as solving

standard JSSP benchmark problems. Hence, the suitability of most of the systems as generic solution

methods for solving the job shop problem is questionable.

The hybrid system used in this paper, no doubt as in the case of the small number of systems

mentioned above, exploits the complementary strengths of GA and TS. However, the framework

presented here has been tested for its versatility and suitability for solving a variety of scheduling

problems, such as real-life problems and benchmark problems. In this paper, we present the results

when the system is used to solve a large number of benchmark problems, including instances from

FT, LA, ABZ and ORB. Furthermore, the system was tested on some TA (Taillard 1993) problems to

evaluate how it performs in dealing with very hard instances.

3. SYSTEM DESCRIPTION

3.1. Hybrid Framework

In the model used here (shown in Figure 1), during the hybrid search process, the system starts with a

set of initial solutions, on each of which TS performs a local search to improve them. Then GA uses

the TS improved solutions to continue with parallel evolution. Within TS, a tabu list (more details on

this are given later) is used to prevent re-visiting the solutions previously explored. Such a hybrid

system combining both GA and TS, using their complementary strengths of parallel search and

intensified search, has a good chance of providing a reasonable solution to global combinatorial

optimisation problems such as the JSSP.

An initial set of solutions necessary can be generated by different methods, such as priority

dispatch rules (PDR), random generation and mid-cut heuristic, details of which are as follows. From

the input processing data and precedence relations, a sequential chain of operations is created. This

string is cut between the first and the last genes at a random position, creating two sub-strings of

operations. The first sub-string is moved to the back of the second sub-string. This procedure

effectively maintains the total number of operations in a string, and creates a new solution string. This

process is continued until each job is at the first position of a solution string. Thus the total number of

solution strings created using this method equals the total number of jobs. For a 44 problem, there

will be four strings that are produced by this heuristics.

6

Let us elaborate this heuristic, using an example of a 44 problem. If all the jobs in this problem

had the same operations sequence of being machined in Machine 1, Machine 2, Machine 3 and

Machine 4 in this specified order, then the initial string would be [1,2,3,4,1,2,3,4,1,2,3,4,1,2,3,4]. To

apply the mid-cut heuristic on this string, what we need to do first is to pick a random position in this

string. Let us say our random position is 3rd position. The initial string is cut at this position to make it

into two substrings, namely:

substring1 = [1, 2,

substring2 = [

] and

3,4,1,2,3,4,1,2,3,4,1,2,3,4].

The substring1 (i.e. [1,2])is moved to the back of substring2, creating a new string,

[3,4,1,2,3,4,1,2,3,4,1,2,3,4,1,2]. (operations 1,2 are shown in italics to indicate these are added to

substring2). The above procedure of picking a random position is repeated and checked to see

whether the first gene after the cut is a 2 or 4. If not another random position is picked until this

condition (i.e. getting a 2 or 4) is achieved. (Probabilistically it should take only two attempts to get a

2 or 4). As done above, the second piece of the string (i.e. substring2) resulting from the cut with a 2

(or 4) as the first gene is moved to the front and the first piece (i.e. substring1) is moved to the back.

By this procedure we get another two strings starting with a 2 and a 4 respectively. In this process any

string which has a job that is already at the head of another string is rejected and hence the number

of strings produced is limited to the total number of jobs. These strings that are produced by midcut heuristic as initial solutions have been found to be better than the initial solutions created by the

random method, or by priority dispatch rules. More details can be found in Morshed (2006).

3.2. Details of GA and TS

3.2.1. Chromosome Representation

It is generally accepted that the capability of GA to solve a problem depends on having an appropriate

chromosome to represent a solution (i.e. a string of operations in the scheduling problem) (Cheng et

al. 1996). It is then essential to ensure that all chromosomes, which are generated during the

evolutionary process, represent feasible solutions. In the classical JSSP, if one considers there are n

jobs to be processed on m machines, then there are a total of n × m operations to be scheduled. One

needs a chromosome [gene1, gene2, gene3,…, genenxm] to represent such a schedule, which could be

an arbitrary list of n × m genes (operations in operation-based representation) to start with. This

chromosome could be translated into a schedule of any format, such as a Gantt chart, using certain

heuristics. The genetic operators, such as crossover and mutation, could operate on this solution string

to evolve better solutions and eventually to find the optimum schedule. A good crossover operator

should preserve job sequence characteristics of the parent’s string in the next generation. If the good

7

features of different strings are properly combined, the offspring strings generated may have even

better features.

There are two types of sequences which are relevant in the discussion of the job shop

scheduling problem: first is the process sequences of jobs, dictated by the technical requirements of

the manufacturing processes required to complete the jobs (i.e. the precedence constraints); second is

the sequences of operations to be loaded on the machines, which needs to be determined by a solution

method. In manufacturing domain, a synchronisation of process planning and scheduling has always

been considered a requirement for production efficiency (Nasr and Elsayed 1990). In our context, the

encoding should be able to deal with both these sequences, and the information with respect to both

has to be preserved simultaneously. In such a case, many crossover methods dealing with literal

permutation encodings (i.e. all possible permutations, many of them leading to infeasible solutions)

cannot deliver that. A chromosome could be either infeasible, in the sense that some precedence

constraints might be violated, or illegal in the sense that the same operations might be duplicated

while others were omitted in the resulting chromosomes.

The chromosome representation for the JSSP used here, based on Gen et al. (1994) and Cheng

et al. (1996), encodes a schedule (solution) as an ordered sequence of job/operations, where each gene

stands for one operation. For an n job and m machine problem, a chromosome will have ‘n × m’

genes. Each job appears in the chromosome exactly m times, and each repeating gene does not

indicate a specific operation of a job, but the operation of a job it represents is defined by the relative

position the gene occupies in the string. For an illustrative example relating to a 4 jobs × 4 machines

problem (shown in Table 1), a typical chromosome could be in the form [4 3 3

2 2

1 4

4

3 4 3 1 2

1 1 2]. In this chromosome, the gene ‘1’ stands for operations of Job 1 (J1), showing

it has four operations. The identity of these operations corresponds to the relative positions of the

genes ‘1’, i.e. their order of occurrence in the chromosome. Hence, any permutation of the

chromosome always yields a feasible schedule. In short, the genes are represented generically and not

anchored to a particular order or position. Trivially, the relative order between them is always valid,

whatever actual positions the genes occupy in the chromosome. The main concern here is to find the

correspondence between the traditional representation of schedules and the chromosomes with which

we would like to represent the schedule, so that we can use the GA to evolve these chromosomes to

find better schedules. Put simply, the machine requirements for the operations represented by the

genes in the chromosome and their processing times have to be linked.

The information on machine requirements, as well as the processing time for different

operations, could be extracted from the input data given in Table 1. These machine requirements are

gathered in a list and can be linked to the operations, i.e. genes in the chromosome. Such a list could

be [3 1 3 2 2 4 4 1 3 1 4 2 1 3 4 2 ] (which is called a machine list). This list corresponds to

8

the operations, one to one, in the current chromosome above, i.e. [4 3 3

1 4

4

3 4

3 1 2

2 2

1 1 2]. These two lists are linked by a mechanism of a shadow chromosome, which operates

in the background. Such a shadow Machine chromosome for this example is [43 31 33 42 32 44 34

11 23 21 24 12 41 13 14 22], as shown in Figure 2. The subscripts identify the machines in which the

operations are processed. This shadow chromosome is not operated by the genetic operators, but used

as ready reference for identifying the machines associated with the genes (i.e. operations) in the

chromosome. The first member, 43, in the shadow chromosome represents the first operation of Job 4

(J4), which is operated by Machine 3.

Let us see how to formulate a schedule (and represent the same in a format such as a Gantt

chart), given a chromosome. The first operation (gene) of the chromosome is scheduled first, and then

the second operation in the chromosome is considered, and the process continues. Each operation

under consideration is allocated to the earliest available time on the machine which processes this

operation. The process is repeated until all operations in the chromosome are scheduled. It can be seen

that the actual schedule generated in this manner is guaranteed to be an active schedule. (Active

schedules are the ones in which no job can start earlier without delaying other jobs). From the

machine list we can now deduce the sequence of operations for each machine. This is done by simply

scanning the machine list for a particular machine. For example, it can be seen that the operation

sequence (job processing order) for Machine 1 is [J3, J1, J2, J4] (refer to Figure 2). A full schedule

can be made by supplementing the job sequences with processing times taken from the input data.

3.2.2 Genetic Operators

The construction of the relative order crossover operator for JSSP, used here, can be described as

follows: at first, two parents are chosen randomly from the population pool and then a certain number

of jobs, q (when q = 2, the jobs could be Job 1 and Job 3) in these parents are selected randomly (Note

the ‘q’ is a random integer number which is greater than one and less than the total number of jobs).

These q selected jobs correspond to the q × m operations in each parent. A string (string1) is produced

from the first parent by picking out these operations with their order unchanged, while filling the

positions belonging to other jobs (in this case, Job 2 and Job 4) with holes (E). Then the string is

rotated forward or backward by r positions (where r is a random integer number), maintaining the

relative positions of individual genes. Another string (string2) is produced by selecting the same jobs

(i.e. Job 1 and Job 3) in the second parent and the positions belonging to other jobs are filled with

holes (E), as in the case of the first parent. This string is not rotated. The holes (E) in the first parent

are filled with the operations of the ‘not-selected’ jobs of the second parent, SubString2, while

maintaining the relative order of the not-selected operations. One child is produced this way.

Similarly, the other child can be produced with the selected operations of the second parent and the

9

non-selected operations of the first. The following is a step-by-step description of how the cross-over

works, and is shown pictorially in Figure 3 (i).

Let us start with two parents with the following solution strings

parent1=[2,2,3,4,3,1,4,3,1,1,2,2,1,4,3,4]

parent2=[1,1,4,3,1,3,2,4,4,2,1,2,2,3,4,3]

As mentioned earlier, these chromosomes have shadow chromosomes which show the machine

requirements for the operations. However, to avoid cluttering, they are omitted from the discussion

below. Incidentally, both the parents have a makespan of 28.

Next, we have to select ‘q’ jobs randomly. For example, in this case, J1 and J3 are selected in

parent1 : (which is shown below in bold in the chromosome)

parent1=[2,2,3,4,3,1,4,3,1,1,2,2,1,4,3,4].

The ‘not-selected’ operations are filled with ‘E’, and hence from parent1 the following string

(string1) is produced.

String1=[E,E,3,E,3,1,E,3,1,1,E,E,1,E,3,E]

Similarly, string2 shown below is produced from parent2.

parent2=[1,1,4,3,1,3,2,4,4,2,1,2,2,3,4,3]

string2 =[1,1,E,3,1,3,E,E,E,E,1,E,E,3,E,3]

Then two sub-strings are produced from both parents respectively, having only the notselected operations of their respective parents (SubString1 [2,2,4,4,2,2,4,4] from parent1 and

SubString2 [4,2,4,4,2,2,2,4] from parent2).

The string1 is rotated one position forward (in order to have more diversity) and string2 is

rotated to ‘zero’ positions (i.e. left as such) to make string3 and string4 respectively:

string3=[ E,3,E,3,1,E,3,1,1,E,E,1,E,3,E,E]

string4 =[1,1,E,3,1,3,E,E,E,E,1,E,E,3,E,3]

Finally, the ‘child’ strings are produced by filling the holes of the strings as follows: (i)

string3 with SubString2 [4,2,4,4,2,2,2,4] to produce child1 (with a Cmax of 23, which is an improvement

due to the crossover operation) (ii) string4 with SubString1 [2,2,4,4,2,2,4,4] (i.e. the not-selected

operations from parent1) to produce child2 (with a Cmax of 25) .

child1=[ 4,3,2,3,1,4,3,1,1,4,2,1,2,3,2,4]

child2=[1,1,2,3,1,3,2,4,4,2,1,2,4,3,4,3].

Just to demonstrate how the machine association works with these chromosomes, a shadow

machine chromosome for child2 is extracted as follows: Referring to Table 1, the four operations of

Job 1 are done in Machines 1,2,3,4 respectively and hence the four ‘1’s in child2 chromosome will be

10

associated with machines 1,2,3,4 in that sequence, the four ‘2’s representing Job 2 are associated with

machines 3,1,2,4 in that sequence and machines required for operations of other jobs are known

similarly. Hence the first two operations representing Job 1’s first two operations need Machines 1

and 2. The third operation in the chromosome stands for first operation of Job 2 which needs to be

machined by Machine 3 and hence the third gene of the machine chromosome would be 3. Continuing

this procedure will result in a shadow machine chromosome of [1 2 3 1 3 3 1 3 2 2 4 4 4 2 1

4 ]. This can be incorporated with the child2 chromosome to get the corresponding machine index of

child2 as follows:

child 2 11 12

23

31 13

33

21

43

42

22

14

24

44

32

41

34 . The machine

requirements of the operations of a job are not changed by the genetic operators as all the operations

of a job are denoted by the same gene and a particular gene is not anchored to its position. The gene’s

relative position among its fellow genes in a chromosome is simply its position in the operation

sequence. In the above example, the first ‘1’ in child2 chromosome is, as always, the first operation of

Job 1, which is to be machined by Machine 1.

The second genetic operator, mutation, can help GA to get a better solution in a faster time. In

this model, relocation (technically called reinsertion) is used as a key mechanism for mutation.

Operations of a particular job that is chosen randomly are shifted to the left or to the right of the

string. Hence the mutation can introduce diversity without disturbing the sequence of a job’s

operations. When applying mutation, one has to be aware that if the diversity of the population is not

sufficiently maintained, early convergence could occur and the crossover cannot work well.

Figure 3(ii) gives a pictorial representation of the mutation process.

3.2.3. Working of GA

Every solution (chromosome) was evaluated using makespan as the fitness function.

It follows that the lower the value of the string (in terms of makespan), the higher the fitness

of the string. We have considered a single value for each genetic string where normalisation is

not required. The initial population required was generated using various methods such as

random method, mid-cut heuristic and PDRs, as explained earlier. At the start of the GA-TS

search process, these solutions are improved through tabu search (TS). We apply the elitist

strategy for population selection; in every generation a certain number (which is dictated by

the parameter setting for the particular problem) of the strings with the highest fitness is

directly input into the population of the subsequent generation (which we call elite solutions),

while all strings in the whole of the population (i.e. elite and non-elite solutions) are

considered for applying genetic operations according to the specified cross-over and mutation

rates. The cross-over is enacted by choosing a pair of individuals from the whole of the

11

population by random sampling without replacement. By applying ordered crossover to the

chosen pair of individuals, a pair of children is generated. This process is repeated several

times depending on the crossover rate (pc) to produce several children. The mutation operator

is applied on a certain number (depending on mutation rate, pm) of chromosomes which have

not been operated by the crossover operator. The resulting strings from these GA operations,

along with the original strings, are ranked and an appropriate number of strings (equal to the

number of non-elite solutions), after discarding the non-chosen strings, is passed to the next

generation. These solutions and the elite solutions that have been already ear-marked for next

generation together make the population pool for the next generation. These solutions are

operated by tabu search to improve their quality locally. If an improved solution is obtained

by the local search, the resulting string replaces the original one and this GA-TS cycle process

continues for the specified number of generations. As can be seen above, in general the

population pool resulting from the GA is ranked for fitness to select the individuals for the

next generation. However, individuals with the lower rank may be selected because it is

prohibited for more than two identical individuals to exist at the same time in order to

maintain a diversity of population. Further, in order to increase the diversity, five randomly

generated new solution strings are also introduced to the new generation, replacing five

randomly selected non-elite strings. This model of information passing between generations is

identified as Lamarckian learning.

3.2.4. Neighbourhood structure for TS

The other aspect of the search mechanism is the local improvement procedure used in the

system based on Tabu Search (TS). In essence, TS is a simple deterministic search procedure that

transcends local optimality by storing a search history in its memory. In TS, a function (called a

move) that transforms a solution string into another solution string is defined. Hence for any solution

string, S, a subset of moves applicable will form the neighbourhood of S.

In order to achieve the best neighbourhood, all the strengths of the existing neighbourhood

structures were first investigated and, using that knowledge, more innovative ways of designing a

neighbourhood structure were explored. A good neighbourhood structure needs (i) to be suitable for

the data representation used in other parts of the system (i.e. capable of using the chromosome

representation of the schedules used in the GA side) and (ii) to have as small as possible a

neighbourhood, while not compromising the possibility of wide exploration of the search space. Ten

Eikelder et al. (1997) indicates that the time complexity of a search depends on the size of the

neighbourhood and the complexity involved in evaluating the cost of the moves. Accordingly,

different types of existing critical path based neighbourhood structures, such as the one proposed by

Nowicki and Smutnicki (1996), are identified. (These neighbourhood structures are formed by

12

swapping the operations in the critical path.) It has been widely accepted that Nowicki and Smutnicki

(1996)’s neighbourhood is the most efficient (i.e. most restricted) one, for example, having only two

improving moves in the solution shown for FT06 in Figure 4. (FT06 is one of the original benchmark

problems proposed by Fisher and Thomson (1963), which has always had a special place in

scheduling literature. Figure 4 illustrates the Nowicki and Smutnicki (1996)’s neighbourhood of

moves for FT06. One of the possible critical paths (CP) is identified as [1,3,5,8,14,17,28,30,31,33] as

given in Figure 4.)

In the system presented here, the strategy of Nowicki and Smutnicki’s neighbourhood

generation is adapted to suit the schedule representation, which is in the form of chromosomes, as

described earlier. The critical operations of a solution string, containing all operations to be scheduled,

needs to be identified by using simple earliest and latest operation completion times. Once the critical

path of operations is identified for any string, the system needs to find all critical blocks (i.e. critical

operations bunched together without any idle time on the same machine. If two critical operations are

on the same machine and the finish time of one operation is the same as the start time of the second

operation, then they could form a critical block). The move function is applied on relevant operations

of the critical blocks. For example, in a two critical blocks situation, only two moves are required,

namely at the last two operations of the first block and the first two operations of the second block

(Nowicki and Smutnicki 1996). Then, as in the case of generic TS, the new solution string, after a

move, will create another neighbour, which will be a new point on the search space. Every time the

move function gets a new improved solution string, this will be kept in the memory for further

exploration until after a specified number of iterations (i.e. tabu tenure), unless an optimal solution

string is found in the meantime. After the tabu tenure has finished, the stored string is used to explore

its neighbourhood for better solutions, using applicable moves. The aspiration criterion required in

traditional TS has been incorporated by the virtue of hybridisation of GA and TS. In the hybrid

framework, it is worth noting that TS is directly applied on every solution string of the solution pool

resulting from GA side of the system (or it could be said TS is applied on every solution going into

GA as the system operates GA-TS cycle) to improve its quality.

Although the above-described neighbourhood structure is most efficient, we attempted to explore

other neighbourhood generations directly from the solution strings (chromosomes). A few schemes

explored are (i) swapping the adjacent genes, (ii) in contrast to the previous scheme, swapping the

genes which are not adjacent but at a distance (distance could be any arbitrary length of more than

one, measured in terms of number of genes) and (iii) permutation swapping of multiple genes. All

these techniques helped to explore the search space with the aim of getting the optimum solution for

the JSSP quickly. Some details of the generation of the neighbourhood based on permutation

swapping of multiple genes are given in the following paragraph.

13

This neighbourhood function is comparable to the neighbourhood search based on mutation,

but linking different job numbers. In this, p number of different non-identical genes, which represent

different jobs, are selected. These genes are replaced, on the same places in the chromosome, by

different permutations of these genes. For example, if we have chosen three genes: 3, 5, and 1, the

permutations of these genes could be 3,1,5; 1,3,5; 1,5,3; 5,3,1; 5,1,3. Any move in this neighbourhood

will create a valid and legal solution for further exploration, as in the case of GA (i.e. any of these

permutations put in the original positions of 3, 5 and 1 will produce a valid chromosome). For a

problem such as the FT06 problem, the ‘5 move’ neighbourhood produced by such permutation

swapping is shown below.

Given String “1,4,1,3,3,2,2,3,5,6,1,4,6,2,6,1,4,5,3,3,4,5,2,3,6,1,4,2,5,6,4,2,1,6,5,5”(3,5,1)

String1 “1,4,1,1,3,2,2,3,5,6,1,4,6,2,6,1,4,5,3,3,4,5,2,3,6,1,4,2,5,6,4,2,3,6,5,5”(1,5,3)

String2 “1,4,1,3,3,2,2,3,5,6,1,4,6,2,6,1,4,1,3,3,4,5,2,3,6,1,4,2,5,6,4,2,5,6,5,5”(3,1,5)

String3 “1,4,1,1,3,2,2,3,5,6,1,4,6,2,6,1,4,3,3,3,4,5,2,3,6,1,4,2,5,6,4,2,5,6,5,5”(1,3,5)

String4 “1,4,1,5,3,2,2,3,5,6,1,4,6,2,6,1,4,1,3,3,4,5,2,3,6,1,4,2,5,6,4,2,3,6,5,5”(5,1,3)

String5 “1,4,1,5,3,2,2,3,5,6,1,4,6,2,6,1,4,3,3,3,4,5,2,3,6,1,4,2,5,6,4,2,1,6,5,5”(5,3,1)

3.2.5. System Parameters:

A number of combinations of parameter settings are experimented with in order to choose the best

combination of parameters for a given problem. The values of search parameters depended on

problem instance (in terms of size and complexity), representation, and interrelation between

parameters. As a consequence, the parameter values varied over a range for the group of problems

that we have tested. For example, the population size for a simple 4x4 problem is chosen to be 30

whereas the population size for the problem ABZ8 problem we used 200. However for most cases the

following parameters worked well: a population size of 200 (except for small problems), 300

generations (although in most cases after 90 generations there was no performance improvement), a

cross over rate of 0.80, a mutation rate of 0.10 and 5 elite solutions that are carried forward to the next

generation. For the tabu list length we did use a range varying between 6 and 36. Larger problems

(with number of jobs more than 8) had a tabu list length of 36 and for other problems the tabu list

length has been fixed equal to the number of jobs. All of this information is summarised in Table 2 in

terms of range of values for different parameters. In general it can be said, smaller problems adopted

the lower end of the values in the range and larger problems took the higher end of the values in the

range. Table 2 also shows the parameters we have chosen for the problem FT06.

4. RESULTS AND DISCUSSION

The main focus of this paper has been to evaluate how the hybrid GA and TS framework used in this

paper performs on job shop scheduling benchmark problems. We have tested the system progressively

on harder problems, starting from easy-to-solve problems. In the first set, we have tested this system

14

on 43 well known traditional ORB, FT, LA and ABZ benchmark problems. These problems are

chosen to cover a range of simple and moderately hard problems. Most of the problems for testing the

system were chosen taking into consideration what other researchers have used in their systems, in

order to facilitate comparison.

Although many of these problems are ‘square’ problems, which are generally known to be

harder than rectangular problems to solve, they are not considered to be very hard (just moderately

hard) by the scheduling research community. The main thrust of the test, at this stage, was to see

whether the proposed system could solve these problems optimally. The test showed that the system

has done well in finding optimum solutions for 40 of these benchmark problems, while achieving an

average mean relative error (MRE) of 0.08%. Twenty-five runs with a population size of 200 are

conducted to achieve this result in 90 generations on the GA side of the system. On the TS side, a tabu

list length varying between 6 and 36 and a maximum number of iterations of 100 are used. Table 3

shows the makespan achieved by the proposed system for the 43 JSSP benchmark problems at

different generations of GA, culminating in producing 40 optimal makespans at the 90th generation.

We have implemented the system in VB (Visual Basic) and achieved a computational time

which varies between 0.10 sec and 60 min (quite a wide range!) for each generation for this set of

benchmark problems solved on a Pentium III 128MB 1GHz machine. The time for running small and

simple problems, such as LA14 and LA15, has been at the lower end of this range, whereas the timing

for bigger and complex problems, such as ABZ problems, has been very large (in the range of days)

to get to the optimal solution. Some problems such as ABZ7 did not attain the optimum even after

three days of running time. Even though the computer used is slow and basic and the coding language

used is simple and not the fastest, it is evident that these may not be the only reasons for not finding

optimal solutions for harder problems.

We attempted to compare the performance of the system presented here with that of similar

systems which exist in the literature. We faced a few problems in doing so. We could not find many

comparable systems which use GA and TS on the same benchmark problems. Hence the comparison

is widened to include the best GA systems and the best TS systems, as well as hybrid systems which

use TS and GA which had makespan as their objective function. `

The results suggest that the proposed system has been very effective in finding the optimum

solutions per se in comparison to similar systems that exist in the literature. Table 4 and Table 5 give

comparisons of the proposed system’s performance against older systems. Table 6 gives the

comparison with newer systems. In Table 4, the results obtained by the best GA based systems for

eight of these benchmark problems are given, and are compared with the results obtained by the

15

proposed model. As can be seen from Table 4, the proposed model has been able to achieve optimal

solutions for these eight problems, whereas other models (Pesch 1993, Della Croce et al. 1995,

Dorndorf and Pesch 1995, Mattfield 1996 and Yamada and Nakano 1996) did not report optimal

solutions for these eight cases.

In Table 5, results of the various Tabu Search techniques attained for several benchmark

problems are given and are compared with results of the proposed model. The proposed model has

been able to achieve optimal solutions for most of these 16 problems, whereas Dell’Amico and

Trubian (1993), Barnes and Chambers (1995), Nowicki and Smutnicki (1996) and Thomsen (1997)

did not report as many optimal solutions. It is evident from Table 4 and Table 5 that the proposed

model performs well in comparison with the best of both older GA and TS models.

With regard to the category of hybrid systems, the proposed system is compared with the

hybrid TS systems of Pezzella and Merelli (2000), which obtained an average MRE of 2.56%, and

with the GA hybrid system of Goncalves et al. (2005), which got an average MRE of 0.39% by

solving 31 out of 43 (not the same ones that the proposed system solved) optimally, while the

proposed system got an average MRE of only 0.08%. In summary, for these benchmark problems,

although the framework proposed here has done well in minimising the makespan, as far as the

computer timing is concerned, it has not done well (problems such as ABZ7 took an unreasonably

long time to find a solution).

The comparison was continued with the recent systems which use GA or TS or GA and TS to

minimise the makespan. We have found that in terms of obtaining optimal solutions, the system

presented here has done as well as, or better than, most systems reported in the literature (Hasan et al.

2009, Velmurugan and Selladurai 2007, Sevkli and Aydin 2006, Zhang et al. 2008b). The majority of

these systems appear to be performing at a similar level, at least in terms of the number and

percentage of optimal solutions that they have achieved, as highlighted in Table 6. However, it has to

be acknowledged that the percentage of optimal solutions that various systems have obtained does not

reflect the overall performance on all the problems these systems have solved. It is more pronounced

in the percentage that we have calculated for Sevkli and Aydin (2006). Although they attempted

solutions for many of the problems that we have attempted, they have given equivalent measures to

MRE for just six problems, namely ABZ7, ABZ8, ABZ9, LA21, LA24 and LA25 that are in our list.

In contrast to other researchers, they also did not provide the Cmax value they obtained explicitly for

any of the problems. We have observed in all of the above systems that the very hard instances, such

as TA problems, are not tested and we also noted that all of these systems are based on GA.

16

In general, the solutions to this set of hard problems (i.e. TA) are rarely reported except for a

few (see below), which may indirectly suggest that these problems are exceptionally hard. However,

to fully establish the capabilities and limitations of the proposed system, we have done another stage

of evaluation of the proposed system on a small sample of TA problems TA1, TA11 and TA21 of size

15 x 15, 20 x 15 and 20 x 20 respectively. These problems are chosen as representative sample

problems from the range of TA problems. Table 7 shows the results of this evaluation. The MREs for

these problems are 6.74%, 4.65% and 0.72% respectively. Although these results are promising, even

at the 100th generation (taking more than three days on a Pentium 1GHz machine) a better than

existing solution for these problems was not achieved. From the results, one could infer the proposed

system does not appear to be able to cope with very hard problems, such as Taillard’s problems. This

result, and other GA based systems not attempting TA problems, may suggest that the GA (at least in

its original form) needs to be further evaluated for its suitability as a tool for complex problems which

need a large number of generations and bigger population size.

Next, we have considered other recent systems proposed by Nowicki and Smutnicki (2005),

Beck et al. (2008) and Zhang et al (2008a), Nagata and Tojo (2009) and Nasiri and Kianfar (2012b)

that have tackled hard problems, including TA benchmark problems. All these systems have done

very well in finding quality solutions, taking only a very short computing time. One clear difference

we have noticed is that these systems are all bedded on a TS base, and did not use GA as one of their

local search methods. Although most of these systems also used other novel heuristics over and above

TS to achieve such an excellent performance, it appears that not using GA would have given an

advantage to these systems over the ones presented in Table 6, reducing the computer load that the

multi-point search of GA brings.

However, there have been some very recent systems which use GA as one of the local search

methods (Goncalves and Resende 2011, Qing-dao-er-ji and Wang 2012). We compared our system

with these systems. We found that Qing-dao-er-ji and Wang (2012) used a hybrid Genetic Algorithm

in which they optimally solved 33 out of 43 problems (three FT and 40 LA problems) that they have

attempted. They have used many novel ideas such as similarity between chromosomes in addition to

traditional fitness function in the selection of chromosomes for genetic operation to achieve such a

result. Of the 43 problems they solved, only 28 were from the list of problems that we tackled (i.e.

FT06, FT10, FT20 and LA01-25). They have solved 23 out of these 28 problems optimally, whereas

we have solved all the problems optimally except one (LA21). Although our system has been found to

be slow when the problems became bigger, we had no chance of contrasting the efficiency

performance of their system with ours as they did not also report the CPU times. Goncalves and

Resende (2011) report a system that they called ‘A biased random-key genetic algorithm’ which is

very fast in finding the solutions and is also very good at finding quality solutions. (For ease of

17

reference, let us call it ‘GR’ system). They used a biased random key generator to construct

chromosomes along with an extension of Akers (1956)’s graphical method to find feasible schedule

solutions. They solved a substantial number of problems optimally among the wider suite of 165

benchmark problems (comprising FT, LA, SWV, YN, TA, DMU, ORB and ABZ problems) that they

attempted and in addition they found 57 new, better solutions (i.e. new upper bounds). We found that

their chromosome construction is very similar to one being reported here in substance although the

GR system used a simpler scheme, which essentially involved a random number generation and a sort

operation. They also used Nowicki and Smutnicki (1996)’s neighbourhood to do a local search, as we

did. However, the GR system did use Taillard (1994)’s method in evaluating the moves quickly,

which we have not done. More importantly, their schedule encoding was done using an extension of

Akers (1956)’s graphical method and adjusting the resulting schedule, which is substantially faster

than the method we have used, which is a traditional method of building schedules by allocating the

operations to the earliest available positions without violating the precedence and disjunctive

constraints. Although both the systems have used GA and TS searches in very similar ways, probably

demanding similar amounts of computer power, notwithstanding the difference in power of computers

used, the substantial improvement in the GR system came from an appropriate faster encoding of the

chromosomes, efficient evaluation of the NS neighbourhood using Taillard (1994)’s method and

faster formation of schedules and evaluation of them using Akers’ method.

We have reconfirmed other researchers’ experience that when GA is used in a traditional way

of building a full schedule for every solution it produces and evaluating such a schedule, the

framework does do well in finding solutions quickly for simple and moderately hard problems by

virtue of its multi-point search process; however, for the complex problems, its requirement for

memory as well as computational time increases substantially. Maqsood et al (2012) also confirms in

their recent work this phenomenon of the systems consuming a substantial amount of computational

time when the population size and number of generations are increased. In contrast, the higher the

population size of GA, the better it is for small and medium sized problems. For example, optimal

solutions were found in seconds for simple problems, such as most of the LA and FT problems, on a

Pentium III 128 MB, 1 GHz PC.

In addition to what has been discussed above regarding faster evaluation of solutions, it is

worth exploring the possibility of cutting down the quantity of evaluations needs to be carried out. As

mentioned earlier, in the system presented here, GA is used in its fullest sense to minimise the

makespan by evaluating every solution in the GA solution pool for its fitness. The system also

evaluates the schedules after each move in TS, i.e. each change in the string. It can be noted that in

each generation, the system worked out the makespan for 5200 complete schedules on average, before

moving to the next generation, and eventually had to evaluate half a million schedules in each run.

One possible avenue for reducing the quantity of evaluations is to explore the use of binary encoding

18

of chromosomes in GA just to decide whether or not to retain a solution, which can be attractive in

reducing number of schedules that need to be evaluated fully (Rajendran and Vijayarangan 2001).

However, the reliability of pruning the solutions without full evaluation needs to be further

investigated. The way forward, could be a compromise between these two extreme forms of usage of

GA. Theoretically, it could be envisioned that a scheme could be developed in which GA solutions

could be evaluated quickly, without going through full evaluation of every single solution.

5. CONCLUSION

Even though many hybrid systems have been developed to provide a solution to the JSSP, only a

small number of systems appear to be using GA and TS. We have presented here one such hybrid GA

and TS framework. At the outset, it appeared that GA and TS could work together well, by exploiting

the complementary strengths of the global parallel search of GA and the local optimum avoidance of

TS for solving the JSSP. Furthermore, we have attempted to enhance the effectiveness of constituent

techniques, i.e. GA and TS, by incorporating features such as mid-cut heuristic to improve the quality

of the initial solution in GA and the restricted neighbourhood of Nowicki and Smutnicki (1996) in TS.

We focussed on developing a job shop scheduling system which is not problem-specific. The

framework suggested here was designed to cater for any arbitrary job shop scheduling problem, and,

for that matter, other scheduling problems such as flow shop. The input format of the system is

designed for intake of the raw production process data, such as job processing time, machine

information, and process sequence (normally given in any job process sheet) directly, and the same is

processed by the system through the use of a versatile chromosome to represent the schedule.

Furthermore, we used genetic operators on this chromosome representation, which always produced a

feasible schedule. Also, the system is designed in a way that a tabu search is done directly on the

chromosome representation of GA, making the input format common to both GA and TS. Due to its

ability to take raw process information and use it, the hybrid system appears to be one of the few

systems which can find a solution to any arbitrary job shop scheduling problem, rather than being a

problem-specific method. We have demonstrated that this system is capable of dealing with real-life

job shop problems (Meeran and Morshed 2011) as well as benchmark problems, without needing any

modification or adaptation, as shown in this paper. This complementary combination of GA and TS

solved 40 out of 43 benchmark problems from ORB, FT, LA and ABZ optimally; however, this was

done at a certain computational cost due to the generic nature of the framework. In particular, the

GA’s multiple solution searches did not allow the solution to be found quickly. When it came to TA

19

(Taillard 1993) problems, the system was not able to deliver the optimal (even improved) solutions

within a reasonable time.

We have compared the quality of the solution produced by the system proposed with that of

comparable systems. As shown in Table 4, Table 5 and Table 6, this system did better than

comparable systems on the problems that we have solved. All these systems have used GA as well as

TS in their natural form. However, there are other systems which have incorporated novel innovations

in GA and TS that have superior performance both in quality and computing time when it came to

hard problems such as TA problems. As mentioned elsewhere, we have to pay a price to produce a

versatile system which is not problem dependent. We wanted the system to deal with not only

benchmark problems but also real-life problems. We also wanted the system to deal with not only job

shop problems but also other types of scheduling problems. The cost of such a generic framework was

reflected in its performance when it came to hard problems.

We have identified two substantial improvements to speed up computing on the multi-point

search aspect resulting from GA: increase the speed of schedule evaluation as done by Goncalves and

Resende (2011) using methods such as Akers (1956) and reduce the number of full evaluations, in a

similar way to Ten Eikelder’s (1997) method. One could also explore the use of binary coding for

traditional GA representation (Rajendran and Vijayarangan 2001) and evaluation, which will be faster

in comparing schedules. Although it will not produce a working schedule itself, it will help prune

non-attractive solutions. It can be seen the proposed system did better than comparable systems

which have used GA in its traditional form in formulation and evaluation, the system did not do very

well when compared with the systems which used novel innovations incorporated in their GA search

and evaluation mechanisms. Hence yet another possibility is to add novel heuristics to the GA

mechanism as done by Zhang et al (2008b).

Other aspects of the system should also be looked into. We have chosen the parameters

manually, as mentioned earlier, and tuned them to get the perceived best performance. In any

possible future work, we will explore the adaptive memory feature more, and see whether the

parameters could be automatically optimised, which would improve the quality of the solutions. It is

agreed that automatic parameter selection is a complex problem especially considering multiple

factors involved in such a system as the one proposed here. However there has been some good work

done such as the dissertation by de Garca Lobo (2000) in the area of automatic parameter selection. In

this work parameters for GA are selected automatically to optimise the performance. Similarly, in the

work done by Burke et al (2003) the tabu search parameters are optimised. These could be a starting

point for a possible future addition to this work in automatic parameter selection. As the size of the

problem increases, the parameters such as population size of GA needed to get a good solution

20

increase tremendously, which automatically puts a high computational demand in terms of memory

requirement and computational time. Hence a higher specification computer, with more memory and

processor speed, should be considered for any further implementation of the system.

ACKNOWLEDGEMENT

Both the authors would like to thank Prof. Ball, then Head of Mechanical and Manufacturing

Engineering, at the University of Birmingham for allowing the first author to provide partial

funding to the second author for his PhD which formed the basis of this paper.

REFERENCES

Adams, J., Balas, E., and Zawack, D., 1988. The Shifting Bottleneck Procedure for Job-Shop

Scheduling. Management Science, 34(3), 391-401.

Applegate, D. and Cook, W., 1991. A Computational Study of the Job-Shop Scheduling Problem.

ORSA Journal on Computing, 3(2), 149-156.

Azizi, N., Zolfaghari, S., and Liang, M., 2010. Hybrid simulated annealing with memory: an

evolution-based diversification approach. International Journal of Production Research, 48(18),

5455-5480.

Barnes, J. W. and Chambers, J. B., 1995. Solving the Job Shop Scheduling Problem Using Tabu

Search. IIE Transactions, 27, 257-263.

Baykasoglu, A. and Ozbakir, L., 2010. Analyzing the effect of dispatching rules on the scheduling

performance through grammar based flexible scheduling system. International Journal of

Production Economics, 124 (2), 369-381.

Beck, J.C., Feng, T.K., and Watson J.P., 2011. Combining constraint programming and local

search for Job shop scheduling. INFORMS Journal on computing, 23(1), 1-14.

21

Burke, E.K., Kendall, G. and Soubeiga, E., 2003. A Tabu-Search Hyperheuristic for Timetabling

and Rostering, Journal of Heuristics, 9: 451–470, 2003, Kluwer Academic Publishers, The

Netherlands.

Cheng, R., Gen, M., and Tsujimura, Y., 1996. A Tutorial Survey of Job-Shop Scheduling

Problems using Genetic Algorithms-I. Representation. Computers & Industrial Engineering, 30,

4, 983-997.

Chiu, H.P., Hsieh, K. L., Tang, Y. T., and Wang C.Y., 2007. A Tabu Genetic algorithm with

search adaptation for the Job Shop Scheduling Problem. Proceedings of the 6th WSEAS Int.

Conference on Artificial Intelligence, Knowledge Engg, Data bases, Greece Feb 16-19, 2007

Dell’Amico, M. and Trubian, M., 1993. Applying Tabu Search to the Job-Shop Scheduling

Problem. Annals of Operations Research, 41, 231-252.

Della Croce, F., Tadei, R., and Volta, G., 1995. A Genetic Algorithm for the Job Shop Problem.

Computers and Operations Research, 22(1), 15-24.

Dorndorf, U. and Pesch, E., 1995. Evolution Based Learning in a Job-Shop Scheduling

Environment. Computers and Operations Research, 22(1), 25-40.

De Garca Lobo, F. M. P., (2000). The parameter-less Genetic Algorithm: Rational and automated

parameter Selection for simplified Genetic Algorithm operation, PhD Dissertation, University of

Lisbon, Lisbon.

Eswarmurthy, V. and

Tamilarasi, A., 2009. Hybridizing Tabu Search with ant colony

optimization for solving Job Shop Scheduling Problem, The international Journal of Advanced

Manufacturing Technology, 40, 1004-1015.

22

Fernandes, S. and Laurenco, H., 2007. A GRASP and Branch-and-Bound Metaheuristic for the

Job-Shop Scheduling. In C.Cotta and P.Cowling, eds. Evolutionary Computation in

Combinatorial Optimisation, Lecture Notes in Computer Science, Springer-Verlag Berlin,

4446/2007, 60-71.

Fisher, H. and Thompson, G. L., 1963. Probabilistic Learning Combinations of Local Job-Shop

Scheduling Rules. In J. F. Muth and G. L. Thompson, eds. Industrial Scheduling, Prentice Hall,

Englewood Cliffs, New Jersey, Ch 15, pp. 225-251.

Gen, M., Tsujimura, Y., and Kubota, E., 1994. Solving Job-shop Scheduling Problems by Genetic

Algorithm. Proceeding of 1994 IEEE International Conference on Systems, Man, and

Cybernetics, 2, 1577-1582.

Glover, F., 1989. Tabu search - Part I. ORSA Journal on Computing, 1, 190-206.

Goncalves, J. F., Mendes, J. J., and Resende, M. G. C., 2005. A Hybrid Genetic Algorithm for the

Job Shop Scheduling Problem. European Journal of Operational Research, 167, 77- 95.

Goncalves, J. F., and Resende, M. G. C., 2011. A biased random-key genetic algorithm for job

shop scheduling, AT&T Labs Research Technical Report.

González, M. A., Vela, C. R., and Varela, R., 2009. Genetic Algorithm Combined with Tabu

Search for the Job Shop Scheduling Problem with Setup Times. In Mira et al, eds. Methods and

Models in Artificial and Natural Computation. A Homage to Professor Mira’s Scientific Legacy,

Part I, Lecture Notes in Computer Science, Springer-Verlag, Berlin, 5601/2009, 265-274.

Hasan, S., Sarker, R., Essam, D., and Conforth, D., 2009. Memetic algorithms for solving jobshop scheduling problems. Memetic Computing , 1(1), 69–83.

Jain, A. S. and Meeran, S., 1998. Job-Shop Scheduling Using Neural Networks. International

Journal of Production Research, 36(5), 1249-1272.

Jain, A. S. and Meeran, S., 1999. Deterministic Job-Shop Scheduling: Past, present and future.

European Journal of Operation Research, 113, 390-434.

23

Jain, A. S. and Meeran, S., 2002. A multi-level hybrid framework applied to the general flowshop scheduling problem. Computers & Operations Research, 29, 1873–1901.

Jia, Z., Lu, X., Yang, J., and Jia, D., 2011. Research on job-shop scheduling problem based on

genetic algorithm. International Journal of Production Research, 49(12), 3585-3604.

Lawrence, S., 1984. Supplement to Resource Constrained Project Scheduling: An Experimental

Investigation of Heuristic Scheduling Techniques. Graduate School of Industrial Administration,

Carnegie-Mellon University, Pittsburgh, USA.

Maqsood, S., Noor, S., Khan, M. K., and Wood, A., 2012. Hybrid Genetic Algorithm (GA) for

job shop scheduling problems and its sensitivity analysis. International Journal of Intelligent

Systems Technologies and Applications, 11(1-2), 49-62.

Mattfeld, D. C., 1996. Evolutionary Search and the Job Shop: Investigations on Genetic

Algorithms for Production Scheduling. Physica-Verlag, Heidelberg, Germany.

Meeran, S. and Morshed, M. S., 2007. A hybrid configuration for solving job shop scheduling

problems. The 8th Asia Pacific Industrial Engineering and Management Science conference,

Kaohsiung, Taiwan, Dec 2007.

Meeran, S. and Morshed, M. S., 2011. A Hybrid Genetic Tabu Search Algorithm for solving job

shop scheduling problems – A case study. Journal of Intelligent Manufacturing, Springer, DOI:

10.1007/s10845-011-0520-x.

Morshed, M.S., 2006. A hybrid model for Job Shop Scheduling. Thesis (PhD). University of

Birmingham, UK

Nagata, Y. and Tojo, S., 2009. Guided Ejection Search for the Job Shop Scheduling Problem. In

C.Cotta and P.Cowling, eds. Evolutionary Computation in Combinatorial Optimisation, Lecture

Notes in Computer Science, Springer-Verlag Berlin, 5482/2009, 168-179.

Nasiri, M. and Kianfar, F., 2012a, A GES/TS algorithm for the job shop scheduling. Computers &

Industrial Engineering. 62(2012), 946-952.

24

Nasiri, M. and Kianfar, F., 2012b, A guided tabu search/path relinking algorithm for the job shop

problem. International Journal of Advanced Manufacturing Technology. 58(9-12), 1105-1113.

Nasr, N. and Elsayed , E. A., 1990. Job shop scheduling with alternative machines. International

Journal of Production Research, 28(9), 1595-1609.

Nowicki, E. and Smutnicki, C., 1996. A Fast Taboo Search Algorithm for the Job-Shop Problem.

Management Science, 42(6), 797-813.

Nowicki, E. and Smutnicki, C., 2005. An advanced tabu search algorithms for the job shop

problem. Journal of Scheduling, 8, 145–159.

Park, B. J., Choi, H. R., and Kim, H. S.,2003. A Hybrid Genetic Algorithm for the Job Shop

Scheduling Problems. Computers & Industrial Engineering, 45, 597-613.

Pérez, E., Posada, M. and Herrera, F., 2012. Analysis of new niching genetic algorithms for

finding multiple solutions in the job shop scheduling. Journal of Intelligent Manufacturing,

23(3), 341-356.

Pesch, E., 1993. Machine Learning by Schedule Decomposition, Working Paper, Faculty of

Economics and Business Administration, University of Limburg, Maastricht.

Pezzella, F. and Merelli, E., 2000. A Tabu Search Method Guided by Shifting Bottleneck for the

Job Shop Scheduling Problem. European Journal of Operation Research, 120,297- 310.

Qing-dao-er-ji, R and Wang, Y., 2012. A new hybrid genetic algorithm for job shop scheduling

problem, Computers & operations Research, 39, 2291-2299.

Rajendran, I. and Vijayarangan, S., 2001. Optimal design of a composite leaf spring using genetic

Algorithms. Computers and Structures, 79, 1121-1129

Satake, T., Morikawa, K., Takahashi, K., and Nakamura, N., 1999. Simulated annealing approach

for minimizing the make-span of the general job shop. International Journal of Production

Economics, 60-61, 515-522.

Seo, M. and Kim, D., 2010. Ant colony optimisation with parameterised search space for the job

shop scheduling problem, International Journal of Production Research, 48(4), 1143-1154.

25

Sevkli, M. and Aydin, M., 2006. A Variable Neighbourhood Search Algorithm for Job Shop

Scheduling Problems. Journal of Software, 1(2), 34-39.

Taillard, E., 1993. Benchmarks for basic scheduling problems. European Journal of Operational

Research, 64(2), 278-285.

Taillard, E., 1994. Parallel Taboo search techniques for the job shop scheduling problem. ORSA

Journal of Computing, 16(2), 108-117.

Tamilselvan, R. and Balasubramanie, P., 2009. Integrating Genetic Algorithm, Tabu Search

Approach for Job Shop Scheduling, International Journal of Computer Science and Information

Security, 2 (1), 1-6.

Ten Eikelder, H. M. M., Aarts, B. J. M., Verhoeven, M. G. A., and Aarts, E. H. L., 1997.

Sequential and Parallel Local Search Algorithms for Job Shop Scheduling. MIC’97 2nd

International Conference on Meta-heuristics, Sophia-Antipolis, France, 21-24 July, pp. 75-80.

Thomsen, S., 1997. Meta-heuristics Combined with Branch & Bound. Technical Report,

Copenhagen Business School, Copenhagen, Denmark.

Velmurugan P.S. and Selladurai, V., 2007. A Tabu Search Algorithm for Job Shop Scheduling

Prolbem with Industrial Scheduling Case Study. International Journal of Soft Computing, 2, 531537.

Wang, L. and Zheng, D. Z., 2001. An Effective Hybrid Optimisation Strategy for Job Shop

Scheduling Problems. Computers and Operations Research, 28, 585-596.

Weckman, G. R., Ganduri, C.V., and Koonce, D.A., 2008. A neural network job-shop scheduler.

Journal of Intelligent Manufacturing, 19(2), 191-201.

Yamada, T. and Nakano, R., 1996. Scheduling by Genetic Local Search with Multi-Step

Crossover. PPSN’IV Fourth International Conference on Parallel Problem Solving from Nature,

Berlin, Germany, 960-969.

Yu, H. and Liang, W., 2001. Neural network and genetic algorithm-based hybrid approach to

expanded Job Shop scheduling. Computers and Industrial Engineers, 39, 337-356.

26

Zhang C., Li, P., Rao, Y., and Guan. Z., 2008a. A very fast TS/SA algorithm for the job shop

scheduling problem. Computers and Operations Research, 35, 282–294.

Zhang, H., and Gen, M., 2009. A parallel hybrid ant colony optimisation approach for job-shop

scheduling problem. International Journal of Manufacturing Technology and Management, 16(12), 22 – 41.

Zhang, C., Rao, Y., and Li, P., 2008b. An effective hybrid genetic algorithm for the job shop

scheduling problem. International Journal of Advance Manufacturing Technology, 39, 965-974.

Zhang, G.,

Gao, L., and Shi, Y., 2010. A Genetic Algorithm and Tabu Search for Multi

Objective Flexible Job Shop Scheduling Problems. CCIE- 2010, International Conference on

Computing, Control and Industrial Engineering, 5-6 June 2010, 251 - 254

Zhang, R. and Wu, C., 2008. A hybrid approach to large-scale job shop scheduling. Applied

Intelligence, 32(1), 47-59.

Zhou, R., Nee, A. Y. C. and Lee, H. P., 2009. Performance of an ant colony optimisation

algorithm in dynamic job shop scheduling problems. International Journal of Production

Research, 47, 2903-2920.