
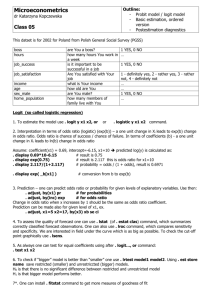

Center for Teaching, Research and Learning
Research Support Group
American University, Washington, D.C.
Hurst Hall 203
rsg@american.edu
(202) 885-3862

Advanced SPSS: Logit and Probit Regression

Workshop Objective

This workshop is designed to give a basic understanding of how to perform Logistic Unit (logit) and Probability Unit (probit) regressions in SPSS, which are standard ways of running regressions with discrete dependent variables. We will use a combination of the SPSS point-and-click interface and syntax coding.

Learning Outcomes

1. Understand Logit and Probit Models
2. Run preliminary analyses and data management
3. Use Logit and Probit Models
4. Interpret results

Scenario: We are interested in predicting employment status using predictors such as gender, age, marital status, number of children, and race. Employment status starts out as five levels, but we will convert it to two levels, and then explore some options for running analyses with a binary dependent variable.

I. Understanding Logit and Probit Models

A traditional regression (OLS) is designed to predict the value of a dependent variable using the values of one or more independent variables, on the assumption that the variables are linearly related. Depending on the values of the independent variables that you enter into the equation, the resulting predictions can come out as fractions, and can be very large or very small. Typically this is no problem, but sometimes you are dealing with data where the outcome cannot be a fraction and where there are upper and lower limits to acceptable answers. For example, if you have a model where “0” is the answer “No” and “1” is the answer “Yes”, what would you make of it if your model predicted that someone would answer “.64”, or “2.4”, or “-3”? There are certainly ways to make sense of those results, but the model itself seems non-ideal if it is giving you those sorts of predictions, because no one will ever actually produce those responses.
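To make the problem concrete, here is a minimal Python sketch (outside SPSS, standard library only). The intercept and slope are made-up numbers chosen only to show the shapes of the two kinds of prediction; they are not estimates from the workshop data, and the function names are hypothetical.

```python
import math

# Hypothetical coefficients, chosen only for illustration (not fitted to any data).
INTERCEPT = 0.5
SLOPE = 0.1

def linear_prediction(x):
    """Straight-line prediction: nothing keeps it between 0 and 1."""
    return INTERCEPT + SLOPE * x

def logistic_prediction(x):
    """Pass the same linear score through the logistic function,
    which always returns a value strictly between 0 and 1."""
    score = INTERCEPT + SLOPE * x
    return 1.0 / (1.0 + math.exp(-score))

for x in (-30, 0, 30):
    print(f"x = {x:>3}: linear = {linear_prediction(x):6.2f}, "
          f"logistic = {logistic_prediction(x):.4f}")
```

For extreme values of x the straight line leaves the 0 to 1 range, while the logistic version only approaches 0 or 1, so it can always be read as a probability.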
Logit and probit are two generalizations of regression analysis designed to deal with discrete dependent variables, for which fractional values do not make sense and there are a limited number of outcomes. Traditionally, logit and probit are performed on data with binary outcomes (e.g., Yes, No), but the methods can be generalized to cases with more outcomes (multinomial logit or probit, e.g., Republican, Democrat, Libertarian, Green, Other), including cases in which the outcomes have ordinal properties (ordered logit or probit, e.g., unemployed, employed part time, employed full time). In any of these cases, where a traditional regression would try to predict the most likely value of a dependent variable, logit and probit try to predict the probability that a specific value of the dependent variable will be found.

These differences are captured in the following graph. Notice that the linear model continues above and below the possible y values, whereas the logistic model switches relatively abruptly from predicting a “0” to predicting a “1”.

Why would you want to do this? It might make more sense when you see it with some data. Compare the following graphs; the first has continuous data that is fairly linear, the second has dichotomous data with a value of either 0 or 1:

Logit and probit differ in terms of A) how their results are traditionally displayed, B) the assumed underlying distribution, and C) the parameter estimates, which differ because the assumed distributions differ (the predicted probabilities are usually very close). Taking those one at a time: As we will discuss below, logit traditionally produces log-odds of a given outcome, which are then converted to odds, while probit traditionally produces z-scores, which are then converted to probabilities. As for the assumptions, logit assumes a logistic distribution, while probit assumes a normal distribution. Which type of model should you use? In most cases, there is no obvious right or wrong answer.
The results tend to be very similar, and preference tends to vary by discipline.

II. Preliminary Tests

We covered descriptive statistics in the introductory and intermediate courses. You always want to run descriptive statistics before you perform more advanced analyses. In this case we have included the code necessary for those analyses, but we will not spend time on them here.

First, run the “Get”, “Descriptives”, and “Frequencies” commands from the syntax file. Note in the last frequency table produced that our measure of employment, “empstat”, has many levels, which will make our analysis difficult. The following two commands recode that variable to have either two or three levels.

    RECODE empstat (10 thru 12=1) (21 thru 22=2) (ELSE=SYSMIS) INTO employed2.
    VARIABLE LABELS employed2 'Employment Status 2 Groups'.
    EXECUTE.
    VALUE LABELS employed2 1 'Employed' 2 'Unemployed'.

    RECODE empstat (30=3) (10 thru 12=1) (21 thru 22=2) (ELSE=SYSMIS) INTO employed3.
    VARIABLE LABELS employed3 'Employment Status 3 Groups'.
    EXECUTE.
    VALUE LABELS employed3 1 'Employed' 2 'Unemployed' 3 'Not in Labor Force'.

You can run the two “Crosstabs” commands to ensure that the recoding worked as desired.

    CROSSTABS
      /TABLES=empstat BY employed2
      /FORMAT=AVALUE TABLES
      /CELLS=COUNT
      /COUNT ROUND CELL.

    CROSSTABS
      /TABLES=empstat BY employed3
      /FORMAT=AVALUE TABLES
      /CELLS=COUNT
      /COUNT ROUND CELL.

There is a non-linear effect of age on employment. We can see this effect if we run the “Graph” command, then double click on the resulting graph and add a quadratic fit line. Alas, there is no elegant way to do this all in the code. We can then create a new variable called “agesq” to account for that non-linear effect.

    GRAPH
      /SCATTERPLOT(BIVAR)=age WITH employed2
      /MISSING=LISTWISE.

    COMPUTE agesq=age * age.
    EXECUTE.

III. Using Logit and Probit Models

When you are finished with your preliminary analyses, it is time to run the models.
Running these analyses in SPSS can be a bit tricky, because there are several commands that allow you to do the analyses, and each works in a slightly different way. Thus we will use a variety of pull-down menus and commands.

LOGIT

To perform a logistic regression using the menus:

Go to ANALYZE, then REGRESSION, then BINARY LOGISTIC.

To make it easier to select variables, right click on the list of variables and select “Display Variable Names”, then right click again and select “Sort Alphabetically”.

In the “Dependent” field, enter employed2.

In the “Covariates” field, enter age, agesq, female, educ_cat, married, black.

Press the “Categorical” button and, in the pop-up window, move all the categorical independent variables to the “Categorical Covariates” field. Select each of the categorical variables by clicking once on it; the “Change Contrast” panel is now active and you can change the “Reference Category” from “Last” to “First”, then press “Change”. (This simply tells SPSS to treat the first category of each categorical variable as the base against which the other categories of that variable are compared.)

Press Continue, then OK.

You could also perform this analysis using the “LOGISTIC REGRESSION” command in the syntax.

The first big chunk of the output, “Block 0: Beginning Block”, shows the analysis without including any of your variables; it is not very interesting. You are interested in “Block 1: Method = Enter”. In that block, the first thing you see is an “Omnibus” test that uses the χ2 (chi-square) statistic. It is significant, so we can move on to look at the rest of the model. Notice in particular the last box, which shows “Variables in the Equation”. The “B” values in this table are the change in the log of the odds per unit change in the independent variable, which are very hard to interpret. Luckily, by default SPSS gives us the last column, “Exp(B)”, which gives us the odds ratio: the factor by which the odds are multiplied per unit change in the independent variable. If the odds ratio is exactly 1 (read as 1:1), that means that the independent variable has no effect on the dependent variable.
When Exp(B) is above 1, there is a positive relationship between the variables; when it is below 1, there is a negative relationship. For example, the odds of being employed if you did not finish high school vs. if you finished college are “1 : 3.241”, meaning the odds of being employed are about 3.2 times as high if you finished college.

Multinomial Logit

A logistic regression with more than two levels of the dependent variable is a multinomial logistic regression. We created the “employed3” variable above for this purpose. To perform a multinomial logistic regression using the menus, follow the instructions below. In the next-to-last step, as an added bonus, we will have it create new variables with the estimated probability of an individual falling into each category, the category the model predicts is most likely, and the probability of that particular prediction.

Go to ANALYZE, then REGRESSION, then MULTINOMIAL LOGISTIC.

Move employed3 to the “Dependent” field, then press the “Reference Category” button and select “First Category”. By default the reference category is “Last”; selecting “First” makes “Employed” our comparison group.

In the “Factor(s)” field, enter female, educ_cat, married, black.

In the “Covariate(s)” field, enter age, agesq.

Press the “Save” button and check “Estimated response probabilities”, “Predicted category”, and “Predicted category probability”.

Press Continue, then OK.

You could also run this analysis using the “NOMREG” syntax. Note that in the syntax categorical variables come after “BY”, while continuous variables come after “WITH”.

The output here is a little more straightforward. Under the “Likelihood Ratio Tests” we can see that we have a significant effect of sex, education level, and marital status, but not of race. If we continue on to the “Parameter Estimates”, you can see that “Not in Labor Force” and “Unemployed” have been separately compared with our reference group, “Employed”.
Each table can be read on its own just like the tables above (though notice that the “Exp(B)” column has moved), and notice that the highest value of each categorical variable is now used as the reference category. You can also compare between the groups.

PROBIT

Probit is not quite as elegant through the pull-down menus, but it works pretty much the same in the code. To use the pull-down menus:

Go to ANALYZE, then REGRESSION, then ORDINAL REGRESSION, and select “Probit” from the “Link” menu under “Options”.

In the “Dependent” field, enter employed2.

In the “Factor(s)” field, enter female, educ_cat, married, black.

In the “Covariate(s)” field, enter age, agesq.

Press OK.

Note that all categorical variables here use the highest value of the variable as the contrast condition, as they did in the Multinomial Logit. You could also run this analysis using the “PLUM” command in the syntax file.

The output here is pretty similar to the logit outputs above, but is much more difficult to interpret. The “Parameter Estimates”, in the last part of the output, show the amount by which a unit change in the independent variable changes the z-score associated with the probability of the dependent variable. Using the output from the code that includes age: for every year you are older, the z-score increases by “.078” (modified by -.001 times the square of your age). Because you are using the coefficient to modify a z-score, the effect of any change depends on the values you started with. For example, if your variables add up to a z-score of 1, the probability of being employed is 84.13%. Adding another year of age (ignoring the effect of squared age) will increase your probability to about 85.95%, an increase of about 1.81 percentage points. However, if your z-score started at 2, an additional year of age would only increase your probability by about .39 percentage points, because you are further out on the tail of the normal curve. (These probabilities come from the standard normal distribution, which is tabulated in the back of most statistics textbooks.)
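The z-score lookup described above can also be done directly rather than from a printed table. The following is a minimal Python sketch (outside SPSS, standard library only). It uses the .078 age coefficient quoted in the text and, like the text, ignores the squared-age term; the function names are hypothetical. A printed table lists z-scores to only two decimals, so table-based figures can differ slightly from values computed this way.

```python
import math

AGE_COEFFICIENT = 0.078  # probit coefficient for age quoted in the text

def normal_cdf(z):
    """Standard normal cumulative probability P(Z <= z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def probability_gain(start_z, coefficient=AGE_COEFFICIENT):
    """Change in predicted probability when the z-score rises by `coefficient`."""
    return normal_cdf(start_z + coefficient) - normal_cdf(start_z)

for start_z in (1.0, 2.0):
    before = normal_cdf(start_z)
    after = normal_cdf(start_z + AGE_COEFFICIENT)
    print(f"z = {start_z:.1f}: {before:.2%} -> {after:.2%} "
          f"(gain of {probability_gain(start_z):.2%})")
```

The same .078 shift in the z-score produces a smaller change in probability at z = 2 than at z = 1, which is the point of the example: the effect of a probit coefficient depends on where on the normal curve you start.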
In the case of the discrete variables, note that the coefficients are all in comparison to the highest value in the category (there is no option in SPSS’s probit command to change the comparison group).

Additional Notes to Consider

a) Empty or sparse cells: You should check for empty or sparse cells by doing a crosstab between categorical predictors and the outcome variable. If a cell has very few cases, the model may become unstable or it might not run at all.

b) Separation or quasi-separation (also called perfect prediction): a condition in which the outcome does not vary at some levels of the independent variables.

c) Sample size: Both logit and probit models require more cases than OLS regression because they use maximum likelihood estimation techniques.
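The checks in notes a) and b) amount to counting cases in each cell of a crosstab. As a rough illustration outside SPSS, the Python sketch below builds such a table and flags sparse or empty cells. The records are made-up data, the function names are hypothetical, and the threshold of 5 cases is an assumption chosen for the example, not a rule from this workshop.

```python
from collections import Counter

def crosstab(records, predictor, outcome):
    """Count cases for every (predictor level, outcome level) pair,
    including pairs that never occur (count 0)."""
    counts = Counter((r[predictor], r[outcome]) for r in records)
    predictor_levels = sorted({r[predictor] for r in records})
    outcome_levels = sorted({r[outcome] for r in records})
    return {
        (p, o): counts.get((p, o), 0)
        for p in predictor_levels
        for o in outcome_levels
    }

def problem_cells(table, min_count=5):
    """Return cells with fewer than min_count cases (assumed threshold)."""
    return {cell: n for cell, n in table.items() if n < min_count}

# Made-up data: no married person is unemployed, so the outcome
# does not vary when married == 1 (an example of separation).
records = (
    [{"married": 0, "employed2": 1}] * 12
    + [{"married": 0, "employed2": 2}] * 7
    + [{"married": 1, "employed2": 1}] * 15
)

table = crosstab(records, "married", "employed2")
print(problem_cells(table))
```

A flagged cell with a count of 0 means that level of the predictor perfectly predicts the outcome, which is the situation described in note b).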