Coordinating IT developments in on-going (EC-) projects


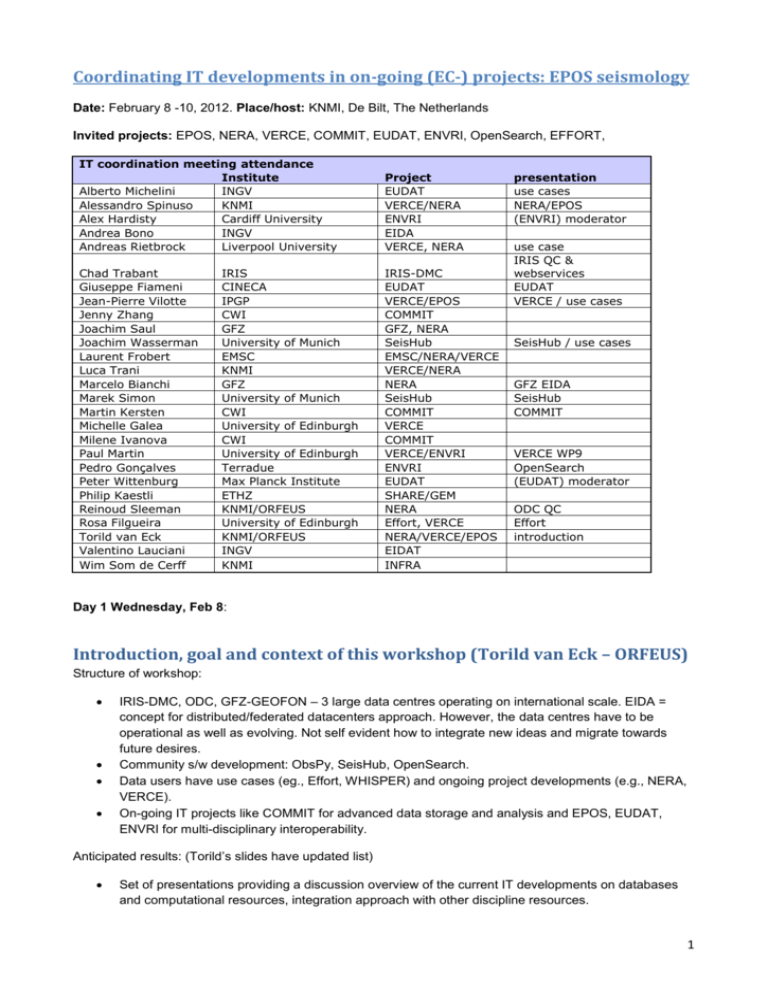

Coordinating IT developments in on-going (EC-) projects: EPOS seismology

Date: February 8-10, 2012
Place/host: KNMI, De Bilt, The Netherlands
Invited projects: EPOS, NERA, VERCE, COMMIT, EUDAT, ENVRI, OpenSearch, EFFORT

IT coordination meeting attendance

| Name | Institute | Project |
| --- | --- | --- |
| Alberto Michelini | INGV | EUDAT |
| Alessandro Spinuso | KNMI | VERCE/NERA |
| Alex Hardisty | Cardiff University | ENVRI |
| Andrea Bono | INGV | EIDA |
| Andreas Rietbrock | Liverpool University | VERCE, NERA |
| Chad Trabant | IRIS | IRIS-DMC |
| Giuseppe Fiameni | CINECA | EUDAT |
| Jean-Pierre Vilotte | IPGP | VERCE/EPOS |
| Jenny Zhang | CWI | COMMIT |
| Joachim Saul | GFZ | GFZ, NERA |
| Joachim Wasserman | University of Munich | SeisHub |
| Laurent Frobert | EMSC | EMSC/NERA/VERCE |
| Luca Trani | KNMI | VERCE/NERA |
| Marcelo Bianchi | GFZ | NERA |
| Marek Simon | University of Munich | SeisHub |
| Martin Kersten | CWI | COMMIT |
| Michelle Galea | University of Edinburgh | VERCE |
| Milene Ivanova | CWI | COMMIT |
| Paul Martin | University of Edinburgh | VERCE/ENVRI |
| Pedro Gonçalves | Terradue | ENVRI |
| Peter Wittenburg | Max Planck Institute | EUDAT |
| Philip Kaestli | ETHZ | SHARE/GEM |
| Reinoud Sleeman | KNMI/ORFEUS | NERA |
| Rosa Filgueira | University of Edinburgh | Effort, VERCE |
| Torild van Eck | KNMI/ORFEUS | NERA/VERCE/EPOS |
| Valentino Lauciani | INGV | EIDAT |
| Wim Som de Cerff | KNMI | INFRA |

Presentation column (entries in attendee order): use cases NERA/EPOS (ENVRI) moderator use case IRIS QC & webservices EUDAT VERCE / use cases SeisHub / use cases GFZ EIDA SeisHub COMMIT VERCE WP9 OpenSearch (EUDAT) moderator ODC QC Effort introduction

Day 1 Wednesday, Feb 8:

Introduction, goal and context of this workshop (Torild van Eck – ORFEUS)

Structure of workshop: IRIS-DMC, ODC, GFZ-GEOFON – 3 large data centres operating on an international scale. EIDA = concept for a distributed/federated data centre approach. However, the data centres have to be operational as well as evolving. It is not self-evident how to integrate new ideas and migrate towards future desires. Community s/w development: ObsPy, SeisHub, OpenSearch. Data users have use cases (e.g., Effort, WHISPER) and ongoing project developments (e.g., NERA, VERCE).
On-going IT projects like COMMIT for advanced data storage and analysis, and EPOS, EUDAT, ENVRI for multi-disciplinary interoperability.

Anticipated results (Torild’s slides have the updated list):
- Set of presentations providing a discussion overview of the current IT developments on databases and computational resources, and of the integration approach with other discipline resources.
- Contribution to the EarthCube debate (COOPEUS). Includes bringing IT developers and earth scientists closer together. How is EarthCube tackling this?
- Minimise overlaps.
- Set development priorities that fit within the current on-going projects.
- Possible additional developments to be anticipated and requiring additional resources.
- Input to EPOS, EUDAT, ENVRI. Clear definition of the EPOS work within ENVRI. Also input in the other direction.

NOTE: References to “=ENVRI problem no. X” are my annotations to highlight problem areas I heard about during the workshop that may actually be common with those of other ESFRI RIs. I will take these back into the ENVRI project for further consideration.

IRIS-DMC, datacenter presentation – developments (Chad Trabant – IRIS/DMC)
Presentations\IRIS DMC Service Overview.pptx

USA consortium. Data services; instrumentation services incl. global seismograph network and temporary experiment instruments loaned out; Earthscope – IRIS runs the seismology part of this. Education and public outreach, international development.

DMC currently holds 155TB (Jan 2012) – this is huge for open public data but small compared to oil companies! Data collected from 150-200 sources. Holds data back to 1868. People are requesting data from across the archive all the time.

Currently shipping out 85TB (2010), 180TB (2011) in response to 10s100,000s requests. ½ million requests per day, delivering 1TB per day. The increase in requests is because of the way the use of data is changing, not because there is more data to request.
Web services have had a huge impact on how users request data (in comparison with older email-based mechanisms). Retirement of old mechanisms is difficult. Currently using 4 Linux VMs in front of Isilon NAS disk arrays. Metadata stored in Oracle. Logs in Postgres. All fronted by a load balancer.

“Real-time” in seismology typically means 1-5 minutes latency. mSEED (miniSEED) is the standard seismology data format. SeedLink – standard open streaming protocol. ArcLink.

Web services are the future both at the external interface and internally. Standard REST. Once switched over to WS, can start changing the back-end infrastructure. See www.iris.edu/ws/ for data retrieval services and for data transformation / calculation services.

Users have many levels of data use = need to process raw data to deliver data products that match users’ needs. Also, many kinds of users, from novice, IT-illiterate through to professionals.

Also, IRIS Web Services Java Library – beta release 7/2/12. Serves data. Don’t need to know anything about what is behind it. Also, MatLab interface.

QC algorithms applied to raw data to make it ‘research ready’, e.g., to calculate/know the s:n ratio, remove noise, etc.

GFZ, data center presentation – developments EIDA (Marcelo Bianchi – GFZ/GEOFON)
Presentations\whatiseida.pdf

70 networks, 2800 stations, 15,000 channels feeding GEOFON. 35TB of data.

In Europe, many networks, many data centres, organisations and users. How to find the right data stream from the right place? EIDA creates a federation in which each data centre retains its own interests. ArcLink is the mechanism for federation. It delivers time-series data, or an inventory of holdings, in response to requests. It is an asynchronous mechanism.

**Look at IRIS-DMC web services and EIDA/ArcLink use cases as examples of the kinds of requests users want to make from federated data collections. Look at the set-up for seismic monitoring and data collection as a model example for biodiversity sensor data collection.
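To make the federation idea above a little more concrete, here is a minimal sketch of routing: each request is sent to the data centre that holds the requested network. It does not reproduce the ArcLink protocol. The routing table entries, the `WaveformRequest` type and the `route` function are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List

# Hypothetical routing table: network code -> data centre serving that network.
# Illustrative entries only; not taken from the real EIDA routing data.
ROUTING_TABLE: Dict[str, str] = {
    "GE": "GFZ",
    "NL": "ODC",
    "IV": "INGV",
}


@dataclass(frozen=True)
class WaveformRequest:
    network: str
    station: str
    channel: str
    start: datetime
    end: datetime


def route(requests: List[WaveformRequest]) -> Dict[str, List[WaveformRequest]]:
    """Group requests by the data centre that should serve them."""
    by_centre: Dict[str, List[WaveformRequest]] = {}
    for req in requests:
        centre = ROUTING_TABLE.get(req.network)
        if centre is None:
            # A node with an incomplete routing table cannot forward the request.
            raise KeyError(f"no route known for network {req.network!r}")
        by_centre.setdefault(centre, []).append(req)
    return by_centre
```

In a sketch like this every node needs a complete and current copy of `ROUTING_TABLE`, which is one way to picture why keeping the nodes synchronised matters.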
There were some challenging questions from the audience about the associated metadata that is needed and about the efficiency of synchronizing ArcLink nodes to maintain an accurate routing approach.

ODC, data center presentation – developments (Reinoud Sleeman – ODC/KNMI)
Presentations\IT-QC-ORFEUS.pdf

“Some considerations with respect to a seismic station” – a lot of QC work needs to be done to detect, for example, changes in the type of sensor – because the data centre has no control over seismic stations.

IT challenges:
a) efficient algorithm for locating the highest quality data in the archives
b) provenance
c) use of QC parameters in delivery services
d) PQLX web service

SOH = State of Health. N = north, E = east, Z = vertical channels.

ORFEUS will put the ADMIRE gateway in place.

Metadata changes are problematic. When metadata changes, should one go back and re-calculate all the QC metrics for already held data? Need also to retain the old poor data too. Versioning? Provenance?

**Do the kinds of approaches to QC outlined in this presentation have transferable and more generic applicability in other areas? = ENVRI solution to ENVRI common problem no. 1

OpenSearch (Pedro Gonçalves, Terradue)
Presentations\T2-EC-GENESIDEC-HO-11-117 OpenSearch applied to Earth Science.ppt

OpenSearch is a descriptor for multiple search templates. It can be adopted across multiple sites to homogenize the access to and discovery of distributed information, performed through most of the already available clients.

It expects to provide RESTful search interfaces, and the output formats can be selected among several options as long as they can be specified through MIME types. The most common format suggested is Atom.

Is the EPOS community proposing to support OpenSearch queries on the data it holds, with geo and time and other potential extensions? Will the various data centres that have been described eventually like to support the OpenSearch capability? This is a common solution they could adopt coming from ENVRI.
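The search templates mentioned above can be pictured with a small sketch. An OpenSearch description document advertises URL templates with placeholders such as `{searchTerms}`; the Geo and Time extensions add placeholders such as `{geo:box}`, `{time:start}` and `{time:end}`, and a trailing `?` marks a placeholder as optional. The host, path and query parameter names below are made up, and `fill_template` is a hypothetical helper rather than part of any OpenSearch library.

```python
import re
from urllib.parse import quote

# Hypothetical URL template in the style of an OpenSearch description document.
# The host and the query parameter names (q, bbox, start, end) are assumptions.
TEMPLATE = (
    "http://example.org/search?q={searchTerms}"
    "&bbox={geo:box?}&start={time:start?}&end={time:end?}"
)

# Matches {name} and {name?}; the optional "?" is captured separately.
_PARAM = re.compile(r"\{([^{}?]+)(\?)?\}")


def fill_template(template: str, values: dict) -> str:
    """Substitute placeholder values; optional placeholders may be left empty."""

    def substitute(match):
        name, optional = match.group(1), match.group(2)
        if name in values:
            return quote(str(values[name]), safe=",:")
        if optional:
            return ""
        raise KeyError(f"missing required parameter {name!r}")

    return _PARAM.sub(substitute, template)


url = fill_template(
    TEMPLATE,
    {
        "searchTerms": "miniSEED",
        "geo:box": "3.0,50.0,8.0,54.0",
        "time:start": "2012-02-08T00:00:00Z",
    },
)
```

A client only needs the template to form a query, which is why the same client can talk to any data centre that publishes one.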
How can OpenSearch sit on top of ArcLink?

Q. Can OpenSearch be used to solve some of the problems raised in the discussion about ArcLink? i.e., the need for ArcLink servers to synchronise with each other and to hold a copy of all routing information. Marcelo thinks it would be necessary to define some seismology extensions to make it possible to obtain the right granularity. It returns an Atom feed with the matching objects (metadata) but one hasn’t reached the data yet. … discussion can continue.

Checklist in the presentation lists what a data provider’s search engine has to provide in order to be accessible by OpenSearch. www.opensearch.org – describes capabilities of a search engine. Geospatial and temporal extensions added by GENESI.

Q. Does OpenSearch represent an alternative approach to the Catalogue interface approach?
A. It is the default binding in OGC CSW 3.0. http://www.genesi-dec.eu/presentations/OGC_201009.pdf

Chad – what’s the obvious advantage? Much more useful than failed attempts to adopt ontologies. BUT Firefox can make the link but it has no idea what to do with the data because it doesn’t understand the data format.
A. Need to define and register the MIME type so that universal readers start to appear, e.g., as has happened with netCDF. Also need to adopt and use aggregators to help discover sources of results that will match queries.

SeisHub (Joachim Wassermann & Marek Simon, LMU)
Presentations\joachim.wasserman.pdf

ObsPy – allows reading, writing, manipulating, processing and visualizing seismology data, including using old codes for analysis. = rapid apps development for seismology. www.obspy.org. Very nice tutorials and installers and a focus on students/young researchers are some of the reasons why it is so widely used.

SeisHub – a small institution or personal solution.
Only used by 1 institution. Unsustained and seems unlikely to see more widespread adoption.

Use cases (Andreas Rietbrock, Liverpool /VERCE)

Pilot project – RapidSeis: virtual computing through the NERIES data portal. User can alter the functionality of a virtual application using a plugin. Everything is done through a browser. Based on Edinburgh’s RAPID portlet kit to develop an Editor portlet to create/compile scripts/jobs (using SDX) and an Executor portlet to parameterise them and run them on whatever resources are available. Used for examining waveform data and localizing extracts from it. … ideas carried forward into the ADMIRE project … and to VERCE.

Seismic data sets are becoming denser and larger. Greater coverage of an area. Higher frequency sampling. Turning up in the local institutes where the data is collected / coordinated. What is the role of the data centre in this scenario? Add computational capacity. This is the DIR problem. Does it require a data staging approach or does it require moving computation to where the data is? = ENVRI common problem no. 2

Need local institutional infrastructure to deal day-to-day with large datasets that may not be deposited in the data centres. Once data has been moved, e.g., from a data centre or from a field expedition into the institute, one wants to maximize the utilization of it.

J-P Vilotte suggests a hierarchical infrastructure is needed.

Use cases (Alberto Michelini, INGV /EPOS/VERCE/EUDAT)
Presentations\Use_cases_in_Seismology.pdf

Make it feasible to do scientific calculations otherwise impossible on standard desktops, laptops, or small clusters. Has large data volumes (e.g., data mining). Very CPU-intensive applications (e.g., forward modelling and inversion). = ENVRI common problem no. 3

Metadata definition and assignment – in a way that allows scientists to select the data they need, especially when they are accessing data that is not from their native domain of expertise.
= ENVRI common problem no. 4

What are the requirements with respect to assigning metadata to real-time seismological data streams? What metadata has to be assigned? With what frequency? And how quickly? e.g., in order to satisfy the NERA and VERCE use cases for immediate and continuous analysis.

K. Jeffery has suggested a 3-level metadata scheme based on Dublin Core, CERIF, INSPIRE and other relevant standards.

EPIC or DataCite being proposed in EUDAT for persistent identifier mechanisms. PID scheme = ENVRI common problem no. 5. Needs coordination with other cluster projects.

Data centres should adapt to processing incoming real-time data as it arrives, and to QC it, downsample it if needed, assign metadata and store it. Build a search engine over it. Do it like Google. = ENVRI common problem no. 6

Use cases (Jean Pierre Vilotte, IPGP /VERCE/EPOS)

VERCE – e-science environment for data-intensive (seismological) research based on extensive SOA. Continuous waveform datasets going back 20 years. Community well structured around data infrastructures. EIDA with international links to USA and Japan.

Science = Earth interior imaging and dynamics; natural hazards monitoring; interaction of solid earth with ocean and atmosphere.

WHISPER – new methods using seismic ambient noise for tomography and monitoring of slight changes of properties in the Earth.

Typical workflow steps:

1. Downloading waveforms (= gather the data). Continuous waveform data in mseed/sac/wav formats and its metadata. 1-100s TB. Large number of small data sets, typically. From data centres and from local groups / institutes. Issues: gathering / aggregating a large number of datasets; ftp access and bandwidth; disk transfer and data ingestion to local storage capacity where maximal use can be made of it. Data has various lifecycle lengths.
2. Pre-processing (aligning waveforms, filtering and normalization). Trace extraction, aggregation, alignment, processing, filtering, whitening, clipping, resampling.
= low level data processing. Highly parallel. Often messy to do on, e.g., 5 years of data. Then has to be stored. Issues: different trace durations, frequencies, overlapping, metadata definition (is currently manual and there is no common understanding of what metadata is needed), trace format.
3. Computing correlations. More HPC intensive. Compute correlations of all pairs of traces. Mainly FFTs. Complexity increases with number of stations. Results have to be stored efficiently. 50 × 10**6 correlations, 5000 points = 22 hours. Spectral whitening adds 40%. Can be done on Grid and clusters. Issues: variable time windows, stacking strategy, trace query and manipulation, storage of results, metadata definition.
4. Orchestrated applications
   a. Measuring travel times and analysing travel time variations
   b. Tomographic inversions and spatial/temporal averaging. Leads to images and models.
   Issues: orchestrated workflow between the data-intensive applications and the HPC-intensive applications. Data movement and access across HPC and data-intensive infrastructures. Heterogeneous access policies and data management policies from one processing facility to the next.

Need reusable software libraries, workflows, support for interaction and traceability. Datasets derived during the processing have to be published so that they can be re-used for higher level processing, and then archived after several months or years.

What is the role of the data centres here and what is the vision? = ENVRI problem no. 7.

3 kinds of data lifecycle: persistent and resilient data as a public service; massive data processing pipelines; community analysis of large data sets.

Use cases (Rosa Filgueira, UEDIN /Effort)
Presentations\DIR-effort.pptx

Earthquake and failure forecasting (of rock) in real time, from controlled laboratory tests to volcanoes and earthquakes.

Project VERCE (Paul Martin, UEDIN / VERCE)
Presentations\paul.martin.pdf

Aim – create a good platform for DIR.
Most of the expertise / experience so far comes from the ADMIRE project – which consumes workflows and executes them on the resources registered with the ADMIRE gateway.

**How do VERCE and D4Science relate to one another?
**How do the ADMIRE platform and Taverna Server relate to one another? What opportunities are there for Taverna to generate DISPEL representations of its workflows?

Wittenburg: Workflows can be talked about on different levels, e.g., workflows for researchers using e.g. Taverna; workflows for data management, e.g., OGSA-DAI, iRODS.

Key issue is interfacing infrastructures like VERCE and others to PRACE and other compute infrastructures. Need to be able to hide heterogeneities of access from the users.

Project COMMIT (Martin Kersten, CWI / COMMIT)
Presentations\KNMIcommit.ppt

€100m project in the Netherlands. National broad activity for 5 years. http://www.commit-nl.nl/

Database technology for events storage and processing – trajectory analysis. MonetDB competes with MySQL and Postgres and has far better performance above 100GB. MonetDB, SQL and SciQL query languages, SciLens computational lenses.

SQL is based on the relational paradigm. SciQL changes that – symbiosis of relational and array paradigms. Allows array operations. Driven by needs of astronomy, seismology, remote sensing. See the TELEIOS project (www.earthobservatory.eu) for an example.

Dream machine.

Today’s scientific repositories – usually high volume, file based, with domain-specific standard formats (e.g. SEED). Locating data of interest is hard. Limited flexibility, scalability and performance. Raw data and metadata are often held separately, the latter in an RDBMS. Brought together through middleware and delivered to apps.

Why are DBMS techniques not better exploited in science? Desire to hold data more locally. Incompatibility between local stores and centralised data centres. Schema mismatch between relational data model and scientific models. .......
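As a rough picture of the arrangement described above, with raw data in files and the metadata in a relational store, the sketch below indexes waveform files in SQLite and looks up the files that overlap a time window. This is not MonetDB or SciQL. The table layout, the file paths and the helper names `build_index` and `find_files` are assumptions made for illustration.

```python
import sqlite3


def build_index(rows):
    """Create an in-memory metadata index over waveform files.

    Each row is (network, station, channel, start_time, end_time, path),
    with times as ISO 8601 strings so that they sort chronologically.
    """
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE files (network TEXT, station TEXT, channel TEXT,"
        " start_time TEXT, end_time TEXT, path TEXT)"
    )
    db.executemany("INSERT INTO files VALUES (?, ?, ?, ?, ?, ?)", rows)
    return db


def find_files(db, network, station, start, end):
    """Return paths of files whose time span overlaps [start, end)."""
    cursor = db.execute(
        "SELECT path FROM files WHERE network = ? AND station = ?"
        " AND start_time < ? AND end_time > ? ORDER BY start_time",
        (network, station, end, start),
    )
    return [row[0] for row in cursor]
```

The query only answers the question of where the data is; an application still has to open the files and decode them, which is the middleware step the notes mention.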
MonetDB Data Vault offers symbiosis between file repositories and databases with transparent just-in-time access to external data of interest. http://www.monetdb.org/Documentation/Cookbooks/SQLrecipies/DataVaults

Project EUDAT (Giuseppe Fiameni, CINECA / EUDAT)
Presentations\Fiumeni KNMI_Coordination_Meeting.pptx

VPH have already implemented mechanisms for running multi-scale applications involving elements of PRACE and EGI.

Simple flat; structured; detailed = 3 levels of metadata. Dublin Core, CERIF and domain specific. This is the proposal of Keith Jeffery. **Adopt it in ENVRI?

How does EUDAT intend to cope with deposition of real-time / streaming data? At present it only supports deposition of previously collected data sets. (Reveals a possible need for links between community-specific data stores (e.g., to collect sensor data) and repository data stores of EUDAT (e.g., to deposit previously collected data objects).) Thus, communities require a staging area to collect real-time data and gather it up into digital objects that can be deposited into EUDAT. = ENVRI problem no. 8 In ENVRI recognise this staging area as a high-level component in ODP, along with the EUDAT component.

Does the EPOS community have the notion of data objects? What are they? **Kahn model – consider adopting it in ENVRI?

Discussion

Set joint development priorities; minimise overlaps. Identify gaps. Establish the follow-up. Input to EPOS, EUDAT and ENVRI.

Topics
- How to deal with the complexity? – separate components managed by recognised authorities, registries, loose coupling, standards
- PID implementation – EUDAT proposal? (EPIC)
- QC procedures implementations
- Metadata (mapping) organization and definitions
- Versioning and provenance and traceability
- Handling large datasets: archives, computational resources, processing
- Federated data center: most efficient organisation
- Archiving secondary data products (synthetics / correlation)
- Workflow / dataflow / processing flow

PID implementation – EUDAT proposal? (EPIC)

DONA – Digital Object Numbering Authority – being established. Suggestion (obvious) is to use DONAs to persistently identify data objects. Community needs to define how to use DONAs. http://www.pidconsortium.eu/, http://www.handle.net/. Can’t find DONA website.

There are multiple possibilities for how to define allocation of PIDs to objects. Community needs to find the way most appropriate to its needs, and to support PIDs allocated to aggregations / collections of data.

Astronomers have got this cracked, for data and for the whole processing workflow. Why can’t this be used in seismology? http://www.astro-wise.org/what.shtml cf. Bechhofer.

QC procedures implementations

n/a

Metadata (mapping) organization and definitions

What metadata has to be assigned? With what frequency? And how quickly?

The PID is a reference to an object and it is used as part of the metadata. In theory PIDs are assigned to immutable objects; a modified object is a new object (new PID). The Data Center deals with the private backyard, data already used have a state.

Discussion points on the PID:
o PID on data samples vs PID on chunks: also chunks are often too many.
o PID on a station configuration on a specific day. That does not help to replicate the experiments using the data coming from a certain station.
o A PID could also be assigned to any web service request in a way that can be reproduced.
o PID per day linking to the PIDs of the chunks.

NERA/VERCE/EPOS have to keep EUDAT in contact with relevant people to make sense of the PID system and to agree on the assignment policy (it might also be applicable to different schemas if the community can’t agree on one).

Should be able to identify secondary data produced during the (VERCE) workflow process.

ISO 19115 metadata std for geographic information.
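The PID discussion points above can be pictured with a small sketch. It is not how EPIC or the Handle System issue identifiers; those are allocated through a registry. Here a content hash stands in for the identifier purely to show three properties discussed: a modified object gets a new identifier, a daily collection can link to the identifiers of its chunks, and a web service request can be identified reproducibly. The prefix and all function names are hypothetical.

```python
import hashlib
import json

# Placeholder prefix; not a registered Handle or EPIC prefix.
PREFIX = "hypothetical-prefix"


def object_pid(data: bytes) -> str:
    """Identifier derived from content: changed bytes give a new identifier."""
    return f"{PREFIX}/{hashlib.sha256(data).hexdigest()[:16]}"


def collection_pid(member_pids) -> str:
    """Identifier for an aggregation, e.g. one day linking to its chunk identifiers."""
    joined = "\n".join(sorted(member_pids)).encode("utf-8")
    return f"{PREFIX}/coll-{hashlib.sha256(joined).hexdigest()[:16]}"


def request_pid(params: dict) -> str:
    """Identifier for a web service request, independent of parameter order."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]
    return f"{PREFIX}/req-{digest}"


chunk_a = object_pid(b"waveform chunk 00:00-12:00")
chunk_b = object_pid(b"waveform chunk 12:00-24:00")
day = collection_pid([chunk_a, chunk_b])
```

A per-day identifier built this way changes whenever any chunk changes, which matches the view that a modified object is a new object. How many identifiers that produces is exactly the granularity question raised in the discussion points.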
From LifeWatch: Primary and derived information (including metadata) related to biodiversity research. Meta-information, that is: descriptive information about available information and resources with regard to a particular purpose (i.e. a particular mode of usage).

www.dataone.org/BestPractices

Versioning and provenance and traceability

An area to watch. Research objects. There may be multiple approaches to the reproducibility problem. It is naive to think there is only one solution. The distributed computing and HPC worlds may require different solutions, e.g., the way I have predicted seismograms for a global scale model on PRACE is completely different from reproducing the workflow process for cross-correlation calculations. Each needs a different kind of information.

Handling large datasets: archives, computational resources, processing

MapReduce approach in the future? Try a VERCE use case in MonetDB – exploratory. More people can use CPU cycles closer to the data rather than moving data to where the cycles are. Cloud is not a solution at present because it offers huge compute capacity but with a bottleneck of data I/O. Not obvious that cloud storage can solve the problem. It’s still a lot of data to move around.

Federated data centre concept (FDSN): most efficient organisation

Working system (EIDA) based on ArcLink. EUDAT studying other approaches. Aggregating metadata does not mean aggregating the data. Need to explore the technology stack promoted by EUDAT and to consider whether EUDAT will become a sustainable infrastructure. Moving data around continues to be a problem. No-one knows the answer to this.

AAA – what are the security requirements and solutions? Probably minimal until restricted access to data becomes necessary. Use eduGAIN possibly, although the relatively small scale of restriction may not make it worthwhile at this stage.

Archiving secondary data products (synthetics / correlation)

Starts with the community deciding what is important to archive, costing and justifying it.
Can’t define an IT solution until these fundamentals are known. L0 – raw data; L1 – QC data; L2 – filtered data; L3 – research level pre-processed data; L4 – research product. This categorisation comes from NASA remote sensing (I think).

**In ENVRI we need to think about how to handle each of these data products, vis-à-vis cost of archiving vs cost of regenerating when required again. Can we find common solutions here? = ENVRI common problem no. 9

Get the community closely involved in these decisions. They get to decide.

Workflow / dataflow / processing flow

?

Day 3 Friday, Feb 10:

9:00 – 12:00 reporting back from yesterday, followed by discussions
- Metadata definitions: how to start moving
- QC: establish QC standards; standardized QC services
- Projects: NERA, VERCE, EUDAT, ENVRI responsibilities
- Followup and actions

Metadata enabling data mining

Searching priorities (Chad): Location of the data (which federation data is where). A pointer that specifies where to go to fetch the data.

Discussion has to start about the meta information: what would you like to search on? EUDAT will start with the metadata task force soon. Therefore input needs to be pushed to them once the metrics are defined.

Laurent provided information about the same discussion within GEM: http://www.nexus.globalquakemodel.org/gem-ontology-taxonomy/posts

Data characteristics: time window, location, QC parameters (integrity, having gaps or not, spectral density), RMS values (statistics on the waveform).

Other examples discussed:
- Filtering on noise (currently possible)
- Mean (based on time window; defining the granules is an issue)
- IRIS calculates QC metrics on daily granularity (see Chad’s slides)
- IRIS has to take its own way at the moment, despite the EU decisions on metadata metrics.
- Network operators contribute by giving input metrics (IRIS won’t ask everybody though)
- SeisComP approach (timestamp configurable): Delay, Offset, RMS
- Similar metrics but different algorithms (further detail on the procedures is needed)

This discussion will receive a follow-up within the community. As a first step an EIDA wiki will be created.

Metadata actions:
Peter Wittenburg: a group of people must get together to define and store the concepts and the terms in order to define the metadata items. It has to be done within the FDSN. A useful starting point: http://www.dataone.org/bestPractices
The EIDA wiki is a first step for our community.

QC actions
- Test datasets are needed to check the validity of the packages and procedures.
- Prepare the SeisComP list of QC parameters as input for the Mustang design. Next step will be to have a closer look at the implementation of certain metrics.
- Marcelo will distribute the SeisComP QC list (first).
- ORFEUS will create an EIDA wiki for the definition of the QC metrics and metadata discussions.

Projects Coordination

VERCE
VERCE SA3: Portal and centralized administration of the platform in coordination with NERA development.
VERCE SA1-SA2 through CINECA and UEDIN: Data replication, AAI and resources management in coordination with EUDAT. It’s important to keep Amy (UEDIN) updated and actively involved in these activities.
VERCE JRA1-2 SA2: definition of use cases and workflow implementation.

Jean Pierre comment: Having people in low level discussions doesn’t mean that the projects are coordinated. The coordination needs to be formalized. Architects in all involved projects and the project managers have to coordinate these cross-project activities. This will receive attention in the next VERCE meeting. Use-case coordination is also needed between the two projects VERCE and EUDAT.

VERCE use cases: Reinoud Sleeman and Marek Simon will work out a use case using synthetics for Quality Control. Feedback to VERCE NA2.
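As a hedged illustration of the kind of QC metrics named in the discussion (offset, RMS, gaps), and of checking them against a small synthetic trace, here is a minimal sketch. The definitions used are assumptions chosen for simplicity: offset as the mean, RMS taken about the mean, and a gap wherever two samples are further apart than the sampling interval plus a tolerance. They are not the SeisComP or Mustang algorithms, and `qc_metrics` is a hypothetical name.

```python
import math


def qc_metrics(samples, times, sampling_interval, tolerance=0.5):
    """Return offset (mean), RMS about the mean, and gaps for one trace.

    samples: sample values; times: sample times in seconds, ascending.
    A gap is reported wherever consecutive samples are further apart than
    sampling_interval * (1 + tolerance).
    """
    if not samples or len(samples) != len(times):
        raise ValueError("samples and times must be non-empty and equal in length")
    offset = sum(samples) / len(samples)
    rms = math.sqrt(sum((s - offset) ** 2 for s in samples) / len(samples))
    limit = sampling_interval * (1 + tolerance)
    gaps = [(t0, t1) for t0, t1 in zip(times, times[1:]) if t1 - t0 > limit]
    return {"offset": offset, "rms": rms, "gaps": gaps}


# Synthetic trace with a known offset of 2, unit amplitude and one gap.
synthetic = qc_metrics(
    samples=[1.0, 3.0, 1.0, 3.0],
    times=[0.0, 1.0, 2.0, 5.0],
    sampling_interval=1.0,
)
```

Because the expected values of a synthetic trace are known in advance, two packages that implement "similar metrics but different algorithms" can be compared on the same input.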
EUDAT
Replication: PID production (needed also by VERCE catalogs). Collaboration with VERCE will focus on data staging (PRACE will also be involved).
AAI: EPOS is expected to take part in the discussion, which will also impact VERCE. We expect some clear directives within one year. The EPOS and VERCE communities should come up with requirements. Users of the VERCE use cases must be represented under the EPOS umbrella in order to find a pragmatic solution together with EUDAT on the adopted standards. Responsibility for this coordination should be taken by the HPC participants in VERCE in collaboration with EUDAT. We need to avoid technology-driven solutions, therefore requirements need to be provided from the use cases. Relevant partners need to be invited to the periodic video conferences. CINECA might represent VERCE in this discussion related to AAI.

* Notes: based on Alex Hardisty and Alessandro Spinuso meeting notes; merged 20/2/2012

Background information and links (provided at the workshop):
EUDAT (www.eudat.eu); iRODS (www.irods.org)
VERCE (www.verce.eu) coordinator Jean-Pierre Vilotte
COMMIT http://www.commitnl.nl/Messiaen53/Update%2013%20mei%202011/Toplevel%20document%20COMMIT%20%20April%202011.pdf (representative: Martin Kersten)
NERA (www.nera-eu.org) (representative: Torild van Eck)
- OGC web services: Web Notification Services; WMS/WFS for earthquake data viz with std GIS tools.
- Analysis of large datasets – execution of predefined workflows designed to filter, downsample and normalise large datasets.
- Resource orientation – unique and persistent ids for all kinds of raw data, data products, user composed collections, etc. including annotation.
- Seismic portal (www.seismicportal.eu)
ENVRI:
EPOS (www.epos-eu.org): WG7
Data centres: ORFEUS (www.orfeus-eu.org), IRIS-DMC (www.iris.edu), GFZ - GEOFON (http://geofon.gfz-potsdam.de/)
EarthCube (http://www.nsf.gov/geo/earthcube/)
OpenSearch (http://www.opensearch.org)
SeisHub (www.seishub.org)
ObsPy (www.obspy.org)
WHISPER (http://whisper.obs.ujf-grenoble.fr)