Revised notes from sessions

advertisement

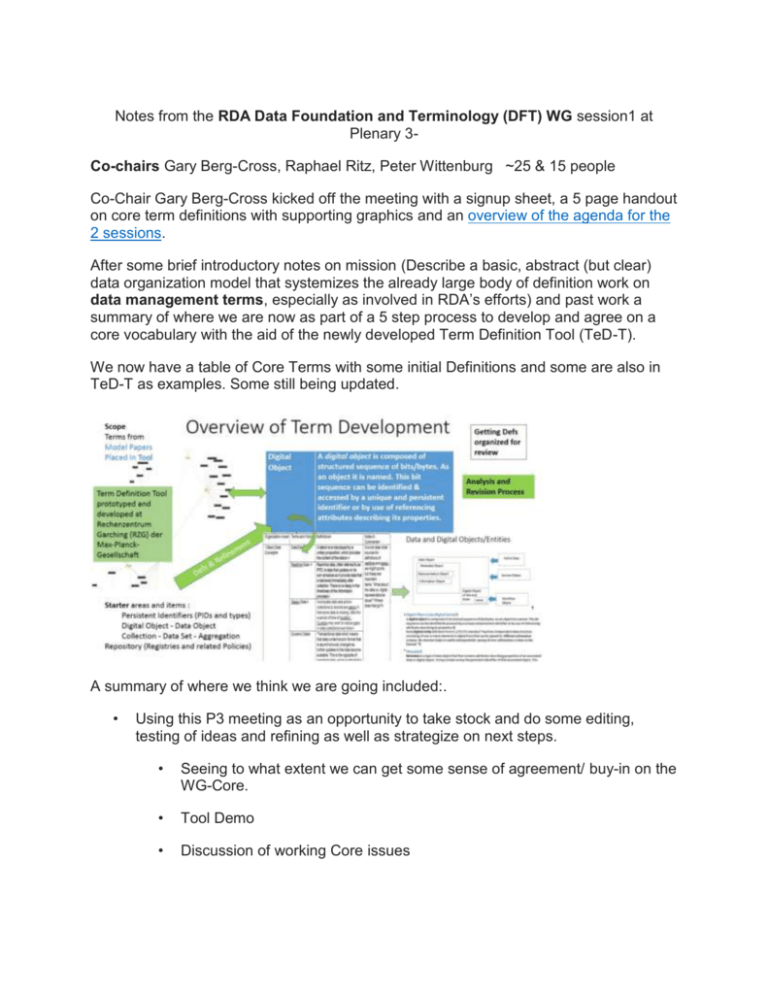

Notes from the RDA Data Foundation and Terminology (DFT) WG Session 1 at Plenary 3

Co-chairs: Gary Berg-Cross, Raphael Ritz, Peter Wittenburg
Attendance: ~25 & 15 people

Co-chair Gary Berg-Cross kicked off the meeting with a signup sheet, a 5-page handout on core term definitions with supporting graphics, and an overview of the agenda for the 2 sessions. After some brief introductory notes on the mission (Describe a basic, abstract (but clear) data organization model that systemizes the already large body of definition work on data management terms, especially as involved in RDA's efforts) and on past work, a summary was given of where we are now as part of a 5-step process to develop and agree on a core vocabulary with the aid of the newly developed Term Definition Tool (TeD-T). We now have a table of Core Terms with some initial definitions, some of which are also in TeD-T as examples; some are still being updated.

A summary of where we think we are going included:
• Using this P3 meeting as an opportunity to take stock, do some editing, test and refine ideas, and strategize on next steps.
• Seeing to what extent we can get some sense of agreement/buy-in on the WG Core.
• Tool demo.
• Discussion of working Core issues.

A Checklist of Issues Needed for DFT Term Progress was presented:
• Ramp-up of effort by the DFT WG community
• Review of table, categories and definition refinement
• Confirmation of scope of work
• How do we handle points of contention?
• What is the process by which we converge and move to adoption?
• Training in and exposure of the Term Tool (demo tomorrow)
• Use by other WGs for their needs: is our table example useful as a model for them?
• Further test of Use Case Scenarios

Most of the remainder of the first session was spent presenting and discussing Use Cases. Peter Wittenburg presented several scenarios of use, starting with P1, a CLARIN Collection Builder scenario, which creates collections out of data and gives the data citable PIDs and metadata in various repositories.
The collection itself also has a PID and metadata.

P2 Replication of data (a replication workflow) from different communities in the EUDAT Data Domain is not trivial and may require adding a PID and other metadata as part of replication in EUDAT data storage. For this we need several PID Info Types, including:
- cksm
- cksm type
- data_URL+
- metadata_URL+
- ROR_flag
- mutability_flag
- access_rights_store
- etc.

P3 Curate Gappy Sensor Data (delays in transmission) in Seismology. The data being collected have gaps since sensing is opportunistic, and they are dynamic since gaps are filled at random times. Hence a data collection looks different over time, with fewer gaps. But researchers have to be able to refer to the data collection as it was before the gaps are filled. In conversation it was noted that some thought "gappy" data referred to "incomplete" data; defining it by time makes sense.

P4 Crowd Sourcing Data Management requires curation/structuring of a stream of data so that it is permanently stored with proper metadata (MD), which may need to be created for that store, with all needed annotations of relations to other data, and with PIDs registered for all objects.

P5 Language Technology Workflows. As collections evolve over time, they are modified by workflows of processing modules/service objects, which are informed by the PID system and metadata. An example of the processing in such service objects is:
1. read collection MD
2. read MD
3. interpret MD & get PID
4. get data
5. process data & create new data
6. register PID
7. create MD*
8. update collection
9. if more in collection, go to 2
10. end

Reagan Moore's scenario concerned reproducible data-driven research and its capture as a service object/workflow of chained operations. To be reproducible, one must understand the processes and operations: Where did the data come from? How was the data created? How was the data managed?
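For P3, one way to read "defining it by time" is to keep, for each sample, both the time it was measured and the time it arrived, so that a reference can name a point in time and recover exactly the collection researchers saw then. The sketch below is a minimal illustration assuming integer timestamps and a fixed sampling step; `Sample`, `collection_as_of` and `gaps` are hypothetical names, not part of any seismology archive's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    sample_time: int   # when the sensor measured the value (assumed integer seconds)
    arrival_time: int  # when the value reached the archive (assumed integer seconds)
    value: float


def collection_as_of(samples, t_ref):
    """Return the samples visible at reference time t_ref, ordered by sample time.

    Samples that arrive after t_ref (late gap fills) are excluded, so the same
    t_ref always reproduces the same collection.
    """
    return sorted(
        (s for s in samples if s.arrival_time <= t_ref),
        key=lambda s: s.sample_time,
    )


def gaps(samples, start, end, step):
    """List expected sample times in [start, end) that have no sample."""
    present = {s.sample_time for s in samples}
    return [t for t in range(start, end, step) if t not in present]


# Hypothetical stream: five samples, two of which arrive late and fill gaps.
stream = [
    Sample(0, 0, 1.0),
    Sample(1, 1, 1.1),
    Sample(2, 9, 1.2),   # measured at t=2, arrives at t=9
    Sample(3, 3, 1.3),
    Sample(4, 12, 1.4),  # measured at t=4, arrives at t=12
]

early = collection_as_of(stream, t_ref=5)
late = collection_as_of(stream, t_ref=20)
print(gaps(early, 0, 5, 1))  # [2, 4]
print(gaps(late, 0, 5, 1))   # []
```

A citation would then carry the collection PID plus the reference time, rather than a PID alone, so the pre-fill state stays addressable while gaps keep closing.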
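The P5 service-object steps lend themselves to a short sketch. The one below is a minimal, in-memory illustration under stated assumptions: the PID service and data store are plain dictionaries, the PIDs (`TEST/1`, ...) are made up, and `register_pid`, `get_data` and `run_service_object` are hypothetical names, not a CLARIN or EUDAT API. It borrows three PID Info Types from the P2 list (`cksm`, `cksm type`, `data_URL`) so that data are checked on retrieval, and each new metadata record notes its source PID and the operation that produced it, which touches Reagan Moore's questions of where the data came from and how they were created.

```python
import hashlib
import itertools
from dataclasses import dataclass, field

# Hypothetical in-memory stand-ins for a PID service and a data store.
_next_id = itertools.count(1)
PID_RECORDS = {}  # PID -> PID info types (names borrowed from the P2 list)
STORE = {}        # data URL -> bytes


def register_pid(data_url, payload):
    """Mint a made-up PID and record checksum and location as PID info types."""
    pid = f"TEST/{next(_next_id)}"
    PID_RECORDS[pid] = {
        "cksm": hashlib.sha256(payload).hexdigest(),
        "cksm_type": "sha256",
        "data_URL": data_url,
    }
    return pid


def get_data(pid):
    """Resolve a PID to its bytes and check them against the registered checksum."""
    info = PID_RECORDS[pid]
    payload = STORE[info["data_URL"]]
    if hashlib.sha256(payload).hexdigest() != info["cksm"]:
        raise ValueError(f"checksum mismatch for {pid}")
    return payload


@dataclass
class Metadata:
    pid: str
    derived_from: list = field(default_factory=list)  # where did the data come from?
    operation: str = "deposit"                        # how was the data created?


@dataclass
class Collection:
    pid: str
    members: list = field(default_factory=list)  # Metadata records


def run_service_object(collection, process, op_name):
    """Apply `process` to each member (step 1: the collection MD is passed in)."""
    created = []
    for md in list(collection.members):  # steps 2 and 9: walk a snapshot of the members
        payload = get_data(md.pid)        # steps 3-4: resolve PID, get verified data
        result = process(payload)         # step 5: process data, create new data
        url = f"mem://{op_name}/{md.pid}"
        STORE[url] = result
        new_pid = register_pid(url, result)            # step 6: register PID
        new_md = Metadata(new_pid, [md.pid], op_name)  # step 7: create MD
        collection.members.append(new_md)              # step 8: update collection
        created.append(new_md)
    return created                        # step 10: end


# Usage: one deposited object, one uppercasing service object.
STORE["mem://raw/a"] = b"hello world"
source = register_pid("mem://raw/a", STORE["mem://raw/a"])
coll = Collection(pid="TEST/collection", members=[Metadata(source)])
new = run_service_object(coll, bytes.upper, "uppercase")
print(get_data(new[0].pid))  # b'HELLO WORLD'
print(new[0].derived_from)   # ['TEST/1']
```

Iterating over a copy of the member list lets new objects be appended to the collection at step 8 without being fed back into the same run.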
The RHESSys workflow, which develops a nested watershed parameter file (worldfile) containing a nested ecogeomorphic object framework and the full initial system state, includes researcher (human) operations such as those below, along with repository operations (further below). An abstracting graphic was provided to show some of the relations.
1. Pick the location of a stream gauge and a date
2. Access USGS data sets to determine the watershed that surrounds the stream gauge (may need a named digital object)
3. Access USDA for soils data for the watershed
4. Access NASA for LandSat data
5. Access NOAA for precipitation data
6. Access USDOT for roads and dams
7. Project each data set to the region of interest
8. Generate the appropriate environment variables
9. Conduct the watershed analysis
10. Store the workflow, the input files, and the results

There are an equal number of data repository operations, such as:
1. Authenticate the user (a response of the repository system)
2. Authorize the deposition
3. Add a retention period
4. Extract descriptive metadata
5. Record provenance information
6. Log the event
7. Create derived data products (image thumbnails)
8. Add access controls (collection sticky bits)
9. Verify checksum

We need to understand the vocabulary of each of these types of operations. Reagan proposed definitions for key terms, from bits, digital object, data object, representation object and operations to workflow and workflow object. In the Practical Policy work, these operations are driven by policy, so there are collection policies.

Hans Pfeiffenberger provided some relationship scenarios for research data objects, which are "complex objects" as framed by the Open Archives Initiative Object Reuse and Exchange (OAI-ORE). He considered what we mean by a research data object in a simple case involving an article in a classical journal that is related to an article in a data journal, each of which points to data in a repository.
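Hans Pfeiffenberger's simple case can be written down as an OAI-ORE aggregation. The sketch below emits N-Triples using the ORE terms `ore:describes` and `ore:aggregates` and the DCMI terms `dcterms:relation` and `dcterms:references`. Choosing those two DCMI properties for the links, the example.org URIs and the helper names are assumptions for illustration; a complete resource map would normally carry more metadata than shown here.

```python
# Namespace URIs of the OAI-ORE and DCMI Metadata Terms vocabularies.
ORE = "http://www.openarchives.org/ore/terms/"
DCTERMS = "http://purl.org/dc/terms/"


def simple_case(base):
    """Triples for one aggregation: a journal article, a data article and a dataset.

    `base` is a hypothetical URI prefix; all resource URIs are built from it.
    """
    rem = base + "rem"          # resource map that describes the aggregation
    agg = base + "aggregation"  # the research data object itself
    article = base + "article"
    data_article = base + "data-article"
    dataset = base + "dataset"

    triples = [(rem, ORE + "describes", agg)]
    for part in (article, data_article, dataset):
        triples.append((agg, ORE + "aggregates", part))
    # The classical article is related to the data article (assumed property).
    triples.append((article, DCTERMS + "relation", data_article))
    # Each article points to the data in the repository (assumed property).
    for paper in (article, data_article):
        triples.append((paper, DCTERMS + "references", dataset))
    return triples


def to_ntriples(triples):
    """Serialize (subject, predicate, object) URI triples as N-Triples lines."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in triples)


print(to_ntriples(simple_case("https://example.org/ro/1/")))
```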
Many more complex relationships occur in full studies, such as clinical trials, which include connections to method protocols, data collection forms, raw and cleaned data records, a published primary report, results DBs and conference reports. Thousands of pages may be involved in the aggregate research object, and we need to take all of these relations into account:

"A plethora of objects per research data set!
i.e.: a complex (yet fixed!) object
In different formats, under different control, ...
i.e.: in different repositories
Each with a distinct timeline (no circles or cycles!)
And then: Reliable and stable bidirectional linkages between all these elements"
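The two constraints in the quoted slide, distinct timelines with no cycles and reliable bidirectional linkages, can be checked mechanically. The sketch below is one possible model, not a proposed standard: elements of a study are nodes tagged with the repository that holds them, each link is stored in both directions at once, and `graphlib.TopologicalSorter` (Python 3.9+) orders the elements earliest first or raises `CycleError` if the links loop. The class name, element names and repository labels are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


class ResearchObject:
    """A fixed aggregate of elements held in different repositories."""

    def __init__(self):
        self.repository = {}  # element -> repository that holds it
        self.builds_on = {}   # element -> elements it depends on (earlier in time)
        self.used_by = {}     # element -> elements that depend on it (inverse links)

    def add(self, element, repository):
        self.repository[element] = repository
        self.builds_on.setdefault(element, set())
        self.used_by.setdefault(element, set())

    def link(self, later, earlier):
        """Record 'later builds on earlier' in both directions in one step."""
        self.builds_on[later].add(earlier)
        self.used_by[earlier].add(later)

    def links_are_bidirectional(self):
        """True if every forward link has its inverse and vice versa."""
        forward = {(later, earlier)
                   for later, earliers in self.builds_on.items()
                   for earlier in earliers}
        backward = {(later, earlier)
                    for earlier, laters in self.used_by.items()
                    for later in laters}
        return forward == backward

    def timeline(self):
        """Order elements earliest first; raises CycleError if links form a cycle."""
        return list(TopologicalSorter(self.builds_on).static_order())


# Usage: a cut-down clinical-trial object spread over three repositories.
trial = ResearchObject()
for element, repo in [("protocol", "registry"), ("raw_records", "data_repo"),
                      ("cleaned_records", "data_repo"), ("primary_report", "journal")]:
    trial.add(element, repo)
trial.link("raw_records", "protocol")
trial.link("cleaned_records", "raw_records")
trial.link("primary_report", "cleaned_records")
trial.link("primary_report", "protocol")
print(trial.timeline())
# ['protocol', 'raw_records', 'cleaned_records', 'primary_report']
print(trial.links_are_bidirectional())  # True
```

Writing both directions inside a single `link` call is the design choice that keeps the linkage reliable; in practice the two halves would live in different repositories, which is exactly where they can drift apart and why the separate check is useful.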