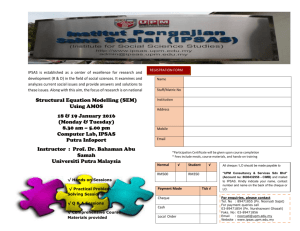

Annotating Output in AMOS


CFA and SEM Basics

Putting Titles in Amos output

Choose the Title icon from the Amos toolbox and click on the diagram. The default location is “Center on Page”. I prefer “Left align”. The default font size is 24.

To get Amos to compute a predetermined quantity, put the name invoking the quantity immediately after a backslash (the \ character). Put descriptive text in front of it, if you wish.

[Figure: Amos path diagram of a two-factor model (vis indicated by visperc, cubes, lozenges; lang indicated by paragrap, sentence, wordmean; residuals e1 through e6) with the title line "Chi-square = 19.860 df = 9 p = .019".]

Intro to SEM II - 1 02/10/16

Using Summary Data for Amos

Since the analyses in Amos are based on summary statistics (the means, variances, and covariances of the variables), only those summary statistics need be entered. They can be entered 1) as means, variances, and covariances or 2) as means, standard deviations, and correlations. The summary data must be entered using a fairly rigid format, however.

Here’s an example of correlations, means, and standard deviations prepared in SPSS for use by Amos.

[Figure: SPSS Example]

Here’s an example prepared in Excel.

[Figure: Excel Example]

Rules:

I. Rules regarding names of columns in the data file.

A. First column’s name is rowtype_ Note that the underscore is very important. Without it, Amos won’t interpret the data correctly.

B. Second column’s name is varname_ Again, the underscore is crucial.

C. 3rd and subsequent columns. The names of these columns are the names of the variables.

II. Rules regarding rows of the data file

A. Row 1: Contains the letter, n, in column 1. Contains nothing in column 2. Contains the sample size in subsequent columns.

B. Rows 2 through K+1, where K is the number of variables: Column 1 contains either “corr” without the quotes or “cov”, depending on whether the entries are correlations or covariances. Column 2 contains the variable names, in the same order as listed across the top.
Columns 3 through K+2 contain correlations or covariances, depending on what you have, up to and including the diagonal of the matrix.

C. Row K+2: Contains the word, stddev, in column 1, nothing in column 2, and standard deviations in columns 3 through K+2.

D. Row K+3: Contains the word, mean, in column 1, nothing in column 2, and means in columns 3 through K+2.

Analyzing Correlations

By default, Amos analyzes covariances. If you enter correlations along with means and standard deviations, it converts the correlations to covariances using the following formula:

$$r_{XY} = \frac{\mathrm{Cov}_{XY}}{S_X S_Y} \quad\text{which is equivalent to}\quad \mathrm{Cov}_{XY} = r_{XY}\, S_X\, S_Y$$

If you want to analyze correlations, you have to fake Amos out by making it think it’s analyzing covariances. To do that, enter 1 for each standard deviation and 0 for each mean. It will multiply each correlation by 1 and then analyze what it thinks is a covariance.

Example . . .

| rowtype_ | varname_ | M1T1 | M1T2 | M1T3 | M2T1 | M2T2 | M2T3 | M3T1 | M3T2 | M3T3 |
|---|---|---|---|---|---|---|---|---|---|---|
| n | | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 | 500 |
| corr | M1T1 | 1 | | | | | | | | |
| corr | M1T2 | 0.42 | 1 | | | | | | | |
| corr | M1T3 | 0.38 | 0.33 | 1 | | | | | | |
| corr | M2T1 | 0.51 | 0.32 | 0.29 | 1 | | | | | |
| corr | M2T2 | 0.31 | 0.45 | 0.19 | 0.44 | 1 | | | | |
| corr | M2T3 | 0.3 | 0.28 | 0.39 | 0.38 | 0.32 | 1 | | | |
| corr | M3T1 | 0.51 | 0.31 | 0.3 | 0.62 | 0.36 | 0.28 | 1 | | |
| corr | M3T2 | 0.35 | 0.48 | 0.21 | 0.25 | 0.68 | 0.25 | 0.46 | 1 | |
| corr | M3T3 | 0.28 | 0.19 | 0.39 | 0.24 | 0.23 | 0.59 | 0.37 | 0.36 | 1 |
| stddev | | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| mean | | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

EFA vs. CFA of Big Five scale testlets

The data are from Wrensen & Biderman (2005). The 50 Big Five items were used to compute 3 testlets (parcels) per dimension. Data were collected in two conditions: honest response and fake good. The honest response data will be analyzed here.

First, an Exploratory Factor Analysis

Analyze -> Data Reduction -> Factor ...

Choose Maximum Likelihood as the extraction method. Sometimes it fails. In those instances, choose Principal Axes (PA2).
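Looking back at the summary-data rules and the correlation-to-covariance conversion described before the EFA example, a small Python sketch may make them concrete. This is not anything Amos provides; the function names `corr_to_cov` and `summary_rows` and the standard deviations 2, 3, and 4 are hypothetical, chosen only to illustrate the formula Cov = r × Sx × Sy and the rowtype_/varname_ layout. The three correlations are those of M1T1, M1T2, and M1T3 from the example.

```python
def corr_to_cov(corr, sds):
    """Convert a full correlation matrix to covariances: cov_ij = r_ij * s_i * s_j."""
    k = len(sds)
    return [[corr[i][j] * sds[i] * sds[j] for j in range(k)] for i in range(k)]


def summary_rows(names, n, corr, sds, means):
    """Build rows in the rowtype_/varname_ layout (lower triangle plus diagonal)."""
    rows = [["rowtype_", "varname_"] + names]
    rows.append(["n", ""] + [n] * len(names))
    for i, name in enumerate(names):
        rows.append(["corr", name] + corr[i][: i + 1])
    rows.append(["stddev", ""] + sds)
    rows.append(["mean", ""] + means)
    return rows


names = ["M1T1", "M1T2", "M1T3"]
corr = [
    [1.00, 0.42, 0.38],
    [0.42, 1.00, 0.33],
    [0.38, 0.33, 1.00],
]

# Hypothetical standard deviations: the covariances differ from the correlations.
cov = corr_to_cov(corr, [2.0, 3.0, 4.0])

# The "fake out" trick: with every SD equal to 1, the covariances ARE the correlations.
unit = corr_to_cov(corr, [1.0, 1.0, 1.0])

# Rows as they would be typed into the data file when analyzing correlations.
rows = summary_rows(names, 500, corr, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0])
```

With K = 3 variables the layout has K + 3 data rows below the header (n, three corr rows, stddev, mean), which is the pattern the rules describe.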
I requested an oblique (correlated factors) solution, to see how much the factors are correlated.

Output you should see . . .

Factor Analysis

[DataSet1] G:\MdbR\Wrensen\WrensenDataFiles\WrensenMVsImputed070114.sav

Correlation Matrix

| | hetl1 | hetl2 | hetl3 | hatl1 | hatl2 | hatl3 | hctl1 | hctl2 | hctl3 | hstl1 | hstl2 | hstl3 | hotl1 | hotl2 | hotl3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| hetl1 | 1.000 | .729 | .313 | .020 | .066 | -.057 | .002 | -.039 | -.067 | -.085 | .001 | .045 | .139 | .127 | .153 |
| hetl2 | .729 | 1.000 | .306 | .049 | .165 | .036 | .000 | -.027 | -.007 | -.132 | .022 | .015 | .152 | .163 | .244 |
| hetl3 | .313 | .306 | 1.000 | .069 | -.061 | .044 | -.080 | -.067 | .045 | .078 | .169 | .150 | .117 | .061 | .127 |
| hatl1 | .020 | .049 | .069 | 1.000 | .463 | .120 | .137 | .090 | .150 | .027 | -.063 | -.023 | .096 | .037 | .202 |
| hatl2 | .066 | .165 | -.061 | .463 | 1.000 | .235 | .168 | .064 | .100 | -.098 | -.053 | -.103 | .055 | -.003 | .220 |
| hatl3 | -.057 | .036 | .044 | .120 | .235 | 1.000 | .041 | -.013 | -.035 | -.119 | -.050 | -.031 | -.113 | -.056 | .066 |
| hctl1 | .002 | .000 | -.080 | .137 | .168 | .041 | 1.000 | .734 | .610 | .163 | .155 | .136 | .080 | .071 | .099 |
| hctl2 | -.039 | -.027 | -.067 | .090 | .064 | -.013 | .734 | 1.000 | .622 | .099 | .075 | .056 | -.077 | -.031 | .082 |
| hctl3 | -.067 | -.007 | .045 | .150 | .100 | -.035 | .610 | .622 | 1.000 | .313 | .272 | .177 | .172 | .178 | .303 |
| hstl1 | -.085 | -.132 | .078 | .027 | -.098 | -.119 | .163 | .099 | .313 | 1.000 | .688 | .762 | .263 | .271 | .190 |
| hstl2 | .001 | .022 | .169 | -.063 | -.053 | -.050 | .155 | .075 | .272 | .688 | 1.000 | .649 | .248 | .275 | .070 |
| hstl3 | .045 | .015 | .150 | -.023 | -.103 | -.031 | .136 | .056 | .177 | .762 | .649 | 1.000 | .174 | .169 | .111 |
| hotl1 | .139 | .152 | .117 | .096 | .055 | -.113 | .080 | -.077 | .172 | .263 | .248 | .174 | 1.000 | .648 | .499 |
| hotl2 | .127 | .163 | .061 | .037 | -.003 | -.056 | .071 | -.031 | .178 | .271 | .275 | .169 | .648 | 1.000 | .466 |
| hotl3 | .153 | .244 | .127 | .202 | .220 | .066 | .099 | .082 | .303 | .190 | .070 | .111 | .499 | .466 | 1.000 |

Communalities (a)

| | Initial | Extraction |
|---|---|---|
| hetl1 | .562 | .655 |
| hetl2 | .588 | .824 |
| hetl3 | .199 | .161 |
| hatl1 | .263 | .282 |
| hatl2 | .335 | .792 |
| hatl3 | .125 | .086 |
| hctl1 | .619 | .677 |
| hctl2 | .628 | .835 |
| hctl3 | .558 | .588 |
| hstl1 | .711 | .841 |
| hstl2 | .572 | .586 |
| hstl3 | .641 | .737 |
| hotl1 | .512 | .684 |
| hotl2 | .480 | .608 |
| hotl3 | .436 | .437 |
Extraction Method: Maximum Likelihood.

Total Variance Explained

| Factor | Initial Eigenvalues: Total | Initial: % of Variance | Initial: Cumulative % | Extraction Sums of Squared Loadings: Total | Extraction: % of Variance | Extraction: Cumulative % | Rotation Sums of Squared Loadings (a): Total |
|---|---|---|---|---|---|---|---|
| 1 | 3.307 | 22.047 | 22.047 | 2.861 | 19.071 | 19.071 | 2.461 |
| 2 | 2.284 | 15.226 | 37.273 | 1.830 | 12.199 | 31.270 | 2.208 |
| 3 | 2.157 | 14.378 | 51.652 | 1.994 | 13.291 | 44.560 | 1.741 |
| 4 | 1.495 | 9.967 | 61.618 | 1.111 | 7.406 | 51.967 | 1.294 |
| 5 | 1.320 | 8.801 | 70.419 | 1.000 | 6.664 | 58.631 | 2.115 |
| 6 | .895 | 5.969 | 76.388 | | | | |
| 7 | .815 | 5.433 | 81.822 | | | | |
| 8 | .564 | 3.763 | 85.585 | | | | |
| 9 | .506 | 3.375 | 88.960 | | | | |
| 10 | .392 | 2.616 | 91.576 | | | | |
| 11 | .333 | 2.219 | 93.794 | | | | |
| 12 | .277 | 1.845 | 95.640 | | | | |
| 13 | .249 | 1.661 | 97.301 | | | | |
| 14 | .221 | 1.472 | 98.773 | | | | |
| 15 | .184 | 1.227 | 100.000 | | | | |

Extraction Method: Maximum Likelihood.

The scree plot is not terribly informative, so, as is the case in many instances, it must be taken with a grain of salt.

Goodness-of-fit Test

| Chi-Square | df | Sig. |
|---|---|---|
| 51.615 | 40 | .103 |

More on goodness-of-fit later.

Pattern Matrix (a)

| | Factor 1 (S) | Factor 2 (C) | Factor 3 (E) | Factor 4 (A) | Factor 5 (O) |
|---|---|---|---|---|---|
| hetl1 | -.067 | .027 | .813 | -.008 | .002 |
| hetl2 | -.105 | .045 | .895 | .087 | .038 |
| hetl3 | .122 | -.048 | .360 | -.050 | .016 |
| hatl1 | .030 | .051 | -.034 | .505 | .107 |
| hatl2 | .007 | -.001 | .033 | .885 | .055 |
| hatl3 | -.017 | -.029 | .004 | .282 | -.082 |
| hctl1 | .021 | .811 | .012 | .053 | -.010 |
| hctl2 | -.067 | .943 | .023 | -.075 | -.139 |
| hctl3 | .119 | .688 | -.046 | .009 | .153 |
| hstl1 | .891 | .029 | -.127 | .003 | .066 |
| hstl2 | .743 | .033 | .030 | .003 | .040 |
| hstl3 | .880 | -.007 | .074 | .007 | -.100 |
| hotl1 | .013 | -.065 | -.013 | -.033 | .834 |
| hotl2 | .019 | -.021 | .009 | -.096 | .773 |
| hotl3 | -.015 | .083 | .094 | .158 | .590 |

Extraction Method: Maximum Likelihood. Rotation Method: Oblimin with Kaiser Normalization. a. Rotation converged in 5 iterations.

[Figure: Path Diagram of five factors E, A, C, S, and O, each with three indicators (E1-E3, A1-A3, C1-C3, S1-S3, O1-O3).]

Factor Correlation Matrix

| Factor | 1 (S) | 2 (C) | 3 (E) | 4 (A) | 5 (O) |
|---|---|---|---|---|---|
| 1 (S) | 1.000 | .179 | .074 | -.158 | .314 |
| 2 (C) | .179 | 1.000 | -.061 | .157 | .155 |
| 3 (E) | .074 | -.061 | 1.000 | .030 | .218 |
| 4 (A) | -.158 | .157 | .030 | 1.000 | .054 |
| 5 (O) | .314 | .155 | .218 | .054 | 1.000 |

Mean of factor correlations is .096.

Extraction Method: Maximum Likelihood.
Rotation Method: Oblimin with Kaiser Normalization.

If the factors were truly orthogonal and there were no other effects operating, the mean of factor correlations would probably be smaller than .096. This is an issue we’ll return to. The S~O correlation of .314 is one that some people would kill for.

Confirmatory Factor Analysis of the same data using Amos

The procedure . . .

1. Open Amos.

2. File -> Data Files… (Wrensen_070114)
a. Click on “File Name”.
b. Choose the name of the file containing the data.
c. Click on OK.

3. Draw the path diagram
a. Choose the rectangle Observed Variable tool.
b. Draw as many rectangles as there are observed variables.
c. Give the variables the same names they have in the SPSS file.
d. Draw residual latent variables using the latent variable tool.
e. Draw factors using the latent variable tool.
f. Connect the latent and observed variables using either regression arrows or correlation arrows.

The resulting Input path diagram should look something like the following . . . (Inclassexample081030)

[Figure: Input path diagram.]

cmin is Amos’s term for chi-square. Amos allows you to request values of selected quantities for a Title.

Notes . . .

1. I’ve allowed the factors to be correlated. So I’ll estimate the factor correlations.

2. I’ve assigned values to some of the regression arrows. Specifically, one of the regression arrows connecting each latent variable to its indicator(s) must be 1. (Alternatively, I could have fixed the variances of the factors and estimated all regression arrows.) So one of the three arrows emanating from each Big 5 factor has been set to 1. Also, the regression arrow connecting each residual latent variable to its indicator has been set to 1 (alternatively, the residual variance could be set to 1). The variables to which fixed arrows are connected are called the reference indicators.
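The two identification choices in Note 2 (fix one loading at 1, or fix the factor variance at 1) can be illustrated with a small Python sketch. It assumes a single factor with three indicators, and every number in it (the loadings, the factor variance of 20, the residual variances) is hypothetical, not taken from the Amos output. The point is only that rescaling the loadings by the square root of the factor variance leaves the model-implied covariances, and therefore the standardized loadings, unchanged.

```python
import math


def implied_cov(loadings, factor_var, resid_vars):
    """Model-implied covariances for one factor: lambda_i * lambda_j * phi (+ theta_i on the diagonal)."""
    k = len(loadings)
    return [
        [
            loadings[i] * loadings[j] * factor_var + (resid_vars[i] if i == j else 0.0)
            for j in range(k)
        ]
        for i in range(k)
    ]


def standardized_loadings(loadings, factor_var, resid_vars):
    """Standardized loading = lambda_i * sqrt(phi) / SD of indicator i."""
    sigma = implied_cov(loadings, factor_var, resid_vars)
    return [
        loadings[i] * math.sqrt(factor_var) / math.sqrt(sigma[i][i])
        for i in range(len(loadings))
    ]


resid = [10.0, 8.0, 30.0]  # hypothetical residual variances

# Parameterization A: first loading fixed at 1 (reference indicator), factor variance free.
load_a, phi_a = [1.0, 1.1, 0.3], 20.0

# Parameterization B: factor variance fixed at 1, all loadings free.
load_b, phi_b = [l * math.sqrt(phi_a) for l in load_a], 1.0

cov_a = implied_cov(load_a, phi_a, resid)
cov_b = implied_cov(load_b, phi_b, resid)
std_a = standardized_loadings(load_a, phi_a, resid)
std_b = standardized_loadings(load_b, phi_b, resid)

max_cov_diff = max(abs(cov_a[i][j] - cov_b[i][j]) for i in range(3) for j in range(3))
max_std_diff = max(abs(a - b) for a, b in zip(std_a, std_b))
```

Both `max_cov_diff` and `max_std_diff` are zero up to floating-point rounding, while the unstandardized loadings in `load_a` and `load_b` differ.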
Here’s a slightly different version of the same model, this time with factor variances fixed at 1 and ALL loadings estimated . . . (MDB\R\Wrensen\WrensenAmos\Inclass example cfa 131030)

After running the above Amos program, the Unstandardized estimates output window looks like the following.

[Figure: Unstandardized estimates output window, with the following callouts.]

Variances of factors: A factor variance of 0 would mean that the factor was not influencing any observed variables.

Goodness-of-fit statistics.

Raw regression slopes.

Covariances of factors: 0 means two factors are uncorrelated.

Variances of the residuals: 0 would mean that there is no variation in the variable that is unexplained.

Note that there are no crossloadings. So all influence on each variable is assumed to come from only 1 factor. Absence of crossloadings forces a “simple structure” solution.

Structural Equations Models: There is an equation relating each endogenous variable to other variables, e.g.,

$$\mathrm{HETL1} = 0 + 1.00\,\mathrm{HE} + 1\,e_1$$
$$\mathrm{HETL2} = 0 + 1.09\,\mathrm{HE} + 1\,e_2$$
$$\mathrm{HETL3} = 0 + 0.25\,\mathrm{HE} + 1\,e_3$$

Means and intercepts are not estimated, so all data are centered. (Refer to Factor Analysis Equations in 1st FA lecture.)

Here’s the output window for the other example: same data, slightly different choices for what parameters to fix and what to estimate . . .

[Figure: Unstandardized estimates output window for the second parameterization, with callouts marking which values are the same as in the version on the previous page and which are different.]

This is the standardized estimates output window.

[Figure: Standardized estimates output window, with the following callouts.]

Standardized regression slopes: these are simple correlations when each indicator is influenced by only one factor.

Proportion of variance of indicators accounted for by the model. (This is the square of the standardized loading, since each indicator is affected by only one factor.) Standardized variances of the e's are set = 1 and are not printed by Amos.

Correlations of factors. Mean of factor correlations is .11, about the same as in the EFA.

All standardized loadings should be “large”. Between .3 and .5 is a gray area. Larger is OK. Smaller is not.
Goodness-of-fit . . .

1. Chi-square p-value should be bigger than .05. (It’s not here, and it hardly ever is.)

2. CFI should be larger than .9 or .95.

3. RMSEA should be less than .05. (It’s not here.)

Mean of factor correlations is +0.11. If the Big 5 are orthogonal, it should be close(r) to zero.

Here’s the Standardized solution from the differently parameterized version of the model, in which the factor variances were set equal to 1 and all loadings of items onto factors were estimated . . .

[Figure: Standardized estimates output window for the second parameterization.]

Note that ALL of the standardized estimates are identical to those obtained from the original parameterization.

Bottom Line: Changing options for which parameters are fixed vs estimated affects ONLY the unstandardized estimates. The standardized estimates will be the same for all choices regarding which parameters are fixed and which are estimated.

Comparison of EFA and CFA factor correlations . . .

| Factors | EFA r | CFA φ |
|---|---|---|
| E~A | .030 | .16 |
| E~C | -.061 | -.02 |
| E~S | .074 | -.07 |
| E~O | .218 | .24 |
| A~C | .157 | .16 |
| A~S | -.158 | -.11 |
| A~O | .054 | .09 |
| C~S | .179 | .21 |
| C~O | .155 | .10 |
| S~O | .314 | .34 |

Correlation of the correlations . . . r = .871

This indicates that in this particular instance, the estimates of factor correlations from a CFA are about the same as the estimates of factor correlations from an EFA. Most people would say that the EFA correlations are the gold standard, since the CFA model might be distorted by forcing all of the cross-loadings to be zero.

What should be the indicators of a latent variable?

A rule-of-thumb is that you should have at least three indicators for each latent variable in a structural equation model, including factor analysis models.

Ideally, this means that you should have three separate indicators of the construct. Each of these indicators might be a scale score: the average or sum of a group of items, created using the standard (Spector, DeVellis) techniques.
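As a brief aside before continuing with indicators, the comparison of EFA and CFA factor correlations above can be checked with a few lines of Python. This is only a sketch using the rounded values printed in the table, with a hand-written Pearson correlation (the helper names `mean` and `pearson_r` are mine), so the result is expected to agree with the reported r = .871 only to about two decimals.

```python
import math

# Factor correlations in the order E~A, E~C, E~S, E~O, A~C, A~S, A~O, C~S, C~O, S~O
efa = [0.030, -0.061, 0.074, 0.218, 0.157, -0.158, 0.054, 0.179, 0.155, 0.314]
cfa = [0.16, -0.02, -0.07, 0.24, 0.16, -0.11, 0.09, 0.21, 0.10, 0.34]


def mean(values):
    return sum(values) / len(values)


def pearson_r(x, y):
    """Pearson correlation computed from deviations about the means."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


mean_efa = mean(efa)  # compare with the .096 reported for the EFA
mean_cfa = mean(cfa)  # compare with the .11 reported for the CFA
r_of_rs = pearson_r(efa, cfa)  # compare with the reported .871
```

The small discrepancy from .871 is what one would expect if the original computation used unrounded CFA estimates.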
Often, however, especially for studies designed without the intent of using the SEM approach, only one collection of items (not scale scores) is available. There are four possibilities with respect to this situation.

1. Let the individual items be the indicators of the latent variable.

I think ultimately, this will be the accepted practice. The following example is from the Caldwell, Mack, Johnson, & Biderman, 2001 data, in which it was hypothesized that the items on the Mar Borak scale would represent four factors. The following is an orthogonal factors CFA solution.

This is conceptually promising, but it is cumbersome in Amos using its diagram mode when there are many items. (Ask Bart Weathington about creating a CFA of the 100-item Big Five questionnaire.) This is not a problem if you’re using Mplus, EQS, or LISREL or if you’re using Amos’s text editor mode.

Goodness-of-fit indices generally indicate poor fit when items are used as indicators. I believe that this poor fit is due to failure of the models to account for the accumulation of minor aberrations due to item wording similarities, item meaning similarities, and other miscellaneous characteristics of the individual items.

2. Form groups of items (testlets or parcels), 3 or more parcels per construct, and use these as indicators.

This is the procedure followed by many SEM researchers. It allows multiple indicators without being too cumbersome, and it has many advantageous statistical properties.

The following is from Wrensen & Biderman (2005). Each construct was measured with a multi-item scale. For each construct, an exploratory factor analysis was performed and the item with the lowest communality was deleted. Then testlets (aka parcels) of three items each were formed: items 1, 4, 7 for the first testlet, items 2, 5, 8 for the second, and items 3, 6, 9 for the third. The average of the responses to the three items of each testlet was obtained, and the three testlet scores became the indicators for a construct.
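The testlet construction just described can be sketched in Python. This is a minimal illustration, not the authors' actual scoring code: the function name `make_testlets` and the nine responses are hypothetical, and the sketch assumes the lowest-communality item has already been dropped and any reverse-scoring already done.

```python
def make_testlets(item_scores, n_testlets=3):
    """Average every n_testlets-th item: items 1,4,7 / 2,5,8 / 3,6,9 when there are nine items."""
    testlets = []
    for start in range(n_testlets):
        members = item_scores[start::n_testlets]  # e.g. positions 0, 3, 6 are items 1, 4, 7
        testlets.append(sum(members) / len(members))
    return testlets


# Hypothetical responses of one person to items 1 through 9 of one scale.
responses = [4, 2, 5, 3, 3, 4, 5, 1, 3]
testlet_scores = make_testlets(responses)  # three indicators for this construct
```

Interleaving the items this way, rather than taking items 1-3, 4-6, and 7-9, spreads neighboring items across different testlets.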
(Note that the testlets are like mini scales.)

We have found that the goodness-of-fit measures are better when parcels are used than when items are analyzed. See the separate section on Goodness-of-fit and Choice of indicator below.

This is a common practice. There is some controversy in the literature regarding whether or not it’s appropriate. My personal belief is that the consensus of opinion is swinging away from the use of parcels as indicators.

One problem with parcels is that averaging may obscure specific characteristics of items, making them “invisible” to the researcher. Wording (positive vs negative) and evaluative content of items (high valence vs. low valence) may be obscured. The “invisible” effects may be important characteristics that should be studied.

3. Develop or choose at least 3 separate scales for each latent variable. Use them.

This carries parceling to its logical conclusion.

4. Don’t have latent variables. Instead, form scale scores by summing or averaging the items and use the scale scores as observed variables in the analyses.

This is called path analysis. Not using latent variables means that the relationships between the observed variables will be contaminated by error of measurement, the “residuals” that we created above. As discussed later, this basically defeats the purpose of using latent variables.

References

Alhija, F. N., & Wisenbaker, J. (2006). A Monte Carlo study investigating the impact of item parceling strategies on parameter estimates and their standard errors in CFA. Structural Equation Modeling, 13(2), 204-228.

Bandalos, D. L. (2002). The effects of item parceling on goodness-of-fit and parameter estimate bias in structural equation modeling. Structural Equation Modeling, 9(1), 78-102.

Fan, X., Thompson, B., & Wang, L. (1999). Effects of sample size, estimation methods, and model specification on structural equation modeling fit indexes. Structural Equation Modeling, 6(1), 56-83.
Gribbons, B. C., & Hocevar, D. (1998). Levels of aggregation in higher level confirmatory factor analysis: Application for academic self-concept. Structural Equation Modeling, 5(4), 377-390.

Little, T. D., Cunningham, W. A., Shahar, G., & Widaman, K. F. (2002). To parcel or not to parcel: Exploring the question, weighing the merits. Structural Equation Modeling, 9(2), 151-173.

Marsh, H., Hau, K., & Balla, J. (1997, March). Is more ever too much: The number of indicators per factor in confirmatory factor analysis. Paper presented at the annual meeting of the American Educational Research Association, Chicago.

Meade, A. W., & Kroustalis, C. M. (2006). Problems with item parceling for confirmatory factor analytic tests of measurement invariance. Organizational Research Methods, 9(3), 369-403.

Sass, D. A., & Smith, P. L. (2006). The effects of parceling unidimensional scales on structural parameter estimates in structural equation modeling. Structural Equation Modeling, 13(4), 566-586.

Goodness-of-fit

1. Tests of overall goodness-of-fit of the model.

We’re creating a model of the data, with correlations among a few factors being put forth to explain correlations among many variables. A natural question is: How well does the model fit the data?

The adequacy of fit of a model is a messy issue in structural equation modeling at this time.

One possibility is to use the chi-square statistic. The chi-square is a function of the differences between the observed covariances and the covariances implied by the model.

The decision rule which might be applied is: If the chi-square statistic is NOT significant, then the model fits the data adequately. But if the chi-square statistic IS significant, then the model does not fit the data adequately.

So EFAers, CFAers, and SEMers hope for nonsignificance when measuring goodness-of-fit using the chi-square statistic.
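The decision rule above can be sketched in Python. The function below is a hand-rolled upper-tail chi-square probability for whole-number degrees of freedom, written with only the standard library; it is an illustration, not the routine SPSS or Amos uses, and the name `chi_square_p` and the .05 cutoff variable are my own. The two calls use chi-square values that appear earlier in this handout (the EFA goodness-of-fit test and the Amos title example).

```python
import math


def chi_square_p(chi_sq, df):
    """Upper-tail probability of a chi-square statistic, for integer df >= 1."""
    if chi_sq <= 0:
        return 1.0
    half = chi_sq / 2.0
    if df % 2 == 0:
        a = 1.0
        q = math.exp(-half)  # Q(1, x) = exp(-x)
    else:
        a = 0.5
        q = math.erfc(math.sqrt(half))  # Q(1/2, x) = erfc(sqrt(x))
    # Recurrence for the upper incomplete gamma ratio:
    # Q(a + 1, x) = Q(a, x) + x**a * exp(-x) / Gamma(a + 1)
    while a < df / 2.0:
        q += math.exp(a * math.log(half) - half - math.lgamma(a + 1.0))
        a += 1.0
    return q


ALPHA = 0.05  # conventional cutoff, assumed here

p_efa = chi_square_p(51.615, 40)   # EFA goodness-of-fit test reported earlier
p_title = chi_square_p(19.860, 9)  # chi-square shown in the Amos title example

fits_efa = p_efa > ALPHA      # not significant: the model fits adequately by this rule
fits_title = p_title > ALPHA  # significant: the model does not fit by this rule
```

By this rule the EFA solution would count as fitting adequately (p about .10), while the model in the title example would not (p about .02).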
Unfortunately, many people feel that the chi-square statistic is a poor measure of overall goodness-of-fit. The main problem with it is that with large samples, even the smallest deviation of the data from the model being tested will yield a significant chi-square value. Thus, it’s not uncommon to get a significant chi-square nearly ALWAYS. (I’ve gotten fewer than 10 nonsignificant chi-squares in 10 years of SEMing.)

For this reason, researchers have resorted to examining a collection of goodness-of-fit statistics.

Byrne discusses the RMR and the standardized RMR, SRMR. The RMR is simply the square root of the mean of the squared differences between the actual variances and covariances and the variances and covariances generated assuming the model is true (the reconstructed variances and covariances). The smaller the RMR and standardized RMR, the better.

She also discusses the GFI and the AGFI. In each case, bigger is better, with the largest possible value being 1.

I have also seen the NFI reported. Again, bigger is better.

Others use the CFI, a bigger-is-better statistic.

Finally, the RMSEA is often reported. Small values of this statistic indicate good fit. Much recent work suggests that RMSEA is a very useful measure. Amos reports a confidence interval and a test of the null hypothesis that the RMSEA value is no greater than .05 against the alternative that it is greater than .05. A nonsignificant (large) p-value here is desirable, because we want the RMSEA value to be .05 or less.

Three test statistics are now being recommended: RMSEA, CFI, and NNFI. We’ve used CFI, RMSEA, and SRMR (mainly because they are what Mplus prints automatically).

| Goodness-of-fit Measure | Recommended Value |
|---|---|
| Chi-square | Not significant |
| CFI | .90 or above |
| RMSEA | .05 or smaller |
| SRMR | .08 or smaller (?) |

Goodness-of-fit varies across choice of indicator.

A problem that SEM researchers face is that models with poor goodness-of-fit measures are less likely to get published.
This dilemma may cause them to choose indicators that yield the best GOF measures.

From Lim, B., & Ployhart, R. E. (2006). Assessing the convergent and discriminant validity of Goldberg’s International Personality Item Pool. Organizational Research Methods, 9, 29-54.

It’s apparent that the poor fit of the models when items were used as indicators is the reason that Lim and Ployhart used parcels as indicators in their analyses.

Our data corroborate these authors’ perception of the situation and suggest that goodness-of-fit when individual items are used as indicators may be considerably poorer than goodness-of-fit when parcels, even two-item parcels, from the same data are used as indicators. Following is some evidence concerning the extent of improvement.

CFI as the measure of goodness-of-fit. Bigger is better. A value of .9 or larger is considered “good”.

[Figure: CFI by study, individual-item indicators vs. two-item parcel indicators.]

For each study, the same data were used for both individual-item analyses and two-item parcel analyses. CFI increased by .1 or more in each study when 2-item parcels were used as indicators, rather than individual items. I believe the data were from honest response conditions.

RMSEA as the measure of goodness-of-fit. Smaller is better. A value of .05 or less is “good”.

[Figure: RMSEA by study, individual-item indicators vs. two-item parcel indicators.]

For each study, the same data were used for both individual-item analyses and two-item parcel analyses. RMSEA decreased in two studies and stayed the same in two when 2-item parcels were used as indicators, rather than individual items.

The bottom line here is that there is no doubt that goodness-of-fit measures will be better when parcels of items rather than individual items are used as indicators.

But possibly useful information is lost when items are parceled.

Items may contain nuances of meaning that might be important for understanding what a factor represents. Those nuances may be lost when item responses are averaged to create parcel scores.
Negative wording of some items may be important. That negative wording may be lost if negatively worded items (after reverse-scoring) are averaged with positively worded items.

Hypothesis Tests in SEM

1. The critical ratios (CRs) to test that population values of individual coefficients are 0.

For all estimated parameters, Amos prints the estimated standard error of the parameter next to the parameter value.

The first standard error you probably encountered was the standard error of the mean, $\sigma/\sqrt{N}$ or $S/\sqrt{N}$.

We used the standard error of the mean to form the Z and t-tests for hypotheses about the difference between a sample mean and some hypothesized value. Recall

$$Z = \frac{\bar{X} - \mu_H}{\sigma/\sqrt{N}} \quad\text{and}\quad t = \frac{\bar{X} - \mu_H}{S/\sqrt{N}}$$

The choice between Z and t depended on whether the value of the population standard deviation, $\sigma$, was known or not.

When testing the hypothesis that the population mean = 0, these reduced to

$$Z = \frac{\bar{X} - 0}{\sigma/\sqrt{N}} \quad\text{and}\quad t = \frac{\bar{X} - 0}{S/\sqrt{N}}, \quad\text{that is,}\quad \frac{\text{Statistic} - 0}{\text{Standard error}}$$

That is, for a test of the hypothesis that the population parameter is 0, the test statistic was the ratio of the sample mean to its standard error.

The ratio of a statistic to its standard error is quite common in hypothesis testing whenever the null hypothesis is that the parameter is 0 in the population.

The t-statistics in the SPSS Regression coefficients boxes are simply the regression coefficients divided by their standard errors. They’re called t values because mathematical statisticians have discovered that their sampling distribution is the t distribution.

In Amos and other structural equations modeling programs, the same tradition is followed. Amos prints a quantity called the critical ratio, which is a coefficient divided by its standard error.
These are called critical ratios rather than t’s because mathematical statisticians haven’t been able to figure out what the sampling distributions of these quantities are for small samples.

In actual practice, however, many analysts treat the critical ratios as Z’s, assuming sample sizes are large (in the 100’s). Some computer programs, including Amos, print estimated p-values next to them.

On page 74 of the Amos 4 User’s guide, the author states: “The p column to the right of C.R., gives the approximate two-tailed probability for critical ratios this large or larger. The calculation of p assumes the parameter estimates to be normally distributed, and is only correct in large samples.”

2. Chi-square Difference test to compare the fit of restricted models to general models.

When comparing the fit of two models, many researchers use the chi-square difference test. This test is applicable only when one model is a restricted or special case of another. But since many model comparisons are of this nature, the test has much utility.

For example, setting a parameter, such as a loading, to 0 creates a special model. A comparison of the fit of the special model in which the parameter = 0 with the fit of the general model in which the parameter is estimated forms a test of the hypothesis that the parameter = 0 in the population.

The specific test is

$$\Delta\chi^2 = \chi^2_{\text{Special}} - \chi^2_{\text{General}}$$

The chi-square difference is itself a chi-square statistic with degrees of freedom equal to the difference in degrees of freedom between the two models.

If the chi-square difference is NOT significant, then the conclusion is that the special model fits no worse than the general model and can be used in place of the general model. Since our goal is parsimony, that’s good.

The chi-square difference test also “suffers” from the issue that it is very sensitive to small differences when sample sizes are very large.
Some have recommended comparing CFI or RMSEA values as an alternative.

Example A. Comparing an orthogonal solution to an oblique solution: Example 8 from the AMOS Manual.

The General Model: An oblique solution.

[Figure: Example 8, Factor analysis: Girls' sample, Holzinger and Swineford (1939), Unstandardized estimates. Factors spatial (visperc, cubes, lozenges) and verbal (paragrap, sentence, wordmean) with residuals err_v, err_c, err_l, err_p, err_s, err_w, and a covariance of 7.32 between the factors. Chi-square = 7.853 (8 df) p = .448]

The Special Model: An orthogonal solution.

[Figure: Example 8, Factor analysis: Girls' sample, Holzinger and Swineford (1939), Unstandardized estimates. Same model with no covariance between spatial and verbal. Chi-square = 19.860 (9 df) p = .019]

Many times, the only difference between a general and a special model will be the value of one parameter. That’s the case here: The only difference between the two models above is in the value of the covariance (or correlation, depending on whether you prefer the unstandardized or standardized coefficients). In the general model, it’s allowed to be whatever it is. In the special model, its value is fixed at 0. Thus the special model is a special case of the general model.

We’ve discussed the Critical Ratio test and the chi-square difference test.

Whenever there is only one parameter separating the special from the general model, both of the tests described above can be used to distinguish between them.

First, a CR test of the null hypothesis that the parameter is 0 can be conducted. That test is available from the Tables Output, shown below.

Covariances

| | Estimate | S.E. | C.R. | P | Label |
|---|---|---|---|---|---|
| spatial <--> verbal | 7.315 | 2.571 | 2.846 | 0.004 | |

Second, a chi-square test of the difference in goodness-of-fit between the two models can be conducted.
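Both tests can be sketched in Python using only the numbers printed above. This is an illustration under two assumptions: the critical ratio is treated as a Z (the large-sample practice described earlier), and the chi-square helper handles only the 1-df case, where the upper-tail probability has a closed form. The function names are hypothetical.

```python
import math


def two_tailed_p_from_z(z):
    """Two-tailed normal probability for a critical ratio treated as a Z."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def chi_square_p_1df(chi_sq):
    """Upper-tail probability of a chi-square statistic with exactly 1 df."""
    return math.erfc(math.sqrt(chi_sq / 2.0))


# Test 1: critical ratio for the spatial <--> verbal covariance.
estimate, se = 7.315, 2.571
cr = estimate / se
p_cr = two_tailed_p_from_z(cr)

# Test 2: chi-square difference between the special and general models.
chi_special, df_special = 19.860, 9  # orthogonal solution
chi_general, df_general = 7.853, 8   # oblique solution
chi_diff = chi_special - chi_general
df_diff = df_special - df_general    # must be 1 for chi_square_p_1df to apply
p_diff = chi_square_p_1df(chi_diff)
```

Note that the squared critical ratio and the chi-square difference are not the same number; they are two different large-sample tests of the same hypothesis, which is why they usually, but not always, agree.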
Here, the value of

$$\chi^2_{\text{Diff}} = \chi^2_{\text{Special}} - \chi^2_{\text{General}} = 19.860 - 7.853 = 12.007$$

The df = 9 - 8 = 1. p < .001.

So, in this case, both tests yield the same conclusion: the special model fits significantly worse than the general model. Most of the time, both tests will give the same result.

Of course, when the difference between a special and a general model involves two or more parameters, only the chi-square difference test can be used to compare them.

The argument for analyses involving latent variables.

Recall that X = T + E. An observed score is comprised of both the True score and error of measurement.

The basic argument for using latent variables is that the relationships between latent variables are closer to the “true score” relationships than can be found in any existing analysis.

If we compute the average of 10 Conscientiousness items to form a scale score, for example, that scale score includes the errors associated with each of the items averaged.

Here’s a path diagram representing a scale score . . .

[Figure: path diagram of a scale score formed from Item 1 through Item 10, each item with its own error term e; the scale score is labeled "C+Junk".]

A scale score contains everything: pure content plus error of measurement.

So any correlation involving a scale score is contaminated by the error of measurement contained in the scale score.

But if we create a C latent variable, the latent variable represents only the C present across all the items, and none of the error that also contaminates the items. The errors affecting the items are treated separately, rather than being lumped into the scale score.

The result is that the latent variable, C, in the diagram below is a purer estimate of conscientiousness than would be a scale score. Its correlations with other variables will not be contaminated by errors of measurement.
[Figure: path diagram in which a latent variable C is indicated by Item 1 through Item 10, each with its own error term e, and C is related to GPA.]

From Schmidt, F. (2011). A theory of sex differences in technical aptitude and some supporting evidence. Perspectives on Psychological Science, 6, 560-573.

“Prediction 3 was examined at both the observed and the construct levels. That is, both observed score and true score regressions were examined. However, from the point of view of theory testing, the true score regressions provide a better test of the theoretical predictions, because they depict processes operating at the level of the actual constructs of interest, independent of the distortions created by measurement error.”

What we get from Latent variable analyses

Uncontaminated measurement

Latent variable estimates of characteristics will be “purer”, since they’re unaffected by measurement error.

The way to access a latent variable based estimate of a characteristic is through factor scores. Right now, only Mplus makes that easy to do. I expect more programs to provide such capabilities soon.

Purer relationships

As stated above, relationships among latent variables are free from the “noise” of errors of measurement, so if two characteristics are related, their latent variable correlations will be farther from 0 than will be their scale correlations.

That is, assess the relationship with scale scores. Then assess the same relationship using a latent variable model. The r from the latent variable analysis will usually be larger in absolute value than the r from the analysis involving scale scores.

The extent of augmentation of correlations depends on the amount of error of measurement, as reflected, for example, in the reliability of the scale scores. The less reliable the scale scores, the greater the augmentation and the greater the advantage of using latent variables.
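The dependence of augmentation on reliability can be illustrated with the classical test theory attenuation formula, in which an observed correlation equals the true-score correlation times the square root of the product of the two reliabilities. This formula is brought in here as an analogy; a latent variable model does not literally apply it, and the true correlation of .50 and the reliabilities below are hypothetical numbers chosen for illustration.

```python
import math


def attenuated_r(true_r, rel_x, rel_y):
    """Observed (scale score) correlation implied by a true-score correlation."""
    return true_r * math.sqrt(rel_x * rel_y)


def disattenuated_r(observed_r, rel_x, rel_y):
    """Classical correction for attenuation: estimate of the true-score correlation."""
    return observed_r / math.sqrt(rel_x * rel_y)


true_r = 0.50  # hypothetical correlation between two characteristics

# Highly reliable scales: little is lost by using scale scores.
r_high = attenuated_r(true_r, 0.90, 0.90)

# Less reliable scales: the scale score correlation is pulled much closer to 0.
r_low = attenuated_r(true_r, 0.60, 0.60)

augmentation_high = true_r - r_high
augmentation_low = true_r - r_low

# Going the other way recovers the true-score correlation.
recovered = disattenuated_r(r_low, 0.60, 0.60)
```

With reliabilities of .90 the scale score correlation is .45, only .05 below the true value; with reliabilities of .60 it is .30, so the gain from removing measurement error is four times as large.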
Sanity and advancement of the science

The sometimes random-seeming mish-mash of correlations will be a little less random-seeming when the effects of errors of measurement on relationships and on measurement of characteristics are taken out.

Example illustrating the differences between scale score correlations and latent variable correlations.

From Biderman, M. D., McAbee, S. T., Chen, Z., & Nguyen, N. T. (2015). Assessing the Evaluative Content of Personality Questionnaires Using Bifactor Models. MS submitted for publication.

The correlations highlighted in red in the original table are those between three different measures of affect. For each measure, the scale was represented by a single summated score on the left and by a single general factor indicated by all items on the right.

Table 3

Correlations of Measures of Affect

NEO-FFI-3 sample (N = 317)

| | Summated Scales: General Factor | RSE | PANAS | Dep. | Latent Variables: General Factor | RSE | PANAS | Dep. |
|---|---|---|---|---|---|---|---|---|
| NEO-FFI-3 | .90 | | | | .94 | | | |
| RSE | .33 | .90 | | | .45 | .96 | | |
| PANAS | .35 | .81 | .88 | | .66 | 1.00 | 1.00 | |
| Depression | -.39 | -.76 | -.79 | .90 | -.52 | -.84 | -1.00 | .97 |

HEXACO-PI-R sample (N = 788)

| | Summated Scales: General Factor | RSE | PANAS | Dep. | Latent Variables: General Factor | RSE | PANAS | Dep. |
|---|---|---|---|---|---|---|---|---|
| HEXACO-PI-R | .89 | | | | .94 | | | |
| RSE | .34 | .91 | | | .36 | .96 | | |
| PANAS | .43 | .74 | .89 | | .49 | .80 | .96 | |
| Depression | -.47 | -.78 | -.73 | .89 | -.46 | -.87 | -.82 | .97 |

Note. Dep. = depression. Values on diagonals are reliabilities of Scales and factor determinacies of latent variables.

As an aside, note that even the scale scores were very highly correlated, raising the issue of whether the three scales might all be measuring the same affective characteristic.