Notes - aarya classes

advertisement

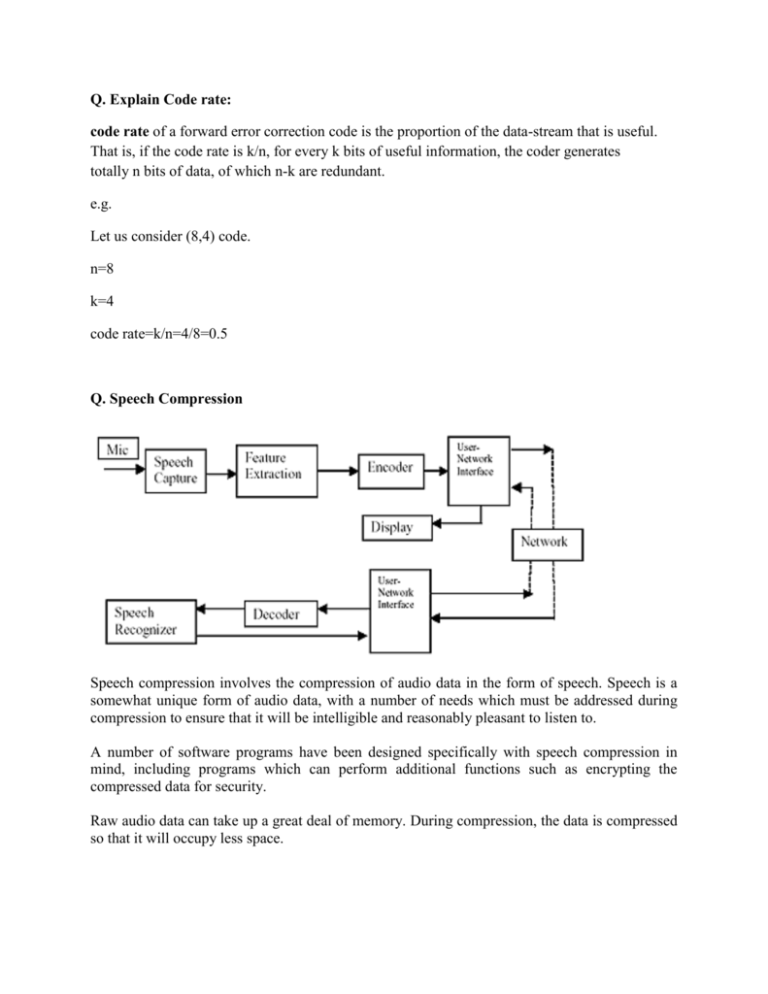

Q. Explain code rate

The code rate of a forward error correction (FEC) code is the proportion of the data stream that is useful. That is, if the code rate is k/n, then for every k bits of useful information the coder generates a total of n bits of data, of which n − k are redundant.

Example: for an (8,4) code, n = 8 and k = 4, so the code rate is k/n = 4/8 = 0.5.

Q. Speech Compression

Speech compression is the compression of audio data in the form of speech. Speech is a somewhat unique form of audio data, with a number of needs that must be addressed during compression so that the result stays intelligible and reasonably pleasant to listen to. A number of software programs have been designed specifically for speech compression, including programs that can perform additional functions such as encrypting the compressed data for security.

Raw audio data can take up a great deal of memory. Compression reduces the space the data occupies, which frees up storage and also matters when the data is transmitted over a network. On a mobile phone network, for example, speech compression lets more users be accommodated at a given time because each call needs less bandwidth. Likewise, speech compression is important for teleconferencing and similar applications: sending data is expensive, and anything that reduces the volume of data to be sent helps to cut costs.

Q. Random Number Generation

Random numbers are useful for a variety of purposes, such as generating data encryption keys, simulating and modeling complex phenomena, and selecting random samples from larger data sets. They have also been used aesthetically, for example in literature and music, and are of course ever popular for games and gambling.

When discussing single numbers, a random number is one that is drawn from a set of possible values, each of which is equally probable, i.e., a uniform distribution.
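Drawing values from a uniform distribution can be made concrete with a pseudorandom number generator (PRNG). The following is a minimal Python sketch of a linear congruential generator (LCG), one classic PRNG design. It assumes the commonly quoted constants a = 1664525, c = 1013904223 and m = 2^32; the class and method names are hypothetical. Because each output is computed from the previous state, the same seed always reproduces the same sequence.

```python
class LCG:
    """Illustrative linear congruential generator: x_{k+1} = (a * x_k + c) mod m.

    The default constants are one commonly quoted choice (an assumption for
    this sketch). An LCG is NOT suitable for generating encryption keys.
    """

    def __init__(self, seed: int, a: int = 1664525, c: int = 1013904223, m: int = 2**32) -> None:
        self.a, self.c, self.m = a, c, m
        self.state = seed % m

    def next_int(self) -> int:
        # Advance the internal state; every output is fully determined by the seed.
        self.state = (self.a * self.state + self.c) % self.m
        return self.state

    def next_float(self) -> float:
        # Scale the integer state into [0, 1). With these constants the generator
        # has full period m, so every state 0..m-1 occurs once per period.
        return self.next_int() / self.m


if __name__ == "__main__":
    gen1, gen2 = LCG(seed=42), LCG(seed=42)
    seq1 = [gen1.next_float() for _ in range(5)]
    seq2 = [gen2.next_float() for _ in range(5)]
    print(seq1)
    print(seq1 == seq2)  # True: same seed, same "random" sequence
```

For values that must be unpredictable, such as encryption keys, a cryptographically secure source (for example Python's `secrets` module) is the appropriate choice rather than a simple LCG.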
When discussing a sequence of random numbers, each number drawn must also be statistically independent of the others.

With the advent of computers, programmers recognized the need for a means of introducing randomness into a computer program. However, surprising as it may seem, it is difficult to get a computer to do something by chance: a computer follows its instructions blindly and is therefore completely predictable. There are two main approaches to generating random numbers with a computer: pseudorandom number generators (PRNGs) and true random number generators (TRNGs). The two approaches have quite different characteristics, and each has its pros and cons.

Q. JPEG Compression

The Joint Photographic Experts Group (JPEG) is the working group of ISO (the International Organization for Standardization) that defined the popular JPEG standard for compressing still images. Its counterpart for moving pictures is the Moving Picture Experts Group (MPEG). The main steps of JPEG compression are:

1. Divide the image into a set of 8×8 blocks and carry out a 2D DCT (discrete cosine transform) of each block.
2. Threshold all DCT coefficients smaller than a value T to zero, or alternatively low-pass filter (with either an ideal or a smooth filter) the 2D DCT spectrum of each sub-image.
3. Quantize the remaining coefficients: divide each block element-wise by a quantization matrix Q and round the result.
4. Predictively code the DC components of all blocks, since the DC components of neighboring blocks are highly correlated.
5. Scan the remaining (AC) coefficients of each block in zigzag order and code them with run-length encoding.
6. Huffman code the resulting data stream.
7. Store and/or transmit the encoded image together with the quantization matrix.

Q. BCH code

BCH codes, named after their discoverers Hocquenghem (1959) and Bose and Ray-Chaudhuri (1960), are cyclic codes. Only the codes, not the decoding algorithms, were discovered by these early writers. The original applications of BCH codes were restricted to binary codes of length $2^m - 1$ for some integer $m$. These were later extended by Gorenstein and Zierler (1961) to nonbinary codes with symbols from the Galois field GF(q).
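To see what "cyclic" means in practice, here is a minimal Python sketch of the smallest binary BCH code: the (7,4) code of length $2^3 - 1 = 7$ with generator polynomial $g(x) = x^3 + x + 1$ (this single-error-correcting BCH code coincides with the (7,4) Hamming code). Polynomials over GF(2) are stored as integers, bit i holding the coefficient of $x^i$. The function names and the systematic encoding layout are illustrative assumptions, and only encoding is shown, not decoding.

```python
N, K = 7, 4          # code length n = 2**3 - 1 and message length k
G = 0b1011           # g(x) = x^3 + x + 1, bit i = coefficient of x^i


def gf2_mod(a: int, g: int) -> int:
    """Remainder of polynomial a divided by polynomial g over GF(2)."""
    deg_g = g.bit_length() - 1
    while a and a.bit_length() - 1 >= deg_g:
        # Subtract (XOR) g, shifted so that it cancels the leading term of a.
        a ^= g << (a.bit_length() - 1 - deg_g)
    return a


def encode(msg: int) -> int:
    """Systematic encoding: c(x) = x^(n-k) m(x) + (x^(n-k) m(x) mod g(x))."""
    shifted = msg << (N - K)
    return shifted | gf2_mod(shifted, G)  # low n-k bits hold the parity


def is_codeword(c: int) -> bool:
    # Every codeword polynomial of a cyclic code is a multiple of g(x).
    return gf2_mod(c, G) == 0


def cyclic_shift(c: int) -> int:
    # Multiply by x modulo x^n - 1, i.e. rotate the n-bit word left by one.
    return ((c << 1) | (c >> (N - 1))) & ((1 << N) - 1)


if __name__ == "__main__":
    codewords = [encode(m) for m in range(2**K)]
    print([format(c, "07b") for c in codewords])
    # Cyclic property: any rotation of a codeword is again a codeword.
    print(all(is_codeword(cyclic_shift(c)) for c in codewords))  # True
    # Minimum nonzero weight = minimum distance = 3, so one error can be corrected.
    print(min(bin(c).count("1") for c in codewords if c))  # 3
```

The code rate of this example is k/n = 4/7, since 3 of every 7 transmitted bits are parity.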
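The JPEG steps listed under "Q. JPEG Compression" can be sketched in Python for 8×8 blocks, including the DC prediction across blocks. This is a simplified illustration, not a conforming encoder: the threshold T, the flat quantization matrix (every entry 16), the (zero-run, value) pair format with a (0, 0) end-of-block marker, and all function names are assumptions, and the Huffman stage (step 6) is left out. Like baseline JPEG, it subtracts 128 from each 8-bit sample before the DCT.

```python
import math

N = 8
Q = [[16] * N for _ in range(N)]  # flat quantization matrix (illustrative assumption)


def dct2(block):
    """Step 1: orthonormal 2D DCT-II of an 8x8 block."""
    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = sum(block[x][y]
                    * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                    * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                    for x in range(N) for y in range(N))
            cu = math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N)
            cv = math.sqrt(1 / N) if v == 0 else math.sqrt(2 / N)
            out[u][v] = cu * cv * s
    return out


def threshold(coeffs, t):
    """Step 2: zero every coefficient whose magnitude is below t."""
    return [[c if abs(c) >= t else 0.0 for c in row] for row in coeffs]


def quantize(coeffs, q=Q):
    """Step 3: divide element-wise by the quantization matrix and round."""
    return [[round(c / qc) for c, qc in zip(row, qrow)] for row, qrow in zip(coeffs, q)]


# Zigzag order: walk the anti-diagonals i + j = s, alternating direction.
ZIGZAG = sorted(((i, j) for i in range(N) for j in range(N)),
                key=lambda p: (p[0] + p[1], p[0] if (p[0] + p[1]) % 2 else -p[0]))


def zigzag(block):
    """Step 5a: read an 8x8 block into a 64-element list in zigzag order."""
    return [block[i][j] for i, j in ZIGZAG]


def run_length(ac):
    """Step 5b: (zero_run, value) pairs; (0, 0) marks the end of block."""
    pairs, run = [], 0
    for value in ac:
        if value == 0:
            run += 1
        else:
            pairs.append((run, value))
            run = 0
    if run:
        pairs.append((0, 0))
    return pairs


def dc_differences(dcs):
    """Step 4: code each DC value as the difference from the previous block's DC."""
    prev, diffs = 0, []
    for dc in dcs:
        diffs.append(dc - prev)
        prev = dc
    return diffs


def encode_blocks(blocks, t=1.0):
    """Steps 1-5 for a list of 8x8 blocks of 8-bit samples (Huffman step omitted)."""
    dcs, acs = [], []
    for block in blocks:
        shifted = [[p - 128 for p in row] for row in block]  # level shift
        zz = zigzag(quantize(threshold(dct2(shifted), t)))
        dcs.append(zz[0])
        acs.append(run_length(zz[1:]))
    return dc_differences(dcs), acs


if __name__ == "__main__":
    flat = [[100] * N for _ in range(N)]
    print(encode_blocks([flat, flat]))  # ([-14, 0], [[(0, 0)], [(0, 0)]])
```

For a flat block, all of the energy lands in the DC coefficient, so each block's AC part collapses to a single end-of-block marker and the second block's DC difference is 0. This is why smooth image regions compress so well.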
Q. Discrete probability

All probability distributions can be classified as either discrete or continuous, depending on whether they define probabilities for discrete variables or for continuous variables. If a random variable is discrete, its probability distribution is called a discrete probability distribution.

Example: suppose you flip a coin two times. This experiment has four possible outcomes: HH, HT, TH, and TT. Now let the random variable X represent the number of heads that result. X can take only the values 0, 1, or 2, so it is a discrete random variable. Its probability distribution is shown below.

| Number of heads | Probability |
|---|---|
| 0 | 0.25 |
| 1 | 0.50 |
| 2 | 0.25 |

This table represents a discrete probability distribution because it relates each value of a discrete random variable to its probability of occurrence.

Q. Discrete Logarithms

If $a$ is an arbitrary integer relatively prime to $n$ and $g$ is a primitive root of $n$, then among the numbers $0, 1, 2, \ldots, \phi(n) - 1$, where $\phi(n)$ is the totient function, there exists exactly one number $\mu$ such that

$$a \equiv g^{\mu} \pmod{n}.$$

The number $\mu$ is then called the discrete logarithm of $a$ with respect to the base $g$ modulo $n$ and is denoted $\mu = \operatorname{ind}_g a \pmod{n}$. The term "discrete logarithm" is most commonly used in cryptography, although the term "generalized multiplicative order" is sometimes used as well. In number theory, the term "index" is generally used instead.

For example, 7 is a primitive root of 41 (in fact, the set of primitive roots of 41 is {6, 7, 11, 12, 13, 15, 17, 19, 22, 24, 26, 28, 29, 30, 34, 35}), and since $7^3 \equiv 15 \pmod{41}$, the discrete logarithm of 15 with respect to base 7 (modulo 41) is 3, i.e. $\operatorname{ind}_7 15 = 3$.
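The definition and the modulo 41 example can be checked by brute force. The Python sketch below (function names are illustrative) computes the totient, the multiplicative order, the primitive roots of n, and the discrete logarithm by trying exponents in turn. Exhaustive search is only practical for small moduli; the difficulty of computing discrete logarithms for large moduli is the property that cryptographic schemes such as Diffie-Hellman key exchange rely on.

```python
from math import gcd
from typing import Optional


def totient(n: int) -> int:
    """Euler's totient phi(n): count of 1 <= k <= n with gcd(k, n) == 1."""
    return sum(1 for k in range(1, n + 1) if gcd(k, n) == 1)


def multiplicative_order(g: int, n: int) -> int:
    """Smallest k >= 1 with g**k = 1 (mod n); requires gcd(g, n) == 1 and n > 1."""
    if gcd(g, n) != 1:
        raise ValueError("g must be relatively prime to n")
    k, value = 1, g % n
    while value != 1:
        value = value * g % n
        k += 1
    return k


def primitive_roots(n: int) -> list[int]:
    """All g in [1, n) whose multiplicative order modulo n equals phi(n)."""
    phi = totient(n)
    return [g for g in range(1, n) if gcd(g, n) == 1 and multiplicative_order(g, n) == phi]


def discrete_log(a: int, g: int, n: int) -> Optional[int]:
    """Brute-force ind_g(a) mod n: the exponent mu in [0, phi(n)) with g**mu = a (mod n).

    If g is a primitive root of n, such a mu exists and is unique for every a
    relatively prime to n; otherwise None may be returned.
    """
    value = 1  # g**0
    for mu in range(totient(n)):
        if value == a % n:
            return mu
        value = value * g % n
    return None


if __name__ == "__main__":
    print(primitive_roots(41))
    print(discrete_log(15, 7, 41))  # 3, because 7**3 = 343 = 8*41 + 15
```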
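Returning to the coin example under "Q. Discrete probability", the table can be reproduced by enumerating every outcome. This Python sketch assumes a fair coin, so that all outcomes are equally likely; `heads_distribution` is a hypothetical helper name, and exact fractions are used so that 0.25, 0.50 and 0.25 come out exactly.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def heads_distribution(flips: int) -> dict[int, Fraction]:
    """P(X = h) for X = number of heads in `flips` tosses of a fair coin."""
    outcomes = list(product("HT", repeat=flips))  # e.g. ('H', 'H'), ('H', 'T'), ...
    counts = Counter(outcome.count("H") for outcome in outcomes)
    return {h: Fraction(c, len(outcomes)) for h, c in sorted(counts.items())}


if __name__ == "__main__":
    for heads, p in heads_distribution(2).items():
        print(heads, float(p))
    # 0 0.25
    # 1 0.5
    # 2 0.25
```

The probabilities sum to 1, as they must for any discrete probability distribution; calling `heads_distribution(3)` gives the corresponding table for three flips.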