October 2014

advertisement

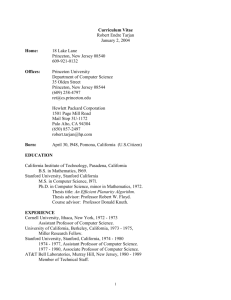

HEADLINES AT A GLANCE

Could a Robot Do Your Job?
W3C, Now Age 20, Gives Official Recommendations for HTML5
Met Office to Build Supercomputer
New 'Surveyman' Software Promises to Revolutionize Survey Design and Accuracy
Princeton Computer Scientist Robert E. Tarjan Appointed Chief Scientist at Intertrust Technologies
We Could've Stopped Ebola If We Listened to the Data
The Robot in the Cloud: A Conversation With Ken Goldberg
Massachusetts Schools Strive to Increase Access to Coding Courses
HELLO? Sweat and a Smartphone Could Become the Hot New Health Screening
Professor Awarded $1.2 Million for Computer Science Curriculum Project
Maria Klawe: Esteemed Computer Scientist. Proud Mathematician. Watercolor Painter.
A Physician-Programmer Experiments With AI and Machine Learning in the ER
Machine-Learning Maestro Michael Jordan on the Delusions of Big Data and Other Huge Engineering Efforts

Could a Robot Do Your Job?
USA Today (10/29/14) Mary Jo Webster

Advances in technology could mean that people in low-skill jobs, such as home health care workers, food service workers, retail salespeople, and custodians, will be replaced by robots. For example, Carnegie Mellon University researchers are developing the Home-Exploring Robot Butler (HERB), a robot that is learning to retrieve and deliver objects, prepare simple meals, and empty a grocery bag. About 70 percent of low-skill jobs, and about 50 percent of all jobs, could be replaced by robots or other technology within 20 years, according to University of Oxford researcher Carl Benedikt Frey. He says low-skill workers will need to acquire creative and social skills to stay competitive in the future labor market. "We don't just have machines that are faster than us, but we also are starting to have machines that might be smarter than us," says Rice University professor (and CACM editor-in-chief) Moshe Y. Vardi. "There will never be things we cannot automate. It's just a matter of time."
For example, improvements in algorithms and software have reduced the need for tax preparers, and computers that scan millions of pages of legal documents have displaced paralegals. Meanwhile, IBM's Watson supercomputer is now helping doctors match patients to clinical trials and determine the best course of cancer treatment.

W3C, Now Age 20, Gives Official Recommendations for HTML5
eWeek (10/28/14) Chris Preimesberger

The World Wide Web Consortium (W3C) marked its 20th anniversary at its week-long annual conference at the Computer History Museum this month, recognizing the history and influence of HTML, the Web's markup language, and publishing its official recommendation for HTML5, the language's fifth major revision. HTML5 spent seven years in development and already is widely used. Its many new features make it attractive to developers in an era of Internet-connected devices of all sizes, and its built-in capabilities make it a strong platform for audiovisual media, including computer games, as well as for science and mathematics. "Today we think nothing of watching video and audio natively in the browser, and nothing of running a browser on a phone," said W3C director Sir Tim Berners-Lee. "Though they remain invisible to most users, HTML5 and the Open Web Platform are driving these growing user experiences." Although HTML5 already is supported on most devices and used by a large percentage of developers, W3C HTML Working Group co-chair Paul Cotton of Microsoft says the format will continue to grow. In particular, he expects developers to fork the code into their own versions "and start to innovate on that kind of platform going forward."
Met Office to Build Supercomputer
BBC News (10/28/14) Jonathan Webb

The British government has approved a £97-million investment in a new supercomputer to improve weather forecasting and climate modeling at the Met Office, the U.K.'s national weather service. The system will be housed partly at the Met Office headquarters in Exeter and partly at a new facility in the Exeter Science Park. When it reaches full capacity in 2017, it will have 480,000 central processing units and a top speed of 16 petaflops, 13 times faster than the current system. A Cray XC40 system will run U.K.-wide forecast models at a resolution of 1.5 kilometers every hour. The new system "will allow us to add more precision, more detail, more accuracy to our forecasts on all time scales for tomorrow, for the next day, next week, next month, and even the next century," says Met Office CEO Rob Varley. He says climate scientists can use the extra capacity to run detailed models over much longer time scales. U.K. Science Minister Greg Clark says the machine "will be one of the best high-performance computers in the world," placing the United Kingdom at the forefront of weather and climate science.

New 'Surveyman' Software Promises to Revolutionize Survey Design and Accuracy
University of Massachusetts Amherst (10/22/14) Janet Lathrop

The Object-Oriented Programming, Systems, Languages and Applications (OOPSLA) track of the ACM SIGPLAN conference on Systems, Programming, Languages and Applications: Software for Humanity (SPLASH) recently gave University of Massachusetts Amherst doctoral student Emma Tosch its Best Paper award for her work on a first-of-its-kind software system designed to improve the accuracy and trustworthiness of surveys.
Surveyman, a free and publicly available tool, can identify problems in any survey from the design stage onward. Tosch says the tool guides users through creating a spreadsheet that becomes the new survey, and it addresses key sources of bias such as question order and variations in wording. She says Surveyman also runs diagnostic sweeps that warn survey creators when questions are correlated, and therefore redundant, so they can be removed to avoid respondent fatigue. Beyond these design checks, Surveyman can administer a survey online and run diagnostics on incoming responses, and it screens out respondents it determines are not answering truthfully.

Princeton Computer Scientist Robert E. Tarjan Appointed Chief Scientist at Intertrust Technologies
Business Wire (10/29/14)

Intertrust Technologies has named Princeton University professor and 1986 ACM A.M. Turing Award laureate Robert E. Tarjan as chief scientist. Tarjan joins a team of more than 100 researchers and engineers working on technical problems of trust and security on the Internet. "Dr. Tarjan is one of the world's most esteemed computer scientists, and we look forward to driving another generation of breakthroughs with him," says Intertrust CEO Talal Shamoon. Tarjan will focus on research into technologies that combine data science, algorithms, and secure systems to help large networks govern and secure the data they collect and manage. "Intertrust has always looked over the technology horizon," Tarjan says. "Their recent work on big data, privacy, and secure systems technologies poses an exciting and challenging set of new research questions whose solutions could have far-reaching effects on the way people use and trust the Web for everything from advertising to healthcare to the Internet of Things."
Tarjan, who has made fundamental contributions to algorithms and data structures, has received many academic distinctions, including the Nevanlinna Prize for mathematical aspects of information science.

We Could've Stopped Ebola If We Listened to the Data
Quartz (10/24/14) Caitlin Rivers

Computational epidemiologists were among the first to sound the alarm about the potential for a dangerous Ebola outbreak in West Africa earlier this year, writes Caitlin Rivers, a Ph.D. candidate at the Network Dynamics and Simulation Science Laboratory of the Virginia Polytechnic Institute and State University (Virginia Tech). Rivers says multiple computational models showed the potential for explosive growth, yet their warnings were not heeded. She attributes this in part to computational epidemiologists' distance from the levers of power and the people who pull them. Rivers also notes most computational epidemiologists work at universities and are more familiar with theoretical research than with applied work, although this is starting to change. For example, one team of computational epidemiologists at Virginia Tech partnered with the U.S. Department of Defense to map potential outbreaks and their trajectories. The researchers overlay existing outbreak data with census records, clinical data, and other contextual information to estimate how an outbreak may spread. Other computational epidemiologists focus more on prediction, using similar techniques to identify which areas are likely to be vulnerable so authorities can act to protect them. One example is Healthmap, which was founded in 2005 and draws on publicly available sources to detect potential outbreaks.
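Rivers's point that models showed "the potential for explosive growth" can be illustrated with the simplest compartmental epidemic model. The sketch below is a minimal discrete-time SIR (susceptible, infectious, removed) model that assumes a well-mixed population; it is not any of the models Rivers refers to, and the population size, transmission rate `beta`, and removal rate `gamma` are hypothetical values, not estimates for the 2014 West African outbreak. While nearly everyone is still susceptible, the number of infectious people is multiplied by roughly `1 + beta - gamma` each day, so any `beta` larger than `gamma` produces exponential growth.

```python
def simulate_sir(population, initial_infected, beta, gamma, days):
    """Discrete-time SIR epidemic model (illustrative sketch, hypothetical inputs).

    beta:  new infections per infectious person per day when everyone is susceptible
    gamma: fraction of infectious people removed (recovered or dead) per day
    Returns the number of infectious people on each day 0..days.
    The removed count is implicit: population - susceptible - infectious.
    """
    susceptible = float(population - initial_infected)
    infectious = float(initial_infected)
    history = [infectious]
    for _ in range(days):
        # New infections scale with contacts between susceptible and infectious people.
        new_infections = beta * susceptible * infectious / population
        new_removals = gamma * infectious
        susceptible -= new_infections
        infectious += new_infections - new_removals
        history.append(infectious)
    return history


if __name__ == "__main__":
    # Hypothetical parameters: beta / gamma = 2, so in a fully susceptible
    # population each case infects about two others before being removed.
    curve = simulate_sir(population=1_000_000, initial_infected=10,
                         beta=0.2, gamma=0.1, days=120)
    for day in range(0, 121, 20):
        print(f"day {day:3d}: {curve[day]:>10,.0f} infectious")
```

With these illustrative values the infectious count grows about 10 percent a day at first (1.1^7 is roughly 1.95, so it nearly doubles each week) until the shrinking pool of susceptible people slows it. Setting `beta` below `gamma`, as interventions that reduce transmission aim to do, makes the same function show a decline instead.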
The Robot in the Cloud: A Conversation With Ken Goldberg
The New York Times (10/25/14) Quentin Hardy

University of California, Berkeley professor Ken Goldberg has spent three decades in robotics and has published more than 170 peer-reviewed articles about robots. Goldberg, who currently is focused on cloud robotics and its potential medical applications, is establishing a research center that will focus on developing surgical robots. He describes cloud robotics as applying the basic principles of cloud computing and storage to robotics by moving heavy-duty processing into the cloud, which he says offers two major benefits: robots no longer need to carry large amounts of computer hardware, and they gain access to more computing resources than they could ever carry. Goldberg says moving robotics to the cloud enables machine-learning and big data techniques to be applied to a group of robots rather than to individual machines. "One robot can spend 10,000 hours learning something, or 10,000 robots can spend one hour learning the same thing," he notes. Google's self-driving cars already use cloud robotics, sending the information they gather to the Google cloud so the company can improve the performance of all its cars. Goldberg says health care also holds numerous possibilities for cloud-based robotics, from administering radiation therapy to suturing wounds and giving patients intravenous fluids.

Massachusetts Schools Strive to Increase Access to Coding Courses
The Boston Globe (10/23/14) Ellen O'Leary

Several efforts are underway to promote computer science (CS) education in Massachusetts.
For example, state education officials and innovation school practitioners teamed up in 2012 to launch the Innovation School Network, a group of 28 approved Innovation Schools plus 27 more in the planning stages. In addition, the Massachusetts Computing Attainment Network (MassCAN) advocates for greater access to technology education across the state, and the Digital Literacy and Computer Science Standards Task Force is developing new standards for teaching computer science. "Our goal is to produce more software engineers; really create free thinkers not just add new classes," says Alec Resnick, director of Sprout & Co. and a member of the task force. MassCAN currently is working with the Department of Elementary and Secondary Education to analyze and update best practices for teaching technology. "We have quite a few opportunities planned for teachers to take advantage of in the form of invaluable professional development to prepare teachers to teach CS and integrate Computational Thinking, problem-solving, and Computer Science units into the K-12 program," says MassCAN's Kelly Powers.

HELLO? Sweat and a Smartphone Could Become the Hot New Health Screening
UC Magazine (10/22/14) Colleen Kelley

University of Cincinnati (UC) researchers are developing lightweight wearable devices that analyze sweat and use a smartphone to gather medical information in near-real time. The devices are bandage-like patches that use paper microfluidics to collect biomarkers carried in sweat, such as electrolytes, metabolites, proteins, small molecules, and amino acids, which can signal the body's physical state.
"The newer patches in development are also meant to measure recovery from stress, which in many cases is more important initially than measuring the stressors themselves," says UC professor Jason Heikenfeld, head of UC's Novel Devices Laboratory. "One example goal is to measure cortisol levels and tell you how they return to normal over time." The patch wicks sweat in a tree-root pattern, maximizing the collection area while minimizing the volume of paper. It includes a sodium sensor, a voltage meter, a communications antenna, microfluidics, and a controller chip. Heikenfeld notes the system also could be applied to drug monitoring. "Ultimately, sweat analysis will offer minute-by-minute insight into what is happening in the body, with on-demand, localized, electronically stimulated sweat sampling in a manner that is convenient and unobtrusive," he says.

Professor Awarded $1.2 Million for Computer Science Curriculum Project
Georgia State University (10/21/14) Angela Turk

The U.S. National Science Foundation recently awarded Georgia State University (GSU) researchers a three-year, $1.2-million grant to develop a curriculum for integrating computer science into urban elementary school classrooms. The researchers, led by GSU professor Caitlin McMunn Dooley, are working with the International Society for Technology in Education and the Georgia Institute of Technology on the Integrated Computer Science in Elementary Curricula project, which aims to improve elementary students' capacity for academic learning, creativity, and motivation as they learn about computer science. The project involves helping third- through fifth-grade teachers at an Atlanta elementary school integrate computer science into project-based learning, using the same strategies industry leaders employ to create websites, apps, games, and other digital media.
The results will be compared with those of a nearby school that uses a similar project-based approach without computer science. The researchers hope the project can show how historically underrepresented groups can become participants and leaders in an increasingly digital society. "By focusing students' learning on how to create and adapt new technologies, teachers will be well-suited to innovate and inspire with learning with technology and provide pathways for kids to access well-paying jobs in the future," Dooley says.

Maria Klawe: Esteemed Computer Scientist. Proud Mathematician. Watercolor Painter.
TechRepublic (10/24/14) Lyndsey Gilpin

Maria Klawe, computer scientist and president of Harvey Mudd College, entered computer science (CS) at the height of women's participation in the field and has watched women's presence decline throughout her career. That experience has made encouraging women to enter CS one of her chief goals as Harvey Mudd's president. Klawe, a former ACM president, is the college's first female president, and during her eight years there the share of women among Harvey Mudd students has risen from 31 percent to 47 percent, with a similar increase among the faculty. Klawe also has championed an effort to expand and open up the college's computer science program. Starting in 2006, the staff sought to increase the number of CS majors and revamped the introductory courses to make them more stimulating, supportive, and efficient. One major change was to create separate sections for students with prior experience so they would not dominate the CS courses, a dynamic that often discourages less-experienced students.
Klawe says these changes have encouraged many new students, especially women who previously would have avoided CS, to pursue the field, which now accounts for 40 percent of all majors at the college.

A Physician-Programmer Experiments With AI and Machine Learning in the ER
SearchCIO.com (10/24/14) Nicole Laskowski

Steven Horng and his team at Beth Israel Deaconess Medical Center in Boston are working on ways to bring machine learning and artificial intelligence to the emergency room. Horng says many health care information technology efforts focus on automating physicians' duties, whereas his project seeks to augment physicians. Horng and his team are developing a method to capture the "chief complaint," the patient's reason for visiting the ER. He notes this can be very difficult because clinicians, wanting to be exact, often write chief complaints out as free text. Although this can lead to clear diagnoses, it also makes the information difficult to feed into a searchable database. "The struggle has always been in how to collect this type of structured data when humans want to be explicit and do their own thing," Horng says. To address this issue, the team has deployed machine-learning algorithms that structure the unstructured text, which is then run through a predictive analytics engine. The program helps account for factors such as misspellings, double meanings, and incorrect initial diagnoses. Horng says the program's collection rate for chief complaint data has risen from 25 percent initially to 95 percent.

Machine-Learning Maestro Michael Jordan on the Delusions of Big Data and Other Huge Engineering Efforts
IEEE Spectrum (10/20/14) Lee Gomes

In an interview, University of California, Berkeley professor Michael I. Jordan discusses how he believes the rhetoric surrounding machine learning and other major computer science fields has gone too far, making promises the fields cannot keep. In particular, Jordan sees "neural realism," the move to metaphorically link many machine-learning efforts to the human brain, as inappropriate and inaccurate, because current understanding of how the brain works is still too rudimentary to serve as a basis for computer systems. Big data is another field in which Jordan sees extravagance and carelessness. He says big data is being pushed aggressively without any thought for the ways it can go wrong, and he worries about a future swamped by false positives from big data systems. Jordan says the pools of data feeding analytic systems are growing so vast that false positives are inevitable, since the more patterns a system searches for, the more it will find purely by chance, and that analyses must account for this by incorporating error bars and other statistical methods. He also believes advances in computer vision are being overhyped; Jordan says the technology is still in its infancy and has a long way to go before it approaches the capabilities of human vision.

Abstract News © Copyright 2014 INFORMATION, INC.