Lecture 7. Oct 14 at classroom

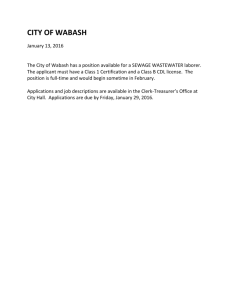

advertisement

1. EMAX models for Dose-Response.

Bates, DM. And Watts, DG. 1988. Nonlinear Regression Analysis and Its Applications. Wiley and Sons,

NY

Allison, Paul D. (1999) Logistic Regression Using SAS: Theory and Practice. Cary, NC: The SAS

Institute.

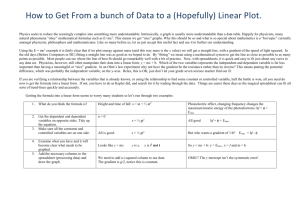

For dose-response modeling, one of the most common parametric approaches is to use a 3-parameter

EMAX model by fitting the dose response function g(D)

g ( D ) E Y D E0

Emax D

,

ED50 D

where E0 is the response Y at baseline (absence of dose), Emax is the asymptotic maximum dose effect and

ED50 is the dose which produces 50% of the maximal effect. A generalization is the 4-parameter EMAX

model for

g ( D) E Y D E0

Emax D

,

ED50 D

where is the 4th parameter which is sometimes called the Hill parameter. The Hill parameter affects the

shape of the curve and is in some cases very difficult to estimate. In Figure 1 we show curves for a range

of Hill parameter values.

In fitting models the error distribution is assumed to be iid normal with zero mean and equal variance .

This assumption is not in any way a restriction of the methods that we discuss here but a convenient

assumption that simplifies the issues that we address. Our model becomes:

Y = g(D) +

where =(E0,ED50,EMAX, and ~iid N(0, ). Model (1.1) can be written similarly as in (1.3) by

setting =1.

Figure 1: Curve Shapes for Different Values of Hill Parameter .

Figure 1 illustrates the rich variety of shapes generated by changing the Hill parameter . For

≤the curves have concave downward shapes whereas for the curves have a sigmoidal

shape.

For very small the curve represents a flat response for any dose greater than zero, whereas for very

large the curve approaches a step function at ED50.

2. Maximum Likelihood Estimation for the EMAX model.

Under the EMAX model the MLE estimator of is the least squares estimator ˆ . One way to calculate ˆ

is by non-linear least squares minimization (NLS). Given the data {(Di, yi), i=1,…,N} the NLS estimator

minimizes (in ) the following quantity:

N

SSE()= ( yi g ( Di )) 2

i 1

The algorithm to minimize SSE is a special case of Newton-Raphson specialized for minimizing sums of

squares. It is implemented in many standard software packages such as R, S-plus and others. The NLS

implementation of the MLE’s is convenient because it allows the computation of confidence intervals for

the model parameters and p-values for testing the significance of the parameters.

Example 1. Dose response estimation in a clinical trial.

In order to illustrate the issues that we address in this paper we use data from an unpublished phase II

clinical trial. The study had 5 doses (0, 5, 25, 50 and 100 mg) and corresponding group sizes (n=78, 81,

81, 81, 77). Unlike most dose-response trials this study has about 80 patients per arm and a high signal to

noise ratio. Figure 2 plots the response against the doses with 90% confidence intervals around the mean.

The graph shows that it is a potentially good candidate for the EMAX model. We first fit 3-Paramater

EMAX model to the data. The maximum likelihood estimators (MLEs) converge quickly and have

estimates of Êmax = 15.13, Ê0= 2.47, and ED50=67.49.

3. A Bayesian approach to EMAX and logistic modeling using BUGS.

As an alternative to the MLE we apply Bayesian methodology to this problem. We introduce a prior

distribution on each parameter and estimate the model parameters by the posterior means.

The parameters EMAX and E0 both have unbounded ranges and we assigned them a normal prior with

mean 10 and zero respectively and large variances 100 and 25 respectively. The other three parameters ,

ED50 and are assigned Gamma priors, with means 1.8, 57.5 and 10 and variances 2.16, 2875 and

10000 respectively. The prior for was offset by adding 0.5 to the Gamma random variable. Our choice

of prior distributions is consistent with what the literature suggests. The Bayesian estimate for the 4parameter EMAX model is shown by the solid line in Figure 2. We may claim that 4-parameter Bayes

EMAX fit recovered the 4-parameter MLE fit. With the 4-parameter Bayes EMAX we obtain an estimate

of 1.1, slightly bigger than 1 (1 corresponding to a 3-parameter EMAX).

Figure 2: Clinical trial example with 5 doses. Red stars represent the response at a given dose and

90% CI. Solid line is the 3-parameter fit. Dashed line refers to the 4-parameter fit (using the

estimates after 100 iterations.)

15

10

5

Change in EDD

0

3 Parameter EMAX

4 Parameter EMAX

Bayesian Approach

Power Law

0

20

40

60

80

100

Dose

(mg)

4.

Logistic regression model.

The 4 parameters Emax curve yields the logistic curve by setting E0 = 0 , EMAX =1 and substituting D by

ex. The resulting function is g(x)= ea+bx/(1+ ea+bx) where b= and a= log(ED50). The response can be

binarized by a threshold into Y=1 for high respondents and Y=0 for low respondents. The logistic model

has the property that the P(Y=1| X=x) = E(Y|X=x) = g(x) so both logistic and EMAX models satisfy

similar equation. The maximum likelihood estimator forfor the logistic model is not the least squares

estimator but has to be obtained by maximization of the likelihood function (a standard version of which

is the Iterative Reweighted Least Squares algorithm).

Consider the simple logistic regression model with one predictor x taking values {x1,…, xn} and a

binary response y taking values {y1,…, yn}.

The log-likelihood can be written as

p

l ( p; y) yi log i log(1 pi ) ,

1 pi

i

-1

where pi =Prob(yi=1) = logit ( (xi -).

Let’s consider the special case when the yi’s sorted by the values of xi’s produce a sequence made up of a

subsequence of k zero’s followed by a subsequence of n-k one’s and there are no 0’s between the 1’s and

no 1’s between the 0’s. Let be fixed at a value of x that separates the yi values into 0’s and 1’s.

In this case the lilkelihood becomes

l ( p; y) log( pi ) log(1 pi )

yi 1

yi 0

As gets larger the pi on first sum of the equation become closer to 1 and the pi on the second sum of

the equation converges to zero. Hence the MLE of is plus infinity.

R code for Emax models:

m1 = nls(ch8~e0 +(emax * dose)/(dose+ed50), start= c(ed50=68,

e0=2.4,emax=16),trace=T,data=edd)

m1 = nls(ch8~e0 +(emax * dose)/(dose+ed50), start= c(ed50=68,

e0=2.4,emax=16),control=nls.control(maxiter=100),trace=TRUE,na.action=na.omit,data=edd)

Flick Tail experiment

Objective of the Study

- Detect synergy between Morphine and Marijuana

- Find the best combination of drugs that produces highest efficacy

- Also interested in the followings

- ED50s for each drug and their combination

- Confidence intervals for ED50s using Fieller’s theorem

Data

Predictor variables :

Morphine and Marijuana dosages in milligrams

Response variable :

Proportion of mice respond to the given drug combination

Methodology: Logistic regression

\

options(contrasts = c("contr.treatment", "contr.poly"))

x <- read.table("flick1.txt",head=T)

Fl <- cbind(flick=x$flick, noflick=x$reps-x$flick)

x.lg <- glm(Fl ~ morphine*del9, family = binomial,data=x)

summary(x.lg, cor = F)

plot(x$morphine,residuals.glm(x.lg,type="d"))

plot(x$del9,residuals.glm(x.lg,type="d"))

plot(predict(x.lg),residuals.glm(x.lg,type="d"))

x.lg0 <- glm(Fl ~ log(1+morphine)*log(1+del9)-1, family = binomial,data=x)

dose.p(x.lg0, cf = c(1,3), p = 1:3/4)

dose.p(update(x.lg0, family = binomial(link = probit)),

cf = c(1, 3), p = 1:3/4)

Two way ANOVA

ONE-WAY ANOVA:

Linear Model: xgi

= g +gi

,

g=1,…,k i=1,…,ng

Assumptions: Errors gi are (i) independent (ii) normally distributed (iii) with

equal variances.

Fit: Data = group effect + residual

Hypothesis Testing: All means are equal Vs some are not equal.

ANOVA

(a) H : k

(b) Ha : j

LINEAR MODEL FOR TWO WAY TABLE:

This is a typical dataset where we have a response and two factors.

Exampe:

clarion

clinton

knox

o'neill

compost

wabash

webster

B1

32.7

32.1

35.7

36.0

31.8

38.2

32.5

B2

32.3

29.7

35.9

34.2

28.0

37.8

31.1

B3

31.5

29.1

33.1

31.2

29.2

31.9

29.7

Fitted model: Data = Tot Mean + Row Effect + Col Eff + Residual

B1

B2

B3 row effect

clarion -1.052 -0.024 1.076 -0.390

clinton 0.214 -0.757 0.543 -2.257

knox -0.786 0.843 -0.057

2.343

o'neill 0.614 0.243 -0.857

1.243

compost 0.548 -1.824 1.276 -2.890

wabash 0.648 1.676 -2.324

3.410

webster -0.186 -0.157 0.343 -1.457

col eff 1.586 0.157 -1.743 32.557

SAS DOES IT IN A DIFFERENT WAY, IT SETS THE EFFECT

FOR ONE OF THE GROUPS AS ZERO.

INTERCEPT

TYPE

clarion

clinton

knox

o'neill

compost

wabash

webster

BLOCK

1

2

3

ESTIMATE

TVALUE

29.3571 B 34.96

1.0667

-0.800

3.800

2.700

-1.433

4.867

0.000

3.3285

1.900

0.000

B

B

B

B

B

B

B

B

B

B

1.02

-0.76

3.63

2.58

-1.37

4.65

.

4.85

2.77

.

PVALUE

0.0001

STD ERROR

0.839703

0.3285

0.4597

0.0035

0.0242

0.1962

0.0006

.

0.0004

0.0169

.

1.047294

1.047294

1.047294

1.047294

1.047294

1.047294

.

0.685615

0.685615

.

COMPUTE ANOVA TABLE:

In order to test the significance of row effects or column effects.

Source

TYPE

BLOCK

Source

TYPE

BLOCK

DF

Type I SS

F Value

Pr > F

6

2

103.15142

39.03714

10.45

11.86

0.0004

0.0014

Type III SS

F Value

103.15142

39.03714

10.45

11.86

DF

6

2

Pr > F

0.0004

0.0014

MULTIPLE COMPARISONS:

Once we find that a factor is significant then we need to explore the difference between the

corresponding factor levels. We do that using a multiple comparison procedure such as Tukey or

Duncan.

Another useful tool is to use contrasts if we are interested in testing only a few comparisons or in

some linear combinations of the parameters.

This is the SAS code and output file

options ps=50 ls=70;

*---------------snapdragon experiment---------------*

| as reported by stenstrom, 1940, an experiment was |

| undertaken to investigate how snapdragons grew in |

| various soils. each soil type was used in three

|

| blocks.

|

*---------------------------------------------------*;

data plants;

input type $ @;

do block=1 to 3;

input stemleng @;

output;

end;

cards;

clarion 32.7 32.3 31.5

clinton 32.1 29.7 29.1

knox

35.7 35.9 33.1

o'neill 36.0 34.2 31.2

compost 31.8 28.0 29.2

wabash

38.2 37.8 31.9

webster 32.5 31.1 29.7

;

proc glm;

class type block;

model stemleng=type block; run;

proc glm order=data;

class type block;

model stemleng=type block / solution;

means type / bon duncan tukey;

*-type-order---clrn-cltn-knox-onel-cpst-wbsh-wstr;

contrast 'compost v others' type -1 -1 -1 -1 6 -1 -1;

contrast 'knox vs oneill'

type 0 0 1 -1 0 0 0;

run;

OUTPUT: Output file from "glm.sas"

INCOMPLETE DESIGNS

120 6559 1240

6

71 237

40 689 165 855

202 233 165

62

5 385

40

74

25

36

15

22

34 129

32

54

23

48

10

1

This is the data but with some missing observations:

clarion

clinton

knox

o'neill

compost

wabash

webster

B1

32.7

32.1

35.7

NA

31.8

38.2

32.5

B2

32.3

29.7

35.9

34.2

28.0

37.8

NA

B3

NA

29.1

33.1

31.2

29.2

31.9

29.7

Row Effects :

clarion

clinton

knox o'neill compost

wabash

webster

0.05238095 -2.147619 2.452381 0.252381 -2.780952 3.519048 -1.34761

Column Effects:

B1

B2

B3

1.427778 0.3111111 -1.738889

Main Effect:

32.44762

So what is SAS going to do?

INTERCEPT

TYPE

clarion

clinton

knox

ESTIMATE

29.372 B

TVALUE

27.73

PVALUE

0.0001

0.281 B

-0.969 B

3.630 B

0.20

-0.76

2.84

0.8491

0.4682

0.0196

STD ERROR

1.0591

1.4390

1.2804

1.2804

o'neill

compost

wabash

webster

BLOCK 1

2.209

-1.603

4.696

0.000

3.455

2.236

0.000

2

3

B

B

B

B

B

B

B

1.54

-1.25

3.67

.

4.16

2.69

.

0.1591

0.2421

0.0052

.

0.0025

0.0247

1.4390

1.2804

1.2804

.

0.8308

0.8308

We look at the ANOVA table and we see that order matters.

TYPE BEFORE BLOCK

Source

TYPE

BLOCK

Sourc

TYPE

BLOCK

DF

6

2

DF

6

2

Type I SS

95.93611111

33.76848485

Type III SS

98.19681818

33.76848485

F Value

8.42

8.89

F Value

8.62

8.89

Pr > F

0.0028

0.0074

Pr > F

0.0026

0.0074

F Value

8.30

8.62

F Value

8.89

8.62

Pr > F

0.0091

0.0026

Pr > F

0.0074

0.0026

F Value

8.54

Pr > F

0.0021

BLOCK BEFORE TYPE

Source

BLOCK

TYPE

Source

BLOCK

TYPE

DF

2

6

DF

2

6

Type I SS

31.50777778

98.19681818

Type III SS

33.76848485

98.19681818

THE BASIC STATS

Source

Model

Error

Total

R-Square

0.883610

DF

8

9

17

Sum of Squares

129.70459596

17.08484848

146.78944444

C.V.

4.238642

STEMLENG Mean

32.5055556