Guide to Uncertainty Analysis

advertisement

Guide to Uncertainty Analysis

Updated 11/10/15 - Joe Skitka

Uncertainty analysis is a vital tool for determining the validity of the results of any experimental

investigation. As an aspiring physicist, you should know that a given quantity is only

meaningful when there is something else to compare it to. A length, L = 100 m, can only be

deemed “large” or “small” compared to another length, a nanometer or a light year, for instance.

In the discussion section of a lab report, a measured quantity can be compared to an expected

quantity to imbue meaning. For instance, if the speed of a massless particle is measured as v =

2.993*10^8 m/s while the expected value is 2.998*10^8 m/s (because it’s massless), the

measured value is determined to be small compared to the expected value, and the percent error,

100%*(2.998 – 2.993) / 2.998 = 0.17% indicates how much smaller it is using a dimensionless

scale. But, in the context of an experiment, this scale is not that meaningful. How can we tell if

the result indicates that the particle was actually traveling at the speed of light, as expected, or

just a little bit less than the speed of light (and therefore has a very small mass)? To do this, the

difference, (2.998 – 2.993)*10^8 m/s needs to be compared with a different value reflecting the

reliability of the measurement. This quantity is known as uncertainty.

While uncertainty and error are often used interchangeably, for the purposes of these labs, an

effort will be made to refer to these terms with the following definitions: Error is the difference

between a measured value and the actual value. Uncertainty is the value by which an actual

quantity may deviate from a measured quantity up to a given confidence, typically 68% for 1σ or

95% for 2σ. The use of a constant confidence level along with the assumption that error in a

measurement is governed by uncorrelated, random probability distribution functions, dictates the

way uncertainty is propagated. Notice that while the error is how far a measurement is from the

actual value, uncertainty is how far it may be from the actual value. Here, “actual value” refers

to the value the measurement would produce if it were made with perfect precision and accuracy.

This is distinct from an “expected value” in that the latter assumes a prior result or physical laws

establishing the expectation are correct (for instance, that a neutrino is massless).

Random error is defined by the assumption that each measurement is independent and

governed by a random probability distribution function. Not all error falls into this category.

For instance, a speed measurement apparatus may consistently report 0.003*10^8 m/s less than

the actual value. This is known as bias. While the exact value of the bias is unknown to the

researchers, an estimate of the bias to a given degree of confidence will be figured into the

uncertainty. This uncertainty contribution cannot be reduced by taking multiple measurements;

however, it can be assumed that it is a single result from a random probability distribution

function, so that two separate biases described by uncertainties at 95% confidence can still

combine as via their root mean squares (see below). Again, the key difference is that the

uncertainty will not be reduced by taking multiple measurements. This also means it will not be

captured by fitting software in the stated uncertainty in fitting parameters. Bias can be reduced

by calibration (especially if it is already know or easily measureable). Handling of error that is

governed by processes that are not independent is beyond the scope of this guide.

Again, take note that you will see the terms error and uncertainty used differently or

interchangeably when consulting outside sources.

Estimates of Measured Values

Let’s say that you are measuring the strength of a magnetic field using a Gauss meter. The

digital readout indicates a value of B = 144.7 G. What is the uncertainty of this measurement?

On one hand, if the reading is stable, one may be tempted to use the precision of the instrument,

which might be something like 0.1 Gauss: ΔB = ΔBprecision = 0.1 G, in which case the

measurement indicates B = 144.7 ± 0.1 G.

However, an astute student may be skeptical of this result. Upon removing the probe of the

Gauss meter from the region being measured and placing it back in the same position, the value

now reads B = 145.3 G. Clearly, ΔB > 0.1 G simply because the student can only place the

probe with a limited degree of precision in the exact desired location, introducing some

uncertainty ΔBplacement. If many measurements are taken, then the standard deviation of the

distribution can be used as an uncertainty estimate. Because each measurement includes both the

precision uncertainty and the placement uncertainty, which are assumed to be random, the two

can be combined into an effective Brandom. Let’s assume this is measured to be ΔBrandom = σ =

2.7 G.

But, if the probe is removed and replaced 16 times independently and the measurements are

averaged to find the magnetic field, shouldn’t the measurement have a smaller error and,

therefore, uncertainty? Yes. Random error and its associated uncertainty will be reduced by a

factor of 1/√𝑁, for the average of N measurements:

Δ𝜱N =

ΔΦ1

√𝑁

(1)

So the resulting uncertainty is ΔB16 = ΔB/√16 = 0.675 G.

Next, the astute student may still wonder whether or not the value measured by the device is

accurate. To answer this question, we need to turn to the specifications of the device included in

its manual. Here is an excerpt from the manual of an analogue Gauss meter used in PHYS 0560,

PHYS 1560, and PHYS 2010:

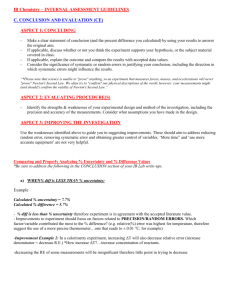

Notice that there the uncertainty associated with the device is a complicated function of the value

measured and the settings of the meter. Elsewhere in the specifications, the susceptibility of the

reading to temperature fluctuations is listed as well. If it this looks like a headache, don’t worry

as students will typically not be required to estimate uncertainty to this level of detail in PHYS

0470 and PHYS 0560 (not true for PHYS 1560 and PHYS 2010). Most measurement devices in

these labs, such as voltmeters, have relatively small specified uncertainty that won’t adversely

affect results. This Gaussmeter is an exception, and if you take one of the courses listed above,

you may see these specifications again. In this case, several different contributions need to be

combined (from full-scale accuracy, instrument accuracy, internal calibration accuracy, and

probe reading, in addition to the reading’s susceptibility to temperature fluctuations, not shown.)

The device uncertainty might be something like ΔBdevice = 3 G if the maximum range is not set

very high.

Note that in the case of the Gauss meter, the uncertainty is not necessarily random, rather, it may

be bias, and for instance, it may consistently be reading 3 Gauss above the actual value. Words

like “calibration” might clue you into this. In general, it should be assumed that uncertainty

provided in manufacturer’s specifications is bias unless specifically stated. The implication of

this is that such uncertainty cannot be reduced by taking multiple measurements and should not

be propagated as though it were random.

Uncertainty contributions will also arise from theoretical assumptions made in the analysis. For

example, in measuring the deflection of an electron in what is assumed to be a uniform electric

field between two charged plates, an additional contribution to the uncertainty should be

associated with the error introduced by the assumption of uniformity. In this case, moreinvolved math/theory, numerical approximations, or additional measurements may be necessary

to estimate this. For instance, one could use a particle of known properties to calibrate for the

effect of the non-uniform field. If all assumptions are accounted for, the measured value should

fall within uncertainty of the correct value. If this is not the case, some assumptions may have

been overlooked.

Propagating Uncertainty

1. Addition: contributions to uncertainty add via their root-mean-square (RMS). This is

because the uncertainties ideally represent the standard deviations of random probability

distribution functions. When two contributions to uncertainty are added, this is modeled

by adding two random probability distribution functions. Because the variances of these

distributions add linearly to give the variance of the new distribution, and the standard

deviation is the square root of the variance, their standard deviations add via the RMS. In

the example of the magnetic field from the previous section, this looks like:

2

Δ𝐵

Δ𝐵𝑡𝑜𝑡𝑎𝑙 = √∑𝑖(Δ𝐵𝑖 )2 = √(Δ𝐵𝑑𝑒𝑣𝑖𝑐𝑒 )2 + ( 𝑟𝑎𝑛𝑑𝑜𝑚

)

16

(2)

Δ𝐵𝑡𝑜𝑡𝑎𝑙 = √(3)2 + (0.625)2 Gauss

(3)

Δ𝐵𝑡𝑜𝑡𝑎𝑙 = 3.064 Gauss

(4)

√

Notice that the uncertainty carries units like the value it pertains to, in this case, Gauss

and that these equations are dimensionally consistent. Also note that the larger

contributions to the uncertainty tend to dominate the smaller ones. In this case, the

random error only accounts for 0.064 Gauss more than it would have been with the

device’s uncertainty alone.

2. Multiplication and more complicated functional dependences combine via first-order

perturbation theory combined with the property that they add via their RMS. This results

in the general uncertainty propagation equation:

𝜕𝛹 2

Δ𝛹 = √∑𝑁

𝑖 (Δ𝜑𝑖 𝜕𝜑 ) ,

𝑖

(5)

Where 𝜳 is a function of parameters {φ1, φ2, … , φi, … , φN-1, φN} each of which has

uncertainty associated with it. This (treating the uncertainty as a first-order perturbation)

is easily visualized for functions of a single variable, where the RMS does not come into

play. The RMS is only used to combine multiple dimensions/contributions.

As an example, in lab 2 of PHYS 0470, the uncertainty of the permittivity of free space is

computed. The equation used to compute the permittivity itself is:

2 𝑑2 m

𝜀𝑜 =

(6)

𝐴

The resulting equation for its uncertainty is then:

Δ𝜀𝑜 = √(∆𝑑

4m𝑑 2

A

) + (∆𝑚

2𝑑2 2

A

) + (∆𝐴

2𝑚𝑑2 2

𝐴2

)

(7)

Where 𝜳 {φ1, φ2, φ3} = 𝜀𝑜 {m, d, A} in equation 5. Again, notice that no matter what the

units of the different parameters 𝜀𝑜 is a function of, the uncertainty will be of the correct

units as long as the original equation (6) was also.

Uncertainty through Fits

If a fit parameter (e.g. the slope of a linear fit) is used to determine a value, there will be some

uncertainty associated with the parameter. It is recommended that software be used to propagate

these uncertainties. Excel can be unintuitive and difficult to producing fit parameters with a

required degree of precision and associated uncertainty estimates. If you are not adept at Excel,

try using a free download of Kaleidagraph. This is great, simple software for making plots and

fitting curves. If you do a custom style fit, it will give the uncertainties of each parameter, even

for a nonlinear fit. See the last section of this manual for instructions. Note that most software

assumes that error is random and use the variability of the data to determine the computed

uncertainties. If bias or a priori uncertainties need to be propagated through a fit, this will need

to be worked out using a more robust system, which may involve programming the fit manually

or using more advanced software.

Uncertainty in Plots

When plotting discrete data sets (scatter plots), uncertainty is ideally expressed with error bars in

both dimensions. Include these whenever possible.

Using Uncertainty to Evaluate the Success of an Experiment

An experiment may be naively deemed “successful” (for the purposes of PHYS 0470 and PHYS

0560) if a measured value falls within the uncertainty of the expected value. This is because we

are safe in assuming that known physical theories are accurate at the parameters and precisions

used in these experiments. This will not be the case in experiments likely to probe new physics,

as in a research-grade lab. An unexpected merits scrutiny of the uncertainty propagation as well

as the assumptions made of the physics involved. When all of these are investigated with an

appropriate amount of rigor, new physics can be discovered; for instance, one might find

neutrinos have a small mass by observing them travelling slightly slower than the speed of light.

Kaleidagraph

Kaleidagraph is a super-quick solution to data analysis, but it is also more versatile than Excel

(at least for the untrained user.) The biggest advantage, besides its efficiency, is that it will

provide uncertainty in fit parameters, both linear and non-linear. Kaleidagraph is available on

the lab computers, but is generally not installed on other campus computers. The software

should be available for download from http://www.brown.edu/information-technology/software/

, but if not, then a free trial may be downloaded from www.synergy.com. Here are a few key

things you need to know to utilize the software:

Don’t label columns in the top-most cell with text. Rather there are specific title boxes,

initially labeled A, B, C, etc. If you do enter text in a box, the column will format for text

entry and will throw an error when you try to plot. (To fix this, go to column formatting

under the data menu. Format the column for “float”.)

Simply enter columns of data for the independent and dependent variables you wish to

plot.

To manipulate data, press the up/down wedges/arrows at the top right of the page to

expand the column labels for reference. A “formula entry” window should be floating

around the screen somewhere. Columns can be edited with simple commands. For

instance, if you put your Voltage data in column c1 and wish to use voltage squared,

simply type: “c2 = c1^2” in the formula entry. Press “Run” and you will see the voltage

squared has its own column.

You may wish to input a column of uncertainties for later use as error bars.

To plot: select the Gallery dropdown menu. Select the Linear menu. Select a Scatter

plot. Choose one column for your independent variable, and one or more for your

dependent variable.

To fit: select the Curve Fit dropdown menu. The presets like “linear” may be used for a

quick fit, but they will not give you uncertainty on the fitting parameters. To do this,

select General. If you’d like a linear fit, fit1 should be set to this be default. Upon

clicking it, a box should come up. Select Define to double check the form of the fit. It

should read:

m1 + m2 * M0; m1 = 1; m2 = 1

Here, {m1, m2, m3…} are the fit parameters and M0 is the independent variable. Clearly

this is just a linear fit. If you, for instance, wanted to make this a quadratic fit instead,

you would write:

m1 + m2 * M0 + m3 * M0^2; m1 = 1; m2 = 1; m3 = 1

The values on the right are initial guesses and must be provided for each parameter.

When you are done, select OK. Check the box of the dependent variable you would like

to fit. Then select OK. The uncertainty in the fit parameter is listed in the Error column

in the plot

To generate error bars, right click on the plot and select Error bars. Error bars can be

added as a percent of the value (in either variable) or from a different column of data.