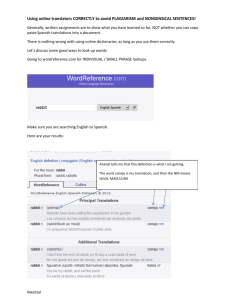

Online Spanish-English Dictionary Websites

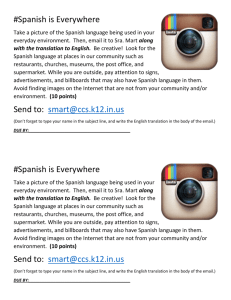
