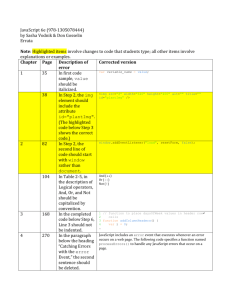

Supplementary Information (docx 533K)

SUPPLEMENTARY MATERIAL

GWAS with longitudinal phenotypes – performance of the approximate procedures

Karolina Sikorska, Nahid Mostafavi Montazeri, André Uitterlinden, Fernando Rivadeneira, Paul H.C. Eilers and Emmanuel Lesaffre

1. Appendix

Linear mixed model

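The ML estimator of β in model (1) for a balanced data set can be expressed as

$$\hat{\boldsymbol\beta} = \left(\sum_{i=1}^{N} X_i^T W X_i\right)^{-1}\left(\sum_{i=1}^{N} X_i^T W \boldsymbol y_i\right), \qquad (17)$$

with $\boldsymbol y_i = (y_{i1}, \ldots, y_{in})^T$, $X_i$ the design matrix for the $i$th subject consisting of the columns $\mathbf 1_n$, $\boldsymbol t$, $s_i\mathbf 1_n$, $s_i\boldsymbol t$, and $W = V^{-1}$. Using the Woodbury identity, $(A + BDB^T)^{-1} = A^{-1} - A^{-1}B(D^{-1} + B^TA^{-1}B)^{-1}B^TA^{-1}$, $W$ can be rewritten for model (2) as

$$W = \frac{1}{\sigma^2}\left(I_n - c\,\mathbf 1_n\mathbf 1_n^T\right)\left(I_n - \gamma\,\boldsymbol t\boldsymbol t^T\left(I_n - c\,\mathbf 1_n\mathbf 1_n^T\right)\right), \qquad (18)$$

where $\boldsymbol t = (t_1, \ldots, t_n)^T$ is the vector of time points, $c = (\sigma^2/\sigma_0^2 + n)^{-1}$, $\gamma = (\sigma^2/\sigma_1^2 + \theta)^{-1}$ and $\theta = \sum_j t_j^2 - c\left(\sum_j t_j\right)^2$.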
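The following is a quick numerical check of (18). The sketch builds $W$ from the closed form and multiplies it by $V = \sigma^2 I_n + \sigma_0^2\mathbf 1_n\mathbf 1_n^T + \sigma_1^2\boldsymbol t\boldsymbol t^T$. This is the marginal covariance implied by model (2) when the random intercept and slope are uncorrelated, which is the assumption used for these derivations. The time points and variance components are hypothetical values chosen for illustration. The product should be the identity up to rounding.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def w_closed_form(t, sigma2, sigma0_2, sigma1_2):
    """W from equation (18), with c, gamma and theta as defined in the text."""
    n = len(t)
    c = 1.0 / (sigma2 / sigma0_2 + n)
    theta = sum(tj * tj for tj in t) - c * sum(t) ** 2
    gamma = 1.0 / (sigma2 / sigma1_2 + theta)
    I = identity(n)
    M = [[I[i][j] - c for j in range(n)] for i in range(n)]           # I_n - c 1_n 1_n^T
    ttM = matmul([[ti * tj for tj in t] for ti in t], M)               # t t^T (I_n - c 1 1^T)
    right = [[I[i][j] - gamma * ttM[i][j] for j in range(n)] for i in range(n)]
    return [[w / sigma2 for w in row] for row in matmul(M, right)]

def v_matrix(t, sigma2, sigma0_2, sigma1_2):
    """Marginal covariance sigma^2 I + sigma0^2 1 1^T + sigma1^2 t t^T."""
    n = len(t)
    return [[(sigma2 if i == j else 0.0) + sigma0_2 + sigma1_2 * t[i] * t[j]
             for j in range(n)] for i in range(n)]

t = [1.0, 2.0, 3.0, 4.0, 5.0]                      # hypothetical balanced time points
params = dict(sigma2=0.5, sigma0_2=2.0, sigma1_2=0.3)
WV = matmul(w_closed_form(t, **params), v_matrix(t, **params))
max_dev = max(abs(WV[i][j] - (1.0 if i == j else 0.0))
              for i in range(len(t)) for j in range(len(t)))
print(f"max |WV - I| = {max_dev:.1e}")
```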

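By inserting equation (18) into equation (17), the estimate of the interaction term in model (2) can be obtained as

$$\hat\beta_3 = \frac{\mathrm{cov}(\boldsymbol s, \boldsymbol u) - \bar t\,\mathrm{cov}(\boldsymbol s, \boldsymbol y)}{n\,\mathrm{var}(\boldsymbol t)\,\mathrm{var}(\boldsymbol s)},$$

where $\boldsymbol u$ and $\boldsymbol y$ collect the subject-level sums $u_i = \sum_j t_j y_{ij}$ and $y_i = \sum_j y_{ij}$, and $\bar t$ is the mean time point.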
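The closed form can be checked on simulated balanced data. The sketch below uses hypothetical parameter values and compares the formula with a naive two-stage computation, in which per-subject least-squares slopes are regressed on the SNP. The two agree exactly, so, if the closed form above holds, the LMM estimate of $\beta_3$ in the balanced case coincides with that two-stage OLS estimate. The sketch assumes that var and cov use the divisor $n$ for time points and $N$ for subjects, so that $n\,\mathrm{var}(\boldsymbol t) = \sum_j (t_j - \bar t)^2$. No mixed model is fitted here.

```python
import random

def mean(x):
    return sum(x) / len(x)

def pcov(x, y):
    """Covariance with divisor len(x), so n * pcov(t, t) = sum_j (t_j - tbar)^2."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)

def beta3_closed_form(Y, s, t):
    n = len(t)
    u = [sum(tj * yij for tj, yij in zip(t, yi)) for yi in Y]   # u_i = sum_j t_j y_ij
    ysum = [sum(yi) for yi in Y]                                  # y_i = sum_j y_ij
    return (pcov(s, u) - mean(t) * pcov(s, ysum)) / (n * pcov(t, t) * pcov(s, s))

def beta3_two_stage_ols(Y, s, t):
    tbar = mean(t)
    sxx = sum((tj - tbar) ** 2 for tj in t)
    slopes = [sum((tj - tbar) * yij for tj, yij in zip(t, yi)) / sxx for yi in Y]
    return pcov(s, slopes) / pcov(s, s)

random.seed(1)
t = [0.0, 1.0, 2.0, 3.0, 4.0]                          # balanced design
s = [random.choice([0, 1, 2]) for _ in range(200)]     # hypothetical SNP dosages
Y = []
for si in s:
    b0, b1 = random.gauss(0, 1.0), random.gauss(0, 0.3)   # subject-specific effects
    Y.append([1.0 + 0.2 * si + b0 + (0.5 + 0.1 * si + b1) * tj + random.gauss(0, 0.7)
              for tj in t])

print(beta3_closed_form(Y, s, t), beta3_two_stage_ols(Y, s, t))
```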

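Likewise, by inserting $W$ into the variance-covariance matrix of the LMM estimator, $\mathrm{var}(\hat{\boldsymbol\beta}) = \left(\sum_{i=1}^N X_i^T W X_i\right)^{-1}$, the variance of the interaction estimator (the diagonal element of this matrix corresponding to $\beta_3$) can be written as

$$\mathrm{var}(\hat\beta_3) = \frac{\sigma^2 + \sigma_1^2\, n\,\mathrm{var}(\boldsymbol t)}{N\, n\,\mathrm{var}(\boldsymbol t)\,\mathrm{var}(\boldsymbol s)}.$$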
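One way to see where this expression comes from is sketched below. The sketch assumes that var(·) uses the divisors $n$ and $N$ and treats the SNP values as fixed. In the balanced case, the per-subject least-squares slope $\hat b_i = \sum_j (t_j - \bar t)\,y_{ij}/\{n\,\mathrm{var}(\boldsymbol t)\}$ has variance $\sigma_1^2 + \sigma^2/\{n\,\mathrm{var}(\boldsymbol t)\}$. Regressing these slopes on $s_i$ divides that variance by $\sum_i (s_i - \bar s)^2 = N\,\mathrm{var}(\boldsymbol s)$:

$$\mathrm{var}(\hat\beta_3) = \frac{\sigma_1^2 + \sigma^2/\{n\,\mathrm{var}(\boldsymbol t)\}}{N\,\mathrm{var}(\boldsymbol s)} = \frac{\sigma^2 + \sigma_1^2\, n\,\mathrm{var}(\boldsymbol t)}{N\, n\,\mathrm{var}(\boldsymbol t)\,\mathrm{var}(\boldsymbol s)}.$$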

Two-step approach

We can reformulate model (2) as

$$y_{ij} = \beta_0^* + \beta_2^* t_{ij} + b_{0i}^* + b_{1i}^* t_{ij} + \varepsilon_{ij}, \qquad (19)$$

with $\beta_0^* = \beta_0 + \beta_1 E(s)$, $\beta_2^* = \beta_2 + \beta_3 E(s)$, $b_{0i}^* = b_{0i} + \beta_1\{s_i - E(s)\}$ and $b_{1i}^* = b_{1i} + \beta_3\{s_i - E(s)\}$.

We call model (19) the reduced model; fitting it constitutes the first step of the two-step procedure. The second step regresses the estimated $\hat b_{1i}^*$ on the SNPs using the simple regression model $\hat b_{1i}^* = \beta_0^{**} + \beta_1^{**} s_i + \varepsilon_i^{**}$.

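The ML estimator of $\beta_1^{**}$ can be expressed as

$$\hat\beta_1^{**} = \frac{\sum_i (s_i - \bar s)\,\hat b_{1i}^*}{\sum_i (s_i - \bar s)^2}, \qquad (20)$$

where $\hat b_{1i}^*$ is the best linear unbiased predictor (BLUP), which can be computed by the empirical Bayes approach as

$$\hat{\boldsymbol b}_i^* = D Z^T W (\boldsymbol y_i - X\hat{\boldsymbol\beta}^*), \qquad (21)$$

with $X = Z = (\mathbf 1_n, \boldsymbol t)$ in the balanced case, and where $\hat{\boldsymbol\beta}^*$ is the ML estimator of the vector of fixed effects of the reduced model (19). Likewise, by inserting $\hat{\boldsymbol\beta}^*$ and $W$ into equation (21), the BLUP for model (19) can be obtained as

$$\hat b_{1i}^* = \gamma(\xi_i - \bar\xi), \qquad (22)$$

where $\xi_i = \sum_j t_j y_{ij} - c\left(\sum_j t_j\right)\left(\sum_j y_{ij}\right)$, $\bar\xi = \sum_j t_j \bar y_j - c\left(\sum_j t_j\right)\left(\sum_j \bar y_j\right)$, $\bar y_j = \frac{1}{N}\sum_{i=1}^N y_{ij}$, $c = (\sigma^2/\sigma_0^{*2} + n)^{-1}$, $\gamma = (\sigma^2/\sigma_1^{*2} + \theta)^{-1}$ and $\theta = \sum_j t_j^2 - c\left(\sum_j t_j\right)^2$.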

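By inserting the two equations above into equation (20), the estimate of $\beta_1^{**}$ can be derived as

$$\hat\beta_1^{**} = \frac{\mathrm{cov}(\boldsymbol s, \boldsymbol u) - n c\,\bar t\,\mathrm{cov}(\boldsymbol s, \boldsymbol y)}{\mathrm{var}(\boldsymbol s)\left\{\sigma^2/\sigma_1^{*2} + \sum_j t_j^2 - c\left(\sum_j t_j\right)^2\right\}}.$$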
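The sketch below checks (20) to (22) and the closed form above on simulated balanced data. It treats the variance components of the reduced model as known, hypothetical values; in practice they are estimated when model (19) is fitted. The BLUPs from (22) are compared with a direct evaluation of $\sigma_1^{*2}\boldsymbol t^T V^{-1}(\boldsymbol y_i - \bar{\boldsymbol y})$. In the balanced case this equals the slope component of (21), because the GLS normal equations give $\boldsymbol t^T V^{-1}(\bar{\boldsymbol y} - X\hat{\boldsymbol\beta}^*) = 0$. The small Gauss-Jordan inverse is written only for this example.

```python
import random

def mean(x):
    return sum(x) / len(x)

def pcov(x, y):
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)

def inverse(a):
    """Gauss-Jordan inverse with partial pivoting (fine for small matrices)."""
    n = len(a)
    m = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]

def blup_slopes_eq22(Y, t, sigma2, s0_2, s1_2):
    """Equation (22): gamma * (xi_i - xi_bar); also returns c and theta."""
    n, N, T = len(t), len(Y), sum(t)
    c = 1.0 / (sigma2 / s0_2 + n)
    theta = sum(tj * tj for tj in t) - c * T ** 2
    gamma = 1.0 / (sigma2 / s1_2 + theta)
    xi = [sum(tj * yij for tj, yij in zip(t, yi)) - c * T * sum(yi) for yi in Y]
    ybar = [sum(yi[j] for yi in Y) / N for j in range(n)]
    xi_bar = sum(tj * yj for tj, yj in zip(t, ybar)) - c * T * sum(ybar)
    return [gamma * (x - xi_bar) for x in xi], c, theta

def blup_slopes_direct(Y, t, sigma2, s0_2, s1_2):
    """sigma1*^2 t^T V^{-1} (y_i - ybar), with V the marginal covariance of model (19)."""
    n, N = len(t), len(Y)
    V = [[(sigma2 if i == j else 0.0) + s0_2 + s1_2 * t[i] * t[j] for j in range(n)]
         for i in range(n)]
    W = inverse(V)
    tW = [sum(t[j] * W[j][k] for j in range(n)) for k in range(n)]   # row vector t^T W
    ybar = [sum(yi[k] for yi in Y) / N for k in range(n)]
    return [s1_2 * sum(tW[k] * (yi[k] - ybar[k]) for k in range(n)) for yi in Y]

random.seed(7)
t = [0.0, 1.0, 2.0, 3.0, 4.0]
sigma2, s0_2, s1_2 = 1.0, 2.0, 0.3            # hypothetical variance components
s = [random.choice([0, 1, 2]) for _ in range(300)]
Y = []
for si in s:
    b0, b1 = random.gauss(0, 1.4), random.gauss(0, 0.5)
    Y.append([1.0 + b0 + (0.5 + 0.05 * si + b1) * tj + random.gauss(0, 1.0) for tj in t])

b22, c, theta = blup_slopes_eq22(Y, t, sigma2, s0_2, s1_2)
bdir = blup_slopes_direct(Y, t, sigma2, s0_2, s1_2)
beta_eq20 = pcov(s, b22) / pcov(s, s)                        # equation (20)
u = [sum(tj * yij for tj, yij in zip(t, yi)) for yi in Y]
ysum = [sum(yi) for yi in Y]
beta_closed = ((pcov(s, u) - len(t) * c * mean(t) * pcov(s, ysum))
               / (pcov(s, s) * (sigma2 / s1_2 + theta)))
print(max(abs(a - b) for a, b in zip(b22, bdir)), beta_eq20, beta_closed)
```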

Note that the above derivations assume that the covariance between the random intercept and the random slope is zero; the main text discusses when this assumption is reasonable.

Conditional two-step approach

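The transformation matrix $A$ introduced for the CLMM can be constructed with the Gram-Schmidt process as $A = \left(\frac{\boldsymbol a_1}{\|\boldsymbol a_1\|}, \frac{\boldsymbol a_2}{\|\boldsymbol a_2\|}, \frac{\boldsymbol a_3}{\|\boldsymbol a_3\|}\right)$, where $\boldsymbol a_1 = \boldsymbol t - \frac{\langle \boldsymbol t, \mathbf 1_n\rangle}{n}\mathbf 1_n = \boldsymbol t - \bar t\,\mathbf 1_n$.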
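The Gram-Schmidt construction can be sketched as follows. The text defines only $\boldsymbol a_1$. For illustration, this sketch assumes that the further columns are obtained by orthogonalizing $\boldsymbol t^2, \boldsymbol t^3, \ldots$, which gives $n-1$ orthonormal columns that are orthogonal to $\mathbf 1_n$. These should match, up to sign, the orthogonal polynomials produced by R's `poly()` in the function cond of Section 5. The final check shows that $A^T$ maps any constant profile to zero. This is how the transformation removes $\beta_0$, $\beta_1 s_i$ and $b_{0i}$.

```python
import math

def clmm_contrasts(t):
    """Columns a_k/||a_k|| from (modified) Gram-Schmidt on t, t^2, ..., t^(n-1),
    after first projecting out the constant vector 1_n."""
    n = len(t)
    basis = [[1.0 / math.sqrt(n)] * n]          # normalized 1_n
    for power in range(1, n):
        v = [tj ** power for tj in t]
        for q in basis:                         # remove components along earlier vectors
            proj = sum(vi * qi for vi, qi in zip(v, q))
            v = [vi - proj * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return basis[1:]                            # drop 1_n/sqrt(n): n - 1 columns remain

def transform(A, y):
    """y* = A^T y, with A stored as a list of columns."""
    return [sum(aj * yj for aj, yj in zip(a, y)) for a in A]

t = [1.0, 2.0, 3.0, 4.0, 5.0]
A = clmm_contrasts(t)
y1 = [1.12, 1.14, 1.16, 1.20, 1.26]   # first subject of the example data in Section 5
print(transform(A, y1))
print(transform(A, [3.0] * len(t)))   # constant profile -> (numerically) zero vector
```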

In the second step of the conditional two-step approach (model (11)), the ML estimator of $\beta_1^{\Delta\Delta}$ can be expressed as

$$\hat\beta_1^{\Delta\Delta} = \frac{\sum_i (s_i - \bar s)\,\hat b_{1i}^{\Delta}}{\sum_i (s_i - \bar s)^2}, \qquad (23)$$

where $\hat b_{1i}^{\Delta}$ is the BLUP, which can be computed by the empirical Bayes approach as

$$\hat{\boldsymbol b}_i^{\Delta} = D Z^{*T} W^* (\boldsymbol y_i^* - X^*\hat{\boldsymbol\beta}^{\Delta}), \qquad (24)$$

where $\hat{\boldsymbol\beta}^{\Delta}$ is the ML estimator of the reduced model (10), and $X^*$ and $Z^*$ are the transformed design matrices of the fixed and random effects, respectively. Likewise, using the Woodbury identity, $W^*$ can be obtained as

$$W^* = \frac{1}{\sigma^2}\left(I_{n-1} - \gamma Z^* Z^{*T}\right), \qquad \gamma = \left(\sigma^2/\sigma_1^{*2} + n\,\mathrm{var}(\boldsymbol t)\right)^{-1}.$$

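By inserting $\hat{\boldsymbol\beta}^{\Delta}$ and $W^*$ into equation (24), the BLUP for model (10) can be obtained as

$$\hat b_{1i}^{\Delta} = \gamma\left(u_i - \frac{1}{N}\sum_i u_i - \bar t\,y_i + \frac{1}{N}\,\bar t\sum_i y_i\right),$$

with $y_i = \sum_j y_{ij}$ and $u_i = \sum_j y_{ij} t_j$.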

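By inserting the two equations above into equation (23), the ML estimator of the SNP effect in model (12) can be derived as

$$\hat\beta_1^{\Delta\Delta} = \frac{\mathrm{cov}(\boldsymbol s, \boldsymbol u) - \bar t\,\mathrm{cov}(\boldsymbol s, \boldsymbol y)}{\mathrm{var}(\boldsymbol s)\left(n\,\mathrm{var}(\boldsymbol t) + \sigma^2/\sigma_1^{*2}\right)}.$$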
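Compare this expression with the LMM estimate $\hat\beta_3 = \{\mathrm{cov}(\boldsymbol s, \boldsymbol u) - \bar t\,\mathrm{cov}(\boldsymbol s, \boldsymbol y)\}/\{n\,\mathrm{var}(\boldsymbol t)\,\mathrm{var}(\boldsymbol s)\}$. In the balanced case this gives $\hat\beta_1^{\Delta\Delta} = k\,\hat\beta_3$ with $k = n\,\mathrm{var}(\boldsymbol t)/\{n\,\mathrm{var}(\boldsymbol t) + \sigma^2/\sigma_1^{*2}\}$, so the CTS estimate is a shrunken version of the LMM estimate. The sketch below checks this numerically. It computes the CLMM BLUPs from the closed form for $\hat b_{1i}^{\Delta}$ and then applies (23). The variance components are treated as known, hypothetical values; in the R code of Section 5 they are estimated by lmer. As before, var and cov use the divisors $n$ and $N$.

```python
import random

def mean(x):
    return sum(x) / len(x)

def pcov(x, y):
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)

def clmm_blup_slopes(Y, t, sigma2, s1_2):
    """gamma * ((u_i - tbar*y_i) - its average over subjects)."""
    tbar = mean(t)
    nvar_t = sum((tj - tbar) ** 2 for tj in t)            # n * var(t)
    gamma = 1.0 / (sigma2 / s1_2 + nvar_t)
    d = [sum(tj * yij for tj, yij in zip(t, yi)) - tbar * sum(yi) for yi in Y]
    dbar = mean(d)
    return [gamma * (di - dbar) for di in d]

def beta_cts(Y, s, t, sigma2, s1_2):
    b = clmm_blup_slopes(Y, t, sigma2, s1_2)
    return pcov(s, b) / pcov(s, s)                         # equation (23)

def beta3_lmm(Y, s, t):
    u = [sum(tj * yij for tj, yij in zip(t, yi)) for yi in Y]
    ysum = [sum(yi) for yi in Y]
    return (pcov(s, u) - mean(t) * pcov(s, ysum)) / (len(t) * pcov(t, t) * pcov(s, s))

random.seed(3)
t = [0.0, 1.0, 2.0, 3.0, 4.0]
sigma2, s1_2 = 1.0, 0.3                       # hypothetical variance components
s = [random.choice([0, 1, 2]) for _ in range(250)]
Y = []
for si in s:
    b0, b1 = random.gauss(0, 1.0), random.gauss(0, s1_2 ** 0.5)
    Y.append([1.0 + b0 + (0.5 + 0.1 * si + b1) * tj + random.gauss(0, sigma2 ** 0.5)
              for tj in t])

nvar_t = sum((tj - mean(t)) ** 2 for tj in t)
k = nvar_t / (nvar_t + sigma2 / s1_2)        # shrinkage factor
u = [sum(tj * yij for tj, yij in zip(t, yi)) for yi in Y]
ysum = [sum(yi) for yi in Y]
beta_closed = (pcov(s, u) - mean(t) * pcov(s, ysum)) / (pcov(s, s) * (nvar_t + sigma2 / s1_2))
print(beta_cts(Y, s, t, sigma2, s1_2), beta_closed, k * beta3_lmm(Y, s, t))
```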

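In the second step of the conditional two-step approach (model (11)), the variance of $\hat\beta_1^{\Delta\Delta}$ can be expressed as

$$\mathrm{var}(\hat\beta_1^{\Delta\Delta}) = \frac{\mathrm{var}(\hat b_{1i}^{\Delta})}{\sum_i (s_i - \bar s)^2}, \qquad (25)$$

where $\mathrm{var}(\hat b_{1i}^{\Delta})$ follows from the variance-covariance matrix of the BLUP in the LMM,

$$\mathrm{var}(\hat{\boldsymbol b}_i^{\Delta}) = D Z^{*T} W^* Z^* D - D Z^{*T} W^* X^* \left(\sum_{i=1}^N X^{*T} W^* X^*\right)^{-1} X^{*T} W^* Z^* D.$$

For large $N$ (the second term is of order $1/N$), this can be expressed as

$$\mathrm{var}(\hat b_{1i}^{\Delta}) = \frac{\sigma_1^{*4}}{\sigma^2}\left(1 - \gamma\, n\,\mathrm{var}(\boldsymbol t)\right) n\,\mathrm{var}(\boldsymbol t). \qquad (26)$$

By inserting equation (26) into equation (25), the variance of the estimator of the SNP effect can be derived as

$$\mathrm{var}(\hat\beta_1^{\Delta\Delta}) = \frac{n\,\mathrm{var}(\boldsymbol t)\,\sigma_1^{*2}}{N\,\mathrm{var}(\boldsymbol s)\left(\sigma^2/\sigma_1^{*2} + n\,\mathrm{var}(\boldsymbol t)\right)}.$$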
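To compare the two approaches, the sketch below evaluates $\mathrm{var}(\hat\beta_3)$ for the LMM and $\mathrm{var}(\hat\beta_1^{\Delta\Delta}) = n\,\mathrm{var}(\boldsymbol t)\,\sigma_1^{*2}/[N\,\mathrm{var}(\boldsymbol s)\{\sigma^2/\sigma_1^{*2} + n\,\mathrm{var}(\boldsymbol t)\}]$. It uses hypothetical parameter values and sets $\sigma_1^{*2} = \sigma_1^2$. Comparing the closed forms of the two estimators shows that the CTS estimate is the LMM estimate shrunk by $k = n\,\mathrm{var}(\boldsymbol t)/\{n\,\mathrm{var}(\boldsymbol t) + \sigma^2/\sigma_1^{*2}\}$. Under these formulas the CTS variance is $k^2$ times the LMM variance, so for fixed variance components the two Wald statistics coincide. This is consistent with the (essentially) absent power loss in the balanced case discussed in Section 3. On this reading, any difference in practice would come from estimating the variance components.

```python
def n_var_t(t):
    """n * var(t), i.e. var with divisor n multiplied by n."""
    tbar = sum(t) / len(t)
    return sum((tj - tbar) ** 2 for tj in t)

def var_beta3_lmm(sigma2, s1_2, t, var_s, N):
    nv = n_var_t(t)
    return (sigma2 + s1_2 * nv) / (N * nv * var_s)

def var_beta_cts(sigma2, s1_2, t, var_s, N):
    nv = n_var_t(t)
    return nv * s1_2 / (N * var_s * (sigma2 / s1_2 + nv))

t = [0.0, 1.0, 2.0, 3.0, 4.0]        # n var(t) = 10
sigma2, s1_2 = 1.0, 0.5              # hypothetical variance components
var_s, N = 0.5, 1000                 # 2p(1-p) for allele frequency p = 0.5 under HWE

v_lmm = var_beta3_lmm(sigma2, s1_2, t, var_s, N)
v_cts = var_beta_cts(sigma2, s1_2, t, var_s, N)
k = n_var_t(t) / (n_var_t(t) + sigma2 / s1_2)
print(v_lmm, v_cts, k ** 2 * v_lmm)
```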

2. Probit regression to estimate power

In the mixed model framework, the distributions of the Wald, t- and F-test statistics are generally known only under the null hypothesis¹. Exhaustive simulations are considered the most accurate way to compute statistical power. However, fitting many LMMs is time consuming, and so is the simulation-based estimation of the power curve: for each value on a grid of effect sizes, data must be simulated and the models refitted. The proportion of times that a SNP is declared significant gives the empirical type I error rate (simulation under the null hypothesis) or the power (simulation under the alternative hypothesis). For an exhaustive simulation study, this approach demands an excessive amount of computation time.

We propose a faster approach to power calculations, based on a probit model. Helms² demonstrated via simulations that, under the alternative, the distribution of the general F-test statistic for $H_0: \boldsymbol\xi \equiv L\boldsymbol\beta - \boldsymbol\xi_0 = \boldsymbol 0$ versus $H_A: \boldsymbol\xi \neq \boldsymbol 0$ can be approximated by a noncentral F-distribution with noncentrality parameter

$$\delta = \boldsymbol\xi^T\left[L\left(\sum_i X_i^T V_i^{-1} X_i\right)^{-1} L^T\right]^{-1}\boldsymbol\xi.$$
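For a single coefficient, as in the test of the SNP × time interaction, this expression simplifies. For illustration, take $L = (0, 0, 0, 1)$, which selects $\beta_3$ when the fixed effects are ordered as the columns $\mathbf 1_n, \boldsymbol t, s_i\mathbf 1_n, s_i\boldsymbol t$ of $X_i$, and take $\xi_0 = 0$, so that $\xi = \beta_3$:

$$\delta = \frac{\beta_3^2}{\left[\left(\sum_i X_i^T V_i^{-1} X_i\right)^{-1}\right]_{44}} = \frac{\beta_3^2}{\mathrm{var}(\hat\beta_3)},$$

so $\sqrt{\delta}$ is simply the effect size divided by the standard error of its estimator.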

From this it follows that the t-test for $H_0: \beta_3 = 0$ versus $H_A: \beta_3 = \theta$ has, under $H_A$, a noncentral t-distribution with noncentrality parameter $\sqrt{\delta}$, as described by Littell³. In GWAS settings the number of degrees of freedom is large, and the (noncentral) t-distribution can be approximated by a normal distribution. Consequently, the power curve, which plots statistical power against effect size, has approximately the shape of a cumulative normal distribution function. We observed the same shape for the approximate procedures. In our simulations, a grid of equally spaced $\beta_3$-values is chosen on the interval $[0, \beta_{3,\max}]$, where $\beta_{3,\max}$ is the smallest value for which the power is practically 100%. One thousand grid values were chosen; for each value, one data set was simulated and the models under consideration were fitted. The resulting p-values were dichotomized according to the condition p < 0.05, giving $p_{\mathrm{bin}}$. Finally, a probit model for $p_{\mathrm{bin}}$ as a function of $\beta_3$ was fitted.
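The probit shortcut can be sketched as follows. To keep the example self-contained, it does not refit a mixed model for each grid value. Instead, it draws each estimate $\hat\beta_3$ from its approximate normal sampling distribution using a hypothetical standard error. It then computes a two-sided Wald p-value, dichotomizes it at 0.05, and fits $P(p_{\mathrm{bin}} = 1) = \Phi(a + b\,\beta_3)$ by Fisher scoring. In a real application, the p-values would come from the fitted models (LMM, CTS and so on). A standard GLM routine with a probit link, such as R's `glm` with `family = binomial(link = "probit")`, could replace the hand-written loop. The fitted curve is compared with the normal-approximation power $\Phi(\beta_3/\mathrm{se} - z_{0.975}) + \Phi(-\beta_3/\mathrm{se} - z_{0.975})$.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()

def fit_probit(x, y, iters=100, tol=1e-10):
    """Fisher scoring for P(y = 1 | x) = Phi(a + b * x); returns (a, b)."""
    a = b = 0.0
    for _ in range(iters):
        i00 = i01 = i11 = u0 = u1 = 0.0
        for xi, yi in zip(x, y):
            eta = a + b * xi
            mu = min(max(STD_NORMAL.cdf(eta), 1e-12), 1.0 - 1e-12)
            dens = STD_NORMAL.pdf(eta)
            g = dens / (mu * (1.0 - mu))        # score w.r.t. eta is (y - mu) * g
            w = dens * g                         # Fisher weight phi^2 / (mu (1 - mu))
            u0 += (yi - mu) * g
            u1 += (yi - mu) * g * xi
            i00 += w
            i01 += w * xi
            i11 += w * xi * xi
        det = i00 * i11 - i01 * i01
        da = (i11 * u0 - i01 * u1) / det
        db = (i00 * u1 - i01 * u0) / det
        a, b = a + da, b + db
        if abs(da) + abs(db) < tol:
            break
    return a, b

random.seed(2024)
alpha, se = 0.05, 0.1                      # se of beta3-hat: hypothetical value
z = STD_NORMAL.inv_cdf(1 - alpha / 2)
beta_max = 0.6                             # beta_max / se = 6, power practically 100%
grid = [beta_max * k / 999 for k in range(1000)]

p_bin = []
for beta in grid:
    est = random.gauss(beta, se)                    # one simulated estimate per grid value
    p = 2 * STD_NORMAL.cdf(-abs(est / se))          # two-sided Wald p-value
    p_bin.append(1 if p < alpha else 0)

a, b = fit_probit(grid, p_bin)

def fitted_power(beta):
    return STD_NORMAL.cdf(a + b * beta)

def normal_approx_power(beta):
    return STD_NORMAL.cdf(beta / se - z) + STD_NORMAL.cdf(-beta / se - z)

for beta in (0.1, 0.2, 0.3):
    print(beta, round(fitted_power(beta), 3), round(normal_approx_power(beta), 3))
```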

3. Discussion on the power loss of the CTS approach

We observed that the behavior of the CTS approach is quite similar to that of the CLMM. That the CLMM approach shows some loss of power might be surprising given the results in Section 4.2 of Verbeke et al.⁴, where it is argued that, from a Bayesian viewpoint, the CLMM implies no loss of information. However, this result relies on the assumption that the random intercept has a flat prior, whereas we have made the classical assumption of joint normality for the random intercept and slope. The following reasoning shows why there is (essentially) no power loss in the balanced case, whereas in the unbalanced case a power loss must be expected in general.

Applying the results in Section 4.3 of the same paper to the current simplified situation gives

$$p(\boldsymbol y \mid \boldsymbol b_0, \beta_3, \boldsymbol b_1, \sigma^2) = p(\boldsymbol y^* \mid \beta_3, \boldsymbol b_1, \sigma^2)\; p(\bar{\boldsymbol y} \mid \boldsymbol b_0, \beta_3, \boldsymbol b_1, \sigma^2),$$

with $\boldsymbol y$ the stacked vector of responses, $\boldsymbol b_0$ ($\boldsymbol b_1$) the stacked vector of random intercepts (slopes), $\boldsymbol y^*$ the stacked vector of the $\boldsymbol y_i^*$ values and $\bar{\boldsymbol y}$ the stacked vector of profile means. For each profile mean the following result holds:

$$\bar y_i \mid b_{0i}, b_{1i} \sim N\!\left(\beta_0 + \beta_1 s_i + \beta_2 \bar t_i + \beta_3 s_i \bar t_i + b_{0i} + b_{1i}\bar t_i,\; \sigma^2/n_i\right),$$

with $\bar t_i$ the average time and $n_i$ the number of observations for the $i$th subject. In the balanced case, one can shift the time origin such that $\bar t_i \equiv \bar t = 0$ without changing anything in the estimation of the longitudinal part of the model. This implies that the second part of the likelihood (the part for $\bar{\boldsymbol y}$) no longer contains any information on $\beta_3$. That a minimal loss of information for the CTS approach was seen in some of the simulations for the balanced case has to do with the estimation of the variance parameters. Indeed, the variance parameters of the LMM are present in both parts of the likelihood and must therefore be estimated better with the LMM than with either part separately. In the unbalanced case, however, no change of origin can remove $\beta_3$ from that part.
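In formula form, consider a shift of origin that makes $\bar t_i = 0$ for every subject. Under that shift, the mean in the distribution of the profile means given above reduces to

$$E(\bar y_i \mid b_{0i}, b_{1i}) = \beta_0 + \beta_1 s_i + b_{0i},$$

which involves neither $\beta_3$ nor $b_{1i}$. When the $\bar t_i$ differ between subjects, as in the unbalanced case, no single shift can make all of them zero, so the terms $\beta_3 s_i \bar t_i$ remain.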

Hence, a loss of power is expected with the CLMM, and therefore also with the CTS approach, but, as the simulations show, this loss is often minimal.

4. Supplementary Figures

Supplementary Figure 1: MAR case, scenario 5. Approximation of the CTS approach compared to the CLMM.

Supplementary Figure 2: Flowchart describing the practical use of the CTS approach.

Supplementary Figure 3: Time needed to analyze 1 million SNPs using the CTS approach combined with the semi-parallel regression (left panel). Ratio of the computation times of the function lmer and of the CTS approach combined with the semi-parallel regression, depending on the number of longitudinal observations (right panel).

Supplementary Figure 4: 100 SNPs from the BMD data. On the x-axis, the p-values for the SNP × time interaction effect from the mixed model assuming uncorrelated errors; on the y-axis, the corresponding p-values from the model assuming a continuous autoregressive structure for the measurement error.

Supplementary Figure 5: Balanced case. Performance of the approximate procedures when a time-varying covariate is included in the linear mixed model.

Supplementary Figure 6: Unbalanced case. Performance of the approximate procedures when a time-varying covariate is included in the linear mixed model.

5. R code – an example of applying the CTS approach

The data are arranged in the so-called "long format", with one row per observation. The SNP data are stored in a matrix S with N rows and ns columns; the size of ns depends on the available RAM. The first few rows of the phenotype data (mydata) look as follows:

| id | y | Time |
|---|---|---|
| 1 | 1.12 | 1 |
| 1 | 1.14 | 2 |
| 1 | 1.16 | 3 |
| 1 | 1.2 | 4 |
| 1 | 1.26 | 5 |
| 2 | 0.95 | 1 |
| 2 | 0.83 | 2 |
| 2 | 0.65 | 3 |
| 2 | 0.49 | 4 |
| 2 | 0.34 | 5 |

The function cond below transforms the data for the conditional linear mixed model. It is based on the SAS macro provided by Verbeke et al.⁴ in "Conditional linear mixed models" (2001).

Variable "vars" is a vector with the names of the response and of all time-varying covariates that should be transformed.

```r
cond = function(data, vars) {
  data = data[order(data$id), ]

  ### delete missing observations
  data1 = data[!is.na(data$y), ]

  ## do the transformations
  ids = unique(data1$id)
  transdata = NULL
  for (i in ids) {
    xi = data1[data1$id == i, vars]
    xi = as.matrix(xi)
    # subjects with a single observation carry no within-subject information and are skipped
    if (nrow(xi) > 1) {
      A = cumsum(rep(1, nrow(xi)))
      # orthonormal polynomial columns, all orthogonal to the constant vector
      A1 = poly(A, degree = length(A) - 1)
      transxi = t(A1) %*% xi
      transxi = cbind(i, transxi)
      transdata = rbind(transdata, transxi)
    }
  }
  transdata = as.data.frame(transdata)
  names(transdata) = c("id", vars)
  row.names(transdata) = 1:nrow(transdata)
  return(transdata)
}
```

The code below applies the conditional two-step approach. First, the data are transformed using the function cond. Next, the reduced conditional linear mixed model is fitted and the random slopes are extracted. Finally, the semi-parallel regression is performed.

```r
library(lme4)  # provides lmer() and ranef()

# transform data for the conditional linear mixed model
trdata = cond(mydata, vars = c("Time", "y"))

# fit the reduced model and extract random slopes
mod2 = lmer(y ~ Time - 1 + (Time - 1 | id), data = trdata)
blups = ranef(mod2)$id
blups = as.numeric(blups[, 1])

# perform the second step using semi-parallel regression;
# the rows of S must be in the same subject order as rownames(ranef(mod2)$id)
n = length(blups)
X = matrix(1, n, 1)
U1 = crossprod(X, blups)
U2 = solve(crossprod(X), U1)
ytr = blups - X %*% U2                               # centered BLUPs
ns = ncol(S)
U3 = crossprod(X, S)
U4 = solve(crossprod(X), U3)
Str = S - X %*% U4                                   # centered SNP columns
Str2 = colSums(Str ^ 2)
b = as.vector(crossprod(ytr, Str) / Str2)            # SNP effects
sig = (sum(ytr ^ 2) - b ^ 2 * Str2) / (n - 2)        # residual variances
err = sqrt(sig * (1 / Str2))                         # standard errors
p = 2 * pnorm(-abs(b / err))                         # p-values
```

References

1. G. Verbeke, G. Molenberghs. Linear Mixed Models for Longitudinal Data. New York: Springer, 2009.

2. R.W. Helms. Intentionally incomplete longitudinal designs: I. Methodology and comparison of some full span designs. Statistics in Medicine 1992; 11(14–15):1889–1913.

3. R.C. Littell. SAS for Mixed Models. North Carolina: SAS Institute, 2006.

4. G. Verbeke, B. Spiessens, E. Lesaffre. Conditional linear mixed models. The American Statistician 2001; 55(1):25–34.