
advertisement

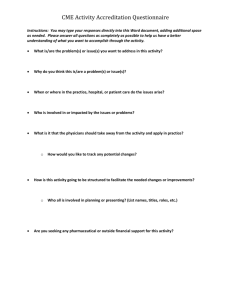

Continuing Medical Education outcomes associated with e-mailed synopses of research-based clinical information: A research proposal

Roland M. Grad, Pierre Pluye, Alain C. Vandal, Jonathan Moscovici, Bernard Marlow, SED Shortt, L Hill, Gillian Bartlett, I Goldstine

Author Note

Roland Grad, Department of Family Medicine, McGill University
Pierre Pluye, Department of Family Medicine, McGill University
Alain Vandal and Jonathan Moscovici, Department of Mathematics and Statistics, McGill University
Bernard Marlow, College of Family Physicians of Canada
Sam Shortt, Canadian Medical Association
Lori Hill, College of Family Physicians of Canada
Gillian Bartlett, Department of Family Medicine, McGill University
Ian Goldstine, College of Family Physicians of Canada

Correspondence concerning this grant proposal should be addressed to Roland Grad, Department of Family Medicine, McGill University, Montreal. Email: roland.grad@mcgill.ca

Cite as follows: Grad, R.M., Pluye, P., Vandal, A.C., Moscovici, J., Marlow, B., Shortt, S.E.D., Hill, L., Bartlett, G., & Goldstine, I. (2012). Continuing Medical Education outcomes associated with e-mailed synopses of research-based clinical information: A research proposal. McGill Family Medicine Studies Online, 07:e06.
Archived by WebCite®

Abstract

Abbreviations
IAM: Information Assessment Method
CMA: Canadian Medical Association

Keywords
Knowledge Translation, Primary Health Care, Patient Health Outcomes, Questionnaire Design, Reflective Learning, Continuing Medical Education, Mixed Methods Research

Objective
By the very nature of their work, physicians must update their clinical knowledge. In part, this lifelong learning process happens through participation in brief individual e-learning activities. But how can we improve this type of continuing medical education (CME) activity to enhance the use of clinical research in patient care? And are the patient health benefits associated with these CME activities observable?
We address these questions in relation to one specific type of accredited CME: the reading of e-mailed synopses of selected peer-reviewed articles, followed by the completion of a short questionnaire called the Information Assessment Method (IAM). The IAM questionnaire was developed with knowledge user partners at the College of Family Physicians of Canada, the Royal College of Physicians and Surgeons and the Canadian Medical Association (CMA). Since September 2006, about 4,500 CMA members have submitted more than one million IAM questionnaires linked to e-mailed synopses. The popularity of IAM-related CME programs has also been observed in other work. For example, in a project with the Canadian Pharmacists Association, more than 5,500 members of the College of Family Physicians used IAM in 2010 to evaluate e-mailed content from ‘e-Therapeutics+’, a reference book1. Our prior work suggests that the response format of the IAM questionnaire influences the willingness of physicians to participate in CME programs. Importantly, this same work suggests that some response formats may stimulate physicians to reflect more carefully on what they have just read. However, no research has studied these issues, or whether IAM-related CME programs lead to measurable improvements in the health of specific patients. Our overarching objective, debated and formulated in partnership with knowledge users, is to optimize the effectiveness of questionnaire-based CME, and to scrutinize patient health outcomes associated with IAM-related CME programs. In so doing, we will address the following research questions:

Q1. Is physician participation in CME programs affected by the IAM response format?
Q2. Is reflective learning affected by the IAM response format?
Q3. Are the patient health outcomes reported through the IAM questionnaire observable in longitudinal follow-up?
Deliverables: Answering the first two questions will allow knowledge users to implement IAM questionnaires that optimize participation and reflection in national programs of continuing education in the health professions. Reflecting on email alerts and the subsequent use of research-based information can improve health outcomes for specific patients. Answering the third question will reveal whether family physicians observe patient health outcomes linked to these programs. This will be the first systematic study using clinical examples to illustrate how reflection on synopses of research can be linked to health benefits for specific patients. It will set the stage for future work to objectively measure patient health outcomes. These questions and deliverables have been debated and defined in partnership with decision makers from the College of Family Physicians and the CMA, in line with CIHR’s definition of integrated Knowledge Translation. The term integrated Knowledge Translation describes a different way of doing research, in which researchers and research users work together to shape the research process: starting with collaboration on setting the research questions, deciding the methodology, being involved in data collection and tools development, interpreting the findings and helping disseminate the research results2.

Background

The Information Assessment Method

IAM is a tool used by health professionals to document the value of clinical information.
IAM is based on a theoretical model from information science called the Acquisition-Cognition-Application model, and its questions follow the phases of a process of self-assessing the value of clinical information. In the context of receiving email alerts, the Acquisition-Cognition-Application model works as follows: health professionals (1) filter emailed information according to relevance and choose to read a specific alert (Acquisition), (2) absorb, understand and integrate the information in the alert (Cognition), and then (3) may use this newly understood and cognitively processed information (Application). Our CIHR-funded research has provided empirical data in support of the Acquisition-Cognition-Application model for structuring IAM to study the value of clinical information retrieved in primary care3. In addition, when IAM was linked to email alerts of research-based clinical information, we documented its popularity with practitioners. We also assessed two sources of validity evidence: content validity, and internal structure validity based on a Principal Components Analysis1, 4. Our recent literature review suggested a final phase to the Acquisition-Cognition-Application model, namely ‘Outcomes’5. In this same context of receiving email alerts, we operationalized these outcomes as ‘expected patient health benefits’ resulting from the use of information for a specific patient. As such, the current version of IAM is based on this evolved Acquisition-Cognition-Application-Outcomes model. IAM operationalizes this model, and documents the value of delivered clinical information in four constructs: (1) clinical relevance for a specific patient, (2) cognitive impact (10 item response categories), (3) any use of this information for a patient (seven item response categories), and (4) if used, any expected health benefits (three item response categories; see appendix two).
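The following minimal sketch represents one IAM rating as a record, to make the four constructs concrete. Two details come from the text: the item counts (10, 7 and 3), and the rule that health benefits are asked about only when information was used. Everything else is an illustrative assumption and does not describe the actual IAM data schema. That includes the field names, the yes/no form of the relevance construct, and the idea that any checked use item denotes actual use.

```python
from dataclasses import dataclass, field

# Item counts per construct, as stated in the text; item wording is not reproduced.
N_COGNITIVE_ITEMS = 10
N_USE_ITEMS = 7
N_BENEFIT_ITEMS = 3


@dataclass
class IamRating:
    """Hypothetical record of one IAM rating of one synopsis (illustrative only)."""
    synopsis_id: str
    relevant_to_patient: bool                             # construct 1 (assumed yes/no)
    cognitive_items: set = field(default_factory=set)     # construct 2: indices 0..9
    use_items: set = field(default_factory=set)           # construct 3: indices 0..6
    benefit_items: set = field(default_factory=set)       # construct 4: indices 0..2

    def __post_init__(self):
        for items, n in ((self.cognitive_items, N_COGNITIVE_ITEMS),
                         (self.use_items, N_USE_ITEMS),
                         (self.benefit_items, N_BENEFIT_ITEMS)):
            if any(not 0 <= i < n for i in items):
                raise ValueError(f"item index out of range 0..{n - 1}")
        # Construct 4 is asked only "if used"; assumes any checked use item means use.
        if self.benefit_items and not self.use_items:
            raise ValueError("expected health benefits recorded without any reported use")

    def n_cognitive_impacts(self) -> int:
        """Number of cognitive-impact types checked for this synopsis."""
        return len(self.cognitive_items)


rating = IamRating("poem-001", True, cognitive_items={0, 3}, use_items={1}, benefit_items={2})
print(rating.n_cognitive_impacts())  # 2
```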
Literature Review

We critically reviewed the literature on the assessment of synopses of clinical research delivered as email alerts. The review covered the health sciences and four other disciplines: communication, information studies, education, and knowledge translation. We found methods for the global evaluation of educational programs, but no tool such as IAM that permits systematic assessment of the value of one specific synopsis.6,5 Therefore, IAM is still unique. Our CIHR-funded research has demonstrated the validity of IAM in an email context, and shown how IAM can stimulate physician reflection.1, 7 Other research emphasizes the importance of IAM-like guidance during reflection8. Even so, early on we did not anticipate how answers to the IAM questionnaire could be influenced by the response format.9 Outside of the health professions, limited research has shown that respondents endorse more options in the forced-choice format than in the ‘check all that apply’ format.10,11 Moreover, Smyth et al. (2006) observed that college students took longer to answer in the forced-choice format. Without explicitly studying reflection, they explained the extra time taken as (1) time to read and check each item, and (2) time to reflect on each choice. In addition, in the currently used ‘check all that apply’ format, respondents may feel they have reported “enough” when they check just one item.12-14 In contrast, a forced-choice format requires a response for each item, discouraging a strategy of minimal response.15 This suggests that a forced-choice format would be preferable for CME, as it should maximize reflection. No studies to date have examined the patient health benefits associated with the delivery of clinical information in the form of email alerts.
However, printed educational materials (exemplar: clinical practice guidelines) delivered to health care professionals can have beneficial effects on process outcomes related to health care.16 Trials comparing printed educational materials to no intervention observed an absolute risk difference of +4.3% on categorical process outcomes (e.g., x-ray requests, prescribing and smoking cessation activities). They also observed a relative risk difference of +13.6% on continuous process outcomes (e.g., medication change, x-ray requests per practice). Thus, there are indications that synopses of clinical research delivered as email alerts may lead to health benefits for patients, if physicians carefully reflect on them. A July 2011 update of our 2010 published literature review revealed only one new relevant study. In a randomized controlled trial, increased awareness of or familiarity with the literature was demonstrated among a large sample of nephrologists and scientists receiving email alerts17. However, study participants did not use a reflective tool like IAM to rate these email alerts.

Overview of Research Proposal

Our proposal is structured around the ‘Continuing Medical Education (CME) outcomes framework’18. The CME framework defines seven levels of outcome, from physician participation at one end of the spectrum to downstream improvements in the health of communities at the other. The extent of physician participation in CME (level one) is an important outcome for the knowledge users on the team, as participation precedes all subsequent outcomes. In addition, accredited programs seek to promote reflective learning as the basis for continuing education.19 This is a level three outcome in the CME outcomes framework. Finally, understanding how physician reflection on synopses of clinical research can be associated with observable health benefits for patients will provide new evidence to inform and support CME.
This is level six in the CME outcomes framework. It fits within the CIHR Knowledge-to-Action cycle at two points: ‘Monitor knowledge use’ and ‘Evaluate outcomes of knowledge use’. The following paragraphs elaborate on our decision to study these three particular CME outcomes.

Level 1: Is physician participation in CME programs affected by the IAM response format? To inform the present proposal, we conducted preliminary work on this level one outcome. We examined the participation of CMA members in one IAM-based CME program. In 2008, two different IAM response formats were used at different times on cma.ca. This resulted in the following three data series:

Series 1 (henceforth S1), from September 8, 2006 to February 17, 2008: IAM was used by 1,324 practicing family physicians to submit 62,928 synopsis ratings. Physicians used a response format that asked them to ‘Check all that apply’. Family physicians reported an average of 1.4 types of cognitive impact (range 1-10) per synopsis.

Series 2 (henceforth S2), from February 20 to April 2, 2008: IAM was used by 965 practicing family physicians to submit 10,316 synopsis ratings. To encourage physicians to reflect on each item of cognitive impact, a forced ‘Yes’ or ‘No’ response format was implemented. At the same time, three new questions were added to IAM. Family physicians reported an average of 3.1 types of cognitive impact (range 1-10) per synopsis (see appendix 3). However, the number of synopsis ratings received at cma.ca dropped by 25%, from about 4,000 to 3,000 per week. Additionally, we applied a Cox model to these data, using the response format as a time-dependent covariate. In this model, the hazard ratio of rating a synopsis in S2 relative to S1 was estimated to be 0.56.
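The pilot reports two different measures of the drop in participation: a raw change in weekly rating volume, and a hazard ratio from the Cox model. The short sketch below shows how each figure quoted in the text maps to a percentage. It is plain arithmetic on the published numbers, not a re-analysis of the pilot data, and the function names are illustrative. Because the two measures answer different questions (how many ratings arrived per week versus the instantaneous rate at which pushed synopses were rated), they need not agree.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative means a drop)."""
    return 100.0 * (after - before) / before


def hazard_ratio_as_percent_change(hr: float) -> float:
    """A hazard ratio HR (S2 relative to S1) is a (HR - 1) x 100 % change in the hazard."""
    return 100.0 * (hr - 1.0)


# Figures quoted in the pilot description.
weekly_s1, weekly_s2 = 4000, 3000   # approximate synopsis ratings per week
hr_s2_vs_s1 = 0.56                   # Cox estimate, format as a time-dependent covariate
impacts_s1, impacts_s2 = 1.4, 3.1    # mean types of cognitive impact per synopsis

print(f"weekly ratings:   {percent_change(weekly_s1, weekly_s2):+.0f}%")         # -25%
print(f"hazard of rating: {hazard_ratio_as_percent_change(hr_s2_vs_s1):+.0f}%")  # -44%
print(f"cognitive impacts per synopsis: {impacts_s2 - impacts_s1:+.1f}")          # +1.7
```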
Series 3 (henceforth S3), from April 3, 2008 to present: In S2, the hazard of rating a synopsis dropped by 44% compared with S1. Given this drop, our principal knowledge user and one CMA decision-maker recommended that IAM revert to a ‘Check all that apply’ response format. After the return to ‘Check all that apply’ in April 2008, the number of synopsis ratings received at cma.ca increased to 4,000 per week. Participation has grown since April 2008, and at present about 5,000 IAM ratings of research-based synopses are submitted each week.

Interpretation: Our pilot work revealed a striking increase in the number of checked types of cognitive impact (‘Yes’ responses) per synopsis when physicians used a forced-choice ‘Yes-No’ response format in S2. At the same time, a 25% drop in participation was observed. However, we cannot know how much of this drop was due to the burden of the forced-choice response format, because additional questions were introduced at the same time.20 Thus, our pilot work leads us to the following speculative interpretation of these observations (to be tested in the proposed research): the forced-choice response format stimulates reflection more than ‘check all that apply’, while any increased reflection comes at the cost of the participation of some physicians. In summary, the level 1 study is needed to determine how participation is affected by the response format.

Level 3: Is reflective learning affected by the IAM response format? With respect to the level 3 outcome in the CME framework, IAM can stimulate reflective learning when a physician works through all questionnaire items in sequence.
This view is supported by empirical evidence: we previously observed how IAM can document reflective learning among physicians interviewed about their synopsis ratings.7 In educational psychology, Mann and colleagues defined the concept of ‘reflection’ in line with the pioneering work of Dewey and Schön. Their definition is “a deliberate reinvestment of mental energy aimed at exploring and elaborating one's understanding of the problem one has faced (or is facing)”8. Reflection is a learning process that involves questioning and assessing experiences. Specifically, reflection can transform practical experience into education by helping clinicians to identify knowledge gaps, and by enabling critical thinking, self-assessment, problem solving and professionalism. Therefore, reflection is considered an essential skill for CME21. In the proposed research, we will explore whether reflective learning can be enhanced by a forced check IAM response format.

Level 6: Are the patient health outcomes reported through the IAM questionnaire observable in longitudinal follow-up? With regard to the level 6 outcome in the CME framework, the fourth and final question in the IAM questionnaire documents expected patient health benefits associated with the use of clinical information delivered by email. Items in question four were derived from literature reviews and interviews with physicians in CIHR-funded research1, 5, 22-24. Through longitudinal follow-up, we will document cases of observed health benefit linked to emailed synopses called ‘POEMs’. Focusing on research relevant to primary health care, POEMs (Patient Oriented Evidence that Matters) have been emailed to physicians since 2001. This level 6 component is crucial for designing future studies of patient health benefits associated with synopses of clinical research. POEMs cover a multitude of diseases and issues, given that health professionals in primary care confront a wide spectrum of clinical problems.
For example, the table below shows the diversity of health processes and outcomes that could be influenced by the application of clinical information from POEMs to specific patients. These include systolic blood pressure in patients with type 2 diabetes, prescribing of montelukast in children with asthma, and screening mammography among women younger than 50. In level 6, we will scrutinize reported health benefits that are actually observed and documented, e.g. in the medical record or administrative databases. This will inform plans for future research.

Examples of titles of POEMs (synopses of clinical research)
Blood pressure target of 120 no better than 140 in Type 2 Diabetes Mellitus (ACCORD)
Inhaled steroids more effective than montelukast in children with asthma
Exercise good for knee osteoarthritis symptoms and function
US Preventive Services Task Force: No routine mammography for women younger than 50 years
Management options equally effective for simple urinary tract infection

Knowledge Translation Approach: Our proposal addresses key issues in knowledge translation (KT) from two perspectives.

(1) We propose to improve a widely used KT tool (namely IAM) by optimizing physician participation in national CME programs and by promoting more reflection by physicians on important clinical research. Physicians asked to use IAM with a forced-choice response format may reflect more but participate less. For this reason, our knowledge users seek the response format that optimally balances these CME outcomes.

(2) We will increase understanding of how research knowledge is applied to practice in the complex world of primary care. Specifically, we will document observations of patient health benefit that can be objectively measured in future research.
Importance of This Research: The Commission on the Education of Health Professionals for the 21st Century recommends that physicians develop competency in evidence-based practice, continuous quality improvement and the use of new informatics. These competencies are stimulated by IAM-based CME programs.25 Suppose reflection on email alerts of research-based information leads to positive changes in the health of specific patients in primary care. In that case, further developing IAM-based CME programs for all health professionals will contribute to improvements in the health of communities. Presently, thousands of Canadian family physicians use IAM as a core component of their CME. For example, members of the College of Family Physicians can earn 60% of required credits through IAM-based CME programs. Our findings should enhance efforts to develop similar programs for other types of health professionals. Physician participation and reflection on synopses of research-based information are essential CME outcomes if CME is to support improvements in practice. To assess the contribution of IAM-based CME programs to the health of the population, we need to know whether patient health outcomes reported through the IAM questionnaire can be observed in longitudinal follow-up. Provided that patient health outcomes can be observed, for example in the medical record, we will use the results of the proposed work to objectively measure health benefits in future research. Our integrated KT approach will facilitate use of the new knowledge we produce. Knowledge user applicants have helped to define our research questions, and they have a proven record of implementing our research findings in national CME programs.

Research Plan

Research questions: Our research plan is designed to answer the following research questions, linked to levels one, three and six of the CME outcomes framework:

Q1. Is physician participation affected by different IAM response formats? (Level one CME outcome)
Q2. Is reflective learning affected by the IAM response format? (Level three CME outcome)
Q3. Are the patient health outcomes reported through the IAM questionnaire observable in longitudinal follow-up? (Level six CME outcome)

Answers to the level one and three questions will help optimize IAM, a widely used tool for evaluating clinical information in national CME programs. Our level six question is highly relevant to KT. Some might consider IAM an intervention for promoting behaviour change via the so-called mere measurement effect26. However, we are not attempting to assess change in clinician behaviour. Rather, we are trying to better understand IAM ratings in terms of physician reports of observed health benefits for specific patients.

With respect to Q1, we hypothesize that the heavier the burden imposed by the response format, the lower participation will be. Two response formats will be compared quantitatively against the popular ‘Check all that apply’ format in terms of physician participation (see appendix four). With respect to Q2, we anticipate that reflection is more highly stimulated by a response format that systematically promotes thinking about each item within the IAM questionnaire. Thus, we will examine the effect of response format on reflective learning using a Reflective Learning Framework. With respect to Q3, we will qualitatively explore self-reports made by physicians regarding the health benefits of synopses observed for specific patients.

Q1. Level One (Participation)

Hypotheses: We hypothesize that participation will be negatively affected under a response format that:
(i) forces a check for each item, i.e., format B versus formats A and C described below;
(ii) provides a greater number of response choices than ‘Check all that apply’, i.e., formats B and C versus A; and
(iii) has no default response, i.e., format B versus C.

These hypotheses will be investigated by comparing participation across three formats (note: for space reasons, only two IAM items are displayed in the examples below).

Response Format A (presently linked to POEMs® on cma.ca): ‘Check all that apply’ requires only one check. It is unclear whether the absence of a check is equivalent to a “NO” response.
My practice is (will be) changed and improved
I learned something new
(…)

Response Format B: Forced check. The respondent must answer each item, as nothing is pre-checked.
Response options for each item: Yes / No / Possibly
My practice is (will be) changed and improved
I learned something new
(…)

Response Format C (presently linked to Highlights from e-Therapeutics+ and e-Gene Messengers): ‘No’ is pre-checked, thus reducing the burden of responding compared to format B. Compared to A, format C encourages respondents to read each item.
Response options for each item: Yes / No (pre-checked) / Possibly
My practice is (will be) changed and improved
I learned something new
(…)

Participants: Following ethics approval, 120 family physicians will be recruited online, with the assistance of a knowledge user at the CMA (Dr. Shortt). Our previous research indicates that recruitment on this scale is feasible. Eligible family physicians will be those who are in active practice and have rated 50 or more POEMs in the past year using IAM. The nominated co-PI will oversee the process of obtaining informed consent. Synopses will be the actual POEMs emailed on weekdays to CMA members, with a time delay of several weeks. Immediately following informed consent, the CMA will disconnect participants from email delivery of POEMs.
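The three formats differ mainly in how a blank item is interpreted. The sketch below turns one submitted questionnaire page into per-item answers under each format. Two parts are our assumptions: we read "requires only one check" as meaning that a format A page needs at least one ‘Yes’, and the function and constant names are hypothetical. This is not the validation logic used on cma.ca.

```python
from typing import Dict, List, Optional

YES, NO, POSSIBLY = "Yes", "No", "Possibly"
VALID = (YES, NO, POSSIBLY)


def normalize(fmt: str, answers: Dict[str, Optional[str]],
              items: List[str]) -> Dict[str, Optional[str]]:
    """Per-item answers for one submitted IAM page under format A, B or C (sketch).

    Format A: only checks are submitted; an unchecked item is recorded as None,
              because it is unclear whether no check means "No".
    Format B: every item must carry Yes / No / Possibly; nothing is pre-checked.
    Format C: like B, but an untouched item keeps its pre-checked "No".
    """
    if fmt == "A":
        if not any(answers.get(i) == YES for i in items):
            raise ValueError("format A requires at least one check (assumed rule)")
        return {i: YES if answers.get(i) == YES else None for i in items}
    if fmt == "B":
        missing = [i for i in items if answers.get(i) not in VALID]
        if missing:
            raise ValueError(f"format B requires an answer for every item: {missing}")
        return {i: answers[i] for i in items}
    if fmt == "C":
        out = {i: answers.get(i) or NO for i in items}  # untouched items stay "No"
        bad = [i for i, a in out.items() if a not in VALID]
        if bad:
            raise ValueError(f"invalid answers: {bad}")
        return out
    raise ValueError(f"unknown format {fmt!r}")


ITEMS = ["My practice is (will be) changed and improved", "I learned something new"]
print(normalize("A", {ITEMS[1]: YES}, ITEMS))
# {'My practice is (will be) changed and improved': None, 'I learned something new': 'Yes'}
print(normalize("C", {ITEMS[0]: POSSIBLY}, ITEMS))
# {'My practice is (will be) changed and improved': 'Possibly', 'I learned something new': 'No'}
```

The format A branch shows why those data are harder to interpret: an item left blank cannot be distinguished from a deliberate ‘No’. Format C resolves the ambiguity by default, and format B does so by requiring an explicit choice for every item.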
Then, during the recruitment period, the POEMs actually delivered by the CMA will begin to feed a stockpile that is replenished as new POEMs for email delivery arrive during the trial.

Endpoint: Time to rating of a POEM (POEMs not rated by study end will be deemed right-censored).

Design: In a parallel trial, 120 participants will be randomly assigned to one of three arms (response format A, B or C), under which they will be asked to rate POEMs over 32 weeks. POEMs will be delivered at a rate of one per weekday (five per week), and, as in real life, participants will receive CME credit for the POEMs they rate. The trial will be pragmatic in nature, in the sense that physicians will not be prompted or reminded to rate each synopsis. The time between each push and the rating of each POEM by each physician will be recorded.

Randomization: Participants will be block randomized according to the number of POEMs they rated in the previous year, because their demonstrated propensity to rate POEMs will influence our endpoint. Participants will start the trial simultaneously, as recruitment will require just a few weeks. It will therefore be possible to randomize exactly 44 physicians to arm A and 38 physicians to each of arms B and C, for reasons explained below. Allocation to response format will be concealed from the investigators. The study statistician will generate codes to determine the allocation sequence, using a computerized random number generator. Using a master file of 120 codes, the statistician will instruct the Web master as to which format each participant is to be assigned.

Study size: The number of participants was determined through methods outlined by Schoenfeld, adjusted for clustering by physician27. The proportion allocated to each arm was chosen to minimize the required sample size. The asymmetric allocation of participants to study arms was derived analytically and confirmed numerically.
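The endpoint and design above imply one time-to-event record per POEM pushed to each participant. The clock starts at the push and stops at the rating; POEMs still unrated at the end of the 32 weeks are right-censored. The minimal sketch below builds those records. Measuring time in days from the start of the trial, and the record and function names, are assumptions. The records would then be passed to a survival package that fits a Cox model with format as the covariate and a cluster-robust (sandwich) variance grouped by participant, since each physician contributes many records.

```python
from dataclasses import dataclass
from typing import Dict, List

STUDY_END_DAY = 32 * 7  # assumed: days since trial start; the trial runs 32 weeks


@dataclass
class Record:
    participant: str   # cluster identifier for the sandwich variance estimator
    fmt: str           # response format A, B or C (the covariate of interest)
    duration: float    # days from push to rating, or from push to study end
    event: int         # 1 = rated before study end, 0 = right-censored


def time_to_rating(participant: str, fmt: str, pushes: Dict[str, float],
                   ratings: Dict[str, float]) -> List[Record]:
    """One time-to-event record per POEM pushed to one participant (sketch).

    pushes:  POEM ID -> day the POEM was emailed
    ratings: POEM ID -> day it was rated (POEMs never rated are simply absent)
    """
    records = []
    for poem, pushed in pushes.items():
        rated = ratings.get(poem)
        if rated is not None and rated < pushed:
            raise ValueError(f"{poem}: rated before it was pushed")
        if rated is not None and rated <= STUDY_END_DAY:
            records.append(Record(participant, fmt, rated - pushed, 1))
        else:  # not rated by study end: right-censored at the end of follow-up
            records.append(Record(participant, fmt, STUDY_END_DAY - pushed, 0))
    return records


recs = time_to_rating("MD001", "B", {"p1": 0, "p2": 1, "p3": 220}, {"p1": 3.5, "p2": 40})
for r in recs:
    print(r.duration, r.event)
# 3.5 1
# 39 1
# 4 0
```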
Using the number of physicians per arm stated above and a study duration of 32 weeks, we will have 80% power to detect approximately the same magnitude of drop in participation that we observed in S2, namely a 45% decrease in the hazard of rating under a forced check format. This power applies to the two contrasts of interest, that is, the contrasts between formats A and B and between formats A and C. These calculations are based on a significance level of 5% for two-sided alternatives, and on pre-trial estimates derived from pilot work, namely: a base hazard (under format A) of 0.05; a hazard ratio of 0.55 between format B or C and format A; and an intraclass correlation of 0.65. The intraclass correlation was obtained by comparing variance estimates from the pilot data that did and did not account for clustering, taking into account the average cluster size in the pilot data (see appendix 5).

Data collection: Prior to rating their first synopsis, each participant will complete a seven-item online demographic questionnaire and will then be assigned a random ID number. These data will be linkable to all subsequent IAM ratings from the same participant via their unique encrypted ID number. We will build a secure web application for this study, providing real-time data entry validation and a web interface for queries. Using this electronic data capture system, we will email synopses and collect ratings from each participant. The system will also provide a data export mechanism to Excel and SAS.

Analysis plan: The hazard of rating will be analyzed using a Cox regression model, where the event is defined by the rating of one POEM by one participant. Cox regression was selected over binary endpoint analysis because synopses are pushed realistically throughout the trial, and this forces an analysis based on rates rather than numbers. Eschewing binary endpoints also has the advantage of reducing the necessary sample size.
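The study-size statements name Schoenfeld's method with a clustering adjustment. They also give the inputs: a hazard ratio of 0.55, a 5% two-sided significance level, 80% power and an intraclass correlation (ICC) of 0.65. The sketch below shows three standard building blocks only:

- Schoenfeld's required-events formula for a two-group comparison.
- The usual design effect, 1 + (m - 1) × ICC, where m is the average cluster size.
- The inversion of that design effect, which recovers an ICC from the ratio of a clustering-aware variance to a naive one. This is our reading of how the 0.65 was obtained.

The sketch does not reproduce the proposal's 44/38/38 allocation or its exact adjustment27. Those also depend on the base hazard of 0.05, the 32-week follow-up and the allocation optimization, whose details are not given in this section. In the formula, `p_group` is the share of participants in one arm of the pair being compared.

```python
from math import log
from statistics import NormalDist


def schoenfeld_events(hr: float, alpha: float = 0.05, power: float = 0.80,
                      p_group: float = 0.5) -> float:
    """Events needed for a two-group Cox/log-rank comparison (Schoenfeld's formula).

    d = (z_{1-alpha/2} + z_{power})^2 / (p (1 - p) (ln HR)^2)
    """
    z = NormalDist().inv_cdf
    return (z(1 - alpha / 2) + z(power)) ** 2 / (p_group * (1 - p_group) * log(hr) ** 2)


def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Variance inflation for clustered observations: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def icc_from_variances(var_clustered: float, var_naive: float,
                       mean_cluster_size: float) -> float:
    """Back out an ICC from the ratio of clustering-aware to naive variance
    estimates by inverting the design-effect formula."""
    return (var_clustered / var_naive - 1) / (mean_cluster_size - 1)


print(round(schoenfeld_events(0.55), 1))             # 87.8 events, before clustering adjustment
print(round(icc_from_variances(2.95, 1.0, 4.0), 2))  # 0.65 (made-up variances, for illustration)
```

Multiplying the required events by the design effect is one common way to account for clustering. Whether it matches the adjustment the authors used is not stated in this section.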
We will use rating format as a covariate, accounting for clustering by physician using a sandwich variance estimator. In each group, unrated synopses will be deemed not rated, and the proportion of rated synopses will be recorded for each physician. This proportion will be used to assess the hazard ratio of rating between the different formats, with the ‘Check all that apply’ format as reference. Thus, we will report the hazard ratio of rating (and 95% confidence interval) under formats B and C compared to ‘Check all that apply’.

Interpretation: Knowledge users will weigh the magnitude of any drop in participation under a forced check format against the stimulation of reflective learning observed in the following level three study.

Q2. Level Three (Reflective Learning)

Hypothesis: Our level 3 question is as follows: Is reflective learning affected by the response format? We hypothesize that format B, which forces a choice for each item, will stimulate reflective learning more than formats that offer a default for each item (A or C). This hypothesis is plausible, as the act of responding to all items of the IAM questionnaire (n=21) can be logically associated with greater reflection. To test this hypothesis, we will conduct an exploratory randomized trial using an outcome measure from educational psychology: the Reflective Learning Framework (RLF). Presented in appendix 6, the RLF contains 12 observable cognitive tasks. Participants will not need to be recruited anew: of the 120 participants who rated POEMs in the level one trial, 100 will continue in level 3.

Design: A standard two-group parallel randomized trial, using a 1:1 allocation ratio. Below, we assume that format A will emerge from level 1 with the highest participation.

Data Collection: In this trial, each participant will rate one POEM under either format B (forced choice: YES or NO or POSSIBLY) or format A (check all that apply). Thus, each participant will be simply randomized to either format B or A.
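Both trials depend on an allocation sequence that the statistician generates and keeps in a master file. For level one, the text gives the totals (44/38/38, blocked on prior-year rating volume) but not the block structure. For level three, it describes simple 1:1 randomization over 100 codes. Below is one way to generate both files with Python's standard `random` module. Our assumptions are the scheme of two strata of 60 split at the median of prior-year volume, the code format `L3-001` and the function names. The seed would stay with the statistician so that the sequence remains concealed from the investigators.

```python
import random
from collections import Counter
from typing import Dict, List


def level_one_master(ids_by_prior_volume: List[str], seed: int) -> Dict[str, str]:
    """Blocked allocation to formats A/B/C in a 44:38:38 split (sketch).

    ids_by_prior_volume: the 120 participant IDs sorted by POEMs rated last year.
    Assumed scheme: split at the median into two strata of 60 and shuffle a
    block of 22 A, 19 B and 19 C within each stratum.
    """
    block = ["A"] * 22 + ["B"] * 19 + ["C"] * 19
    if len(ids_by_prior_volume) != 2 * len(block):
        raise ValueError("sketch assumes exactly 120 participants")
    rng = random.Random(seed)  # computerized RNG; the seed is kept by the statistician
    master = {}
    for start in (0, len(block)):
        arms = block[:]
        rng.shuffle(arms)
        master.update(zip(ids_by_prior_volume[start:start + len(block)], arms))
    return master


def level_three_master(n_codes: int, seed: int) -> Dict[str, str]:
    """Simple (unblocked) 1:1 randomization of allocation codes to format A or B."""
    rng = random.Random(seed)
    return {f"L3-{k:03d}": rng.choice("AB") for k in range(1, n_codes + 1)}


ids = [f"MD{i:03d}" for i in range(120)]  # hypothetical IDs, already sorted by prior volume
m1 = level_one_master(ids, seed=1)
print(sorted(Counter(m1.values()).items()))  # [('A', 44), ('B', 38), ('C', 38)]
m3 = level_three_master(100, seed=2)
print(len(m3))  # 100
```

The counts 44, 38 and 38 share no common factor larger than 2. Two equal blocks of 22/19/19 are therefore the finest equal-block scheme that hits the stated totals exactly. Simple randomization, by contrast, achieves a 1:1 ratio only in expectation. Shuffling a list of 50 A codes and 50 B codes would guarantee it, if exact balance were preferred.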
Ten of the POEMs in our stockpile for email delivery to participants will be read and rated for clinical relevance to primary care using the McMaster relevance scale. The raters will be two knowledge user co-applicants who are practicing generalist physicians. As used in other work, this is a seven-point scale anchored by “Directly and highly relevant” at the top end and “Definitely not relevant: completely unrelated content area” at the bottom end28. The most relevant POEM will be used in this level 3 study.

Concealment of allocation to formats will be assured. First, our statistician will create the codes that determine the allocation sequence, using a computerized random number generator. This master file will then be given to one person, who will schedule and conduct the level three interviews. Holding the list of 100 codes in a master file, the interviewer will assign one code to each participant. Once randomization has occurred, the POEM will be delivered and rated only days before a scheduled interview. POEM ratings will be captured using the online system built for level one. At a rate of 10 physicians per week, we will interview all participants about their rated POEM over 10 weeks. This design will allow us to conduct all interviews shortly after participants rate their level 3 POEM, thereby maximizing their memory of this POEM and its rating. Interviews will be audio-taped and transcribed verbatim. Our draft interview guide is presented in Appendix 7.

Outcome: The outcome measure will be a reflective learning score, defined as the proportion of all possible cognitive tasks observed in a content analysis of interview transcripts29. In previous work in a similar educational context, an operational definition of reflective learning was established via the RLF.7 The RLF will guide this content analysis, so that the effect of response format on reflection can be observed and compared between formats.
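The outcome definition reduces to a small computation per interview. The score is the proportion of the 12 RLF tasks observed, each task is counted once even if it recurs, and a missing rating scores zero. In this sketch the tasks are numbered 1 to 12, and each coded transcript segment is represented only by its task number. Both conventions are assumptions, since the codebook (appendix 6) is not reproduced here.

```python
from typing import Iterable, List, Optional

N_TASKS = 12  # cognitive tasks in the Reflective Learning Framework (RLF)


def task_vector(coded_segments: Optional[Iterable[int]]) -> List[int]:
    """Presence (1) / absence (0) of each RLF task in one interview (sketch).

    coded_segments: RLF task numbers (1..12) assigned to transcript segments, in
    order; repeats are allowed because every occurrence is coded. None means the
    participant submitted no rating, which scores zero.
    """
    present = [0] * N_TASKS
    for task in coded_segments or ():
        if not 1 <= task <= N_TASKS:
            raise ValueError(f"unknown RLF task {task}")
        present[task - 1] = 1  # only the first occurrence changes the score
    return present


def reflective_score(coded_segments: Optional[Iterable[int]]) -> float:
    """Proportion of the 12 possible cognitive tasks observed (0.0 to 1.0)."""
    return sum(task_vector(coded_segments)) / N_TASKS


print(reflective_score([3, 5, 3, 12]))  # 0.25: tasks 3, 5 and 12; the repeat of 3 is not counted
print(reflective_score(None))           # 0.0: no rating submitted
```

Each `task_vector` is one row of the ‘Rated POEM by cognitive task’ matrix described in the analysis plan.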
Content analysis: The RLF codebook defines 12 codes, each referring to one observable cognitive task, i.e., an event reported by the participant in the form of a thought or feeling derived from their rating of one POEM. In using this codebook, we assume that cognitive tasks observed during interviews represent a reflective learning process. For each physician explaining one POEM rating, text will be assigned to one or more RLF codes when the corresponding cognitive task is observed. Two persons will code all interviews using the RLF codebook and guidance in the form of scoring rules. For example, while all occurrences of cognitive tasks will be coded, only the first occurrence of each task will be counted in the reflective learning score. When reading a transcript, coders will not be informed of which response format was used. However, coders cannot be fully blinded, as transcripts of participants explaining their ratings will frequently reveal when response format B was used.

Several steps will be taken to optimize the training of coders. First, the coders will discuss the RLF and its codebook with the principal investigators, reviewing the general rules and guidance for coding. Then, the coders will discuss one interview as an example and reach consensus on coding rules. This process will continue until the coders are comfortable applying the codebook. At that point, the coders will independently code all remaining interviews, and their level of agreement will be assessed using a kappa inter-coder reliability score. The kappa score is usually interpreted as follows: between 0.61 and 0.80, substantial agreement; and between 0.81 and 1.00, almost perfect agreement30. Data management, assignment of codes, and the calculation of the inter-coder reliability score will be facilitated by NVivo software.

Analysis plan: We will count the number and distribution of cognitive tasks observed within each interview about each rated POEM.
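NVivo will compute the inter-coder reliability score. The sketch below only makes the kappa formula explicit: κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed agreement, and p_e is the agreement expected from each coder's marginal label frequencies. We assume the unit of agreement is each (interview, RLF task) pair coded present (1) or absent (0), matching the 1/0 matrix in the analysis plan. NVivo's own unit of comparison may differ. The banding function encodes only the two bands quoted in the text.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder1: Sequence[Hashable], coder2: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders labelling the same units."""
    if len(coder1) != len(coder2) or not coder1:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(coder1)
    p_observed = sum(a == b for a, b in zip(coder1, coder2)) / n
    c1, c2 = Counter(coder1), Counter(coder2)
    p_expected = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n ** 2
    if p_expected == 1:
        raise ValueError("kappa undefined: both coders used one identical label")
    return (p_observed - p_expected) / (1 - p_expected)


def agreement_band(kappa: float) -> str:
    """Bands quoted in the text; lower bands are not quoted there."""
    if kappa >= 0.81:
        return "almost perfect"
    if kappa >= 0.61:
        return "substantial"
    return "below substantial"


# Hypothetical coding of one interview: presence (1) / absence (0) of the 12 RLF tasks.
coder_a = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1]
coder_b = [1, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1]
k = cohens_kappa(coder_a, coder_b)
print(f"{k:.2f} {agreement_band(k)}")  # 0.67 substantial
```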
This number will vary from 0 (no rating submitted) to 12 (all 12 tasks observed), i.e., from 0% to 100% of possible tasks. For each participant, we will create a matrix of ‘Rated POEM by cognitive task’, with the presence or absence of each task coded 1 or 0, respectively, for each format. A mean reflective learning score for each response format will be determined as the average of the total scores of the participants assigned to that format (see Appendix 8 for a mock-up of study results). Following the ‘intention-to-treat’ principle, we will include all participant ratings in the analysis, including ‘no ratings’, which will score zero. The effect size will be expressed as the absolute difference in proportions, namely the difference in mean reflective learning scores between the two formats. A two-tailed two-proportion Z test will be performed on mean reflective learning scores. However, knowledge users only want to know whether format B offers a substantial improvement in reflective learning. With respect to this trial, recall that our 2008 pilot work found an increase of almost two types of cognitive impact per POEM when a forced choice format was compared with format A (see appendix 3). This difference was observed when the IAM questionnaire contained only 10 items. The number of checked IAM items is not equal to the number of cognitive tasks (e.g., physicians may check two items referring to the same task). Nevertheless, because the 2011 questionnaire contains more than twice as many items, we anticipate a difference of at least four cognitive tasks, i.e., a reflective learning score 30% higher for format B than for format A. Given 100 participants and a 10% drop-out rate, this trial will have 83% power to detect a 30% difference between these two formats at a significance level of 5%.
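The scoring and testing steps above can be sketched as follows. Each participant's reflective learning score is treated as a proportion of the 12 RLF tasks, and, as the text describes, the two format means are compared with a pooled two-proportion z test. The following choices are assumptions, not the team's own power calculation: using the number of participants per arm as n, an even split of 45 per arm after 10% drop-out, and a 29% baseline for format A (the format A mean in the mock data analysis appendix). Under these assumptions, the normal-approximation power for a 30-point difference comes out at about 0.83, which is consistent with the 83% stated above.

```python
import math
from statistics import NormalDist

N_TASKS = 12  # cognitive tasks defined in the RLF codebook
STD_NORMAL = NormalDist()


def reflective_score(task_row):
    """Share of the 12 RLF tasks observed (coded 1) for one participant.

    Under intention-to-treat, a participant who submitted no rating
    contributes an all-zero row and therefore scores 0.
    """
    if len(task_row) != N_TASKS:
        raise ValueError("expected one 1/0 entry per RLF task")
    return sum(task_row) / N_TASKS


def two_proportion_z(p1, n1, p2, n2):
    """Two-tailed pooled two-proportion z test; returns (z, p_value)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    return z, 2 * (1 - STD_NORMAL.cdf(abs(z)))


def power_two_proportions(p1, p2, n_per_arm, alpha=0.05):
    """Normal-approximation power of the two-tailed test above."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    se_null = math.sqrt(2 * p_bar * (1 - p_bar) / n_per_arm)  # SE if no true difference
    se_alt = math.sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n_per_arm)  # SE under the alternative
    delta = abs(p2 - p1)
    # Probability that the observed difference lands in either rejection region.
    return (STD_NORMAL.cdf((delta - z_crit * se_null) / se_alt)
            + STD_NORMAL.cdf((-delta - z_crit * se_null) / se_alt))


n_arm = 45  # 100 participants minus 10% drop-out, split evenly (assumption)
print(round(power_two_proportions(0.29, 0.59, n_arm), 2))  # ≈ 0.83
```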
Interpretation: If a forced check response format is found to have a substantial effect on reflective learning, knowledge users will weigh the educational importance of this effect against the differences in participation between formats observed in our level one study. If a large increase in reflective learning is NOT seen with response format B (e.g., a 30% increase in score), knowledge users will have empirical evidence to support a decision to recommend the format that emerges from level 1 with the highest participation. The CONSORT statement was used to guide the design of trials at levels one and three. As these are not clinical trials involving patients and a treatment, they will not be registered. Reporting of this work will be guided by the 25-item CONSORT 2010 checklist31.
Q3. Level Six (Patient Health)
Design: To answer our level 6 question, “Are the patient health outcomes reported through the IAM questionnaire observable in longitudinal follow-up?”, we will apply a sequential explanatory mixed methods study design32. In this design, the collection and analysis of POEM ratings obtained in the level 1 cross-over trial (quantitative IAM data) will be followed and informed by the collection and analysis of qualitative data in a multiple case study. Analysis of the qualitative data will provide a comprehensive explanation of the quantitative results. Quantitative Data Collection & Analysis: Having had the opportunity to rate up to 160 POEMs in the level one trial, each participant will have a portfolio of IAM-rated email alerts.
In the IAM 2011 questionnaire, physicians who report that they used (or will use) a POEM for at least one patient must answer one final question, namely: ‘For this patient, do you expect any health benefits as a result of applying this information?’ If their answer is ‘Yes’, they must select at least one of the following three items of health benefit for a specific patient: (1) This information will help to improve this patient’s health status, functioning or resilience (i.e., ability to adapt to significant life stressors); (2) This information will help to prevent a disease or worsening of disease for this patient; and (3) This information will help to avoid unnecessary or inappropriate treatment, diagnostic procedures, preventative interventions or a referral, for this patient. Thus, our level 1 trial will generate, for each participant, a portfolio of rated POEMs for which an expected health benefit was reported for a specific patient. In turn, this list will inform the qualitative data collection (sampling). Based on our prior research in which synopses were delivered to Canadian physicians, about 60% of all delivered POEMs will be rated33. Of those that are rated, about 50% will contain a report of an expected health benefit for a specific patient. Thus, given 100 MDs asked to rate 160 synopses, each physician will have a portfolio of about 48 synopsis ratings (160 × 0.60 × 0.50) with expected health benefits for a specific patient. To stimulate participants’ memory of their ratings, an additional free text box will be added to the online IAM questionnaire. In this box, participants will briefly note the type of clinical problem as well as the age and gender of the patient for whom the synopsis was (or will be) applied. Qualitative Data Collection & Analysis: In keeping with a multiple case study design34, cases will be the patients for whom participants expect health benefits associated with the use of information from POEMs.
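The portfolio estimate above (160 POEMs delivered, about 60% rated, about 50% of those with an expected patient health benefit) can be reproduced with a few lines of Python. The binomial standard deviation is an added assumption and not part of the proposal's reasoning. It treats every POEM as rated and flagged independently, with the same probabilities for every physician. It is included only to suggest how much individual portfolios might vary around the expected size. The function name `expected_portfolio` is hypothetical.

```python
import math


def expected_portfolio(n_delivered=160, p_rated=0.60, p_benefit=0.50):
    """Expected size (and binomial SD) of one physician's health-benefit portfolio.

    p_rated: share of delivered POEMs that are rated.
    p_benefit: share of rated POEMs reporting an expected patient health benefit.
    The SD assumes independent POEMs with identical probabilities, which is a
    simplifying assumption rather than a study estimate.
    """
    p = p_rated * p_benefit
    expected = n_delivered * p
    sd = math.sqrt(n_delivered * p * (1 - p))
    return expected, sd


mean_size, sd_size = expected_portfolio()
print(f"about {mean_size:.0f} ratings per physician (SD ~ {sd_size:.1f})")
# about 48 ratings per physician (SD ~ 5.8)
```

Across 100 physicians, the same arithmetic gives roughly 4,800 candidate cases, from which the qualitative sample is drawn.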
At the end of the level 3 interview, participants will be given their list of POEMs with expected health benefits, and their portfolio containing IAM ratings, comments, and clinical notes. Starting with the most recently rated POEMs, participants will be asked two questions: “Did you actually use this POEM for a patient?” and “Have you already observed any health benefit(s) for the patient?” If the answer to both questions is ‘Yes’, a follow-up interview will be scheduled immediately. If participants answer ‘No’ because they have not had the opportunity to observe the expected health benefit, the process of longitudinal follow-up will start. The date of the most recently rated POEM with an expected health benefit will define the follow-up start date. Follow-up will involve re-contacting participants by email or telephone every three months, for nine months in total. If no health benefits have been observed after nine months, the expected benefit will be deemed not to have occurred. Follow-up of up to nine months is recommended to observe level 6 outcomes35. Follow-up interviews will be guided by reviewing POEMs and portfolios. The length of interviews will depend on the number of cases to be investigated. Participants must complete at least one interview in both the level one and level three studies to receive a $200 honorarium ($150 per hour). Interviewees will be asked: (1) to describe the type of health outcomes, including the clinical story of the patient, and how these outcomes were or could be documented in their medical records; and (2) to explain the relationships between the reading of one POEM on email, the reflective learning activity (completion of one IAM questionnaire), the clinical use of information derived from the POEM, and the observed patient health outcomes.
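The follow-up rules above can be sketched as a small scheduling helper. The rules are: start at the date of the most recently rated POEM with an expected benefit, re-contact every three months, stop at nine months, and otherwise deem the benefit not to have occurred. The function names and the example start date are hypothetical. Clamping to the last day of the month (e.g., 30 November plus three months gives 28 February) is an assumption, because the proposal does not say how such dates are handled.

```python
import calendar
from datetime import date


def add_months(d, months):
    """Shift a date by whole months, clamping to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def recontact_dates(start, interval_months=3, total_months=9):
    """Planned re-contact dates: every interval_months, up to total_months after start."""
    return [add_months(start, m)
            for m in range(interval_months, total_months + 1, interval_months)]


def follow_up_status(start, today, benefit_observed_on=None, total_months=9):
    """Classify one expected benefit as 'observed', 'pending' or 'not occurred'."""
    end = add_months(start, total_months)
    if benefit_observed_on is not None and start <= benefit_observed_on <= end:
        return "observed"      # schedule a follow-up interview
    if today < end:
        return "pending"       # keep re-contacting every three months
    return "not occurred"      # no benefit observed within nine months


# Hypothetical start date: the most recently rated POEM with an expected benefit.
start = date(2012, 11, 30)
print(recontact_dates(start))
```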
Without compromising patient confidentiality, participants will be asked to describe cases (patients) and the key characteristics of their clinical story, based on a case report template for family medicine36. Our interview guide is presented in appendix 9. Given the retrospective nature of these interviews, we will follow the Critical Incident Technique, which has been commonly used to assess the performance of health professionals, specifically their information behaviour37. This technique is usually considered valid for providing detailed empirical illustrations38. Following Flanagan (1954)39, the use of information for one patient must meet two criteria to be classified as a critical incident: the information use is clear from the observer’s perspective, and its consequences are clearly defined. To identify clear information use, we will ask the following three screening questions: (Q1) ‘I would like you to tell me more about the clinical story of this patient before you read the POEM, much as you would discuss this case with a colleague’; (Q2) ‘What was the patient-related problem addressed by this POEM?’; and (Q3) ‘What came out of reading the POEM with respect to the clinical story, e.g., the actions taken or avoided because of the POEM?’ Then, to determine whether the consequences of information use are clear and can be attributed to the POEM received on email, we will ask the following three questions: (Q4) ‘I would like you to tell me more about the clinical story of this patient after you read this POEM, much as you would discuss this case with a colleague, i.e., in what ways did this POEM affect the health of this patient?’; (Q5) ‘In your opinion, how can these POEM-related outcomes be measured or documented in the medical record?’; and (Q6) ‘I would like to know whether only this POEM, or this POEM and other sources of information, contributed to these outcomes.’
If other sources of information contributed to the observed outcomes, follow-up questions will clarify this contribution. Qualitative data will consist of archived POEMs, documents (portfolios of IAM ratings with free text comments and clinical notes linked to specific POEMs), field notes and interview transcripts. Qualitative data will be summarized as brief clinical vignettes that make explicit the reported contribution of information from POEMs. Vignettes will be classified into categories; for example, with regard to actions avoided, a classification will include the following categories: referral, prevention, diagnosis, treatment, and prognosis. Based on prior research, we anticipate collecting sufficient data to write about 80 vignettes from clear and trustworthy clinical stories of the health outcomes of specific patients in relation to information from POEMs. In accordance with rigorous qualitative thematic data analysis40, all vignette sentences will be linked to supporting raw data using specialized software (NVivo). One co-PI will review the vignettes and the assignment of data to sentences. Disagreements between the qualitative analyst and the co-PI will be discussed and resolved by consensus or by arbitration by the nominated PI. Each vignette will be reviewed by a panel of two knowledge user co-applicants who are family physicians. The panel will evaluate whether the reported outcome, e.g., avoiding an inappropriate treatment, was in all likelihood beneficial for the patient. Any disagreement between these two panel members will be resolved through arbitration by a third knowledge user. Importance of mixed methods: Quantitative and qualitative components will be integrated at the data collection, analysis and interpretation stages.
Mixing quantitative and qualitative components is essential for interviews. Through stimulated recall, IAM ratings, textual comments and clinical notes (dataset one) will enhance participants’ memory of specific patients (dataset two). Then, qualitative data (interview transcripts) will be analyzed to understand the association between rating one POEM and a reported health benefit for one patient.
Integrated KT and End-of-grant KT plans
As we all seek to optimize the implementation of IAM in a lifelong e-learning context, a workable solution will be agreed upon in partnership with knowledge users at the College of Family Physicians and the CMA. Knowledge users will be integrated in several ways, including involvement in selecting the POEMs to be pushed, analysis of vignettes, and participation in meetings to debate study findings. Optimizing the implementation of IAM is relevant to the thousands of Canadian physicians who use IAM. It is also relevant to researchers who use IAM as an outcome measure, both outside this country and in CIHR-funded research41-43. Beyond POEMs and the previously mentioned ‘e-Therapeutics+ Highlights’, IAM is presently used in other CIHR-funded research. For example, IAM is linked to Gene Messengers, emailed reviews of health conditions for which new genetic tests are available44,45. There is great potential for IAM to inform the measurement of research utilization and patient health outcomes linked to clinical information. Thus, the proposed project is highly relevant to KT. What factors or reasons will lead knowledge users to implement one format over another? First, all knowledge users will discuss and debate our results for each format at a face-to-face meeting attended by the nominated PI. Any decisions will, of course, depend on the magnitude of the effect of response format on CME outcomes at levels one and three. It is possible that no single format optimizes both participation and reflection.
In that case, either of two formats could be offered in CME programs: the format that optimizes participation, or the format that optimizes reflection. Our findings could lead knowledge users to implement the following policy for IAM-related CME programs. Imagine that a ‘forced choice’ IAM response format (format B) is associated with greater reflection and acceptable participation. One decision could then be to offer more MOC or Mainpro credit for e-learning programs that use this format, e.g., 0.25 credits as compared to 0.1 credit for the ‘check all that apply’ format. Monitoring of outcomes: Through an ongoing agreement with the Canadian Medical Association, we receive anonymous synopsis ratings submitted by its members. Thus, we are in a unique position to monitor outcomes in future research if a change in response format is implemented. Limitations: We recognize that this proposal takes only an intermediate step towards objectively measuring patient health outcomes. However, the proposed work is essential for progress towards further evaluative research. This study will be performed in a specific CME context, namely the email delivery of brief (300-400 word) research-based synopses called POEMs® and their rating by family physicians using IAM. Because courses involve the delivery of large amounts of clinical information, generalizing study findings to other educational contexts, e.g., the evaluation of online courses, should be done with care. Feasibility / anticipation of difficulties: Given our longstanding partnership with knowledge users, we anticipate no difficulties with recruitment, data collection or analysis. For example, in 2010 we received 231,000 POEM ratings (a crude average of more than 50 ratings per physician). A study timeline is provided in Appendix 10. Our team has the necessary expertise and mix of skills to accomplish the proposed work, for example, in mixed methods, biostatistics, CME and KT research.
We meet frequently to share findings and interpretations, and this has led to many publications and presentations. Since 2004, Bernard Marlow has been a partner on six of our CIHR-funded projects, several of them funded in KT competitions. This demonstrates our capacity for a sustained and integrated researcher–decision maker partnership. Roland Grad will lead this project and coordinate the activities of the team. He is a practicing family doctor and KT researcher. Since 2003, his work to develop and validate IAM has been funded by CIHR. IAM is a promising tool for research on e-learning, focused on the evaluation of practice-based education and on how to stimulate reflective learning in the health professions. Pierre Pluye will co-lead this project. He is a CIHR New Investigator and co-developer of IAM. Pierre teaches graduate students and conducts mixed methods research. His current CIHR-funded studies examine the use of clinical information derived from electronic knowledge resources. We have budgeted funds to support one student at the Master of Science level, under the supervision of Pluye and Grad. This student will enroll in our new MSc program in Family Medicine and develop a thesis plan (year one). The student will then undertake secondary analysis of our data as part of their thesis work (year two). Alain C. Vandal is an Associate Professor of Biostatistics specializing in survival and categorical data analysis; he will directly supervise one PhD student for the analyses described above. Gillian Bartlett is an epidemiologist specializing in health informatics, pharmacoepidemiology, population health and the evaluation of methodologies for complex data sets. She will directly supervise the PhD student for all preliminary analyses.
The four knowledge users are (1) Bernard Marlow, director of Continuing Professional Development (CPD) at the College of Family Physicians, the national organization mandated to promote lifelong learning among family physicians; (2) Sam Shortt, director of Knowledge Transfer and Practice Policy at the CMA; (3) Lori Hill, CPD Manager at the College of Family Physicians; and (4) Ian Goldstine, a family physician who chairs the National Committee on CPD at the College of Family Physicians. Given their ability to communicate research findings to multiple audiences, Hill, Marlow, Shortt and Goldstine will help to move study findings into practice. These knowledge users will implement an optimized version of IAM in national continuing education programs.
References
(1) Pluye, P., Grad, R. M., Johnson-Lafleur, J., Bambrick, T., Burnand, B., Mercer, J., Marlow, B., and Campbell, C. Evaluation of email alerts in practice: Part 2 – validation of the Information Assessment Method (IAM). Journal of Evaluation in Clinical Practice 2010;16(6):1236-1243.
(2) CIHR. Canadian Institutes of Health Research. CIHR-About Knowledge Translation. http://www.cihr-irsc.gc.ca/e/29418.html. Accessed Sept. 12, 2005.
(3) Grad, R. M., Pluye, P., Granikov, V., Johnson-Lafleur, J., Shulha, M., Bindiganavile Sridhar, S., Bartlett, G., Moscovici, J., Vandal, A. C., Marlow, B., and Kloda, L. Physicians' assessment of the value of clinical information: Operationalization of a theoretical model. Journal of the American Society for Information Science and Technology 2011; in press.
(4) Grad, R. M., Pluye, P., Mercer, J., Marlow, B., Beauchamp, M. E., Shulha, M., Johnson-Lafleur, J., and Wood-Dauphinee, S. Impact of Research-based Synopses Delivered as Daily E-mail: A Prospective Observational Study. Journal of the American Medical Informatics Association 2008;15(2):240-245.
(5) Pluye, P., Grad, R. M., Granikov, V., Jagosh, J., and Leung, K. H. Evaluation of email alerts in practice: Part 1 - review of the literature on Clinical Emailing Channels. Journal of Evaluation in Clinical Practice 2010;16(6):1227-1235.
(6) Doll, W. J., and Torkzadeh, G. The Measurement of End-User Computing Satisfaction. MIS Quarterly 1988;12(2):259-274.
(7) Leung, K. H., Pluye, P., Grad, R. M., and Weston, C. A Reflective Learning Framework to evaluate CME effects on practice reflection. Journal of Continuing Education in the Health Professions 2010;30(2):78-88.
(8) Mann, K., Gordon, J., and MacLeod, A. Reflection and reflective practice in health professions education: a systematic review. Adv Health Sci Educ 2009;14(4):595-621.
(9) Schwarz, N., and Oyserman, D. Asking questions about behavior: Cognition, communication, and questionnaire construction. American Journal of Evaluation 2001;22(2):127-160.
(10) Rasinski, K. A., Mingay, D., and Bradburn, N. M. Do Respondents Really "Mark All That Apply" on Self-Administered Questions? The Public Opinion Quarterly 1994;58(3):400-408.
(11) Smyth, J. D., Dillman, D. A., Christian, L. M., and Stern, M. J. Comparing check-all and forced-choice question formats in Web surveys. Public Opinion Quarterly 2006;70(1):66-77.
(12) Krosnick, J. A. Survey research. Annual Review of Psychology 1999;50:537-567.
(13) Krosnick, J. A., and Alwin, D. F. An Evaluation of a Cognitive Theory of Response-Order Effects in Survey Measurement. The Public Opinion Quarterly 1987;51(2):201-219.
(14) Krosnick, J. A. Response Strategies for Coping with the Cognitive Demands of Attitude Measures in Surveys. Applied Cognitive Psychology 1991;5(3):213-236.
(15) Sudman, S., and Bradburn, N. M. Asking Questions. San Francisco: Jossey-Bass; 1982.
(16) Farmer, A. P., Legare, F., Turcot, L., Grimshaw, J., Harvey, E. L., McGowan, J. L., and Wolf, F. M. Printed educational materials: effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews 2008;(3):CD004398.
(17) Tanna, G. V., Sood, M. M., Schiff, J., Schwartz, D., and Naimark, D. M. Do E-mail Alerts of New Research Increase Knowledge Translation? A Nephrology Now Randomized Control Trial. Academic Medicine 2011;86(1).
(18) Moore, D. E. Jr., Green, J. S., and Gallis, H. Achieving desired results and improved outcomes: Integrating planning and assessment throughout learning activities. Journal of Continuing Education in the Health Professions 2009;29(1):1-15.
(19) The College of Family Physicians of Canada. Mainpro® - Background information. http://www.cfpc.ca/English/cfpc/cme/mainpro/maintenance%20of%20proficiency/background%20info/default.asp?s=1. Accessed Sept. 12, 2011.
(20) Dillman, D. A., Smyth, J. D., and Christian, L. M. Internet, Mail and Mixed-Mode Surveys: The Tailored Design Method. 3rd ed. Wiley; 2009.
(21) Boud, D., and Walker, D. Promoting reflection in professional courses: the challenge of context. Studies in Higher Education 1998;23(2):191-296.
(22) Bindiganavile Sridhar, S. In pursuit of a valid Information Assessment Method. McGill University; 2011.
(23) Pluye, P., Grad, R. M., Mysore, N., Shulha, M., and Johnson-Lafleur, J. Using electronic knowledge resources for person centered medicine - II: The Number Needed to Benefit from Information. International Journal of Person Centred Medicine 2011; in press.
(24) Pluye, P., Grad, R. M., and Shulha, M. Using electronic knowledge resources for person centered medicine – I: An evaluation model. International Journal of Person Centred Medicine 2011.
(25) Frenk, J., Chen, L., Bhutta, Z. A., Cohen, J., Crisp, N., Evans, T., Fineberg, H., Garcia, P., Ke, Y., Kelley, P., Kistnasamy, B., Meleis, A., Naylor, D., Pablos-Mendez, A., Reddy, S., Scrimshaw, S., Sepulveda, J., Serwadda, D., and Zurayk, H. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. The Lancet 2010;376(9756):1923-1958.
(26) Godin, G., Belanger-Gravel, A., Eccles, M., and Grimshaw, J. Healthcare professionals' intentions and behaviours: A systematic review of studies based on social cognitive theories. Implementation Science 2008;3(36).
(27) Schoenfeld, D. Sample-Size Formula for the Proportional-Hazards Regression Model. Biometrics 1983;29(2):499-503.
(28) McKinlay, R. J., Cotoi, C., Wilczynski, N. L., and Haynes, R. B. Systematic reviews and original articles differ in relevance, novelty, and use in an evidence-based service for physicians: PLUS project. Journal of Clinical Epidemiology 2008;61(5):449-454.
(29) Neuendorf, K. A. The content analysis guidebook. Thousand Oaks: Sage; 2002.
(30) Garson, D. G. Reliability Analysis. Statnotes: Topics in Multivariate Analysis. Raleigh: North Carolina State University; 2011.
(31) Moher, D., Hopewell, S., Schulz, K. F., Montori, V., Gøtzsche, P. C., Devereaux, P. J., Elbourne, D., Egger, M., and Altman, D. G. CONSORT 2010 Explanation and Elaboration: updated guidelines for reporting parallel group randomised trials. BMJ 2010;340.
(32) Creswell, J. W., and Plano-Clark, V. L. Designing and Conducting Mixed Methods Research. Thousand Oaks: Sage; 2007.
(33) Grad, R. M., Pluye, P., Johnson-Lafleur, J., Granikov, V., Shulha, M., Bartlett, G., and Marlow, B. Do Family Physicians retrieve synopses of clinical research previously read as email alerts? Journal of Medical Internet Research 2011; in press.
(34) Yin, R. K. Case Study Research: Design and Methods. 4th ed. Thousand Oaks: Sage Publications; 2008.
(35) Shershneva, M. B., Wang, M. F., Lindeman, G. C., Savoy, J. N., and Olson, C. A. Commitment to practice change: An evaluator's perspective. Evaluation & The Health Professions 2010;33(3):256-275.
(36) McCarthy, L. H., and Reilly, K. E. How to write a case report. Family Medicine 2010;32(3):190-195.
(37) Urquhart, C., Light, A., Thomas, R., Barker, A., Yeoman, A., Cooper, J., et al. Critical incident technique and explicitation interviewing in studies of information behavior. Library & Information Science Research 2003;25(1):63-68.
(38) Anderson, B. E., and Nilsson, S. G. Studies in the reliability and validity of the critical incident technique. Journal of Applied Psychology 1964;48:398-403.
(39) Flanagan, J. C. The critical incident technique. Psychological Bulletin 1954;51:327-358.
(40) Fereday, J., and Muir-Cochrane, E. Demonstrating rigor using thematic analysis: A hybrid approach of inductive and deductive coding and theme development. International Journal of Qualitative Methods 2006;5(1).
(41) Del Fiol, G., Haug, P. J., Cimino, J. J., Narus, S. P., Norlin, C., and Mitchell, J. A. Effectiveness of Topic-specific Infobuttons: A Randomized Controlled Trial. Journal of the American Medical Informatics Association 2008;15(6):752-759.
(42) McGowan, J., Hogg, W., Campbell, C., and Rowan, M. Just-in-time information improved decision-making in primary care: a randomized controlled trial. PLOS One 2008;3(11):e3785.
(43) Coiera, E., Magrabi, F., Westbrook, J., Kidd, M., and Day, R. Protocol for the Quick Clinical study: a randomised controlled trial to assess the impact of an online evidence retrieval system on decision-making in general practice. BMC Medical Informatics and Decision Making 2006;6(1):33.
(44) Pluye, P., Grad, R. M., Repchinsky, C., Dawes, M. G., Bartlett, G., and Rodriguez, R. Assessing and Improving Electronic Knowledge Resources in Partnership with Information Providers. 2007. Canadian Institutes of Health Research. PHE85195.
(45) Carroll, J., Grad, R. M., Allanson, J., et al. Development and Dissemination of a Knowledge Support Service in Genetics for Primary Care Providers. 2010. Canadian Institutes of Health Research. KTB220407.
Appendices
Appendix 1 Information Assessment Method (for email alerts)
Appendix 2 Number of checked items per rated POEM in the pilot study
Appendix 3 Response formats
Appendix 4 Sample size calculation for Level 1
Appendix 5 The Reflective Learning Framework and Codebook
Appendix 6 Level 3 Interview guide
Appendix 7 Level 3 Mock data analysis
Appendix 8 Level 6 Interview guide

Appendix 1: The Information Assessment Method linked to email alerts
IAM 2011 (PUSH)
Q1. What is the impact of this information on you or your practice?
Please check all that apply. Note: You can check more than one type of impact.
Note to programmer: MUST check at least one
My practice is (will be) changed and improved
If Yes, what aspect is (will be) changed or improved? Diagnostic approach? Therapeutic approach? Disease prevention or health education? Prognostic approach?
I learned something new
I am motivated to learn more
This information confirmed I did (am doing) the right thing
I am reassured
I am reminded of something I already knew
I am dissatisfied
There is a problem with the presentation of this information
If Yes, what problem do you see? Too much information? Not enough information? Information poorly written? Too technical? Other?
If ‘Yes’, TEXT BOX with mandatory comment. Instruction: Please describe this problem.
I disagree with the content of this information
This information is potentially harmful
If ‘Yes’, TEXT BOX with mandatory comment. Instruction: Please describe how this information may be harmful.
Q2. Is this information relevant for at least one of your patients?
Totally relevant / Partially relevant / Not relevant
Answering "No" will disable question 3
Q3. Will you use this information for a specific patient?
Yes / No / Possibly
Answering "No" or “Possibly” will disable items of ‘use’ and question 4
If YES: Please check all that apply. Note: You can check more than one type of use.
Note to programmer: MUST check at least one
As a result of this information I will manage this patient differently
I had several options for this patient, and I will use this information to justify a choice
I did not know what to do, and I will use this information to manage this patient
I thought I knew what to do, and I used this information to be more certain about the management of this patient
I used this information to better understand a particular issue related to this patient
I will use this information in a discussion with this patient, or with other health professionals about this patient
I will use this information to persuade this patient, or to persuade other health professionals to make a change for this patient
Q4. For this patient, do you expect any health benefits as a result of applying this information?
Yes / No
Answering "No" will disable items of ‘health benefit’
If YES: Check all that apply. You may check more than one type of health benefit.
Note to programmer: MUST check at least one
This information will help to improve this patient’s health status, functioning or resilience (i.e., ability to adapt to significant life stressors)
This information will help to prevent a disease or worsening of disease for this patient
This information will help to avoid unnecessary or inappropriate treatment, diagnostic procedures, preventative interventions or a referral, for this patient
Comment on this information or this questionnaire.
Display thank you message - acknowledge credit earned.

Appendix 2: Number of checked items per rated POEM in the pilot study
A switch in response format from S1 to S2 yielded a doubling of the number of types of cognitive impact reported by CMA members per rated POEM.
       Ratings* (n)   Mean   Median   Std Dev   Minimum   Maximum
S1a    51,565         1.44   1.00     0.78      1.00      10.00
S2b    7,257          3.05   3.00     1.51      1.00      10.00
*Excluding ratings of ‘No Impact’
a ‘Check all that apply’ response format
b ‘Forced Yes/No’ response format

Appendix 3: Three response formats
Response Format A (presently linked to POEMs®)
‘Check all that apply’ requires only one check. It is unclear whether ‘no check’ means NO.
My practice is (will be) changed and improved
I learned something new
Response Format B
Forced check requires the respondent to answer each item, since nothing is pre-checked.
Yes No Possibly
My practice is (will be) changed and improved
I learned something new
Response Format C (presently linked to e-Gene Messengers and Highlights from e-Therapeutics)
The No is pre-checked.
Yes No Possibly
My practice is (will be) changed and improved
I learned something new

Appendix 4: Sample size calculation for Level One
Pre-trial estimates of the duration of follow-up and the number of participants were based on event rates observed in our pilot study. Assuming a constant hazard, we estimate the absolute hazard of rating in S1 at approximately 0.6 POEM per week. The reduction in the hazard of rating in S2 was estimated at 0.46. Based on Schoenfeld (1983), the study must be run for 32 weeks at a rate of five POEMs per week to detect the target 45% decrease in hazard with 80% power. The unequal treatment allocation (44 physicians in arm A and 38 in each of arms B and C) was also taken into consideration. This figure accounts for the clustering of ratings within physicians by means of a variance inflation factor. This factor was estimated by comparing the standard errors of the obtained hazard ratio under a clustering model (using a sandwich estimator of the covariance matrix) and without clustering.
Number of participants needed for the contrasts of interest, e.g., between response formats A and B, by number of weeks (rows) and number of POEMs per week (columns)
Weeks   1    2    3    4    5
25      96   95   94   94   94
26      93   92   92   92   92
27      91   91   90   90   90
28      90   89   88   88   88
29      88   87   87   87   87
30      86   86   85   85   85
31      85   84   84   84   84
32      83   83   83   82   82
33      82   82   81   81   81
34      81   80   80   80   80
35      80   79   79   79   79

Appendix 5: The Reflective Learning Framework and Codebook, from Leung KH, Pluye P, Grad RM, Weston C. A reflective learning framework to evaluate CME effects on practice reflection. Journal of Continuing Education in the Health Professions 2010;30(2):78-88.

Appendix 6: Level Three Interview guide
PART 1: Introduction: Interviewer presents herself. Before we begin, can I briefly explain the context of this interview? You recently rated one POEM. This component of our research seeks to better understand this rating, more specifically the thoughts you had. We have just delivered to you this POEM and the rating you submitted. Let’s look at this together. Before we start, do you have any questions? And is it ok if I record the interview? (if needed, emphasize confidentiality / anonymity)
POEM XX (insert title)
1. On (read date and time & day of the week) you rated the POEM® entitled (read title only). Please look at this POEM and your rating.
2. Now, can you explain your rating to me? (allow for open-ended feedback)
3. For each question in the Information Assessment Method:
Q1: Now for question one, can you tell me more about the impact of the information from this POEM on you or your practice?
Q2: Now for question two, on the relevance of this information for at least one patient, can you tell me more about its relevance?
Same for Q3 and / or Q4 when applicable
PART 2: Link to Level Six
In the first part of this study, you rated XX of 160 POEMs. In XX of these rated POEMs, you reported an expected health benefit for at least one specific patient. Question: Can we take a few minutes to review this list together?
[Present table of rated POEMs with expected health benefits and anonymous patient data, as previously provided by the participant.] We are interested in determining whether you have observed one or more of these expected health benefits. [If ‘Yes’, schedule a Level Six interview. If ‘No’, plan to re-contact the participant within three months.] ‘Would you prefer that we call or email you?’

Appendix 7: Level Three Mock data analysis

Analysis of cognitive tasks by response format

Observed cognitive tasks will be derived from interviews centred on one IAM-rated POEM. Our content analysis will be guided by the Reflective Learning Framework codebook and assisted by NVivo 9 software. From the coded interviews, we will create a ‘MD by cognitive task’ matrix, with the presence or absence of each task coded 1 or 0, respectively. This matrix will be exported to Excel to calculate the means shown below. Each total score is the number of the 12 cognitive tasks observed, followed by its percentage of 12 in parentheses; (…) stands for the columns CT4 to CT11, which are not shown.

Cognitive tasks observed among participants assigned to format A

| Participant | CT1 | CT2 | CT3 | (…) | CT12 | Total score (%) |
|---|---|---|---|---|---|---|
| MD1 | 1 | 0 | 0 | (…) | 0 | 2 (17) |
| MD2 | 1 | 1 | 1 | (…) | 1 | 4 (33) |
| MD3 | 1 | 0 | 0 | (…) | 1 | 2 (17) |
| … | … | … | … | … | … | … |
| MD49 | 1 | 1 | 1 | (…) | 1 | 6 (50) |
| Mean reflective score (format A) | | | | | | 3.5 (29) |

Cognitive tasks observed among participants assigned to format B

| Participant | CT1 | CT2 | CT3 | (…) | CT12 | Total score (%) |
|---|---|---|---|---|---|---|
| MD1 | 1 | 0 | 0 | (…) | 0 | 4 (33) |
| MD2 | 1 | 1 | 1 | (…) | 1 | 8 (67) |
| MD3 | 1 | 0 | 0 | (…) | 1 | 4 (33) |
| … | … | … | … | … | … | … |
| MD50 | 1 | 1 | 1 | (…) | 1 | 12 (100) |
| Mean reflective score (format B) | | | | | | 7 (58) |

Appendix 8: Level Six Interview guide

GUIDE FOR FOLLOW-UP INTERVIEWS

Introduction (Interviewer presents him/herself.)

Before we begin, may I briefly explain the context of this interview? In Continuing Medical Education, no studies have systematically assessed the patient health benefits associated with research-based email alerts read by family physicians. Our research aims to explore and understand the patient health outcomes associated with information from POEMs. You have observed at least one patient health outcome after reading one POEM.
For each POEM where you observed one or more patient health outcomes, you will be asked, firstly, to describe these outcomes and the clinical story of the patient; secondly, to explain the relationship between reading the POEM on email and the observed outcomes; and thirdly, to describe how these outcomes were documented, or could be documented, in medical records.

In preparation for this interview, I emailed you your portfolio of rated POEMs. This includes your responses to the IAM questionnaire, free-text comments and a clinical note. We will use these POEMs and your portfolio to help us during this interview, so this interview may last about N minutes. Is that ok? (N is determined by the number of cases to be investigated; reschedule the interview if needed.) Before we start, do you have any questions? And is it ok if I record the interview? (If needed, emphasize confidentiality and anonymity.)

PART 1: POEM 1

POEM 1 was rated on (date of rating) and is entitled (read title). The bottom line of this POEM is as follows (read bottom line). When you rated this POEM, I see from the portfolio that you reported the following ratings, free-text comment, and clinical note (read portfolio).

Question 1. Prior to reading this POEM, what was the clinical story of this patient? You may tell this story as you would if you were discussing the case with a colleague.

Probes:

- Present the patient in terms of demographics and social context, e.g., work, family, caregivers
- History of their health problem (the main condition and any co-morbid conditions)
- Details of their physical examination before reading the POEM
- Initial test results providing insight into the case (lab, imaging, etc.)

Before reading the POEM:

- a. What was the initial diagnosis (if there was one)?
- b. What was the initial treatment (if there was any)?
- c. Was there any initial preventive intervention or recommendation?
- d. What was the initial follow-up plan (if any)?
- e. What was the initial prognosis (if any)?
- f. Was there a plan for a referral, e.g., to another specialist?

Question 2. Then, what was the patient-related clinical problem addressed by this POEM?

Question 3. Tell me what came out of reading this POEM. For example, were any actions taken or avoided because of this POEM?

Probes: Describe any change

- a. regarding tests ordered because of the POEM (e.g., revised or additional tests)
- b. in the diagnosis because of the POEM (e.g., revised or new diagnosis)
- c. in the treatment because of the POEM (e.g., revision or initiation of a treatment)
- d. in preventive intervention or recommendation because of the POEM (e.g., revision or initiation of an intervention)
- e. in the follow-up plan because of the POEM (e.g., revision or initiation of a follow-up plan)
- f. in your prognosis because of the POEM (e.g., revised or new prognosis)
- g. regarding referral to another specialist because of the POEM (e.g., revised or new referral)

Question 4. Now, we know some time has passed, and you actually observed the following patient health benefits associated with the use of information from the POEM (read the outcomes). I would like you to tell me more about the current clinical story of this patient, as you would discuss this case with a colleague. For example, in what way did this POEM lead to the health benefits you have observed?

Probes:

- Describe the patient (current situation and any change because of the POEM)
- Provide the main health problem and any co-morbid conditions
- Provide details about the physical exam
- Describe current test results that may provide insights into the case (lab, imaging, etc.)
- Present the observed results of treatment, when applicable
- Present the observed results of preventive intervention or recommendation, when applicable
- Describe how the patient’s situation evolved over the follow-up visits, when applicable
- Describe the results of the referral, when applicable

Question 5a. Regarding the first outcome (read the outcome), was it measured or documented in any medical record? If YES: How was it measured or documented? If NO: In your opinion, how could doctors measure or document such an outcome in a medical record? For example, if a diagnostic test was avoided, how could you document that the test was truly avoided in practice?

Question 5b. Same as above (second outcome).

Question 5c. Etc.

Question 6. Before we review and explain your ratings, I would like to know whether this POEM alone, or other sources of information as well, contributed to these outcomes.

Probes:

- Present discussions with colleagues or other health professionals about this case or the research study summarized by the POEM
- Describe searches in other sources of information, such as electronic knowledge resources (read source category)
- Describe any print sources you consulted in addition to the POEM (e.g., reference books or journals)
- Describe any meetings, forums, or conferences that contributed to these outcomes

When other sources were used:

- Q6a. Can you tell me a little more about this source (or these sources) and what you found?
- Q6b. Why did you consult another source?
- Q6c. Was this information (from source X) in agreement or in conflict with the POEM?
- Q6d. Was this information more relevant, equally relevant, or less relevant compared to the POEM?
- Q6e. Regarding the observed patient health outcomes, did this information contribute more, equally, or less compared to the POEM?

Question 7. Finally, did you use this POEM for other patients, or are you planning to use this POEM in the future for other patients? If it was used, describe these patients and tell me how this POEM was used. If it will be used, describe the patients and their follow-up, and tell me how you plan to use this POEM.

Probes:

- Are there any similarities and differences between all patients for whom you used or may use this POEM?
- Describe how the medical record could help doctors retrieve similar patients, so they may also benefit from the information from this POEM

PART 2: POEM 2

Same as above.

Conclusion / Wrap-up

Finally, thank you very much. I would like to know if you have any comments about our study, the data collection process or this interview.
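The event-count reasoning behind the Appendix 4 sample size calculation can be illustrated with Schoenfeld's (1983) formula for a two-arm comparison of hazards, D = (z₁₋α/₂ + z₁₋β)² / (p(1 − p)(log HR)²), where p is the proportion allocated to one arm, followed by multiplication by a variance inflation factor (VIF) for clustering within physicians. This is a minimal sketch under stated assumptions, not the calculation that produced the Appendix 4 table: it assumes a two-sided α of 0.05, 80% power, a hazard ratio of 0.55 (the target 45% decrease), the 44:38 allocation of arms A and B, and a VIF taken as the squared ratio of the clustered (sandwich) standard error to the naive one. The function names and the standard errors in the example are hypothetical, and the conversion from events to weeks and participants is not shown because the proposal does not specify it.

```python
"""Sketch: Schoenfeld (1983) required number of events for a two-arm
comparison of hazards, inflated for clustering by physician.

All inputs are illustrative assumptions, not the study's actual inputs."""
import math
from statistics import NormalDist


def schoenfeld_events(hazard_ratio: float, alloc_a: float,
                      alpha: float = 0.05, power: float = 0.80) -> float:
    """Events needed to detect `hazard_ratio` with a two-sided test.

    alloc_a is the proportion of participants allocated to arm A."""
    z = NormalDist().inv_cdf
    z_sum = z(1 - alpha / 2) + z(power)
    return z_sum ** 2 / (alloc_a * (1 - alloc_a) * math.log(hazard_ratio) ** 2)


def variance_inflation_factor(se_clustered: float, se_naive: float) -> float:
    """Hypothetical VIF: squared ratio of the sandwich SE to the naive SE."""
    return (se_clustered / se_naive) ** 2


if __name__ == "__main__":
    hr = 1 - 0.45                # target 45% decrease in hazard
    p_a = 44 / (44 + 38)         # unequal allocation, arm A vs arm B
    d = schoenfeld_events(hr, p_a)
    # Hypothetical standard errors, for illustration only.
    vif = variance_inflation_factor(se_clustered=0.12, se_naive=0.10)
    print(f"events without clustering: {math.ceil(d)}")
    print(f"events with VIF of {vif:.2f}: {math.ceil(d * vif)}")
```

The design choice is to keep the clustering adjustment as a separate multiplier, mirroring the proposal's description of estimating the VIF from two fitted models and then applying it to an unclustered calculation.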
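The scoring step of the Appendix 7 mock analysis (a 0/1 ‘MD by cognitive task’ matrix, a total per participant out of 12 cognitive tasks with its percentage, and a mean per response format) can also be scripted rather than done in Excel. This is a minimal sketch, assuming the matrix is exported as one row of twelve 0/1 codes (CT1 to CT12) per participant. The rows below are hypothetical: the hidden CT4 to CT11 values were invented so that the totals (2, 4, 2 and 6) match the format A mock table, and the function names are illustrative.

```python
"""Sketch: reflective scores from a 'MD by cognitive task' matrix.

Rows are hypothetical; each holds twelve 0/1 codes (CT1..CT12),
where 1 = cognitive task observed and 0 = not observed."""
from statistics import mean

N_TASKS = 12


def total_score(codes: list[int]) -> tuple[int, int]:
    """Return (number of tasks observed, rounded percentage of the 12 tasks)."""
    if len(codes) != N_TASKS or any(c not in (0, 1) for c in codes):
        raise ValueError("expected twelve 0/1 codes")
    total = sum(codes)
    return total, round(100 * total / N_TASKS)


def mean_reflective_score(matrix: dict[str, list[int]]) -> tuple[float, int]:
    """Mean total score across participants, with its percentage of 12."""
    m = mean(total_score(codes)[0] for codes in matrix.values())
    return m, round(100 * m / N_TASKS)


# Hypothetical rows for format A (CT4..CT11 values are invented).
format_a = {
    "MD1": [1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    "MD2": [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1],
    "MD3": [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1],
    "MD49": [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1],
}

for md, codes in format_a.items():
    print(md, *total_score(codes))
print("mean reflective score (format A):", *mean_reflective_score(format_a))
```

Validating that each row has exactly twelve binary codes catches export errors (for example, a missing column) before any mean is computed.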