
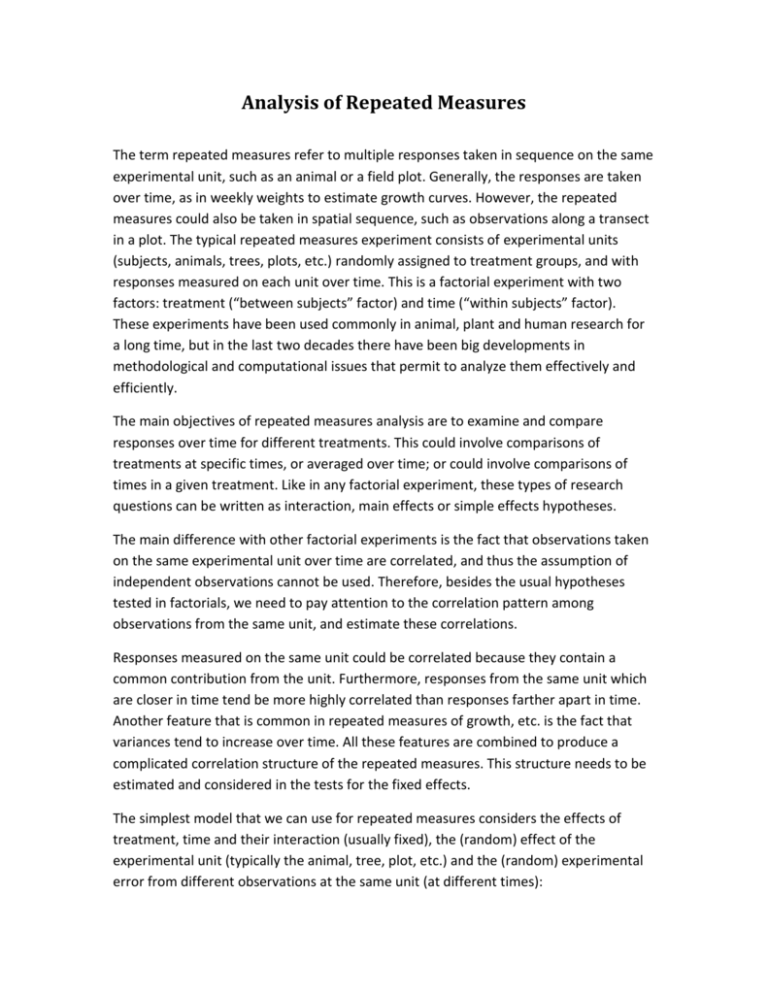

Analysis of Repeated Measures

The term “repeated measures” refers to multiple responses taken in sequence on the same experimental unit, such as an animal or a field plot. Generally, the responses are taken over time, as in weekly weights used to estimate growth curves. However, the repeated measures could also be taken in a spatial sequence, such as observations along a transect in a plot.

The typical repeated measures experiment consists of experimental units (subjects, animals, trees, plots, etc.) randomly assigned to treatment groups, with responses measured on each unit over time. This is a factorial experiment with two factors: treatment (the “between subjects” factor) and time (the “within subjects” factor). These experiments have long been common in animal, plant and human research, but in the last two decades there have been major methodological and computational developments that make it possible to analyze them effectively and efficiently.

The main objectives of repeated measures analysis are to examine and compare responses over time for different treatments. This could involve comparisons of treatments at specific times or averaged over time, or comparisons of times within a given treatment. As in any factorial experiment, these research questions can be written as interaction, main effect or simple effect hypotheses. The main difference from other factorial experiments is that observations taken on the same experimental unit over time are correlated, so the assumption of independent observations does not hold. Therefore, besides testing the usual factorial hypotheses, we need to estimate the correlation pattern among observations from the same unit and take it into account. Responses measured on the same unit can be correlated because they contain a common contribution from the unit.
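The “common contribution from the unit” can be made concrete with a small simulation. This is a minimal sketch, assuming each response is a normally distributed unit effect `d` plus an independent normal error `e`, with hypothetical standard deviations `sigma_d` and `sigma_e`; the function names are illustrative only. Under these assumptions two responses from the same unit have correlation σ_d²/(σ_d² + σ_e²), even though the errors themselves are independent.

```python
import math
import random


def simulate_unit_pairs(n_units, sigma_d, sigma_e, seed=1):
    """Hypothetical illustration: two responses per unit, y = d + e.

    The unit effect d is shared by both responses of a unit; each response
    gets its own independent error e.
    """
    rng = random.Random(seed)
    first, second = [], []
    for _ in range(n_units):
        d = rng.gauss(0.0, sigma_d)                # contribution shared by the unit
        first.append(d + rng.gauss(0.0, sigma_e))  # response at time 1
        second.append(d + rng.gauss(0.0, sigma_e)) # response at time 2
    return first, second


def pearson(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


sigma_d, sigma_e = 1.0, 1.0
y1, y2 = simulate_unit_pairs(20000, sigma_d, sigma_e)
r = pearson(y1, y2)
theory = sigma_d**2 / (sigma_d**2 + sigma_e**2)  # 0.5 for these values
print(f"empirical r = {r:.3f}, theoretical = {theory:.3f}")
```

Increasing `sigma_d` relative to `sigma_e` raises the correlation; setting `sigma_d = 0` removes it, which is the situation that an ordinary factorial analysis implicitly assumes.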
Furthermore, responses from the same unit that are closer in time tend to be more highly correlated than responses farther apart in time. Another feature common in repeated measures of growth and similar traits is that variances tend to increase over time. Together, these features produce a complicated correlation structure among the repeated measures, and this structure needs to be estimated and taken into account in the tests for the fixed effects.

The simplest model that we can use for repeated measures includes the effects of treatment, time and their interaction (usually fixed), the (random) effect of the experimental unit (typically the animal, tree, plot, etc.) and the (random) experimental error from different observations on the same unit (at different times):

$$
Y_{ijk} = \mu + \alpha_i + d_{k(i)} + \tau_j + (\alpha\tau)_{ij} + e_{k(ij)}
$$

where $i$ indexes treatments, $j$ indexes time and $k$ indexes the replicate (experimental unit) within treatment. The main difference between this model and the one used in the split-plot design is that the $e_{k(ij)}$ are not independent with constant variance: the errors from the same unit (same indices $i$ and $k$) have a covariance matrix $\mathbf{R}_k$ that needs to be estimated. For $T$ time points, this covariance matrix has the following structure:

$$
\mathbf{R}_k =
\begin{pmatrix}
\operatorname{Var}(e_{k(i1)}) & \operatorname{Cov}(e_{k(i1)}, e_{k(i2)}) & \operatorname{Cov}(e_{k(i1)}, e_{k(i3)}) & \cdots & \operatorname{Cov}(e_{k(i1)}, e_{k(iT)}) \\
\operatorname{Cov}(e_{k(i1)}, e_{k(i2)}) & \operatorname{Var}(e_{k(i2)}) & \operatorname{Cov}(e_{k(i2)}, e_{k(i3)}) & \cdots & \operatorname{Cov}(e_{k(i2)}, e_{k(iT)}) \\
\operatorname{Cov}(e_{k(i1)}, e_{k(i3)}) & \operatorname{Cov}(e_{k(i2)}, e_{k(i3)}) & \operatorname{Var}(e_{k(i3)}) & \cdots & \operatorname{Cov}(e_{k(i3)}, e_{k(iT)}) \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
\operatorname{Cov}(e_{k(i1)}, e_{k(iT)}) & \operatorname{Cov}(e_{k(i2)}, e_{k(iT)}) & \operatorname{Cov}(e_{k(i3)}, e_{k(iT)}) & \cdots & \operatorname{Var}(e_{k(iT)})
\end{pmatrix}
$$

There are several statistical methods used for analyzing repeated measures data:

1. separate analysis at each time point;
2. factorial analysis ignoring correlation;
3. factorial analysis assuming a split-plot design;
4. factorial analysis assuming other correlation structures.

Their main properties are as follows.

1. The separate analysis at each time point gives no information about the time effects or the time-by-treatment interaction, and is therefore very limited.
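It is sometimes used to analyze the effect of treatment at the last time point observed (for example, weight gain after 4 months).

2. The factorial analysis ignoring correlation is incorrect, and will generally find too many significant differences.

3. The factorial analysis assuming a split-plot design was used before software for modeling the covariance structure became available. It treats treatment as the factor applied to “whole plots” (the experimental units) and time as the factor applied to “subplots”. This analysis would be correct if we could randomize time, but since time comes in a fixed sequence, it is generally not correct. The analysis is the same as the one obtained by assuming that all variances are equal and all correlations are equal. This structure is called “compound symmetry”; the covariance matrix of the observations on a unit is

$$
\begin{pmatrix}
\sigma_d^2 + \sigma_e^2 & \sigma_d^2 & \cdots & \sigma_d^2 \\
\sigma_d^2 & \sigma_d^2 + \sigma_e^2 & \cdots & \sigma_d^2 \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_d^2 & \sigma_d^2 & \cdots & \sigma_d^2 + \sigma_e^2
\end{pmatrix}
$$

where $\sigma_d^2$ is the variance of the unit effects and $\sigma_e^2$ is the error variance, so every pair of times has the same correlation $\rho = \sigma_d^2 / (\sigma_d^2 + \sigma_e^2)$.

4. The factorial analysis assuming other correlation structures is the most efficient analysis, and permits the use of different covariance patterns. Its main problem is that, in general, there are no exact procedures, and several approximations must be used (for example, for the denominator degrees of freedom of the F tests). The covariance parameters are estimated with the same method used for other mixed models: REML (restricted maximum likelihood).

For some of these structures we need to pool both random effects (d and e) into one effect (e*) and specify the covariance structure for this “error”. The reason is that there is no way to distinguish between the two effects (this is called an identifiability problem). This is what happens, for example, with the compound symmetry structure or the unstructured covariance: if we use one of these, we do not specify a unit effect d.

The most commonly used covariance structures are:

a. Compound symmetry:

$$
\begin{pmatrix}
\sigma^2 & \rho\sigma^2 & \rho\sigma^2 & \cdots & \rho\sigma^2 \\
\rho\sigma^2 & \sigma^2 & \rho\sigma^2 & \cdots & \rho\sigma^2 \\
\rho\sigma^2 & \rho\sigma^2 & \sigma^2 & \cdots & \rho\sigma^2 \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
\rho\sigma^2 & \rho\sigma^2 & \rho\sigma^2 & \cdots & \sigma^2
\end{pmatrix}
$$

b. First order autoregressive:

$$
\sigma^2
\begin{pmatrix}
1 & \rho & \rho^2 & \cdots & \rho^{T-1} \\
\rho & 1 & \rho & \cdots & \rho^{T-2} \\
\rho^2 & \rho & 1 & \cdots & \rho^{T-3} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
\rho^{T-1} & \rho^{T-2} & \rho^{T-3} & \cdots & 1
\end{pmatrix}
$$

c. First order autoregressive with heterogeneous variances:

$$
\begin{pmatrix}
\sigma_1^2 & \sigma_1\sigma_2\rho & \sigma_1\sigma_3\rho^2 & \cdots & \sigma_1\sigma_T\rho^{T-1} \\
\sigma_1\sigma_2\rho & \sigma_2^2 & \sigma_2\sigma_3\rho & \cdots & \sigma_2\sigma_T\rho^{T-2} \\
\sigma_1\sigma_3\rho^2 & \sigma_2\sigma_3\rho & \sigma_3^2 & \cdots & \sigma_3\sigma_T\rho^{T-3} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
\sigma_1\sigma_T\rho^{T-1} & \sigma_2\sigma_T\rho^{T-2} & \sigma_3\sigma_T\rho^{T-3} & \cdots & \sigma_T^2
\end{pmatrix}
$$

d. Unstructured:

$$
\begin{pmatrix}
\sigma_1^2 & \sigma_{12} & \sigma_{13} & \cdots & \sigma_{1T} \\
\sigma_{12} & \sigma_2^2 & \sigma_{23} & \cdots & \sigma_{2T} \\
\sigma_{13} & \sigma_{23} & \sigma_3^2 & \cdots & \sigma_{3T} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
\sigma_{1T} & \sigma_{2T} & \sigma_{3T} & \cdots & \sigma_T^2
\end{pmatrix}
$$

Since we do not want to use an incorrect structure, and an unnecessarily complex structure will decrease the power of the tests, we need to be sure that we are using a reasonable structure before proceeding with the tests of interest. One simple way of choosing between two alternative structures, one of which is a special case of the other, is to fit the model with each of the structures. The test statistic is -2 times the difference in the log-likelihoods of the two models (provided by the software used to fit the models), which is compared with a $\chi^2$ distribution whose degrees of freedom equal the difference in the number of covariance parameters. When the models are fitted by REML, both must contain the same fixed effects for this comparison to be valid. Alternatively, we can use penalized likelihood criteria, such as AIC and BIC, to select the most appropriate structure. These criteria can be used for comparing nested or non-nested structures.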
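The compound symmetry, first order autoregressive and heterogeneous autoregressive structures described above can be generated directly from their parameters, which makes the difference between them easy to inspect. This is a minimal pure-Python sketch; the function names are hypothetical and not taken from any statistics package. The parameter counts assume the pooled-error form with no separate unit effect d in the model.

```python
def compound_symmetry(sigma2, rho, T):
    """CS: common variance sigma2 and common covariance rho * sigma2."""
    return [[sigma2 if j == k else rho * sigma2 for k in range(T)] for j in range(T)]


def ar1(sigma2, rho, T):
    """AR(1): covariance sigma2 * rho**|j - k| decays with the time lag."""
    return [[sigma2 * rho ** abs(j - k) for k in range(T)] for j in range(T)]


def arh1(sigmas, rho):
    """ARH(1): sigma_j * sigma_k * rho**|j - k|, one standard deviation per time."""
    T = len(sigmas)
    return [[sigmas[j] * sigmas[k] * rho ** abs(j - k) for k in range(T)]
            for j in range(T)]


def n_cov_params(structure, T):
    """Covariance parameters estimated for T times (no separate unit effect d)."""
    counts = {
        "CS": 2,                   # sigma^2, rho
        "AR(1)": 2,                # sigma^2, rho
        "ARH(1)": T + 1,           # sigma_1..sigma_T, rho
        "UN": T * (T + 1) // 2,    # every variance and covariance
    }
    return counts[structure]


print(ar1(2.0, 0.5, 3))  # [[2.0, 1.0, 0.5], [1.0, 2.0, 1.0], [0.5, 1.0, 2.0]]
for name in ("CS", "AR(1)", "ARH(1)", "UN"):
    print(name, n_cov_params(name, 4))  # 2, 2, 5, 10
```

The counts show why the choice matters: with 4 times the unstructured matrix already needs 10 covariance parameters against 2 for CS or AR(1). CS and AR(1) have the same number of parameters and neither is a special case of the other (for more than two times), so they are compared with AIC or BIC rather than with a likelihood ratio test.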
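The likelihood ratio test and the AIC/BIC comparison described above need only the log-likelihoods reported by the fitting software and the number of covariance parameters of each structure. This is a minimal sketch under stated assumptions: the log-likelihood values are made up for illustration, the function names are hypothetical, `k` counts only covariance parameters (a common convention when comparing REML fits), and the `n` used in the BIC penalty is taken to be the number of units; software packages differ in these conventions, so check the documentation of the program you use. The χ² tail probability is computed with the closed-form series for integer degrees of freedom so that only the standard library is needed.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) of a chi-square with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2x/pi) * exp(-x/2) * sum_r x^(r-1) / (1*3*...*(2r-1))
    term, total = 1.0, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        term = 1.0 if r == 1 else term * x / (2 * r - 1)
        total += term
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2) * total


def likelihood_ratio_test(loglik_reduced, loglik_full, k_reduced, k_full):
    """LRT for nested covariance structures fitted with the same fixed effects."""
    stat = -2.0 * (loglik_reduced - loglik_full)
    df = k_full - k_reduced
    return stat, df, chi2_sf(stat, df)


def aic(loglik, k):
    """Akaike information criterion (smaller is better)."""
    return -2.0 * loglik + 2 * k


def bic(loglik, k, n):
    """Schwarz Bayesian criterion (smaller is better); n is the penalty sample size."""
    return -2.0 * loglik + k * math.log(n)


# Hypothetical values: T = 4 times, 20 units, CS (2 parameters) vs UN (10 parameters).
ll_cs, ll_un = -250.0, -240.0
stat, df, p = likelihood_ratio_test(ll_cs, ll_un, 2, 10)
print(f"LRT = {stat:.1f} on {df} df, p = {p:.4f}")
print("AIC:", aic(ll_cs, 2), aic(ll_un, 10))
print("BIC:", round(bic(ll_cs, 2, 20), 2), round(bic(ll_un, 10, 20), 2))
```

With these made-up numbers the test statistic is 20 on 8 degrees of freedom (p about 0.010), and AIC (500 for UN against 504 for CS) also favors the unstructured matrix, while BIC (about 510 for UN against 506 for CS) favors compound symmetry because it penalizes the 8 extra parameters more heavily. Disagreements of this kind are common, which is one reason to keep the chosen structure no more complex than the data support.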