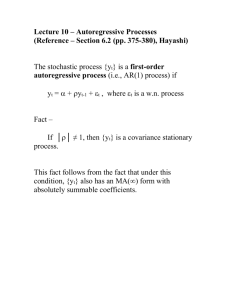

Covariance Stationary Time Series


(Chapter 6, Section 1)

The cyclical component of a time series is,

in contrast to our assumptions about the

trend and seasonal components, a

“stochastic” (vs. “deterministic”) process.

That is, its time path is, to some extent,

fundamentally unpredictable.

Our model of that component must take this

into account.

We will assume that the cyclical component

of a time series can be modeled as a

covariance stationary time series.

What does that mean?

Consider our data sample, y1,…,yT

We imagine that these observed y’s are the

outcomes of drawings of random variables.

In fact, we imagine that this data sample or

sample path, is just part of a sequence of

drawings of random variables that goes back

infinitely far into the past and infinitely far

forward into the future.

In other words, “nature” has drawn values of $y_t$ for $t = 0, \pm 1, \pm 2, \ldots$:

$\{\ldots, y_{-2}, y_{-1}, y_0, y_1, y_2, \ldots\}$

This entire set of drawings is called a

realization of the time series. The data we

observe, {y1,…,yT} is part of a realization of

the time series.

(This is a model. It is not supposed to be

interpreted literally!)

We want to describe the underlying

probabilistic structure that generated this

realization and, more important, the

probabilistic structure that governs that part

of the realization that extends beyond the

end of the sample period (since that is part

of the realization that we want to forecast).

Ideally, there are key elements of this probability structure that remain fixed over time. This is what will enable us to use inferences drawn about the probabilistic structure that generated the sample to draw inferences about the probabilistic structure that will generate future values of the series.

For example, suppose the time series is a

sequence of 0’s and 1’s corresponding to the

outcomes of a sequence of coin tosses, with H =

0 and T = 1: {…0,0,1,0,1,1,1,0,…}

What is the probability that at time T+1 the

value of the series will be equal to 0?

If the same coin is being tossed for every t, then

the probability of tossing an H at time T+1 is the

same as the probability of tossing an H at times

1,2,…,T. What is that probability? A good

estimate would be the number of H’s observed

at times 1,…,T divided by T. (By assuming that

future probabilities are the same as past

probabilities we are able to use the sample

information to draw inferences about those

probabilities.)
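The estimate described above can be sketched in a few lines of Python. This is only an illustration: the function name `estimate_prob_heads` and the eight sample values are assumptions made for the example, using the coding H = 0, T = 1 from the text.

```python
def estimate_prob_heads(tosses):
    """Estimate Prob(H) as the share of heads (coded 0) among y_1, ..., y_T."""
    if not tosses:
        raise ValueError("need at least one observation")
    heads = sum(1 for y in tosses if y == 0)
    return heads / len(tosses)


# Hypothetical sample path of coin tosses (0 = H, 1 = T).
sample = [0, 0, 1, 0, 1, 1, 1, 0]
p_hat = estimate_prob_heads(sample)
print(p_hat)
```

The estimate is only useful for time T+1 under the assumption stated above: the same coin is tossed at every t.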

Suppose however that a different coin will be

tossed at T+1 than the one that was tossed in the

past. Then, our data sample will be of no help in

estimating the probability of an H at T+1. All we

can do is make a blind guess!

Covariance stationarity refers to a set of

restrictions/conditions on the underlying

probability structure of a time series that has

proven to be especially valuable in this

regard.

A time series, yt, is said to be covariance

stationary if it meets the following

conditions –

1. Constant mean

2. Constant (and finite) variance

3. Stable autocovariance function

1. Constant mean

$E y_t = \mu$ {vs. $\mu_t$}

for all t. That is, for each t, yt is drawn from

a population with the same mean.

Consider, for example, a sequence of coin

tosses and set yt = 0 if H at t and yt = 1 if T

at t. If Prob(T)=p for all t, then Eyt = p for

all t.

Consider, for example, the HEPI time series.

Does that time series look like a

time series whose values have been

drawn from a population with a

constant mean?

What about the deviations from

trend?

What about the growth rate?

What about your detrended series?

Caution – the conditions that define

covariance stationarity refer to the

underlying probability distribution that

generated the data sample rather than to the

sample itself. However, the best we can do

to assess whether these conditions hold is to

look at the sample and consider the

plausibility that this sample was drawn from

a stationary time series.

2. Constant Variance

$\mathrm{Var}(y_t) = E[(y_t - \mu)^2] = \sigma^2$ {vs. $\sigma_t^2$} for all t.

The dispersion of the value of yt around its

mean is constant over time. A sample in

which the dispersion of the data around the

sample mean seems to be increasing or

decreasing over time is not likely to have

been drawn from a time series with a

constant variance.

Consider, for example, a sequence of coin

tosses and set yt = 0 if H at t and yt = 1 if T

at t. If Prob(T)=p for all t, then

Eyt = p

and

$\mathrm{Var}(y_t) = E[(y_t - p)^2] = p(1-p)^2 + (1-p)p^2 = p(1-p)$

for all t.
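As a quick check of the coin-toss mean and variance, the following sketch computes both moments exactly from the two-point distribution. The function name `bernoulli_moments` and the value p = 3/10 are assumptions chosen for illustration.

```python
from fractions import Fraction


def bernoulli_moments(p):
    """Exact mean and variance of y in {0, 1} with Prob(y = 1) = p."""
    mean = 0 * (1 - p) + 1 * p
    # Probability-weighted squared deviations from the mean.
    var = (1 - p) * (0 - mean) ** 2 + p * (1 - mean) ** 2
    return mean, var


p = Fraction(3, 10)  # hypothetical Prob(T)
mean, var = bernoulli_moments(p)
print(mean, var, p * (1 - p))
```

Because p does not depend on t, neither moment does, which is what conditions 1 and 2 require.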

Detrended HEPI? HEPI growth rate? Your

detrended series?

Digression on covariance and correlation

Recall that

$\mathrm{Cov}(X,Y) = E[(X - EX)(Y - EY)]$

and

$\mathrm{Corr}(X,Y) = \mathrm{Cov}(X,Y) / [\mathrm{Var}(X)\,\mathrm{Var}(Y)]^{1/2}$

measure the relationship between the random

variables X and Y.

A positive covariance means that when

X > EX, Y will tend to be greater than EY (and

vice versa). A negative covariance means that

when X > EX, Y will tend to be less than EY

(and vice versa).

The correlation between X and Y will have the same sign as the covariance but its value will lie between -1 and 1. The stronger the linear relationship between X and Y, the closer their correlation will be to 1 (or, in the case of negative correlation, -1).

If the correlation is 1, X and Y are perfectly

positively correlated. If the correlation is -1, X

and Y are perfectly negatively correlated. X and

Y are uncorrelated if the correlation is 0.

Independent random variables are uncorrelated.

Uncorrelated random variables are not

necessarily independent.
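A small example may help with the last point. The sketch below assumes X takes the values -1, 0, 1 with probability 1/3 each and sets Y = X². This distribution is a standard textbook illustration and is not taken from these notes. The covariance is exactly zero, yet Y is completely determined by X.

```python
from fractions import Fraction

# Joint support of (X, Y) with Y = X**2; each point has probability 1/3.
support = [(-1, 1), (0, 0), (1, 1)]
prob = Fraction(1, 3)

ex = sum(prob * x for x, _ in support)
ey = sum(prob * y for _, y in support)
cov = sum(prob * (x - ex) * (y - ey) for x, y in support)

# Independence would require P(X=0, Y=0) = P(X=0) * P(Y=0).
p_x0 = sum(prob for x, _ in support if x == 0)
p_y0 = sum(prob for _, y in support if y == 0)
p_joint = sum(prob for x, y in support if x == 0 and y == 0)

print("Cov(X, Y) =", cov)
print("P(X=0, Y=0) =", p_joint, "vs P(X=0)P(Y=0) =", p_x0 * p_y0)
```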

End Digression

3. Stable autocovariance function

The autocovariance function of a time

series refers to covariances of the form:

$\mathrm{Cov}(y_t, y_s) = E[(y_t - E y_t)(y_s - E y_s)]$

i.e., the covariance between the drawings of

yt and ys.

Note that

$\mathrm{Cov}(y_t, y_t) = \mathrm{Var}(y_t)$

$\mathrm{Cov}(y_t, y_s) = \mathrm{Cov}(y_s, y_t)$

For instance,

$\mathrm{Cov}(y_t, y_{t-1})$

measures the relationship between $y_t$ and $y_{t-1}$.

Your textbook refers to this as the

autocovariance at displacement 1.

We expect that $\mathrm{Cov}(y_t, y_{t-1}) > 0$ for most economic time series: if an economic time series is above normal in one period, it is likely to be above normal in the subsequent period. That is, economic time series tend to display positive first-order autocorrelation.

In the coin toss example (yt = 0 if H, yt = 1 if

T), what is Cov(yt,yt-1)?

What about the sign of Cov(yt,yt-1) for the

detrended HEPI?

Suppose that

$\mathrm{Cov}(y_t, y_{t-1}) = \gamma(1)$ for all t

where γ(1) is some constant.

That is, the autocovariance at displacement

1 is the same for all t:

$\cdots = \mathrm{Cov}(y_2, y_1) = \mathrm{Cov}(y_3, y_2) = \cdots = \mathrm{Cov}(y_T, y_{T-1}) = \cdots$

In this special case, we might also say that

the autocovariance at displacement 1 is

stable over time.

For example, in the coin toss example, successive tosses are independent, so

$\mathrm{Cov}(y_t, y_{t-1}) = \gamma(1) = 0$ for all t

The third condition for covariance

stationarity is that the autocovariance

function is stable at all displacements.

That is –

$\mathrm{Cov}(y_t, y_{t-\tau}) = \gamma(\tau)$ for all integers t and τ

The covariance between $y_t$ and $y_s$ depends on t and s only through t-s (how far apart they are in time), not on t and s themselves (where they are in time).

$\mathrm{Cov}(y_1, y_3) = \mathrm{Cov}(y_2, y_4) = \cdots = \mathrm{Cov}(y_{T-2}, y_T) = \gamma(2)$

and so on.
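If the autocovariance at displacement τ is the same for every t, it makes sense to estimate it by averaging over all pairs of observations that are τ periods apart. The sketch below uses one common sample estimator that divides by T. The estimator, the function name `sample_autocov`, and the four-point series are assumptions for illustration and are not defined in these notes.

```python
def sample_autocov(y, tau):
    """Sample autocovariance at displacement tau.

    Computes (1/T) * sum over t of (y_t - ybar) * (y_{t-tau} - ybar),
    using every pair of observations that is |tau| periods apart.
    """
    T = len(y)
    tau = abs(tau)  # gamma(tau) = gamma(-tau)
    if tau >= T:
        raise ValueError("displacement must be smaller than the sample size")
    ybar = sum(y) / T
    return sum((y[t] - ybar) * (y[t - tau] - ybar) for t in range(tau, T)) / T


series = [1.0, 2.0, 3.0, 4.0]  # hypothetical data
gamma0 = sample_autocov(series, 0)  # sample variance
gamma1 = sample_autocov(series, 1)  # displacement 1
print(gamma0, gamma1)
```

Pooling pairs from different dates in this way is only justified when the autocovariance does not depend on t.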

It is hard to make this condition intuitive.

The best that I can offer – If we break the

entire time series up into different segments,

the general behavior of the series looks

roughly the same for each segment.
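One informal way to act on this intuition is to split the sample into consecutive segments and compare simple summaries across them. The sketch below is a rough diagnostic only, not a formal test. The function name `segment_summary` and both example series are assumptions made for illustration.

```python
from statistics import mean, pvariance


def segment_summary(y, n_segments):
    """Return (mean, variance) for consecutive equal-length segments of y.

    Leftover observations at the end are dropped when len(y) is not a
    multiple of n_segments.
    """
    size = len(y) // n_segments
    if size < 1:
        raise ValueError("too many segments for this sample")
    segments = [y[i * size:(i + 1) * size] for i in range(n_segments)]
    return [(mean(s), pvariance(s)) for s in segments]


trending = [1, 2, 3, 4, 5, 6, 7, 8]    # mean drifts upward over time
flat = [1, -1, 1, -1, 1, -1, 1, -1]    # same behavior in each half

print(segment_summary(trending, 2))
print(segment_summary(flat, 2))
```

Segment means that differ sharply, as in the trending series, make a constant mean implausible. Similar summaries across segments are consistent with stationarity but do not prove it.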

Notes –

Stability of the autocovariance function (condition 3) actually implies a constant variance (condition 2); set τ = 0.

$\gamma(\tau) = \gamma(-\tau)$ since

$\gamma(\tau) = \mathrm{Cov}(y_t, y_{t-\tau}) = \mathrm{Cov}(y_{t-\tau}, y_t) = \gamma(-\tau)$

If yt is an i.i.d. sequence of random

variables then it is a covariance

stationary time series.

{The “identical distribution” means that

the mean and variance are the same for

all t. The independence assumption

means that γ(τ) = 0 for all nonzero τ,

which implies a stable autocovariance

function.}

The conditions for covariance stationarity are

the main conditions we will need regarding

the stability of the probability structure

generating the time series in order to be able

to use the past to help us predict the future.

It is important to note that these conditions do not

1. imply that the y’s are identically distributed (or independent).

2. place restrictions on third, fourth, and higher-order moments (skewness, kurtosis,…). [Covariance stationarity only restricts the first two moments, and so it is also referred to as “second-order stationarity.”]
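To see that covariance stationarity does not require identical distributions, consider a hypothetical series of independent draws in which even-dated and odd-dated observations come from two different distributions that share the same mean and variance. The two distributions below are assumptions constructed for this illustration. The sketch computes their first three central moments exactly.

```python
from fractions import Fraction


def central_moments(dist):
    """Mean, variance, and third central moment of a discrete distribution.

    dist is a list of (value, probability) pairs.
    """
    mean = sum(p * v for v, p in dist)
    var = sum(p * (v - mean) ** 2 for v, p in dist)
    third = sum(p * (v - mean) ** 3 for v, p in dist)
    return mean, var, third


# Hypothetical distribution for even t: -1 or +1 with equal probability.
even_t = [(Fraction(-1), Fraction(1, 2)), (Fraction(1), Fraction(1, 2))]
# Hypothetical distribution for odd t: 2 w.p. 1/5, -1/2 w.p. 4/5.
odd_t = [(Fraction(2), Fraction(1, 5)), (Fraction(-1, 2), Fraction(4, 5))]

even_moments = central_moments(even_t)
odd_moments = central_moments(odd_t)
print(even_moments)
print(odd_moments)
```

Both distributions have mean 0 and variance 1, and independence makes every autocovariance at nonzero displacement equal to 0, so the series meets all three conditions. The third central moments differ, so the y’s are not identically distributed.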