L3: Lecture notes: Joint disributions

advertisement

Sheets L4

Jointly Distributed Random Variables

Discrete joint distributions:

The joint distribution of the discrete variables X

and Y are given by the joint probability mass

function:

P(X = x and Y = y) for all x SX and y SY

(in a two dimensional table or a formula):

Notation: P(X = x and Y = y)= P(X = x, Y = y)

Requirements:

1. P(X = x and Y = y) ≥ 0

2.

=1

The marginal or individual prob. functions:

P(X = x) =

and

1

P(Y = y) =

The conditional probability function of X,

under the condition Y = y, is defined:

P(X = x | Y = y) =

For fixed y,

P(X = x | Y = y) is a probability function

and

the conditional expectation is:

E(X |Y = y) =

Analogously for P(Y = y |X = x) and E(Y|X= x)

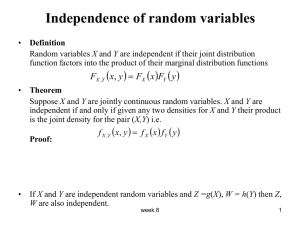

Independence of random variables :

Recall: events A and B are

independent P(A B) = P(A) P(B)

Two r.v.`s X and Y will be called independent

when every event concerning X and every event

concerning Y are indpendent.

2

General definition :

X and Y are independent

P(XA and YB) = P(XA) P(YB) ,

for any combination A R and B R

For discrete variables this is equivalent to:

X and Y are independent

P(X = x and Y = y) = P(X = x) P(Y = y)

for all xR en yR

Consequence: if X and Y are independent than

the joint distribution follows logically on the

marginal distributions.

Functions of 2 discrete r.v.

Theorem:

e.g.

It follows that: E(X+Y) = E(X) + E(Y)

3

The distribution of Z = X+Y can be found if the

joint probability function is known:

If X and Y are independent, we can write for

the sum or convolution Z = X+Y

(for the case X, Y є {0, 1, 2,…}) :

Convolutions of common discrete distributions:

1. If If X ~ Poisson (µ1), Y ~ Poisson(µ2) and

X and Y are independent,

then X+Y ~ Poisson (µ1 + µ2)

2. If X ~ B(m, p), Y ~ B(n, p) and X and Y are

independent => X + Y ~ B(m+n, p)

The properties above can be extended to n

discrete independent r.v. X1, …, Xn

4

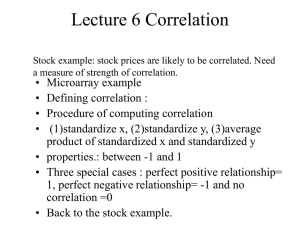

X and Y are dependent if they are not

independent

Measure for dependency: covariance

cov(X, Y) = E{(X-EX)(Y-EY)}

For discrete X and Y:

cov(X,Y) =

We say that X and Y are not correlated if

cov(X, Y) = 0

Properties covariance:

1.

2.

3.

4.

cov(X, Y) = E(XY) – EX×EY

cov(X, Y) = cov(Y, X)

cov(X, X) = var(X)

cov(X + a, Y) = cov(X, Y)

5

5. cov(aX, Y) = a cov(X, Y)

6. cov(X, Y + Z) = cov(X, Y) + cov(X, Z)

7. var(X+Y) = var(X)+var(Y)+2cov(X,Y) ,

var(X- Y) = var(X)+var(Y) - 2cov(X,Y)

Property 7 for X1, …, Xn :

Properties for independent X and Y :

1. E(XY) = EX×EY

2. cov(X, Y) = 0

3. var(X±Y) = var(X)+var(Y)

Note 1: In general: E(X+Y) = EX+EY ,

but

E(XY) ≠ EX×EY

Note 2 : independence => no correlation

not vice versa !

6

The value of the covariance :

- is positive if large values of X in general

coïncide with large values of Y and v.v.

- depends on the unit of measurement

(e.g. 1 m = 100 cm): cov(aX, Y) = a cov(X, Y)

The correlation coefficient ρ(X,Y) does not

depend on the unit of measurement:

Properties ρ(X,Y):

1. -1 ≤ ρ(X,Y) ≤ 1

2. ρ(X,Y) = +1 if for a > 0 P(Y= aX+b) = 1

ρ(X,Y) = -1 if for a < 0 P(Y= aX+b) = 1

3. ρ(aX+b,cY+d) = ρ(X,Y) if a×c > 0

ρ(aX+b,cY+d) = - ρ(X,Y) if a×c < 0

So “ρ(X,Y) is a standardized measure for

linear dependence of X and Y”

7

Continuous joint distributions:

If there is a function f(x, y) so that, for every B

R2, P((X,Y) є B) =

, then

(X,Y) are jointly continuous r.v.

Requirements for joint density function f(x,y):

1.

2.

f(x, y) ≥ 0

Notation: f(x, y) = fX,Y(x, y)

The cumulative joint distribution function F

or FX,Y :

8

Properties f and F of jointly continuous r.v. :

1. F(x, y) is a continuous function

2. F(x, y) has similar properties as F(x) : non

decreasing, right continuous and similar

limits in both arguments (x and y)

3.

and

4.

5.

and

6. P(X = x and Y = y) = P(X= x) = P(Y = y)= 0

7. Probability of values of (X, Y) in a rectangle:

9

Independence of continuous r.v.

General definition (see also discrete joint distr):

X and Y are independent

P(XA and YB) = P(XA) P(YB) ,

for any combination A R and B R

For continuous variables this is equivalent to:

X and Y are independent

for all xR en yR

or

for all xR en yR

The distribution of a function of two (or

more) continuous r.v.

The density function f Z(z) of Z = g(X, Y) can

usually be determined in 3 steps:

1. Express FZ(z) = P( g(X,Y) ≤ z) in the joint

or individual distribution of X and Y.

10

2. Determine f Z ( z )

d

dz

FZ ( z )

3. Use the known distribution.

We will restrict ourselves to simple two

variable functions: X+Y, XY, max(X,Y) and

min(X,Y). And later on we will extend these

properties to n variables X1, …, Xn

Property exponential distribution:

If X ~ E(λ) and Y ~ E(λ) and X and Y are

independent, then min(X,Y) ~ E(2 λ).

The sum or convolution of two independent

continuous r.v. X and Y:

Note: if X and Y are non negative the

boundaries of the integral can be 0 and z.

Consequence for the normal distribution:

11

If X ~ N(µ1, σ12) and Y ~ N(µ2, σ22) and X and

Y are independent, then

X +Y ~ N(µ1+ µ2, σ12 + σ22)

For the expectation we can derive:

In particular:

,which can

be used to compute

Many of the definitions and properties for two

variables can be easily extended to 3 or more

variables. Some examples:

- E(X1 +…+ X n) = E(X1) +…+ E(X n)

12

If X1,…, Xn are independent, then

- var(X1 +…+ X n) = var(X1) +…+ var(X n)

In statistics we use (relatively) small samples to

get an idea about the population properties.

We model the observed values x1,…, xn in the

sample as random variables X1,…, Xn .

We call X1,…, Xn a random sample if

1. X1,…, Xn are independent.

2. X1,…, Xn all have the same distribution

This population is called the population distribution, so if E(Xi)= µ and var( Xi) = σ2),

Then

The sum

:

= nµ and

= nσ2 .

13

The sample mean

:

,

The weak law of large numbers:

for any c > 0

Random samples and the normal distribution

If X1,…, Xn are independent and Xi ~ N(µ, σ2)

(i = 1,…,n) then:

The sum

The sample mean

~ N(nµ, nσ2)

~ N(µ,

)

The Central Limit Theorem

14

If X1, X2 ,… are independent and E(Xi )= µ and

var(Xi )= σ2, i = 1, 2,… then:

Ф(z)

So for large n (≥ 25) we have approximately :

~ N(nµ, nσ2) and

~ N(µ,

)

Normal approximation of X ~ B(n,p) for large

n (rule np(1-p) > 5 ) : X ~ N(np, np(1-p))

Use continuity correction: P(X≤ k)=P(X≤ k+½)

15