1.- What`s HTK? - Universidad Politécnica de Madrid

advertisement

A basic isolated-words ASR system (HTK V3.x)

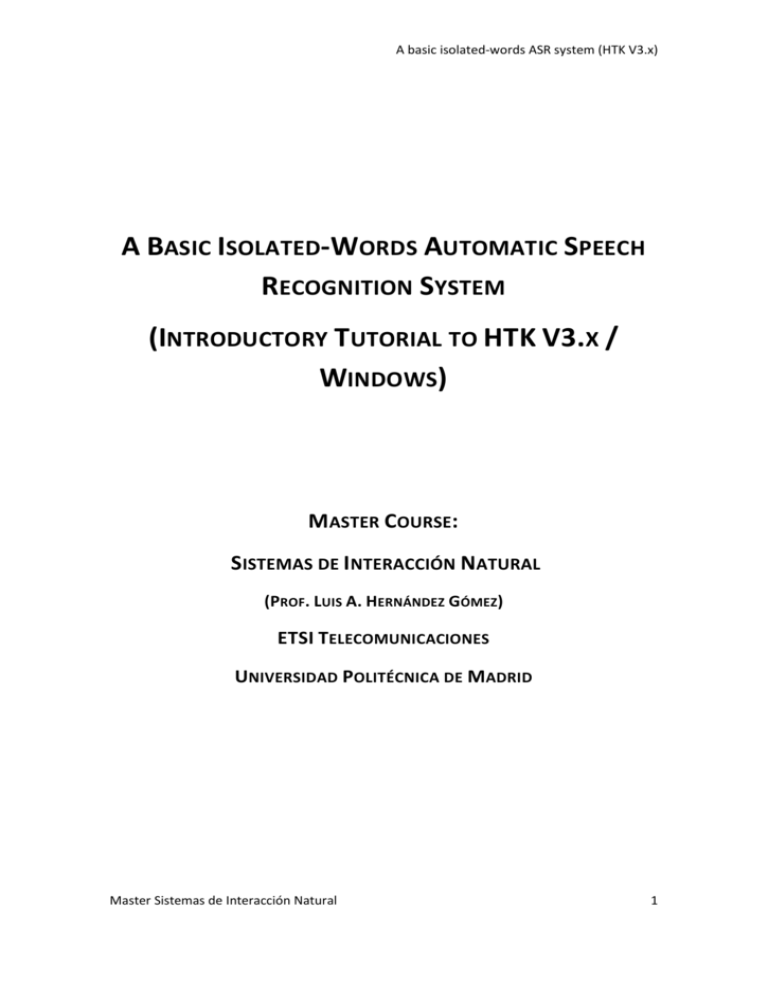

A BASIC ISOLATED-WORDS AUTOMATIC SPEECH

RECOGNITION SYSTEM

(INTRODUCTORY TUTORIAL TO HTK V3.X /

WINDOWS)

MASTER COURSE:

SISTEMAS DE INTERACCIÓN NATURAL

(PROF. LUIS A. HERNÁNDEZ GÓMEZ)

ETSI TELECOMUNICACIONES

UNIVERSIDAD POLITÉCNICA DE MADRID

Master Sistemas de Interacción Natural

1

A basic isolated-words ASR system (HTK V3.x)

Contenido

1.- What’s HTK? ............................................................................................................................. 3

2.- Isolated-words ASR system ...................................................................................................... 3

2.1 Pasos Necesarios ................................................................................................................. 3

2.2 Organización del Espacio de Trabajo .................................................................................. 4

2.3 Instalación/Acceso Básico HTK ............................................................................................ 4

3.- Creación del Corpus de Entrenamiento ................................................................................... 5

4.- Extracción de Características Acústicas ................................................................................... 7

5.- Definición de Topología de los HMMs ..................................................................................... 9

6.- Proceso de Entrenamiento de los HMMs .............................................................................. 12

6.1 Entrenamiento - Paso 1- HINIT .......................................................................................... 12

6.2 Entrenamiento - Paso 2 - HREST ....................................................................................... 14

6.2 Entrenamiento - Paso 3 - HEREST ..................................................................................... 15

7.- Diseño de Tarea de Reconocimiento y Pruebas..................................................................... 16

7.1 Diccionario......................................................................................................................... 16

7.2 Gramática de Reconocimiento .......................................................................................... 17

7.3 Pruebas de Reconocimiento.................................................Error! Bookmark not defined.

7.3.1 Pruebas Off-line.......................................................................................................... 18

7.3.2 Pruebas On-line .......................................................................................................... 19

Master Sistemas de Interacción Natural

2

A basic isolated-words ASR system (HTK V3.x)

1.- What’s HTK?

The aim of an automatic speech recognition system (ASR) is to transcribe speech into to text.

The state-of-the-art technology in ASR systems is based on the use of HMM (Hidden Markov

Models) or Hidden Markov Models.

HTK (Hidden Markov Model Toolkit) is a tool developed by the Department Engineering

Department (CUED) at the University of Cambridge (http://htk.eng.cam.ac.uk/) to create,

evaluate and modify HMMs. HTK is primarily intended for speech recognition research, but can

(and is) used for a wide number of applications.

HTK is a library of functions and tools in C, running on the command line; its distribution is

free, including source code. From the above web address HTK can download and access their

complete documentation (htkbook).

2.- Isolated-words ASR system

In this tutorial we will use HTK to build a simple recognizer that allows the recognition of two

words spoken in isolation "Yes" and "No".

Based on this basic recognizer, you can start experimenting with more complex problems that

could require a more advanced use of HTK, it is advisable to use HTK manual information:

htkbook.

2.1 Necessary steps

To create the Yes / No recognizer we must follow the following steps:

1. Record a training database that contains several repetitions (originally pronounced in

isolation) of each word. We will have to record and LABEL each recording (see Section 3).

2. Process each recorded file to extract the acoustic features (e.g. cepstrum). These features

will be used both to train and test the speech recognition system. (Section 4). The procedure

of extracting parameters from the speech signal is usually referred to as the Front-End of the

ASR system.

3. Define the topology of the HMMs to be used. Note that it will take at least three models:

HMM_SI; HMM_NO and HMM_SIL, the latter will represent the silence or noise before and

after each word. (Section 5)

4. Train the HMM models. (Section 6)

5. Test the recognition system both off-line (–audio from wav files-) and on-line (–audio from

the microphone-). (Section 7)

Master Sistemas de Interacción Natural

3

A basic isolated-words ASR system (HTK V3.x)

2.2 Working environment

In order to follow the isolated-word ASR example in this tutorial we will use a working

environment including the following directory structure:

Prueba\ : contains all the scripts (using Windows .bat files) needed to execute HTK

commands with their proper related configuration files.

Prueba\sonidos : where audio files are located (we will use .wav files with : 16KHz

and 16 bit/sample).

Prueba\labels : it stores label files (with .lab extension) containing the text

transcription for each audio file in Prueba/sonidos ; these label files will be used to

train the HMM models.

Prueba\vcar : to store feature files (i.e. mel-frequency cepstrum files) for each audio

file in Prueba/sonidos.

Prueb\recono : after the recognition of a file this directory will contain the

corresponding text file with the recognition (i.e. transcription) result (.rec files)

Prueba\hmms: to store the HMMs prototypes for SI, NO and RUIDO HMM models.

Prueba\hmmsiniciales: where HMMs will be generated after the first training step

using HINIT HTK tool.

Prueba\hmmhrest: where HMMs will be generated after the second training step

using HREST HTK tool.

Prueba\hmmherest: where a single file containing ALL the HMMs after the third and

last training step using HEREST tool.

2.3 Installation/Basic Access to HTK

To have access to HTK executable tools/programs in the directory ... \ HTKV3.XX \ bin.win32

from a Windows command window (cmd), it’s necessary to include the bin.win32 directory in

the path of the system.

Master Sistemas de Interacción Natural

4

A basic isolated-words ASR system (HTK V3.x)

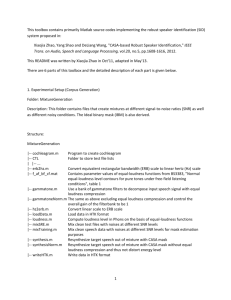

3.- Generating the Training Corpus

To train the models of SI, NO and SIL (silence / noise) it is necessary to define a corpus which

obviously has to contain the words "si" and "no", and in this case pronounced in isolation. Thus

the corpus contains a repetition of each word in isolation.

The training database must also have a number of repetitions for each word; the more

repetitions the better (and different speakers, if for speaker independent recognition ....).

For this example, we only use 5 repetitions for each word. So we have to record five times "si"

and five times "no".

But apart from the audio, you need to generate a file (tags / labels) that contains a label

(label) for each "linguistic / acoustic" which will involve an HMM. That is, if we record the first

sentence by saying "si" in an audio file si_1.wav, the "units" that are in that audio file s1_1.wav

will be sil - si - sil, and that information should be manually included (annotations) in a file

called s1_1.lab.

Following this recording plus manual annotation strategy, the process of training is more

"efficient" (discussion on this issue ...) if it includes not only what is within the audio file

s1_1.wav, but also what ranges of time span are associated to each unit.

These actions to manually include labels and mark the start and end times can be done with

the tool HSLAB (for HTK), or using any other HTK compatible audio editor, for example,

WaveSurfer www.speech.kth.se/wavesurfer/, which is more powerful and easy to use.

IMPORTANT: When starting Wavesurfer ensure that:

- Properties of Sound: record at 16Khz and 16 bin (mono)

- Apply Configuration: indicating that we will use the HTK Transcription

(see figure below)

Master Sistemas de Interacción Natural

5

A basic isolated-words ASR system (HTK V3.x)

Using this configuration we will record and annotate 5 utterances for “si” and 5 for “no”,

generating in:

Prueba\sonidos : si_1.wav, .... si_5.wav , no_1.wav ,.... no_5.wav ( .wav : a 16KHz y 16

bit/sample).

Prueba\labels : si_1.lab, .... si_5.lab , no_1.lab ,.... no_5.lab

The format for transcriptions (lab) in HTK, is shown in the example below, time marks in HTK

are expressed in 10-7 secs. (si_1.lab):

(start end label)

0 3564899 sil

3564899 6288848 si

6288848 9835466 sil

Master Sistemas de Interacción Natural

6

A basic isolated-words ASR system (HTK V3.x)

The Figure below shows an example of the Wavesurfer screen corresponding to the previous

labels for the word “si” (si_1.wav audio file):

4.- Acoustic Features Extraction (Front-End)

The next step will be processing all the recorded audio files si_n.wav, no_n.wav to extract the

features that will use the Speech Recognizer.

HTK implements a wide range of different features that can be used in ASR systems: ceptrum,

LPC, MEL, FilterBank, PLP,... The Front-End is implemented in the HCopy tool. The simplest way

of using HCopy is:

HCopy -C conf si_1.wav si_1.mfc

OPTIONS:

-C conf: Configuration file to include details on the feature extraction process (as the type and

no. parameters)

si_1.wav: input speech audio file

si_1.mfc: output file (parameters)

In this tutorial, to process ALL the files using a single command we will use:

Master Sistemas de Interacción Natural

7

A basic isolated-words ASR system (HTK V3.x)

HCopy -C conf -S flist.scp

(This is included in file SacaCep.bat)

OPTIONS:

flist.scp : contains a list of pairs: audio_wav_file output_mfc_file

.\sonidos\si_1.wav .\vcar\si_1.mfc

.\sonidos\si_2.wav .\vcar\si_2.mfc

....

.\sonidos\no_5.wav .\vcar\no_5.mfc

The configuration file conf in this example has been define to make it possible to use the

training HMM models in on-line tests (i.e using the microphone). The selected parameters are:

SOURCEKIND = WAVEFORM // Type on input files

SOURCEFORMAT = WAV // Extension for input files

SOURCERATE = 625

// Sampling rate T in 100 nano-sec. HTK time units (sampling rate 1/T = 16 KHz)

ZMEANSOURCE = T

USESILDET = TRUE

// USES A Voice Activity Detector (necessary for on-line tests; BUT sometimes

produces error for off-line use and it is necessary to use FALSE)

MEASURESIL = FALSE

OUTSILWARN = TRUE

TARGETKIND = MFCC_0_D_A

// Type of parameterization MelFFT + C0 (Energy) + Delta y Acceleration (Doble Delta)

TARGETFORMAT = HTK

TARGETRATE = 100000.0 // frame rate 10 msec. (sliding window each 10 msec.)

SAVEWITHCRC = FALSE

WINDOWSIZE = 250000.0 // window size 25 msec.

USEHAMMING = TRUE

PREEMCOEF = 0.97 // Pre-emphasis

NUMCHANS = 12 // Number of channels for the FFT

NUMCEPS = 10 // Number of cesptrum per window

// This field together with MFCC_0_D_A : give us the total number of

// coefficients we are going to use 10 ceps + 10 Deltaceps + 10 DDceps + 1C0

// + 1DC0 +1 DDCep = 33 coeficients per frame (window)

ENORMALISE = FALSE // No energy normalization (COULD BE USED TO COMPENSATE

DIFFERENT RECORDING LEVELS)

CEPLIFTER = 22 // Ponderation to give more relevance to more discriminative cepstrum LIFTER

USEPOWER = TRUE // it could also be tested using abs (FFT) instead of power.

Master Sistemas de Interacción Natural

8

A basic isolated-words ASR system (HTK V3.x)

The execution of SacaCep.bat (it is advisable to do this in the Windows command line to check

for possible errors...). The next figure illustrate an error that in some cases appears when using

USESILDET = TRUE ; in this you should try: USESILDET = FALSE

Once SacaCep.bat script is correctly executed you could see a set of mfc files \Prueba\vcar :

si_1.mfc, si_2.mfc,... no_5.mfc

HTK includes the HList tool to display the content of files with parameters (i.e. mfc files); for

example:

Hlist -h -e 1 si_1.mfc

Displays in the command (cmd) screen:

----------------------------------- Source: si_1.mfc ----------------------------------Sample Bytes: 132 Sample Kind: MFCC_D_A_0

Num Comps: 33

Sample Period: 10000.0 us

Num Samples: 98

File Format: HTK

------------------------------------ Samples: 0->1 ------------------------------------0: -5.769 1.360 -1.041 -1.606 -0.643 -1.303 -1.380 0.017 1.731 1.495

58.589 0.279 2.649 2.098 2.927 3.047 1.078 2.394 -0.201 -1.034

-0.393 -1.811 0.360 0.164 0.189 0.027 -0.204 -0.258 -0.458 -0.676

-0.360 0.012 -0.053

1: -3.598 9.894 5.159 5.613 7.360 2.961 5.144 2.312 5.040 2.044

55.530 0.969 3.575 2.640 3.051 3.669 0.158 2.005 -2.530 -1.962

0.340 -2.089 0.343 -0.380 -0.218 -0.505 -0.743 0.055 -1.005 -0.319

-0.367 -0.473 0.120

----------------------------------------- END -----------------------------------------Showing the file header, and the first 33 observation vectors for frames 0 and 1. In the

example you can identify the C0 parameter (related to energy) as the highest values in position

11 of each window (58.589 for frame 0: ; and 55.530 in frame 1:)

5.- Topology for the HMMs

Up to this point we have all the data and information required to start training our Hidden

Markov Models HMMs. These HMMs will be the statistical patterns that will represent the

Master Sistemas de Interacción Natural

9

A basic isolated-words ASR system (HTK V3.x)

“acoustic realization” of the linguistic units we have decided to model; in our case: si, no and

silence.

But before that we have to DEFINE the HMM topology we are going to use (see more details in

Introduction to Digital Speech Processing, Lawrence R. Rabiner1 and Ronald W. Schafer)

In this tutorial we will use the same topology for all the linguistic units, that is for “si”, “no” and

“silencio”. We will use:

12 states ( 14 in HTK: 12 + 2 start/end)

and two gaussians (mixtures) per state.

To this end we will have to create a text file with a PROTOTYPE of this HMM topology using

HTK syntax format:

Each file name MUST be equal to the unit: so far, for the file names and linguistic unit names

we will use will be: si , no and sil

So, for example, the file named si will be:

~o <vecsize> 33 <MFCC_0_D_A>

~h "si"

<BeginHMM>

<Numstates> 14

<State> 2 <NumMixes> 2

<Stream> 1

<Mixture> 1 0.6

<Mean> 33

0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

Master Sistemas de Interacción Natural

10

A basic isolated-words ASR system (HTK V3.x)

<Variance> 33

1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0

1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0

<Mixture> 2 0.4

<Mean> 33

0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0

<Variance> 33

1.0 1.0 1.0 1.0 1.0

1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0

1.0

<State> 3 <NumMixes> 2

<Stream> 1

1.0 1.0 1.0 1.0 1.0 1.0 1.0

1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0

1.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0

1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0 1.0

......................................

<Transp> 14

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

1.0

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.6

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.0

0.4

0.0

<EndHMM>

Comments of the different fields inthis data structure:

~o <vecsize> 33 <MFCC_0_D_A>

// MUST be the same parameterization we used in HCopy

~h "si"

NAME!!!)

// Name for the HMM model (MUST be EQUAL TO THE FILE

<BeginHMM> // Initial HMM tag

<Numstates> 14 // 14 states : 12 “real” states + beginning +

end

<State> 2 <NumMixes> 2

<Stream> 1

// HTK allows to divide the input vector

// 33 components in several streams

<Mixture> 1 0.6

// First gaussian and initial weight

<Mean> 33

// Means vector (initialized to zero)

................

<Variance> 33

// Variances vector (initialized to one)

(at the end the Matrix with transition probabilities is included aij, in this case only

transition to the next state is allowed; note also the special definition for the initial and

final HTK states)

<Transp> 14

Master Sistemas de Interacción Natural

11

A basic isolated-words ASR system (HTK V3.x)

0.0 1.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.6 0.4 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

0.0 0.0 0.6 0.4 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

<EndHMM>

// Final HMM tag

Once the three HMM PROTOTYPES have been defined we can start the

training procedure.

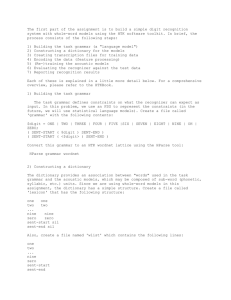

6.- HMMs Training Process

The training of the HMMs is made from the files of features, xxx.mfc in the directory. \ Prueba

\ vcar, tag files, xxx.lab, in the \ Prueba \ labels directory, and HMMs prototypes in the .\

Prueba \ hmms directory.

The HTK training process can be raised in different ways, here we use the more standard and

simple three-steps procedure that will sequentially generate better and more accurate HMMs.

The HMMs in each step will be stored in different directories. These directories together with

the HTK tools for each step: HInit (for step 1), HREST (step 2) and HEREST (Step 3) are

illustrated in the following diagram:

hmms

HERest

(3)

HInit

(1)

hmmrest

hmmsiniciales

HRest

(2)

hmmerest

6.1 Training - STEP 1- HINIT

The first step provides initial values for the mean and variance vectors in the HMM prototypes.

HInit uses the files with the feature vectors (mfc files) together with the time labels (in .lab

files) to do a uniform initialization of the mean and variances of each state in each model. (We

will discuss this in class).

Master Sistemas de Interacción Natural

12

A basic isolated-words ASR system (HTK V3.x)

There are other ways to initialize the HMMs using HCompv, where a broad initialization is

done and a file is generated (varfloor) with the smallest values for the Gaussian variancs.

~v varFloor1

<Variance> 33

2.23232e-001 1.03872e-001 (...) 4.00123e-003

However in this tutorial we will only use HInit as it is executed using the EntrenaListaHInit.bat

script.

NOTE that HInit is executed THREE TIMES one for each HMM: si, no, sil

HInit -T 1 -l si -L .\labels -M .\hmmsiniciales .\hmms\si -S listatrain

HInit -T 1 -l no -L .\labels -M .\hmmsiniciales .\hmms\no -S listatrain

HInit -T 1 -l sil -L .\labels -M .\hmmsiniciales .\hmms\sil -S listatrain

-T 1

: tracing level (log information)

-l si

: training label (HInit will look for this label in the *.lab files)

-L .\labels : label directory (where *.lab files are located)

- M .\hmmsiniciales : output directory for the new HMMs

.\hmms : input directory where HMM prototypes (Section 5) are located.

listatrain, contains the list of all the fles to be used in the training process (the *.mfc files in

the .\vcar directory)

.\vcar\si_1.mfc

.\vcar\si_2.mfc

.\vcar\si_3.mfc

.\vcar\si_4.mfc

.\vcar\si_5.mfc

.\vcar\no_1.mfc

.\vcar\no_2.mfc

.\vcar\no_3.mfc

.\vcar\no_4.mfc

.\vcar\no_5.mfc

The next figure shows output information generated during HInit execution for each HMM. It

can be seen that it is an iterative process to fit the training data in terms of logProb (comments

in class). HInit has several parameters to define the convergence of this process, in the

example, as no information is specified, default values are used (i.e. convergence stopped at

20 iterations, or logProb variation below epdsilon 0.0001)

Master Sistemas de Interacción Natural

13

A basic isolated-words ASR system (HTK V3.x)

The result of this step will generate new si, no and sil HMM models in the

.\Prueba\hmmsiniciales directory.

6.2 Training - STEP 2 - HREST

This second training step re-train the HMM models of the previous step. HRest also keeps the

time marks in the lab files, but now the state segmentation inside each label (unit) is not

uniform as it was in HInit. Now the state segmentation inside each label is done using the

HMM models.

The use of the HTK HRest tool is defined in the script file: EntrenaListaHRest.bat. This script

executes HRest THREE times, one for each HMM model: si, no, sil

HRest -T 1 -l si -L .\labels -M .\hmmhrest .\hmmsiniciales\si -S listatrain

HRest -T 1 -l no -L .\labels -M .\hmmhrest .\hmmsiniciales\no -S listatrain

HRest -T 1 -l sil -L .\labels -M .\hmmhrest .\hmmsiniciales\sil -S listatrain

Master Sistemas de Interacción Natural

14

A basic isolated-words ASR system (HTK V3.x)

The difference of this script compared to the one for HInit are the input and output directories

for the HMM models. Now the inputs are the HInit-generated models, in hmmsiniciales, and

the output is stored in the hmmhrest directory.

The information HRest displays on the screen is similar to the one produced by HInit.

6.2 Training - Step 3 - HEREST

The last training step is the most powerful one as it trains in a join way ALL the HMM models.

This step only considers the transcriptions in the lab files BUT NOT the time marks. It could be

said that in some sense in the HERest HTK tool ALL the time marks are obtained using the

HMM models.

The script file to execute HERest is EntrenaListaHERest.bat. It can be seen that a single

execution of HERest re-trains all the three models (Note that more tan one use of HERest could

improve the trained HMM models)..

HERest -T 1 -L .\labels -M .\hmmherest -d .\hmmhrest -S listatrain listahmms

To use HERest is necessary to use a file listahmms containing the list of ALL the HMM models

to be trained (in this example: si, no y sil).

After the execution of EntrenaListaHERest.bat , the directory .\Prueba\hmmherest will contain

a single file called newMacros. This file contains ALL the training models:

~o

<STREAMINFO> 1 33

<VECSIZE> 33<NULLD><MFCC_D_A_0><DIAGC>

~h "no"

<BEGINHMM>

<NUMSTATES> 14

.....

<ENDHMM>

~h "si"

<BEGINHMM>

<NUMSTATES> 14

.....

<ENDHMM>

~h "sil"

<BEGINHMM>

<NUMSTATES> 14

.....

<ENDHMM>

Master Sistemas de Interacción Natural

15

A basic isolated-words ASR system (HTK V3.x)

This concludes the training process, so the next step will be to use the trained HMM models

for speech recognition.

7.- Define the Speech Recognition Task and Test-it

The use of a set of trained HMM models in a Speech Recognition systems is generally much

more complex that what we will see in this tutorial. But we will see all the necessary

components in a simplified way.

The two main components to define a Speech Recognition task are: The Dictionary (Word

Lexicon) and the Language Model. (again, see more details can be seen in Introduction to

Digital Speech Processing, Lawrence R. Rabiner1 and Ronald W. Schafer)

7.1 Dictionary(Word Lexicon)

The dictionary or word lexicón contains as information the list of words the system i sable to

recognize and HOW these words are defined in terms of the HMM models.

In this example the mapping from word to HMM model is straight, so the dictionary definition

is as simple as:

sil sil

si si

no no

BUT in more general cases where HMM of phonemes are used, each word should be described

in terms of the sequence of phonemes that represent it.

Master Sistemas de Interacción Natural

16

A basic isolated-words ASR system (HTK V3.x)

7.2 Language Models - Grammar

Together with the dictionary it is necessary to specify the sequence of words that the

recognizer will be able to recognize. The ways to define these possible sequences of words are

mainly two: 1) probabilistic models, or language models; 2) deterministic graphs of words, or

recognition grammars.

In our example we will use a simple grammar corresponding to a graph indicating that the

recognizer will optionally recognize a silence at the beginning and/or end of the pronunciation.

The grammar, as it is illustrated below, will be able to recognize “si”, “no” or “nothing” (i.e just

silence; we will discuss in class out-of-vocabulary OOV words)

de reconocimiento indica, principalmente, qué palabras o vocabulario va a ser capaz de

reconocer el sistema, y cómo quedan definidas esas palabras en función de los HMMs que

tiene el mismo.

En nuestro caso la relación palabra del vocabulario

HMM es directa, por lo que el fichero dic es

SI

muy simple:

SIL

SIL

sil silsi si

no no

NO

To design grammars HTK allows to use a human-readable syntax that needs to be compiled in

a more complex-to-read grapsh format (nodes and arcs).

The human-readable description of the previous grammar can be:

$comando = si | no;

( {sil} [ $comando ] {sil} )

The label “comando” represents “si” OR “no” (i.e. in parallel);

{} defines zero or more repetitions (the sil model can be skipped or repeated);

[] defines zero or one repetition (so “si” or “no” can be recognized only one time but

can also be skipped)

Once the grammar is saved in a text file, in our example the text file “gram” it MUST be

compiled or parsed using the HParse HTK tool, as:

HParse gram red

Master Sistemas de Interacción Natural

17

A basic isolated-words ASR system (HTK V3.x)

This generates the file “red” containing the format needed by HVite, the HTK tool for

performing recognition (as we will see in the next section).

File “red” :

VERSION=1.0

N=8

L=12

I=0

W=sil

I=1

W=!NULL

I=2

W=no

I=3

W=si

I=4

W=sil

I=5

W=!NULL

I=6

W=!NULL

I=7

W=!NULL

J=0

S=1

E=0

J=1

S=5

E=0

.......

You can try different variations in the grammar to test the recognition of different utterances

containing “si” and “no”.

7.3 Recognition Tests

Once we have the acoustic HMM models:

the list of models are in: listahmms ;

the HMM models are in ./hmmherest\newMacros

the dictionary (file dic), and the recognition network (file red)

we can perform some recognition tests, both over wav files(off-line) and over the microphone

audio input (on-line).

7.3.1 Off-line Tests

The HTK tool for recognition is HVite. HVite implements the Viterbi algorithm for an efficient

recognition process.

The script file Reconoce_auto.bat makes use of HVite to recognize two files already in *.mfc

format already used for training. As si_1.mfc y no_1.mfc have been used for training this is an

“auto test”, so the recognition should be without errors. The two executions for HVite in the

Reconoce_auto.bat script are:

HVite -w red -l .\recono -T 1 -H .\hmmherest\newMacros dic listahmms .\vcar\si_1.mfc

HVite -w red -l .\recono -T 1 -H .\hmmherest\newMacros dic listahmms .\vcar\no_1.mfc

Master Sistemas de Interacción Natural

18

A basic isolated-words ASR system (HTK V3.x)

Note that together with the mfc files, the use of HVite includes, –l .\recono , to set the

directory name where the recognition results will be stored. For each recognized file a .rec file

is generated with a format similar to .lab files but including the logProbability obtained by

HVite.

For example, the file .\recono\si_1.rec

0 4000000 sil -2632.982422

4000000 7200000 si -1941.103882

7200000 9800000 sil -1721.177368

NOTE that in order to test other recordings not used for training we must:

1. Record an audio file (.wav 16 KHz y 16 bit): for example: prueba.wav

2. Process this audio file using HCopy: prueba.mfc, and the same conf file used for

training.

3. Run HVite as above but on prueba.mfc

The script file ReconocePr.bat process a wav file called prueba.wav stored in .\sonidos and

generates both the .\vcar\prueba.mfc parameters file and the .\recono\prueba.rec recognized

file.

To do a more “formal” evaluation of a recognizer it should be necessary to record a bigger

Data Base and then to perform an analysis of the recognition errors. HTK includes an

evaluation tool called HResults (see the htkbook).

7.3.2 On-line Tests

Another possibility HTK offers is to make on-line tests directly from the audio acquired through

the PC microphone.

To that end also the HVite tool is used, but instead of specifying an input mfc file, you must use

–C trealconf , being trealconf a text file similar to the conf file used in HCopy, as the

parameterization of the input audio must be the same that we used for training.

HVite -w red -l .\recono -T 1 -H .\hmmherest\newMacros -C trealconf dic listahmms

The file trealconf is, thus, similar to conf but including an indication of real time input and the

need for using a voice activity detector.

For these real-time tests it is very important to have the recording conditions and voice activity

detector under control. To listen to the speech signal that is been received by the speech

recognizer it is very useful to activate the “echo” feature in HVite (–g switch in HVite).

HVite -g -w red -l .\recono -T 1 -H .\hmmherest\newMacros -C trealconf dic listahmms

Master Sistemas de Interacción Natural

19

A basic isolated-words ASR system (HTK V3.x)

There is also a framework over HTK to build simple interactive applications combining speech

recognition, text-to-speech and dialog management. This framework is the ATK Real-Time API

for HTK (http://htk.eng.cam.ac.uk/develop/atk.shtml).

Master Sistemas de Interacción Natural

20