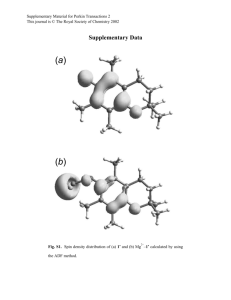

Supplementary figures


Supplementary Methods

Procedure

All tasks were presented using E-Prime 2.0 (Psychology Software Tools) on a laptop running Windows XP. For XG, testing was done over four 2-hour sessions at his residence. Each task was administered twice, in two different testing sessions, to ensure that behavior was replicable across days. Like XG, elderly controls completed two iterations of each task in four 2-hour sessions. Control testing was done at the University of Pennsylvania. In all cases, new stimuli were used when a task was repeated to prevent carryover learning effects.

In all but one task (the Probabilistic Selection Task), all participants (including XG) made incentive-compatible decisions and were able to earn additional performance-based compensation. At the end of each testing session, participants were paid for participation ($15/hour) and for their performance in one randomly chosen task. Only one task was chosen, rather than paying for all tasks, so that participants had an incentive to do well on all tasks (e.g., doing well on the first few tasks alone did not guarantee a good payment) and so that the compensation in a single task was at meaningful levels (e.g., each trial is worth $0.05 as opposed to less than a penny). Participants were fully informed of these compensation contingencies before each testing session.

Tasks

Weather prediction task

The Weather Prediction Task is a probabilistic classification task often used to test implicit category learning. Because patients with Parkinson’s disease are impaired on this task, it has been argued that the striatum is necessary for this kind of learning (Knowlton et al., 1996; Shohamy et al., 2004). This task aims to evaluate XG’s ability to learn and combine stimulus values in a probabilistic learning environment without reversals. This task requires participants to predict “rain” or “shine” based on combinations of one to three cues, which look like playing cards (Supplementary Figure 5).
We used a modified version of this task (Shohamy et al., 2004) to prevent any possibility of a floor effect in performance. In this modified version, there are only four cues in total. Each cue is associated with a fixed conditional probability of “rain”, and the probability of “rain” for a given combination of cues is the product of the individual cues’ conditional probabilities. Both outcomes were equally likely across 200 testing trials. All possible combinations of 1, 2, or 3 cues (14 total combinations) are pseudorandomized so that the same pattern does not appear consecutively. Two lists of 200 pseudorandomized trials are used, and the order in which the lists are used is counterbalanced across all participants for the two testing sessions.

On each trial, participants are shown a combination of 1, 2, or 3 cues in the center of the screen. Based on the combination of cues, participants decide between “rain” and “shine” by pressing a button on the keyboard. After each response, auditory feedback for correct (high-pitched ring) or incorrect (low-pitched buzzer) choices, as well as visual feedback (sun or rain cloud above the cue combination), is provided. If participants fail to respond within 2.5 seconds, “Answer Now” appears above the cue combination to indicate that a response is needed. If participants fail to respond within five seconds, the low-pitched buzzer is sounded and the trial is terminated.

A score bar, initiated at 200 points, is shown at the bottom of the screen to provide feedback on overall performance. Participants gain one point for each correct response and lose one point for each incorrect response. Each point has a monetary value of $0.02. Performance was measured as the percentage of trials on which participants chose the more likely answer, that is, whether their response (“rain” or “shine”) was the more likely one given the cue combination.
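As a rough illustration of the cue structure and the performance measure described above, the following Python sketch enumerates the cue patterns and scores a set of responses. The cue labels follow Supplementary Figure 5; the function names are hypothetical, and the rain probabilities passed to `percent_optimal` in any usage are illustrative values, not the ones used in the task. Skipping patterns with a rain probability of exactly 0.5 is an assumption of this sketch.

```python
from itertools import combinations

CUES = ("C1", "C2", "C3", "C4")  # the four cue cards


def cue_patterns(cues=CUES, max_size=3):
    """All combinations of 1 to max_size cues (order within a pattern is ignored)."""
    return [combo
            for size in range(1, max_size + 1)
            for combo in combinations(cues, size)]


def percent_optimal(trials, p_rain):
    """Percentage of trials on which the response was the more likely outcome.

    trials: list of (pattern, response), with response "rain" or "shine".
    p_rain: dict mapping pattern -> P(rain | pattern); illustrative values only.
    Patterns with P(rain) == 0.5 have no more likely outcome and are skipped
    (an assumption of this sketch).
    """
    scored = optimal = 0
    for pattern, response in trials:
        p = p_rain[pattern]
        if p == 0.5:
            continue
        scored += 1
        best = "rain" if p > 0.5 else "shine"
        optimal += response == best
    return 100.0 * optimal / scored if scored else float("nan")


# 4 single cues + 6 pairs + 4 triples
print(len(cue_patterns()))
```

The count printed by the last line corresponds to the 14 combinations mentioned in the text.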
Probabilistic selection task

The Probabilistic Selection Task has been used to study the mechanisms that govern learning from positive and negative feedback (Frank et al., 2004). It has been shown with this task that Parkinson’s patients on dopaminergic medication (such as levodopa) exhibit enhanced learning from positive feedback and reduced learning from negative feedback (Frank et al., 2004). Conversely, patients off medication exhibit reduced learning from positive feedback and enhanced learning from negative feedback. A computational model of learning was developed around the idea of dopamine bursts and dips in the striatum representing positive and negative reward prediction errors, respectively (Cohen and Frank, 2009). This task aims to evaluate XG’s ability to process positive and negative feedback and his ability to learn stimulus values in a probabilistic learning environment without reversals.

This task has two phases: a training phase and a test phase (Supplementary Figure 6). In the training phase, participants become familiar with three different pairs of Hiragana characters (AB, CD, and EF). The stimulus pairs are presented in a random order. On any given trial, only one pair of stimuli is shown, and the two characters are randomly assigned to two set locations (left and right of the central divide) on the computer screen. Participants respond by pressing a button to choose either the left- or right-positioned stimulus. Feedback is provided after every trial indicating whether the choice is correct or incorrect. Feedback of “Correct” or “Incorrect” is provided probabilistically: 80/20 for AB, 70/30 for CD, and 60/40 for EF. For example, choosing A leads to positive feedback on 80% of AB trials, while choosing B leads to negative feedback equally often. Conversely, choosing A leads to negative feedback on 20% of AB trials, while choosing B leads to positive feedback equally often.
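The probabilistic feedback schedule of the training phase can be sketched as follows. This is a minimal Python illustration, not the E-Prime implementation: the names `P_CORRECT`, `feedback`, and `draw` are hypothetical, and drawing the feedback independently on every call is an assumption.

```python
import random

# Probability that choosing the first-listed stimulus of each pair
# (A, C, or E) yields "Correct" feedback.
P_CORRECT = {"AB": 0.8, "CD": 0.7, "EF": 0.6}


def feedback(pair, choice, draw=random.random):
    """Return True for "Correct" feedback and False for "Incorrect".

    pair:   "AB", "CD", or "EF"
    choice: one of the two characters in the pair
    draw:   callable returning a number in [0, 1); replaceable for testing
    """
    p_first = P_CORRECT[pair]
    p = p_first if choice == pair[0] else 1.0 - p_first
    return draw() < p


# Example: relative frequency of "Correct" feedback when always choosing A.
n = 1000
print(sum(feedback("AB", "A") for _ in range(n)) / n)  # varies from run to run
```

Passing a fixed `draw` function makes the outcome deterministic, which is convenient for checking the schedule.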
Typically, participants complete training once criteria are met (at least 65% choices of A, 60% choices of C, and 50% choices of E). Criteria are evaluated after every 60 trials, with a maximum of 480 trials. However, since XG did not reach criteria during training, we retested control participants on a modified version of the task that removed the criteria during the training component. XG was tested twice on the task. Controls were tested once with criteria and twice without criteria. Different sets of Hiragana characters were used to limit possible learning effects across testing sessions. Performance during the training phase is measured by the participants’ choice of the richer stimulus from each pair of stimuli (stimuli A, C, and E).

After finishing the training phase, participants proceed to the test phase of the task, in which they are tested with new stimulus combinations involving A (AC, AD, AE, AF) and B (BC, BD, BE, BF), along with the three familiar combinations (AB, CD, EF). In this part of the task, participants are told to use the knowledge they gained about the individual stimuli from the training phase and/or use their “gut instincts” in order to choose the best option from each pair of stimuli. There are 66 trials during the test phase (11 stimulus pairs, 6 trials per pair). Participants are not given feedback during this phase to ensure that any choices made are a result of learning from the first part of the experiment. In this segment of the task, performance is measured as the percentage of trials on which participants choose A or avoid B in the novel pairs.

Crab game

The Crab Game is a dynamic foraging task that has been used to study reinforcement learning in Parkinson’s disease patients on and off levodopa, compared to healthy young adults and elderly controls (Rutledge et al., 2009).
This task aims to evaluate XG’s ability to engage in reinforcement learning in an environment with changing probabilities in which matching rather than maximizing is favored (Lau and Glimcher, 2008). In this dynamic foraging task, participants search for crabs in one of two static traps marked by a green and a red buoy (Supplementary Figure 7). Each buoy is attached to a cage visible only after a choice has been made. On any given trial, an individual cage can hold a maximum of one crab. Once a cage is baited with a crab, it remains armed until chosen. Buoys are baited on a variable-ratio reinforcement schedule of 6:1 with a total reward probability of 0.3 (i.e., the participant can find a maximum of 12 crabs every 40 trials at the richer buoy). The identity of the richer buoy switches every 40 trials. Such variable-ratio schedules are often used to elicit matching behaviors in animals, since matching outperforms maximizing on such tasks (Lau and Glimcher, 2008).

Participants practice for 40 trials with no contingency reversals before beginning the dynamic foraging task for 320 trials. Participants are informed that no reversals will occur during the practice block. During the dynamic foraging task, block transitions are not signaled, and participants are informed that these reversals will occur periodically throughout the task. For this task, performance is measured as a participant’s probability of choosing the richer option.

Fish game

The Fish Game is a probabilistic reversal-learning task with rewards. In the Fish Game, each option is baited with a fixed probability on each trial, but uncollected rewards are not carried over between trials. Under these conditions, maximizing, rather than matching, optimizes performance. Thus, this task aims to evaluate XG’s ability to engage in reinforcement learning in an environment with changing probabilities in which maximizing rather than matching is favored.
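The difference between the two baiting rules can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the task code: the function `run_foraging`, its arguments, and the baiting probabilities in the usage lines are hypothetical; only the carry-over rule (an armed option stays armed until chosen in the Crab Game, and uncollected rewards are discarded in the Fish Game) is taken from the description above.

```python
import random


def run_foraging(choices, p_bait, carry_over, draw=random.random):
    """Simulate a two-option foraging task and return per-trial rewards (0 or 1).

    choices:    sequence of chosen options (0 or 1), one per trial
    p_bait:     per-trial baiting probability of each option (hypothetical values)
    carry_over: True  -> an armed option stays armed until chosen (Crab Game rule)
                False -> uncollected rewards are discarded each trial (Fish Game rule)
    draw:       callable returning a number in [0, 1); replaceable for testing
    """
    armed = [False, False]
    rewards = []
    for choice in choices:
        for option in (0, 1):
            # Each option holds at most one reward, so arming is a boolean.
            if draw() < p_bait[option]:
                armed[option] = True
        rewards.append(int(armed[choice]))
        armed[choice] = False  # the chosen option is emptied
        if not carry_over:
            armed = [False, False]
    return rewards


# Mostly choose option 0, with an occasional visit to option 1.
choices = [0, 0, 0, 1] * 10
crab_like = run_foraging(choices, (0.25, 0.05), carry_over=True)
fish_like = run_foraging(choices, (0.25, 0.05), carry_over=False)
print(sum(crab_like), sum(fish_like))  # counts vary from run to run
```

With carry-over, an occasional visit to the poorer option can collect a reward that accumulated while it was ignored, which is why sampling both options pays off under that rule but not when rewards are discarded.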
The Fish Game is adapted from the Crab Game (Supplementary Figure 8). In the Fish Game, participants can fish at either end of the lake. The options are marked by a red and a green fishing rod placed on the left and right ends of the lake, respectively. Participants practice for 40 trials with no contingency reversals before playing the game for 320 trials. Reward ratios, total reward probabilities, and reversal contingencies are the same as in the Crab Game. Performance is measured as a participant’s probability of choosing the richer option.

Bait game

The Bait Game is a variant of the Fish Game in which participants learn to avoid losses rather than collect gains. Thus, this task aims to evaluate XG’s ability to engage in probabilistic reversal learning based on punishment instead of reward. In this task, participants are fishing in a barren lake (Supplementary Figure 9). Although no fish can be caught, participants are told that a large fish is roaming the lake and can eat the bait off the hook if that rod is chosen. If the bait (image of a worm) is eaten, a replacement is automatically bought at a cost of $0.05. Participants are given $5.00 prior to testing and are told to minimize their losses during the task. For this task, the punishment ratio was 6:1 with a total punishment probability of 0.3. Participants practice for 40 trials with no contingency reversals before playing the game for 320 trials. Reversal contingencies are the same as in the Fish and Crab Games. Performance is measured as a participant’s probability of choosing the richer option.

Stimulus-value learning

This reversal-learning task has been used to differentiate stimulus-value from action-value representation in the ventromedial prefrontal cortex (Glascher et al., 2009). This task aims to cleanly evaluate XG’s ability to learn stimulus values in a probabilistic learning environment with reversals.
In this task, participants choose between two distinct fractal stimuli, which are positioned randomly at two static locations (left and right of the central white dot) on screen (Supplementary Figure 10). On each trial, participants respond by pressing a button on a keyboard to choose between the two fractals. Positive feedback is provided if a fractal is armed with a reward (picture of a coin); otherwise, negative feedback is provided (red X overlaying a coin; no coin is found). The fractals are probabilistically rewarded, with the richer fractal rewarded 70% of the time and the poorer fractal rewarded 30% of the time. Participants are informed that on any given trial one fractal has a higher likelihood of delivering a reward and that this association reverses periodically throughout the task. Participants practice for 40 trials with no contingency reversals before beginning a full run of 320 trials. Each reward (coin) has a monetary value of $0.05. Performance is measured as a participant’s probability of choosing the richer option.

All participants were tested with two different iterations of the task. In the first iteration, reversals are dependent on performance (Glascher et al., 2009): after four consecutive choices of the richer fractal, a contingency reversal occurred with a probability of 0.25 on every consecutive trial. If the run of consecutive choices of the richer fractal is broken before a reversal occurs, the reversal criterion is reset. Because this criterion makes reversals dependent on correct choices, block lengths vary among participants. In order to have more comparable data between XG and controls for this task, we also tested participants on a modified version of the task with a fixed number of blocks and transition points (8 equal blocks of 40 trials, 7 transition points). New fractals were used for each testing session.
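The two reversal schedules can be sketched in Python as follows. This is an illustration only: the function names and the `draw` argument are hypothetical, and the text does not specify whether the first reversal draw happens on the fourth consecutive richer choice or on the trial after it; the sketch assumes the former.

```python
import random


def performance_dependent_reversals(chose_richer, run_length=4, p_reverse=0.25,
                                    draw=random.random):
    """Return the 0-based indices of trials after which the contingencies reverse.

    chose_richer: sequence of booleans, True when the currently richer
                  option was chosen on that trial.
    """
    reversals = []
    streak = 0
    for trial, correct in enumerate(chose_richer):
        # Choosing the poorer option resets the reversal criterion.
        streak = streak + 1 if correct else 0
        if streak >= run_length and draw() < p_reverse:
            reversals.append(trial)
            streak = 0  # counting starts again in the new block
    return reversals


def fixed_reversals(n_trials=320, block_length=40):
    """0-based indices of the first trial of each new block (fixed schedule)."""
    return list(range(block_length, n_trials, block_length))


print(fixed_reversals())  # 7 transition points separating 8 blocks of 40 trials
```

In the performance-dependent version the number and position of reversals depend on the participant's choices, which is why block lengths are not comparable across participants.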
Action-value learning

This reversal-learning task has been used to differentiate action-value from stimulus-value representation in the ventromedial prefrontal cortex (Glascher et al., 2009). This task aims to cleanly evaluate XG’s ability to learn action values in a probabilistic learning environment with reversals. In this task, participants decide between two actions on a trackball mouse, either clicking the left mouse button with the index finger or swiping the trackball upward with the thumb (Supplementary Figure 11). The richer action is rewarded 70% of the time and the poorer action is rewarded 30% of the time. Similar to the stimulus-value learning task, participants are informed that on any given trial one action provides a higher likelihood of receiving a reward and that this association reverses periodically throughout the task. Participants practice for 40 trials with no contingency reversals before beginning a full run of 320 trials. Each reward (coin) has a monetary value of $0.05. Performance is measured as a participant’s probability of choosing the richer option. Again, all participants are tested on two iterations of the task with different reversal contingencies, one with choice-dependent transitions and one with fixed transitions.

For the action-value and stimulus-value learning tasks, we report in the main manuscript the data from the version of the task with fixed reversal contingencies. We observed the same dissociation in the version of the task with choice-dependent reversal contingencies (Supplementary Figure 12).

Statistical tools

In all tasks, binomial probability tests were used to compare XG’s performance against chance.
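A binomial test against chance can be sketched with the Python standard library as shown here. The function name is hypothetical, and two details are assumptions of the sketch rather than statements from the text: the test is one-sided (performance above chance), and chance is taken to be 0.5 because the tasks involve two options.

```python
from math import comb


def binomial_p_at_least(successes, trials, p_chance=0.5):
    """Exact one-sided binomial p-value: P(X >= successes) under chance."""
    return sum(comb(trials, k) * p_chance**k * (1.0 - p_chance)**(trials - k)
               for k in range(successes, trials + 1))


# Hypothetical example: 200 choices of the richer option in 320 trials.
print(binomial_p_at_least(200, 320))
```

A small p-value indicates that the observed number of richer choices would be unlikely if the participant were responding at chance.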
To compare XG’s performance against matched controls, we used a modified t-test specifically designed for case studies (Crawford and Howell, 1998):

$$t = \frac{X_1 - \bar{X}_2}{S_2 \sqrt{\dfrac{N_2 + 1}{N_2}}}$$

In the above formula, $X_1$ = patient’s score, $\bar{X}_2$ = mean of normative sample, $S_2$ = standard deviation of normative sample, and $N_2$ = size of normative sample. The degrees of freedom, $\nu$, are $N_1 + N_2 - 2$, which reduces to $N_2 - 1$ because $N_1 = 1$ for a single patient. We used the t-distribution to estimate what percentage of the normative sample is likely to exhibit the same score as XG at an error rate of 5%. This approach does not overestimate the rarity of the patient’s score and is more conservative than the typical method of using a z-score. Reported t-tests are all one-sided, based on the a priori assumption that the patient will perform worse than the normative sample.

Choice data from the action-value and stimulus-value learning tasks were fit with a linear regression in MATLAB to estimate the influence of past rewards and past choices on current choices. This model includes terms for the past 5 rewards (t1–t5) and the last choice (c).

Data were also fitted with a 3-parameter reinforcement learning model. Inputs to this model are the sequences of choices and outcomes from each participant. The model assumes that choices are a function of the participant’s perceived value of each option ($V_1$ and $V_2$) on every trial. Values are initiated at zero at the beginning of the experiment and are updated with the following rule (where $V_1(t)$ is the value of option 1 at trial $t$):

$$V_1(t+1) = V_1(t) + \alpha\,\delta(t) \qquad (1)$$

$$\delta(t) = R_1(t) - V_1(t) \qquad (2)$$

Here, the value of the chosen option is updated by $\delta(t)$, the reward prediction error, which is the difference between the reward experienced and the reward expected. $R_1(t)$ is a binary vector of experienced reward (1 = reward, 0 otherwise) from choosing option 1 on trial $t$. For each trial, the reward prediction error is discounted by $\alpha$, the learning rate.
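The update rule in equations 1 and 2 can be sketched in Python as follows. This is a minimal illustration, not the MATLAB code used for model fitting; the function names and the option labels 1 and 2 are hypothetical.

```python
def update_value(value, reward, alpha):
    """One application of equations 1 and 2 to the chosen option."""
    delta = reward - value          # reward prediction error (equation 2)
    return value + alpha * delta    # value update scaled by the learning rate (equation 1)


def run_values(choices, rewards, alpha):
    """Track the values of options 1 and 2 over a sequence of trials.

    choices: sequence of chosen options (1 or 2)
    rewards: sequence of outcomes (1 = reward, 0 otherwise)
    Values start at zero, and only the chosen option is updated on each trial.
    """
    values = {1: 0.0, 2: 0.0}
    for choice, reward in zip(choices, rewards):
        values[choice] = update_value(values[choice], reward, alpha)
    return values[1], values[2]


# The same outcomes with a low and a high learning rate.
print(run_values([1, 1, 1], [1, 1, 0], alpha=0.1))
print(run_values([1, 1, 1], [1, 1, 0], alpha=0.9))
```

Running the same choice and outcome sequence with different values of `alpha` shows how strongly the most recent outcome moves the value estimate.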
If the learning rate is low ($\alpha$ close to 0), values are changed very slowly. If the learning rate is high ($\alpha$ close to 1), values are updated very quickly, and recent outcomes have a much greater influence than less recent outcomes. The value for the unchosen option ($V_2$ in this example) is not updated. Given $V_1$ and $V_2$, the model then computes the probability of choosing option 1, $P_1(t)$, by the following logit regression model:

$$P_1(t) = \frac{1}{1 + e^{-z}} \qquad (3)$$

$$z = \beta\,(V_1(t) - V_2(t)) + c \qquad (4)$$

In equation 4, the inverse noise parameter $\beta$ is the regression weight connecting the values of each option to the choices, and $c$ is a constant term that captures a bias toward one or the other option.

Likelihood ratio tests are used to compare the full model (all three parameters $\alpha$, $\beta$, and $c$) to a reduced model with no learning (only $c$). The test statistic has a chi-squared distribution with degrees of freedom equal to the difference in the number of parameters for the two models, so that model complexity is accounted for. We also used Bayesian Model Comparison, computing Bayesian Information Criterion scores for each model, which penalize for the number of parameters. The model was fit to the data by the method of maximum likelihood using the optimization toolbox in MATLAB. This reinforcement learning model was also fit to data in the Crab, Fish, and Bait Games. We could not fit the model to the learning phase of the Probabilistic Selection Task, due to a coding error in which data were saved in that task.

References

Cohen MX, Frank MJ. Neurocomputational models of basal ganglia function in learning, memory and choice. Behav Brain Res 2009; 199: 141-56.
Crawford JR, Howell DC. Comparing an individual's test score against norms derived from small samples. The Clinical Neuropsychologist 1998; 12: 482-6.
Glascher J, Hampton AN, O'Doherty JP. Determining a role for ventromedial prefrontal cortex in encoding action-based value signals during reward-related decision making.
Cerebral Cortex 2009; 19: 483-95.
Frank MJ, Seeberger LC, O'Reilly RC. By carrot or by stick: Cognitive reinforcement learning in Parkinsonism. Science 2004; 306: 1940-3.
Knowlton BJ, Mangels JA, Squire LR. A neostriatal habit learning system in humans. Science 1996; 273: 1399-402.
Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron 2008; 58: 451-63.
Rutledge RB, Lazzaro SC, Lau B, Myers CE, Gluck MA, Glimcher PW. Dopaminergic drugs modulate learning rates and perseveration in Parkinson's patients in a dynamic foraging task. J Neurosci 2009; 29: 15104-14.
Shohamy D, Myers CE, Onlaor S, Gluck MA. Role of the basal ganglia in category learning: how do patients with Parkinson's disease learn? Behavioral Neuroscience 2004; 118: 676-86.

Supplementary Tables

Supplementary Table 1: Neuropsychological evaluation

| Ability Tested | Test Name | Score |
| --- | --- | --- |
| Dementia | MMSE | 28/30 |
| Premorbid IQ | AMNART Revised | 111.0 |
| Cognitive Ability | WAIS-III Info Subscale | 12 (mean = 10, SD = 3) |
| Visual Acuity | Rosenbaum vision screener | OD 20/25 -2, OS 20/30 -2 |
| Contrast Sensitivity | Cambridge Low Contrast Gratings | OD 28, OS 29 (mean = 28.4, SD = 6.5) |
| Color Vision | Ishihara Color Vision Deficiency Test | 38/38 |
| Intermediate Vision and Spatial Location | Visual Object and Space Perception Battery | |
| | Shape Detection Screening | 20/20 |
| | Test 1, Incomplete Letters | 20/20 |
| | Test 5, Dot Counting | 10/10 |
| | Test 6, Position Discrimination | 19/20 |
| | Test 7, Number Location | 10/10 |
| Visuospatial Memory | Brief Visuospatial Memory Test – Revised | Percentiles: |
| | Trial 1 | 54% |
| | Trial 2 | 96% |
| | Trial 3 | 92% |
| | Total Recall | 88% |
| | Learning | 90% |
| | Delayed Recall | 93% |
| Executive Function | Wisconsin Card Sort – 64 | Percentiles: |
| | Total Errors | 93% |
| | Perseverative Responses | 87% |
| | Perseverative Errors | 92% |
| | Non-perseverative Errors | 66% |
| | Conceptual Level Responses | 86% |
| Executive Function | D-KEFS Tower Test | Percentiles: |
| | Total Achievement Score | 50% |
| | Total Rule Violation | 46% |
| | Mean First-Move Time | 84% |
| | Time-Per-Move Ratio | 37% |
| | Move Accuracy Ratio | 16% |
| | Rule-Violation-Per-Item Ratio | 50% |

Supplementary Table 2: Testing schedule

| Day | Tasks |
| --- | --- |
| Day 1 | Probabilistic Selection¹, Stimulus-Value Learning³, Action-Value Learning³, Fish Game, Crab Game |
| Day 2 | Action-Value Learning³, Stimulus-Value Learning³, Crab Game, Fish Game, Bait Game, Probabilistic Selection² |
| Day 3 | Probabilistic Selection², Stimulus-Value Learning⁴, Action-Value Learning⁴, Bait Game, Weather Prediction |
| Day 4 | Action-Value Learning⁴, Stimulus-Value Learning⁴, Weather Prediction |

¹ With criteria
² Without criteria
³ Dynamic reversal blocks (performance-dependent reversals)
⁴ Static reversal blocks (defined reversal points)

Supplementary figures

Supplementary Figure 1. Photograph showing dystonic posturing of XG’s hands.

Supplementary Figure 2. Median response times for XG and healthy controls across five tasks. XG was significantly slower than controls during the Bait Game ($t_{10} = 2.15$, P = 0.028). Error bars denote standard deviation.

Supplementary Figure 3. XG and healthy controls’ performance in the training phase of the Probabilistic Selection Task. (A) Plotted is the probability of choosing the richer option given a specific left-right configuration (e.g., AB means symbol A on the left and B on the right, BA means vice versa). XG only performed at above chance levels for one left-right configuration of each stimulus pair (BA, DC, and FE, respectively) and not for the other (AB, CD, and EF, respectively). XG was significantly impaired at choosing the richer option for the AB configuration ($t_{10} = -2.830$, P = 0.009) and the CD configuration ($t_{10} = -3.047$, P = 0.006). (B) Plotted is the probability of repeating the choice of the richer option conditional upon the same (‘consistent’) left-right configuration (e.g., $AB_{t-1}$, $AB_t$) or different (‘inconsistent’) left-right configuration (e.g., $AB_{t-1}$, $BA_t$).
XG was impaired relative to controls for the inconsistent configuration of AB trials ($t_{10} = -2.84$, P = 0.009) as well as the inconsistent configuration of CD trials ($t_{10} = -2.923$, P = 0.008), and did not repeat the choice of the richer option at greater than chance levels in these configurations. XG was also impaired relative to controls for the consistent configuration of AB trials ($t_{10} = -1.93$, P = 0.041). Error bars denote standard deviation.

Supplementary Figure 4. XG and healthy controls’ performance as a function of time across all tasks. In each figure, performance is shown broken down into blocks of 40 trials. In the bottom row, these blocks correspond to reversals in the reward contingencies. In the top row, these blocks only correspond to time, as there are no reversals of the reward contingencies in the Weather Prediction or Probabilistic Selection Tasks. In the tasks that require the learning of stimulus values (Weather Prediction, Probabilistic Selection, Stimulus-Value Learning), XG’s performance is impaired from the first block. In the tasks without reversals (top row), XG’s performance does not show a clear decline with time, as would be indicative of a problem with the long-term stability of values or maintaining set during learning. In the tasks with reversals (bottom row), XG’s performance also does not show a clear decline with time, as would be indicative of a problem that is specific to reversal learning as opposed to initial learning.

Supplementary Figure 5. Weather Prediction Task. (A) The task used four individual cues (C1–C4). (B) Each trial presents one of the fourteen different combinations of 1–3 cues from A. (C) Probability table for rain vs. shine for each card combination pattern.

Supplementary Figure 6. Probabilistic Selection Task. In the training phase, three pairs of stimuli are presented and the stimuli are probabilistically rewarded at different rates (reward probabilities are shown in parentheses).
In the testing phase, novel pairs of stimuli are presented without feedback. These novel pairs include all possible combinations of the stimulus most associated with positive feedback (A: AC, AD, AE, AF) and the stimulus most associated with negative feedback (B: BC, BD, BE, BF).

Supplementary Figure 7. Sequence of events across trials in the Crab Game.

Supplementary Figure 8. Sequence of events across trials in the Fish Game.

Supplementary Figure 9. Sequence of events across trials in the Bait Game.

Supplementary Figure 10. Sequence of events across trials in the Stimulus-Value Learning Task.

Supplementary Figure 11. Sequence of events across trials in the Action-Value Learning Task.

Supplementary Figure 12. (A,B) XG and healthy controls’ performance for the stimulus-value learning (A) and action-value learning tasks (B), in which reversals were dependent on performance. Plotted is the probability of choosing the richer option averaged around all transitions (i.e., reversals of the reward contingencies). Vertical dashed line denotes transition. Horizontal line denotes chance performance. Data smoothing kernel = 11. Similar to the versions of these tasks with fixed transitions presented in the main manuscript, XG was impaired at stimulus-value learning but performed well in action-value learning.