Employers' feedback on completers' performance

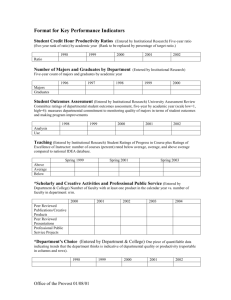

h.1 Employer Feedback on Graduates and Summaries of the Results

Précis: Mean ratings since 2005-2006 indicate program improvement from initial “good” to “very good” ratings in content area knowledge and in general and content specific teaching methods, with specific strengths noted in professional behavior and ethics and in addressing issues of diversity. Mean ratings since 2007-2008 suggest that candidates are as well prepared as, or better prepared than, employees from other programs. Issues related to ratings in assessing learning are being addressed through program and assessment changes such as the Teacher Performance Assessment, co-teaching to emphasize assessment and differentiation, and the use of an analysis of student work in methods courses. Inconsistent reports between mentors and employers related to infusing technology are being addressed through conversations with faculty members to modify course content. Classroom management findings will continue to be explored with partnership panels and mentor teachers, and through our emphases on increasing engagement and differentiation. Since 2009-2010 graduates have performed well on general education competencies as rated by employers. Despite various efforts and consultation with our professional community, response rates are not what we would desire.

The educator preparation unit recognizes that schools and school districts are stakeholders in our efforts to improve the outcomes for students with whom our graduates work. During the 2003-2004 academic year, we mailed brief survey forms to principals and superintendents that we knew had employed our graduates. Too few of these surveys were returned (4 responses) to aggregate or report meaningfully. During the 2004-2005 academic year, we brought the question of low response to our Partnership Panel, an advisory board designed to provide additional stakeholder participation in the design, implementation, and evaluation of our field experiences.
In these and program-specific advisory group meetings, the need for a revised survey and a means for distribution and collection was discussed. In 2005-2006, several strategies suggested by these groups were employed to increase participation in the survey. In addition, discussions took place as to whether the survey should be annual or biannual. Beginning in 2007-2008 the survey was administered annually.

In our effort to increase responses, we identified principals of schools and superintendents of districts who were reported as employers of our graduates on the Ohio Department of Job and Family Services (ODJFS) Graduate Employment Report. We also used a state Teacher Quality Performance measure follow-up study database to identify additional principals of schools in which our graduates worked. The results of these efforts were not what we hoped:

- Of the 43 sent to principals on the ODJFS listing, 12 were received (28%).
- Of the 25 sent to superintendents on the ODJFS listing, 3 were received (12%).
- Of the 102 sent to principals on the Teacher Quality Performance follow-up list, 18 were received (18%).

Overall, 170 surveys were sent, and 33 were received (19%).

During the 2007-2008 academic year, the University of Cincinnati opted out of continuing the Teacher Quality Partnership survey. That year, we began emailing a link to a survey to principals and superintendents named on the ODJFS listings. Fifteen were returned (25%). However, feedback from principals and superintendents at Partnership Panel meetings indicated that they were “always getting surveys.” Beginning in 2008-2009, surveys were distributed at the partnership meetings, with postage-paid envelopes and a request to complete and return the survey. Limited responses were returned.
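The response rates listed above follow directly from the sent and received counts. As a minimal sketch of that arithmetic (the group labels, variable names, and the `response_rate` helper are illustrative assumptions, not terms from the survey itself):

```python
# Surveys sent and received, as reported in the text above.
# Keys and names here are hypothetical labels chosen for illustration.
counts = {
    "principals (ODJFS listing)": (43, 12),
    "superintendents (ODJFS listing)": (25, 3),
    "principals (follow-up list)": (102, 18),
}


def response_rate(sent, received):
    """Return the response rate as a whole-number percentage."""
    return round(100 * received / sent)


# Per-group rates, then the overall rate from the summed counts.
rates = {group: response_rate(s, r) for group, (s, r) in counts.items()}
total_sent = sum(s for s, _ in counts.values())
total_received = sum(r for _, r in counts.values())
overall = response_rate(total_sent, total_received)

for group, rate in rates.items():
    print(f"{group}: {rate}%")
print(f"overall: {total_received} of {total_sent} ({overall}%)")
```

The overall rate is computed from the summed counts rather than by averaging the three group rates, since the groups differ in size.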
We continue to talk with principals, superintendents, members of the Partnership Panel, and community members of the University Council for Educator Preparation about strategies to increase responses.

The Survey and Aggregated Data

The survey was developed using phrases generated from institutional standards. In 2007-2008, at the suggestion of the Partnership Panel, an additional section comparing candidates to those from other programs was added. In 2009-2010 an additional set of questions, developed from the general education student outcomes, was added to gather stakeholder data. The overall ratings are provided in Table 1.7 T1.

Scale for quality of graduate in each area: 5 Excellent, 4 Very Good, 3 Good, 2 Fair, 1 Poor

Scale for comparison: 5 much stronger, 4 stronger, 3 about the same, 2 weaker, 1 much weaker

Table 1.7 T1 Employer Perceptions of Unit Program Completers

Ratings of observations of UC program completers/employees:

| Item | 2005-2006 | 2007-2008 | 2008-2009 | 2009-2010 | 2010-2011 |
|---|---|---|---|---|---|
| Content area knowledge | 3.97 | 4.36 | 4.18 | 3.86 | 4.69 |
| General teaching methodology | 3.91 | 4.18 | 4.11 | 3.79 | 4.65 |
| Content specific teaching methodology | 3.78 | 4.18 | 4.05 | 3.79 | 4.62 |
| Planning lessons and units | 3.88 | 4.27 | 3.96 | 3.79 | 4.38 |
| Delivering lessons to students | 3.72 | 4.36 | 3.93 | 3.57 | 4.38 |
| Assessing learning | 3.94 | 4.36 | 3.85 | 4.21 | 3.46 |
| Professional behavior and ethics | 3.56 | 4.60 | 4.17 | 3.93 | 4.77 |
| Classroom management | 3.84 | 4.45 | 3.83 | 3.64 | 3.86 |
| Infusing technology | 3.76 | 4.30 | 3.56 | 3.69 | 2.92 |
| Dealing with issues of diversity | 3.76 | 4.64 | 4.14 | 4.21 | 4.08 |
| Communication Skills | | | | 3.79 | 4.92 |
| Social Responsibility | | | | 4.50 | 4.92 |
| Critical Thinking | | | | 4.29 | 4.69 |
| Knowledge integration | | | | 3.64 | 3.92 |
| Information literacy | | | | 4.29 | 4.30 |

Comparison with grads of other programs:

| Item | 2005-2006 | 2007-2008 | 2008-2009 | 2009-2010 | 2010-2011 |
|---|---|---|---|---|---|
| Content area knowledge | | 3.36 | 4.29 | 3.14 | 3.15 |
| General teaching methodology | | 3.45 | 4.14 | 3.79 | 3.07 |
| Content specific teaching methodology | | 3.45 | 3.70 | 3.21 | 3.15 |
| Planning lessons and units | | 3.55 | 3.87 | 3.21 | 3.07 |
| Delivering lessons to students | | 3.45 | 3.35 | 3.14 | 3.23 |
| Assessing learning | | 3.36 | 3.57 | 3.21 | 3.23 |
| Professional behavior and ethics | | 3.55 | 3.34 | 3.36 | 3.07 |
| Classroom management | | 3.36 | 3.30 | 3.14 | 3.15 |
| Infusing technology | | 3.40 | 3.31 | 3.29 | 3.15 |
| Dealing with issues of diversity | | 3.50 | 3.27 | 3.86 | 4.23 |
| Communication Skills | | | | 3.21 | 3.15 |
| Social Responsibility | | | | 3.93 | 3.46 |
| Critical Thinking | | | | 4.14 | 3.07 |
| Knowledge integration | | | | 3.21 | 3.07 |
| Information literacy | | | | 3.14 | 3.07 |
| N | 33 | 15 | 18 | 14 | 13 |

Comparison items were added in 2007-2008 at the suggestion of the Partnership Panel. Communication Skills, Social Responsibility, Critical Thinking, Knowledge integration, and Information literacy were added in 2009-2010 to gather additional information regarding general education outcomes.

Analysis

The following charts include (a) the mean scores of University of Cincinnati graduates as judged by employers; and (b) the mean comparison of University of Cincinnati graduates with peers from other programs. Trend lines are provided for mean scores of University of Cincinnati graduates.

Scale for quality of graduate in each area: 5 Excellent, 4 Very Good, 3 Good, 2 Fair, 1 Poor

Scale for comparison: 5 much stronger, 4 stronger, 3 about the same, 2 weaker, 1 much weaker

[Chart: Content Area Knowledge, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.97, 4.36, 4.18, 3.86, 4.69. Compared to other programs (from 2007-2008): 3.36, 4.29, 3.14, 3.15.]

Mean ratings of content area knowledge show a positive trend between 2005 and 2011 as judged by employers. Mean ratings of comparisons with employees from other programs indicated that graduates were consistently judged to be at least as strong as employees from other programs; during 2008-2009 mean ratings were much stronger for University of Cincinnati graduates. Mean ratings of general teaching methods showed a positive trend as well; mean ratings suggested that our graduates were at least as strong as graduates from other programs.

[Chart: General Teaching Methods, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.91, 4.18, 4.11, 3.79, 4.65. Compared to other programs (from 2007-2008): 3.45, 4.14, 3.79, 3.07.]

[Chart: Content Specific Teaching Methods, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.78, 4.18, 4.05, 3.79, 4.62. Compared to other programs (from 2007-2008): 3.45, 3.70, 3.21, 3.15.]

[Chart: Planning Lessons and Units, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.88, 4.27, 3.96, 3.79, 4.38. Compared to other programs (from 2007-2008), as labeled on the chart: 3.45, 3.70, 3.21, 3.15.]

[Chart: Delivering Lessons to Students, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.72, 4.36, 3.93, 3.57, 4.38. Compared to other programs (from 2007-2008): 3.45, 3.35, 3.14, 3.23.]

Mean ratings suggest that content specific teaching methods, planning, and delivering lessons to students are strengths for our candidates; mean ratings demonstrate a mild positive advantage over employees from other programs.

[Chart: Assessing Learning, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.94, 4.36, 3.85, 4.21, 3.46. Compared to other programs (from 2007-2008): 3.36, 3.57, 3.21, 3.23.]

Mean ratings of graduates’ ability to assess learning have been inconsistent, with a negative trend line. Programs addressed this issue through a variety of strategies. Initial licensure programs initiated a pilot of the Teacher Performance Assessment in autumn 2009, requiring an analysis of student work and documentation of the findings of this assessment to differentiate instruction. Secondary and middle childhood education coursework was co-taught with general and special education faculty members, with a fresh emphasis on assessment and differentiation. We anticipate that in subsequent years we will see a positive change in employers’ ratings of graduates’ ability to assess learning in response to these program changes.

[Chart: Professional Behavior and Ethics, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.56, 4.60, 4.17, 3.93, 4.77. Compared to other programs (from 2007-2008): 3.55, 3.34, 3.36, 3.07.]

Data gathered from our candidates’ dispositions reports document that, for most programs, 98-100% of all candidates demonstrate strong professional behavior and ethics. This finding is confirmed by employers’ ratings of graduates in this area.

[Chart: Classroom Management, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.84, 4.45, 3.83, 3.64, 3.86. Compared to other programs (from 2007-2008): 3.36, 3.30, 3.14, 3.15.]

Employers consistently voice concern about classroom management at Partnership Panel meetings. Graduates, however, are demonstrating management comparable to that of employees from other programs. With the use of the Teacher Performance Assessment, increased emphasis on student engagement, and increased differentiation, we anticipate that ratings of classroom management will improve. However, we have encountered a subtheme of “controlling students” among some participants in these meetings. This “control” is inconsistent with our emphases on collaborative learning strategies and developing students’ voice. Further conversations may clarify expectations related to classroom management.

[Chart: Infusing Technology, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.76, 4.30, 3.56, 3.69, 2.92. Compared to other programs (from 2007-2008): 3.40, 3.31, 3.29, 3.15.]

Our mentor teachers indicate that candidates use a wide range of technology in their placements. The data from employers, however, are of great concern. We have also found that not all candidates have the technology skills necessary for the videotaping, compressing, and uploading of evidence for the Teacher Work Sample. During summer 2011, meetings were conducted with faculty members who teach this course to discuss these findings and concerns.
With the addition of the Information Technology program to the College of Education, Criminal Justice, and Human Services in Autumn 2011, there is an increased potential for instructional growth in technology.

[Chart: Dealing with Diversity, by survey year (2005-2006, 2007-2008, 2008-2009, 2009-2010, 2010-2011). UC Grads: 3.76, 4.64, 4.14, 4.21, 4.08. Compared to other programs (from 2007-2008): 3.50, 3.27, 3.86, 4.23.]

With the emphasis on culturally responsive teaching and developing a conversation of teaching and learning among candidates and students representing a wide range of diversity, we are pleased with employers’ recognition that our candidates are very strong both in their practice and in comparison with employees from other programs.

[Chart: General Education Outcomes, 2009-2010 and 2010-2011, for Communication Skills, Social Responsibility, Critical Thinking, Knowledge integration, and Information literacy (in that order). UC 2009-2010: 3.79, 4.50, 4.29, 3.64, 4.29. UC 2010-2011: 4.92, 4.92, 4.69, 3.92, 4.30. Others 2009-2010: 3.21, 3.93, 4.14, 3.21, 3.14. Others 2010-2011: 3.15, 3.46, 3.07, 3.07, 3.07.]

In 2009 we added items to the employer survey that address the general education student outcomes for the University of Cincinnati. Mean ratings suggest that candidates are both “very good” in these skill areas and as strong as or stronger than employees from other programs.