Chapter 2: Data Architecture - Center

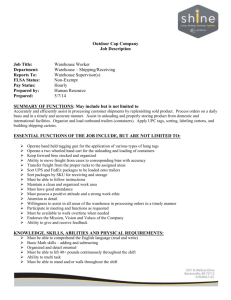
