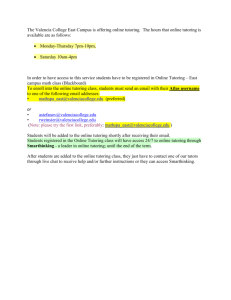

Learning - Community College of Philadelphia

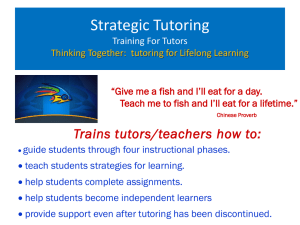
