Naropa Assessment of Non-Academic Support Units


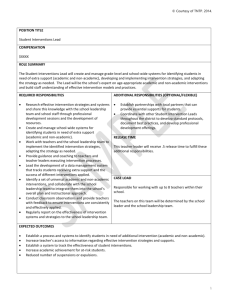

Annual Non-Academic Support Unit (NASU) Assessment Manual

For more information contact: Ted Lamb, Ph.D., Asst. Dean of Curriculum & Assessment, tlamb@naropa.edu, phone: 303-245-4617

Version 2.1, July 25, 2013

TABLE OF CONTENTS

I. Introduction
   Naropa Mission Statement
   Non-Academic Support Unit Mission Statement
II. Curricular Arc Outcomes
III. Assessment of Non-Academic Support Units
   Why Do Non-Academic Support Units Need to Conduct Assessment?
   What Are the Costs of Not Measuring Performance in Support Units?
   What are the Characteristics of Effective Assessment?
IV. Naropa Non-Academic Support Units
V. Naropa Non-Academic Support Unit Assessment Plan
   Review and Select
   Conduct
   Analyze Data & Prepare Assessment Report
   Feedback
VI. Support Unit Goals and Outcomes
   Goals
   How to develop unit outcomes
   Characteristics of good outcomes
   Examples of Some Problematic Unit Outcomes
   Checklist for a Unit Outcome
   Questions to Consider when Reviewing the Design of Outcomes
VII. Direct and Indirect Measures of Support
   Direct Measures
   Indirect Measures
   What Should an Assessment Measure Do?
VIII. Analyzing Your Assessment Data
   What to do
   The Skeptical Assessor
   Implementation-Closing the Loop
IX. Annual Assessment Report
X. WEAVEonline
References

APPENDICES

A. FAQs on Naropa Assessment of Non-Academic Support Units
B. How to Write a Mission Statement
C. Naropa WEAVEonline Assessment Worksheet
D. WEAVEonline Logging in and Logging Out
E. WEAVEonline Assistance

I. INTRODUCTION

Naropa University assesses each of its non-academic programs/departments yearly in order to ensure that university, program, and course level goals and outcomes are being achieved and continually improved. This is a primarily internal process in which data are gathered by a Support Unit WEAVE Lead (SUWL) as well as support unit staff. External input may be solicited from campus personnel not in the support unit, alumni, or other individuals or organizations, but the process is largely internal.
We use WEAVEonline as the software platform to input all of our assessment data, outcomes, reports, measures, maps, plans, etc. Training and use of WEAVEonline will begin in fall 2013.

Assessment of non-academic support units is not evaluative in the sense that departments are not held accountable for failure or success, so there should be no fear of failure if results show areas where there is a need for improvement. In fact, that is one of the reasons for doing assessment: finding areas that need improvement! The departments are held accountable, however, for actually having in place a process for stating mission and outcomes, establishing targets for achievement of outcomes, measuring accomplishments, and using the results to improve their services to the Naropa community.

This manual includes a variety of guidelines for assessing your support unit or department and serves as a tool for us to achieve clarity and some degree of uniformity in the complex area of assessment at a complex and unique institution. All assessment at Naropa begins with the basic mission statement in mind.

Naropa University Mission Statement. Inspired by the rich intellectual and experiential traditions of East and West, Naropa University is North America's leading institution of contemplative education. Naropa recognizes the inherent goodness and wisdom of each human being. It educates the whole person, cultivating academic excellence and contemplative insight in order to infuse knowledge with wisdom. The university nurtures in its students a lifelong joy in learning, a critical intellect, the sense of purpose that accompanies compassionate service to the world, and the openness and equanimity that arise from authentic insight and self-understanding.
Ultimately, Naropa students explore the inner resources needed to engage courageously with a complex and challenging world, to help transform that world through skill and compassion, and to attain deeper levels of happiness and meaning in their lives. Drawing on the vital insights of the world's wisdom traditions, the university is simultaneously Buddhist-inspired, ecumenical and nonsectarian. Naropa values ethnic and cultural differences for their essential role in education. It embraces the richness of human diversity with the aim of fostering a more just and equitable society and an expanded awareness of our common humanity. A Naropa education, reflecting the interplay of discipline and delight, prepares its graduates both to meet the world as it is and to change it for the better.

Non-Academic Support Unit Mission Statement. Each non-academic support unit also has a mission that should be carefully and artfully articulated. Some units already have such mission statements. For those units that do not have a mission statement there is a guide in Appendix B to assist in developing your unit’s unique mission statement.

II. CURRICULAR ARC OUTCOMES

Naropa has a set of outcomes that describe the broad picture towards which all the diverse teaching and learning activities at the University are directed. These are called Curricular Arc Outcomes. The University also has outcomes for individual programs (e.g., BA Peace Studies and MA Environmental Leadership). Every course also has learning outcomes established for that course that assist students and faculty in guiding and assessing learning activities.

It would be useful and instructive for staff as well as faculty and students to know how the wide range of critical work and activities in the non-academic support units enable and are linked to the achievement of curricular arc outcomes. This can be accomplished in the WEAVEonline software platform fairly easily.
As support unit outcomes are defined in WEAVE they can be linked to the curricular arc outcomes to illustrate how the efforts of the support units directly create and maintain the organizational and environmental structures and climate to accomplish the Naropa mission. The following is a list of Curricular Arc Level Outcomes, which can also be found on MyNaropa, where they are further articulated with descriptions of Introductory, Milestone, and Capstone types of course coverage.

1. COMPETENCY IN CONTEMPLATIVE THEORY AND PRACTICE
Graduates cultivate unbiased awareness and presence of self, insight and clarity of mind, and compassionate practice.
   1.A. THE RELATIONSHIP OF PERSONAL IDENTITY AND CONTEMPLATIVE EDUCATION
   1.B. APPLYING CONTEMPLATIVE PRINCIPLES
   1.C. MINDFULNESS AWARENESS PRACTICE

2. SKILLFULNESS IN ADDRESSING DIVERSITY AND ECOLOGICAL SUSTAINABILITY
Graduates are able to think critically and analytically about social and cultural diversity; they recognize the interconnectedness of the human community to ecological sustainability and cultivate sustainable practices.
   2.A. ECOLOGICAL RELATIONSHIPS AND SUSTAINABILITY AWARENESS
   2.B. DIVERSITY AND SYSTEMS OF PRIVILEGE AND OPPRESSION

3. ABILITY TO EMPLOY MULTIPLE MODES OF INQUIRY, KNOWING, AND EXPRESSION
Graduates are able to think, read, and write analytically and critically; use academic research methodology; utilize library resources and technical media. Graduates understand and are able to employ the contribution of the arts to human inquiry, knowing and expression.
   3.A. CRITICAL THINKING & QUESTIONING
   3.B. RESEARCH SKILLS
   3.C. ARTS, EMBODIMENT, AND AESTHETIC PERCEPTION
   3.D. WRITTEN COMMUNICATION
   3.E. ORAL COMMUNICATION

4. EMBODY INTRA- AND INTER-PERSONAL CAPACITIES
Graduates are able to effectively communicate as individuals and in collaboration with others through empathetic listening and inquiry, embodied deep listening and dialogue, and intercultural competency in diverse groups.
   4.A. INTRA-PERSONAL CAPACITIES
   4.B. INTER-PERSONAL CAPACITIES

5. DEMONSTRATE KNOWLEDGE AND SKILL IN A DISCIPLINE OR AREA OF STUDY
Graduates develop a comprehensive understanding of both foundational and advanced concepts and methods in their area of study; build awareness of contemporary issues; and demonstrate the ability to apply, synthesize or create knowledge through a capstone project or paper.
   5.A. KNOWLEDGE & SKILL

6. APPLY LEARNING IN REAL WORLD SETTINGS
Graduates are able and inclined to engage real-world challenges and work ethically and effectively across diverse communities.
   6.A. ETHICALLY ENGAGE REAL-WORLD CHALLENGES
   6.B. CONNECTION TO VOCATION AND CAREER PATH

III. ASSESSMENT OF NON-ACADEMIC SUPPORT UNITS

Why do administrative units need to conduct assessment?

Assessment can be defined as the systematic and ongoing method of gathering, analyzing, and using information from various sources about a support unit in order to improve institutional and student support services as well as student learning. Assessment, as it is addressed in this manual, relates to measuring critical processes in order to gather data that provide information about how the institution is meeting stakeholders’ needs and expectations. It is not about evaluation of individual performance but instead about how programs/departments are meeting the needs of a smoothly running Naropa University.

A major benefit of measuring performance among administrative support services is that it provides the basis by which the institution’s employees can gain a sense of what is going wrong and what is going right within the organization. This process ultimately establishes direction for improving quality and constituent satisfaction.

What are the costs of not measuring performance in administrative units?
• Decisions based on assumptions or guesses rather than being “data driven”
• Failure to meet customer expectations
   o Reliability
   o Efficiency
   o Quality
   o Cost
   o Delivery
• Failure to identify potential improvement areas
• Failure to identify areas that are succeeding quite well
• Lack of optimum progress towards accomplishing the Naropa mission

What are the Characteristics of Effective Assessment?

Effective support unit assessment should answer these questions:

1. What are you trying to do?
2. How well are you doing it?
3. Using the answers to the first two questions, how can you improve what you are doing?
4. What and how does a support unit contribute to the development and growth of the students?
5. How can the student learning experience be improved? (Hutchings, 2010)

As we look at the whole picture of assessment, we know that there is a core set of principles articulated in the Naropa mission statement and the Curricular Arc Outcomes that drive the entire range of activities in and out of the classroom. Assumptions about assessment at Naropa can be summarized as follows:

• The ultimate goal of good assessment is to improve student learning
• Assessment requires clarity of purpose, goals, standards and criteria
• Assessment requires a variety of measures
• Assessment requires attention to outcomes and processes
• Assessment is ongoing rather than episodic
• Assessment is a cultural value at Naropa
• Assessment is more likely to be embraced when it is part of a larger practice that promotes change
• Assessment values the use of information in the process of improvement
• Assessment is “owned” by the entire Naropa community, not just an Office of Assessment
• Assessment works best when we recognize that our students’ educational experience is multidimensional and integrated and may not occur merely in the classroom

IV. NAROPA NON-ACADEMIC SUPPORT UNITS

Naropa has a number of non-academic support units that each, in their own way, contribute to the culture, environment, and mission that help accomplish the curricular arc outcomes. They are critical in the everyday operation and functioning of Naropa. They are key factors in establishing the institutional effectiveness of Naropa. The units below conduct yearly assessments.

1. Accounting
2. Admissions
3. The Learning Commons (formerly the Center for Excellence & Engagement)
   o Advising
   o ASP
   o Writing Center
4. Development
5. Facilities
6. Human Resources
7. Information Technology
8. Marketing and Communication
9. Naropa Archives and Records
10. Office of Events
11. Safety and Security
12. Student Affairs
   o Counseling Center
   o Career Services
   o Disability Services
   o Student Leadership and Engagement

V. NAROPA ANNUAL NON-ACADEMIC SUPPORT UNIT ASSESSMENT PLAN

Figure 1 presents a graphic of the cyclic Naropa Annual Non-Academic Support Unit Assessment Plan. The cycle begins in the fall of each academic year and proceeds with steps that should be completed by No Later Than (NLT) dates.

1. REVIEW and SELECT. This stage begins early each fall semester with a review of the previous year's Annual Assessment Report (AAR). It involves reviewing existing support unit outcomes and selecting one or more on which to focus extended assessment activities in the coming year. Changes suggested by analyzing the data should be addressed at this time. Ideally this should be done early in the fall semester but certainly NLT 1 Oct. At their discretion, the Support Unit WEAVE Lead (SUWL) and support unit staff may decide to examine all or a select number of support unit outcomes.

2. CONDUCT. This stage goes on all year and involves the use of assessment tools to gather data that will later be examined in more depth. In the normal course of their work, staff use assessment instruments to gather data about the achievement of support unit outcomes.
The Support Unit WEAVE Lead and individual staff should use both direct and indirect measures to determine how well support unit outcomes are being achieved. This activity continues for the entire academic year and concludes by the spring semester grades due date (e.g., 14 May 2014).

3. ANALYZE DATA & PREPARE ASSESSMENT REPORT. This stage in the annual assessment process also goes on for the entire year. The SUWL compiles the results of their own assessment research, together with the data that all staff members provide to them, into an Assessment Report (previously known as a Departmental Systematic Review, or DSR). The SUWL will meet periodically with the Asst. Dean of Curriculum & Assessment to update progress and discuss issues. The SUWL will input data into the WEAVE software platform. The Annual Assessment Report should be delivered to the Asst. Dean of Curriculum & Assessment no later than 1 July 2014 for senior leadership review.

4. FEEDBACK. The Asst. Dean of Curriculum & Assessment will compile the reports from all the support units into a Support Unit Effectiveness Report to be delivered to the Provost NLT 1 August.

This last step in the annual assessment cycle is important, and it must be emphasized that assessment of our programs is not a one-shot activity that you simply check off your to-do list. Assessment is a cultural value at Naropa and the spirit of continuous improvement is what we seek to enhance. We do not seek perfection, whatever that may be, but we do seek to continuously review and improve our efforts to support the achievement of the Naropa Curricular Arc outcomes. If targets for measures of the achievement of support unit outcomes have not been met, then plans should be made for how to meet those targets. If targets have been met, then the SUWL and other support staff should consider ways to maintain and even improve their services.
Make changes suggested by data, implement those changes, and then collect data the next time around through the cycle to see if they worked.

Appendix A has a number of frequently asked questions about assessment of non-academic support units at Naropa. Please review those questions and if you have others contact Ted Lamb.

Figure 1. NON-ACADEMIC SUPPORT UNIT ASSESSMENT PLAN & SCHEDULE: WHAT TO DO AND WHEN TO DO IT.

PLAN. Review last year's work and select outcomes to focus on this year by 1 Oct 2013.
DO. Conduct your assessment and data collection activities in Fall & Spring semesters. Input data into WEAVE.
REPORT. Write and submit annual assessment report by 14 May 2014.
FEEDBACK. Reviews of AAR provided to Program Leads NLT 1 August.

1. REVIEW & SELECT
• Review previous year AAR
• Review outcomes, rubrics, and measures of learning
• Select Support Unit Outcome(s) to Assess in current academic year
• Implement changes suggested by data analysis
• Completed no later than Oct 1st annually

2. CONDUCT
• Assessment of Support Unit Outcomes in normal course of work activities
• Collect data on new changes to see if they worked
• Use Rubrics as necessary, if you have them
• Input data into WEAVE
• Use Direct & Indirect measures of achievement of outcomes
• October through May at Program Lead and program faculty discretion

3. ANALYZE DATA & PREPARE REPORT
• Annual Assessment Report with results
• Assemble all the sections of the Annual Assessment Report and coordinate drafts with staff review
• Annual Program Assessment Report delivered (Word copy is OK) to Asst. Dean of Curriculum & Assessment no later than 1 July

4. FEEDBACK
• Delivered to Support Unit WEAVE Leads no later than Aug 1st annually

PLAN, DO, REPORT are Program Lead activities. FEEDBACK is an Academic Affairs activity.

VI. SUPPORT UNIT OUTCOMES

GOALS FOCUS ON: WHAT DO YOU DO?
Goals derive from your broad mission statement but spotlight what you do in your department that facilitates the operation and maintenance of Naropa.

FOR EXAMPLE: Our goals are:

1. To facilitate faculty development, evaluation, and hiring.
2. To guide the academic operational work.
3. To assure compliance with academic priorities and the mission throughout the university.
4. To ensure availability of resources and monitor fiscal management.
5. To enable and monitor co-curricular opportunities.
6. To establish and ensure outstanding academic curriculum and programs.
7. To coordinate…
8. To structure…
9. To encourage…
10. To serve…

Mission Statement and Outcomes. The first task in identifying support unit outcomes is to wordsmith a mission statement for your unit. Many support units already have such a statement, but if not, see Appendix B for some assistance. After the mission of the unit has been designed, specific outcomes should be the focus of attention.

Often, and unfortunately incorrectly, unit goals and outcomes are seen as synonymous. This is not the case. Unit goals are broad and long-term objectives; unit outcomes, on the other hand, are measurable expectations. Unit goals are the long-range general statements of what the unit intends to deliver, and they provide the basis for determining more specific outcomes and objectives of the unit. The chief function of unit goals is to provide a conduit between specific outcomes in any particular area and the general statements of the University mission statement. Thus, unit goals and outcomes should be crafted to reflect the goals of the University mission statement.

How to develop unit outcomes: Unlike academic programs, which all have student learning outcomes, administrative departments can have quite varied outcomes, but the effective performance of all of them is critical to the contemplative education mission of Naropa.
Outcomes should describe current services, processes, or instruction. One approach that works well is to ask each of the unit staff members to create a list of the most important things the unit does. Then create a master list of the key services, processes or instruction. From that list, a set of outcomes can be created. Staff members are the best equipped to identify the unit outcomes, as they are the experts in the day-to-day operations.

The number of outcomes is unique to the specific unit and is based upon the mission and purpose of the unit; typically, however, a total of eight to twelve outcomes is assessed over a three to four year period. For each institutional effectiveness assessment cycle, it is recommended that three outcomes are assessed, so that over a three to four year period all outcomes are assessed.

Characteristics of good outcomes: A good outcome should:

1. focus on a current service, process, or instruction;
2. be under the control of or responsibility of the unit;
3. be meaningful and not trivial;
4. be measurable, ascertainable and specific;
5. lend itself to improvements;
6. be singular, not bundled;
7. not lead to “yes/no” answers.

They should:

1. describe current services, processes or instruction;
2. use active verbs in the present tense (unless a learning outcome);
3. reflect measurable standards;
4. measure the effectiveness of the unit (using descriptive words);
5. be essential and meaningful to the unit.

A Support Unit Outcome Should:

● Be clearly and succinctly stated. Make the unit outcome clear and concise; extensive detail is not needed at this stage.
● Be under the control or responsibility of the unit.
● Be measurable. Sometimes an outcome is not measurable in a cut and dried objective fashion and thus might be considered somewhat subjective, such as client satisfaction.
By using a survey and assessing each major component of the survey instrument, the outcome can thus be measured.
● Lend itself to improvements. The process of assessment is to make improvements, not simply to look good. The assessment process is about learning how the unit can be better, so do not restrict your outcomes to activities that will measure something the unit is already doing well.
● Focus on an outcome that is meaningful. Although it can be tempting to measure something because it is easy to measure, the objective is to measure that which can make a difference in how the unit functions and performs.
● Focus on outcomes that measure effectiveness. If the answer to the outcome is a “yes/no” response, the outcome probably has not been written correctly and, when measured, may not yield actionable data. We recommend use of descriptive words regarding the service or function.
● Be phrased with action verbs in the present tense that relate directly to objective measurement.

Examples of Some Problematic Unit Outcomes:

Example 1: The Office of Institutional Effectiveness and Accreditation will ensure that 90 percent of departments submit their annual Institutional Effectiveness plan on time.

Problems:
a) The unit does not have control over this outcome. While we certainly hope this goal can be achieved, and it is important, the outcome itself is not appropriate for the assessment of this unit’s outcomes because there is no direct control.
b) In addition, this outcome is stated in the future tense, implying that it may be a future goal or initiative, rather than a current service or process.

Example 2: The Artist Series will process ticket orders in a timely manner, provide quality refreshments, coordinate comprehensive VIP program, and follow sound accounting principles.

Problems:
a) This outcome is what is often referred to as “bundled”; there are several different components all tied into one outcome.
This would be an extremely challenging outcome to assess; thus, it would be more effective to simplify and focus the outcome. With a bundled outcome, the assessment measure would have to specifically address each and every one of the elements, and that is an incredibly large amount of data to design, collect and report. So, although each of the elements is important, it would be better (and easier) to separate these into multiple outcomes.
b) In addition, this outcome is stated in the future tense, implying that it may be a future goal or initiative, rather than a current service or process.

Major Types of Administrative Outcomes to Measure (type, followed by an example):

• Efficiency: The Foundation processes donation receipts in a timely manner.
• Accuracy: Purchasing accurately processes purchase orders.
• Effectiveness: The Human Resources Office provides effective new employee orientation services.
• Client Satisfaction: The cafeteria provides food and facilities that are satisfactory to its customers.
• Quality: The Artist Series provides high quality cultural events to the community.
• Comprehensiveness: The Campus Bookstore provides comprehensive customer service.
• Compliance with Standards: The College Finance Department consistently complies with standard accounting practices.
• Employee Learning Outcomes*: Employees will understand how to accurately enter a department requisition in Orion.

*Only use employee learning outcomes if the unit is responsible for leading professional development/training workshops for employees.

Checklist for a Unit Outcome

An Outcome should:

• Describe unit’s services, processes or instruction
• Identify a current function
• Be under the control of or responsibility of the unit
• Be measurable/ascertainable and specific
• Lend itself to improvement
• Be singular, not “bundled”
• Be meaningful and not trivial
• Not lead to a “yes/no” answer!
Questions to Consider when Reviewing the Design of Outcomes:

o Is the outcome stated in terms of current services, processes, or instruction?
o Does the unit have significant responsibility for the outcome with little reliance on other programs?
o Will the outcome lead to meaningful improvement?
o Is the outcome distinct, specific and focused?

Any answer other than “yes” to the above questions is an indication that the outcome should be re-examined and redesigned.

VII. DIRECT AND INDIRECT MEASURES OF SUPPORT OUTCOMES.

Non-Academic Support Units can use both direct and indirect measures of accomplishing support unit outcomes. It is wise to use at least one of each type of measure for each support unit outcome to provide useful and adequate data to determine whether targets for the measures are being met.

Direct measures assess support unit achievement of outcomes without the use of opinions, thoughts, or assumptions. A direct measure will usually be very concise and easy to interpret. For example, a Finance department may have a goal of receiving an unqualified audit every year; a direct measure of this goal would be the State’s audit report of university financial activities. If the HR department has a goal of submitting information to an external agency on time and accurately every semester, then a direct measure would be the records kept by the HR department that indicate whether or not the reporting files were sent on or before the due date and whether or not any re-submissions were necessary due to inaccurate data. Facilities may have goals of snow removal or lawn maintenance. A direct measure would be similar records of these activities.
Other useful direct measures might include data on staff time, costs, equipment utilization, response timeliness to customer requests, timely delivery of accurate reports or data to external agencies (e.g., Higher Learning Commission), enrollment rates, retention rates, use of services, program participation, number and types of programs offered, number of people trained, meetings conducted, etc.

Indirect measures assess opinions or thoughts about whether or not your unit meets its goals of being effective and efficient, and whether or not your unit completes all tasks that are expected. Indirect measures are most commonly captured by the use of surveys. Surveys of stakeholders such as other staff, faculty, students, parents, or visitors can provide valuable insights for establishing useful measures as well as targets for those measures. Other useful data collection methods include interviews and focus groups.

What should an assessment measure do?

An assessment measure should provide meaningful, actionable data that lead to improvements. The purpose of assessment is to look candidly and even critically at one’s unit to measure and collect data that will lead to improvements. The purpose of assessment measurement is to gather data to determine achievement of the unit outcomes selected during the specific assessment cycle.

An Assessment Measure should answer the questions:

• What data will be collected?
• When will the data be collected?
• What assessment tool will be used?
• How will the data be analyzed?
• Who will be involved?

It is vitally important that the assessment be directly related to the outcome. For example, if an outcome is designed to measure community satisfaction with community continuing education, but the assessment measure counts the number of continuing education courses, there is a misalignment between the outcome and the measure. In this case, the measure should be an evaluation survey.
An Assessment Measure includes:

• A clear and specific description of what data will be collected.
• A definitive and specific timeframe for when and by whom the data will be collected. Will it be measured and collected during one specific month? A full year? By whom?
• A clear and specific description of the assessment tool which will be used. Will it be a systems log? Or will it be a survey? Other?
• A clear and specific description of how the data will be analyzed.

Once the measures for assessing unit outcomes have been determined, an assessment measure should be developed which states what outcomes have been chosen to be assessed, how they will be assessed, and how the assessments will be administered and the data collected. Multiple measures should be used that include at least one direct and one indirect measure.

Checklist for an Assessment Measure

An Assessment Measure should:

• Be directly related to the outcome
• Consider all aspects of the outcome
• Be designed to measure/ascertain effectiveness
• Be complemented by a second assessment measure, if possible (multiple assessment measures should be identified, if possible)
• Provide adequate data for analysis
• Provide actionable results
• Outline in detail a systematic way to assess the outcome (who, what, when, and how)
• Be manageable and practical

Questions to Consider when Reviewing the Design of Assessment Measures:

• Are assessment measures for each outcome clearly appropriate and do they measure all aspects of the outcome?
• Have multiple measures (at least one direct and one indirect) been identified?
• Are the assessment measures clear and detailed descriptions of the assessment activity (who, when, what and how)?
• Do the assessment measures clearly indicate a specific time frame for conducting assessment and collecting data?
• Does the measure reflect different campuses and locations (i.e., Arapahoe, Nalanda, Paramita), if appropriate?
Any answer other than “yes” to the above questions is an indication that the assessment measure should be re-examined and redesigned.

Establishment of an achievement target for your measure

An achievement target is the benchmark for determining the level of success for the unit outcome. Thus, it provides the standard for determining success. Additionally, an achievement target assists the unit staff and reviewers to place the data derived into perspective. Finally, setting achievement targets allows the unit to discuss and determine exactly what the expectations should be and thus determine what constitutes effectiveness.

How achievement targets should be expressed.

Achievement targets should be specific. The achievement target should be clearly stated with actual numbers. Achievement targets should avoid words such as “most,” “all,” or “the majority.” Specific and actual numbers should be utilized.

Achievement targets should not utilize target goals of 100 percent. If a target of 100 percent is set, the standard set is either unrealistically high or there is an implication that staff has selected a target they already know can be universally achieved. If a support unit is expected to consistently attain 100 percent due to legal or financial regulations or guidelines, it is recommended that the unit state that in the target.

Example of a Unit Outcome, Appropriate Assessment Measure and Achievement Target:

Outcome: The “Child Care Center” communicates effectively with parents regarding center policies, procedures, and activities.

Assessment Measure: In March, a survey will be emailed to parents of all enrolled children, evaluating the effectiveness of communicating with parents via the monthly newsletter. The survey will include questions about delivery method, content, calendar, and length. The survey scale ranges from “Strongly Disagree” to “Strongly Agree.” The percent of “Agree” or “Strongly Agree” responses for each question will be tallied.
In addition, suggestions for improvements will be compiled.

Achievement Target: At least 90% of responses to each survey question will be “Agree” or “Strongly Agree.”

Checklist for an Achievement Target

An Achievement Target should:
• Be specific
• Avoid vague words such as “most” or “majority”
• Generally not be stated in terms of “all” or “100%”
• Directly relate to the outcome and assessment measure

VIII. ANALYZING YOUR ASSESSMENT DATA.

Analyzing your assessment data can seem like a daunting task, but it is really just trying to make sense of the variety of inputs you have on what you are doing in your program. You should have some simple, basic goals in mind as you begin to probe the variety of data sources you have. One of the best approaches I have seen is to ask two simple questions:
A. What is working?
B. What is not working and needs fixing?

WHAT TO DO. These two questions can be applied to virtually every aspect of your program assessment, whether you are examining program or course level issues. As you review your various sources of data and findings, try to summarize your results by making tables, figures, or charts that help you make sense of it all. It helps to drill down and attempt to answer specific questions on topics such as these while your data are spread out before you.

SUPPORT UNIT OUTCOMES. Are your outcomes reflective of your current program or have things changed?

DIRECT MEASURES OF UNIT OUTCOME ACHIEVEMENT. What do your direct measures of outcome achievement tell you about how well you are supporting the university community?

INDIRECT MEASURES OF UNIT OUTCOME ACHIEVEMENT. What do your indirect measures of outcome achievement tell you about how well you are supporting the university community? Are your direct and indirect measures working well? Do they link to your student learning outcomes? Do they need to be modified or improved?

ORGANIZATIONAL STRUCTURE.
Is the way you have organized your unit working well or do you need to restructure to better accomplish your mission?

WORK ORGANIZATION. Are the tasks assigned to the right offices/people? Are there new practices/techniques/equipment that you need to better accomplish your work?

THE SKEPTICAL ASSESSOR. Remember that the data and various findings are evidence. Be a critical, suspicious, and skeptical investigator who is always leery of the evidence but knows they need it. The skeptical assessor wants to reveal good, reliable, and valid evidence that their support unit is accomplishing its mission, or where it might not be working and needs refining, updating, or modifying.

IMPLEMENTATION! The reason for collecting and analyzing assessment data is to pinpoint areas for improvement in your program. The next step, of course, is to actually make the program or course improvements or implement new practices. Track those changes and plan to collect new assessment data during the next cycle to determine whether the changes have the intended consequences. As you implement the changes to your program you will collect new data on how well the program is achieving program and course outcomes. This is sometimes referred to as “closing the loop,” and it is in analyzing the data and preparing the Annual Assessment Report that you close the loop.

ANNUAL ASSESSMENT REPORT (AAR). After you have implemented your assessment changes you will have new data that will allow you to determine whether you are achieving your objectives. The Annual Assessment Report documents what you have done over the academic year. This is where you summarize what you did, what the results were, and what new actions are suggested for the next academic year. Keep in mind that we seek a process of continuous improvement. Unit assignments and personnel change, and content knowledge about how to best accomplish tasks improves. Support units are not static and actually should change over time.
Assessment allows us to make smart changes in the support we offer to our “customers”: the faculty, other staff, and students. The AAR is due by the end of the spring semester but certainly no later than 1 June.

Proposed sections of the AAR are:
• Introduction: Plans, meetings, and discussions held.
• Support Unit Outcomes Reviewed This Year: Normally 2-3 program level outcomes are selected for focused analysis per year.
• Direct and Indirect Measures Used: Both should be included in your assessment work and report.
• Data Analysis: Tables, figures, graphs, and charts are very useful in explaining results and should be coupled with written explanations. WEAVE reports can be very useful here and should be included in this section.
• Discussion of Results: Input from the Assessment POC as well as other faculty members.
• Assessment Plans for Next Academic Year: What does your work this year suggest should be your focus next year?

IX. WEAVEonline

WEAVEonline is a widely used software application that addresses the need to maintain continuous improvement processes in our academic and administrative structures. It guides and provides for the alignment of multiple processes, such as assessment, planning, accreditation, budgeting, and institutional priorities. WEAVEonline uses a classic model of assessment that follows a step-by-step process for completing an assessment cycle. This process begins with creating a mission/purpose and establishing goals for achieving that mission. The process takes the user through objective/outcome development, establishing appropriate measures, setting achievement targets for each measure, reviewing applicable data, writing findings of the assessment results, and establishing needs based on current data through planning before repeating steps in the next assessment cycle.

One of the strengths of WEAVEonline is the way the application helps users to utilize their results to “close the loop” by developing and implementing action plans.
Action plans can be developed based on needs as determined by the data the user collects. Once created, action plans are tracked within WEAVEonline and categorized according to level of completion. This method creates a living history of the institution’s assessment work and allows the institution to have a clear longitudinal view of how it has “closed the loop.” Action Plans can also be developed that are not findings-based but are, instead, intended for strategic planning and quality enhancement purposes (image and text extracted from http://www.weaveonline.com/).

We will begin implementation and training for faculty and staff on WEAVEonline in the 2013-2014 academic year. The person in your support unit who coordinates assessment work will also be the person who receives WEAVE training and inputs assessment data, links outcomes, uses measures, establishes targets, and generates reports in WEAVE for the Annual Assessment Report.

Appendix D contains information on logging in and logging out of WEAVEonline. Additionally, Appendix C contains a useful worksheet to guide the efforts of the Support Unit WEAVE Lead in their activities with the WEAVEonline software platform. After logging in, each page has a unique “Help with this page” feature as well as a tutorial to assist in using the software. Users can contact the WEAVE administrator at any time for assistance.

References

Clark, M.L. and Crosby, L.S. (2012). Institutional Effectiveness Resource Manual for Non-Academic and Academic and Student Support Services Units. Florida State College, Jacksonville, FL. This is an excellent manual from which major sections have been extracted and modified for Naropa application. Available online: http://www.fscj.edu/district/institutional-effectiveness/assets/documents/manualnonacad-prgms.pdf and the WEAVEonline Collegewide Document Repository.

Diamond, R.M. (2008). Designing and assessing courses and curricula: A practical guide. (3rd Edition).
San Francisco, CA: Jossey-Bass Publishers.

Guskey, T.R. (2000). Evaluating professional development. Thousand Oaks, CA: Corwin Press.

Hutchings, P. (2010). Opening Doors to Faculty Involvement in Assessment. Online. National Institute for Learning Outcomes Assessment. http://www.learningoutcomeassessment.org/documents/PatHutchings.pdf

Linn, R.L. and Gronlund, N.E. (1995). Measurement and assessment in teaching. (7th Ed.). Englewood Cliffs, NJ: Prentice Hall.

Maki, P.L. (2004). Assessing for learning: Building a sustainable commitment across the institution. Sterling, VA: AAHE.

Middaugh, M.F. (2010). Planning and assessment in higher education: Demonstrating institutional effectiveness. San Francisco, CA: Jossey-Bass Publishers.

Stevens, D.D. and Levi, A.J. (2005). Introduction to rubrics. Sterling, VA: Stylus Publishing, LLC.

Suskie, L. (2004). Assessing student learning: A common sense guide. San Francisco, CA: Anker Publishing Company, Inc.

APPENDIX A
FREQUENTLY ASKED QUESTIONS ABOUT NAROPA ASSESSMENT OF NON-ACADEMIC SUPPORT UNITS

FAQs on NAROPA ASSESSMENT OF NON-ACADEMIC SUPPORT UNITS

1. What is assessment and why is it necessary?

Assessment is a process by which you measure your department against a pre-defined set of goals, objectives, or outcomes. For non-academic departments, assessment is determining whether or not your department does what is expected of it and completes all of these tasks in an efficient and effective manner. Basically, assessment is a systematic method of finding ways for your department to become more streamlined. It is important to determine whether your department completes everything that is expected in an efficient and effective manner, or whether there are procedures or processes that no longer work for your department and need to change.
Assessment is a collaborative effort that includes all staff members in your department, as well as any direct or indirect constituents that influence your department or are impacted by your department. Assessment planning enables faculty and staff to answer important questions posed by students, parents, employers, accrediting bodies, and legislators about university, school, and program policies, procedures, and other areas crucial to university operations. Assessment allows everyone involved to become a part of the whole system and to have an influence on the policies and procedures adopted by every office on campus.

2. What are some misconceptions about assessment?

Assessment is not about making your department conform to one particular business model that seems to work at some other school. Assessment is about evaluating the process, not the person. Assessment is NOT a performance evaluation of any single person; it is a performance assessment of a department. For example, if a department has more tasks expected of it than it can complete, the department assessment report should state which tasks could not be completed. Performing an assessment can then allow for possible changes based on the results of the assessment reporting.

3. Who does assessment? I thought that this was something that only faculty had to worry about?

Everybody does assessment in some form or fashion. Assessment is not just for faculty. In higher education, everybody from the administration to the students performs assessment. Our goal is to ensure that assessment is a collaborative effort between faculty, staff, and administration. Assessment works best when as many people are involved as possible. Every department at Naropa has room for improvement, and assessment can identify places that need improvement as well as possible ways to accomplish this improvement.
Quite often you will hear the words “Student Learning” when assessment is being discussed; rest assured that you do affect student learning. It does not matter what department you work in; you DO affect student learning. However, there is a big difference between how faculty conduct assessment and how non-academic departments conduct assessment.

4. How do I begin an assessment plan for our department?

The first thing is to determine your department’s responsibilities. This can most easily be accomplished by looking at job descriptions or duties of employees, as well as departmental responsibilities. If your department has a vision or mission statement, that should be the cornerstone of your assessment. Once you have determined the overall responsibilities of your department, you will need to decide on a set of clear and concise goals or objectives. These objectives should be directly related to your department’s responsibilities and mission/vision statement, if it exists. After you decide on your goals or objectives, you need to determine if these goals or objectives are being met. This involves measurement, but such measurements need not always be quantitative. It is impossible to say in advance what you will learn from these measurements. If the department easily meets all of the goals, perhaps the goals are too low. Remember that it is OK if your department does not meet all of your goals every year. Assessment data are meant to be followed over time. If some goals are not met in one assessment period, this does not necessarily mean that anything needs to change; you should examine trends over time. After a period of time, if goals are consistently not met, then action may need to be taken. Regardless, goals matter, and meeting those goals implies more than simply going through the steps. Too often, employees are told that “measuring” and reporting “measurements” are what really matter.
Being obsessed with the process of assessment is never a substitute for improving your department.

5. What about “Student Learning?” Do I have to relate everything I am assessing to how it impacts student learning?

You do not have to relate everything you are assessing to specific student learning outcomes, but you should know that what you do creates the social, technical, and cultural setting for the achievement of the Naropa Curricular Arc Outcomes. Your support unit (or department) has specific goals, objectives, or outcomes, and those do in fact help the faculty accomplish their outcomes; without your efforts they would be at a loss to do their work. Some departments (such as the Allen Ginsberg Library) may directly impact student learning outcomes, but most of the non-academic programs do so indirectly.

6. What role does my administrator play in assessment?

Your department (or program) will have a person designated as the Lead for inputting data in our WEAVE assessment software platform. This Support Unit WEAVE Lead (SUWL) will input your mission, goals, objectives, outcomes, measures, targets for measures, etc. into WEAVE to track and generate sections of the annual assessment report.

7. How do I encourage my people to participate in assessment?

Knowledge of the purpose and goals of assessment usually encourages staff to participate. Participation is the best way to make improvements and to ensure that all staff in your department have a say. The best way to encourage your staff is by responding to the results and showing everyone involved that their participation is making a difference. Department heads or directors can also encourage their staff by setting aside a separate time in each department meeting where assessment issues are discussed.

8. What do we measure and what kinds of information do we collect?
What you measure is a simple question to answer: just evaluate whatever your general job duties require, as well as anything that comes from your mission statement. What kinds of information to collect is more difficult; this varies tremendously by department. You can use things such as audit reports, student surveys, faculty/staff surveys, meeting minutes, etc.

9. What makes for good assessment measures, and how many do you need?

The kinds of things that make for good support unit assessment measures include: third party observations, self-administered questionnaires, interviews with students, faculty, and/or staff, and external assessment instruments (audit reports, Federal or State reports, etc.). How many measures you need is greatly dependent upon the type of goal or objective you have and the available measures for that goal or objective. In general, you only need one measure if it is a direct measure; if all that are available are indirect measures, then you may need more than one.

10. What is the difference between direct and indirect measurements?

Direct measures assess departmental performance without the use of opinions, thoughts, or assumptions. A direct measure will usually be very concise and easy to interpret. For example, a Finance department may have a goal of receiving an unqualified audit every year; a direct measure of this goal would be the State’s audit report of university financial activities. If the HR department has a goal of submitting information to an external agency on time and accurately every semester, then a direct measure would be the records kept by the HR department that indicate whether or not the reporting files were sent on or before the due date and whether or not any re-submissions were necessary due to inaccurate data. Indirect measures assess opinions or thoughts about whether or not your department meets its goals of being effective and efficient, and whether or not your department completes all tasks that are expected.
Indirect measures are most commonly captured by the use of surveys.

11. Can’t we just resubmit the same report as we did last year since we didn’t do anything different?

No. Each year the departments benefit from assessment in some way, whether it is from changes made as a result of their own assessments or from those made as a result of a past year’s assessments. Some changes can be made immediately. Other changes will take more time. We seek to “close the loop” by collecting new data when changes are made to determine if those changes had the intended effect. Nobody denies that good assessment can reveal consistent trends. Always remember, your assessment report is not your goal. You should be ensuring that your department is doing all that is expected in the most efficient way possible with the tools and resources available. It is very likely that your assessment goals or objectives will remain constant from year to year, but the report should not.

12. Should we consistently meet all of our goals each year?

If every goal is met consistently from year to year, it may mean that the goals are set too low. Purposefully setting goals that are easily met defeats the purpose of having an assessment plan. On the other hand, you usually do not want to set goals that are impossible to reach. It is expected that sometimes you will meet some or all of your goals and sometimes you will not meet all of them. It is advisable to focus your assessment efforts on a subset of your goals or objectives each year.

13. What if we don’t have time for assessment?

Everybody has time for assessment. Assessment is essential for a department to stay focused on their mission and to stay current with Federal, State, and University policy.
It is necessary to make assessment a top priority in order for a department to know if they are consistently performing at or above what is expected and needed.

Excerpt from (and modified): http://www.atu.edu/assessment/faq_nonacademic.php

APPENDIX B
HOW TO WRITE A MISSION STATEMENT

How to write a Naropa Non-Academic Support Unit Mission Statement

MISSION STATEMENT OF A SUPPORT UNIT. The support unit mission statement is a concise statement of the general values and principles which guide the activities of the unit. It sets the tone and a philosophical position from which follow a program’s goals and objectives. The mission statement should define the broad purposes the support unit is aiming to achieve, describe the community the unit is designed to serve, and state the values and guiding principles which define its standards.

Support Unit Mission Statements must also be consistent with the principles set forth in the Naropa University mission statement. Accrediting bodies expect that Non-Academic Support Unit Mission Statements are in harmony with the mission statements of the university or school. Therefore, a good starting point for any mission statement is to consider how the program mission supports or complements the university or school missions and strategic goals.

A Support Unit Mission Statement
• Is a broad statement of what the unit is, what it does, and for whom it does it
• Is a clear description of the purpose of the unit and the performance environment
• Reflects how the support unit contributes to the education of Naropa students or how the unit supports its other university “customers”
• May reflect how the teaching and research efforts are enhanced by their efforts
• Is aligned with university and school missions
• Should be distinctive for the support unit

Components of a Program Mission Statement
• Primary functions or activities of the support unit. What are the most important functions, operations, outcomes, and/or offerings of the unit?
• Purpose of the support unit. What are the primary reasons why you perform your major activities or operations?
• Stakeholders. Who are the groups or individuals that do the work of the support unit and those that will benefit from the work of the unit?

Attributes of a well-written Support Unit Mission Statement
• The statement leads with the educational purpose of your support unit
• The statement identifies the signature feature of the support unit
• The statement defines clarity of purpose and sticks in your mind after one reading
• The statement explicitly promotes the alignment of the support unit with university and school missions

A TYPICAL STRUCTURE OF A SUPPORT UNIT MISSION STATEMENT

“The mission of (name of your support unit) is to (your primary purpose) for the (your stakeholders) by providing (your primary functions or activities).”

EXAMPLE: The mission of the Facilities Department is to provide an attractive, comfortable, clean, functional, and sustainable contemplative learning and work environment for the students, faculty, staff, and visitors to Naropa by constructing, maintaining, equipping, and enhancing our buildings, grounds, and the environment with a strong commitment to customer service and satisfaction. As an integral part of the campus community, we carry out our duties with pride and professionalism.

Another type of mission statement format that can be used:

The ________ (name of program) will ________ for ________ by ________.

This tells what the program is, what it intends to do, for whom it intends to do it, and by what means.

Checklist for a Support Unit Mission Statement
• Is the statement clear and concise?
• Is it distinctive and memorable?
• Does it clearly state the purpose of the support unit?
• Does it indicate the primary function or activities of the support unit?
• Does it indicate who the stakeholders are?
• Does it support the mission of the university and school?
• Does it reflect the support unit’s priorities and values?

Extracted from and modified from: http://oeas.ucf.edu/doc/adm_assess_handbook.pdf

Appendix C
Naropa WEAVEonline Assessment Worksheet

(Example template only)

Naropa WEAVEonline Assessment Worksheet

Program: Facilities
Contact person:
Email address:
Assessment cycle/year: AY13-14

Entity Mission/Purpose
The mission of the Facilities Department is to provide an attractive, comfortable, clean, functional, and sustainable contemplative learning and work environment for the students, faculty, staff, and visitors to Naropa by constructing, maintaining, equipping, and enhancing our buildings, grounds, and the environment with a strong commitment to customer service and satisfaction. As an integral part of the campus community, we carry out our duties with pride and professionalism.

Goals:
Goal 1.
Goal 2.
Goal 3.

Assessment Summary
Columns: Performance Outcomes/Objectives | Measure(s) | Achievement Targets | Findings | Action Plans
Rows:
1.
2.
3.
4.
5.

Analysis Questions
Example: Based on your findings and action plan, what primary changes will you make to enhance student success? To performance outcomes?
1.
2.

Annual Report Items
Example: Assessment Report due SP14 to AA
1.
2.

APPENDIX D
WEAVEonline Logging In and Logging Out

WEAVEonline Logging In and Logging Out. WEAVEonline seems to work best with the Mozilla Firefox browser, so you might want to download it (free) and then bookmark the link below for easy access. Go to: https://app.weaveonline.com/naropa/login.aspx and then this screen should appear.

LOGIN
Type in your WEAVEonline ID (e.g., tlamb or cfrederick) and then your password. Click on Login and the next screen should appear. The first time you log in you will have a designated password that the WEAVE administrator assigned to you. You will be required to change it to your own unique password. Select a password that has upper and lower case characters as well as at least one special character.
You should be the only one who uses this access. If others in your program or support unit need access, then have them contact the WEAVE administrator (Dr. Ted Lamb).

This is the first screen that you should see. It has announcements and a number of tabs across the blue horizontal bar. Leave the “cycle” in 2013-2014 and select your program (WEAVE calls them “entities”). The screen should change. You can then select, under Assessment or the other tabs, the area in which you want to work. One of the special features of WEAVE is the HELP (?) tab on the upper right hand side of the screen. Help is available for every page that you might be on. There is also a Quick Start Guide and a Tutorial that you might find useful.

Please Note.
1. On the lower right hand side of the screen there are links to www.naropa.edu and to www.my.naropa.edu where you can access information without leaving WEAVEonline.
2. For those programs who have done Departmental Systematic Reviews (DSRs) in the past, there are scans of those files for your use in the Assessment>Document Management section.

LOGOUT
To log out, simply click on the Logout tab on the blue horizontal bar.

APPENDIX E
WEAVEonline Assistance

WEAVEonline Help Button. There is a HELP (?) button on the upper right hand side of every WEAVE page. If you click on it you will see an option for “Help for this page.” Every page you have access to should have help for that page. There is also a tutorial and a Quick Start Guide that may answer your questions.

Naropa WEAVE Administrator: Dr. Ted Lamb, Asst. Dean of Curriculum & Assessment, 2111 Arapahoe Ave., Office 2120, phone: 303-245-4617, tlamb@naropa.edu

For Title III undergraduate programs you can also see Carol Frederick for assistance: cfrederick@naropa.edu, phone: 303-245-4658

WEAVEonline Customer Support: WEAVEonline SUPPORT, phone: 877-219-7353, support@weaveengaged.com (try this first; they respond quickly)