Functions of collaborative question-posing environments

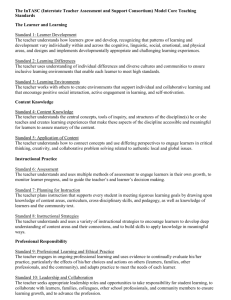

Conducting Programming Education Using Collaborative Question-posing Environments

Junko Shinkai
Toyama National College of Technology, Japan
shinkai@nc-toyama.ac.jp

Yoshikazu Hayase
Toyama National College of Technology, Japan
hayase@nc-toyama.ac.jp

Isao Miyaji
Faculty of Informatics, Okayama University of Science, Japan
miyaji@mis.ous.ac.jp

Abstract: A learning activity in which learners themselves create questions collaboratively is regarded as more effective for improving understanding of learning contents than merely solving questions. In this study, the authors attempted programming education using a question-posing activity in which learners create questions on Moodle, a Learning Management System (LMS), using collaborative question-posing environments. Learners pose the questions they have created to each other. This paper presents an outline of the collaborative question-posing environments, the contents of the lesson practice, and the transition of learners' capability and consciousness as revealed by a questionnaire survey.

Introduction

In programming education, three educational contents are taught concurrently: comprehension of the grammar and notation of the programming language, algorithm construction, and program construction. However, an important difficulty is that the teaching emphasizes practical exercises, which must be supported with limited human resources and in which individual differences among learners appear in progress and enthusiasm for learning. The authors have been using blended learning, which combines, within the same course unit, teacher-centered simultaneous lessons in which each learner uses a notebook and a notebook computer, individual learning by e-learning, collaborative learning in which learners assess each other, and a support system for preparing one's own algorithms (Shinkai & Miyaji, 2011).

The results revealed that a formal test delivered by e-learning within blended learning is effective for improving knowledge of the learning contents. They further showed that, with a formal test alone, students with insufficient understanding might give a correct answer by guessing. A method that reliably improves the level of comprehension therefore needs to be investigated. The authors considered that, instead of only solving given questions, question-creating activities, which are said to be effective for improving the level of understanding of learning contents, should be blended in. Earlier studies have reported an intelligent support environment for question-posing based on mathematical expressions (Nakano et al., 2000) and a web-based collaborative learning support system (Takagi et al., 2007). In an earlier study, the authors developed collaborative question-posing environments based on Moodle, an open-source LMS. The authors attempted programming education using these environments and conducted a questionnaire investigation. This paper deals with the transition of learners' capability and consciousness and with the utilization assessment of the collaborative question-posing environments.

Collaborative question-posing environments based on Moodle

Using the quiz module, which is a standard activity module of Moodle, it is possible to create test questions of various types using a unique tag. The created questions are stored in a question bank in the database, from which they can be added to a mini-test. Learners merely take formal tests by e-learning using the quiz module. With the quiz module, however, learners without teacher authorization are not allowed to register questions. To provide a collaborative question-posing environment in which learners can create questions and perform mutual assessment, the authors produced a collaborative question-posing module.
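To make the notion of a question "tag" concrete, the following minimal sketch builds one fill-in-the-blank multiple-choice item. It assumes that the tag refers to Moodle's embedded-answers (Cloze) syntax, which the paper does not state explicitly. The function names `escape_cloze` and `cloze_multichoice` and the sample C question are hypothetical and are not part of the authors' module.

```python
def escape_cloze(text: str) -> str:
    """Backslash-escape characters that act as delimiters inside a Cloze tag.

    Assumption: only the backslash, '}', '#', and '~' are handled here.
    """
    for ch in ("\\", "}", "#", "~"):
        text = text.replace(ch, "\\" + ch)
    return text


def cloze_multichoice(correct: str, wrong: dict[str, str], weight: int = 1) -> str:
    """Build one embedded multiple-choice blank.

    `wrong` maps each wrong option to the advisory statement (feedback)
    shown when that option is chosen.
    """
    parts = ["=" + escape_cloze(correct)]  # '=' marks the correct option
    for option, feedback in wrong.items():
        parts.append(f"{escape_cloze(option)}#{escape_cloze(feedback)}")
    return "{" + f"{weight}:MULTICHOICE:" + "~".join(parts) + "}"


# Hypothetical question: one blank in a C for-loop, with four options.
blank = cloze_multichoice(
    "i++",
    {
        "i--": "The counter has to increase toward n.",
        "n++": "Changing n moves the loop bound, not the counter.",
        "i = 0": "This resets the counter on every pass.",
    },
)
question_text = "for (i = 0; i < n; " + blank + ") { sum += a[i]; }"
print(question_text)
```

Writing such a tag by hand is error-prone for learners, which is one plausible reason to hide it behind a form-based question-posing screen.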
The collaborative question-posing environment runs on Moodle 9 on a Linux server with PHP5, MySQL5, and Apache 2. Figure 1 shows a Unified Modeling Language (UML) use case diagram of the collaborative question-posing environments. Learners can perform question-posing and mutual assessment activities using the collaborative question-posing module. The teacher can register the created questions to the question bank so that the quiz module can use them.

Fig. 1 A use case diagram of collaborative question-posing environments

Functions of collaborative question-posing environments

To enable learners to perform question-posing and assessment activities easily on Moodle, the authors set the following requirements for the collaborative question-posing environments:
(1) Questions should be of the fill-in-the-blank, multiple-choice type.
(2) Learners can create questions without being conscious of the tag used for Moodle question-posing.
(3) Learners can write an advisory statement for each wrong answer.
(4) Learners can create similar questions.
(5) Learners can assess questions created by others.

To satisfy these requirements, the collaborative question-posing environment has three functions: a question-posing function for the learner, a mutual assessment function for the learner, and a question registration function for the teacher. Each function is described below.

(1) Question-posing function by the learner
The learner creates a fill-in-the-blank, multiple-choice question using a text editor without being conscious of the tag necessary for Moodle question-posing (Shinkai et al., 2010). Next, on the question-posing screen, the learner enters the title of the question, the objective of the question, the options (four options), the advisory statements for wrong answers, the intention behind the choice of blank, and the intention of the question. The learner can also preview the question on the question confirmation screen and actually answer it for confirmation, and can modify the question afterwards. The created questions are written automatically in Moodle XML format and registered to the question-posing bank.

(2) Mutual assessment function by learners
For the assessment items prepared by the teacher, the learner enters a five-grade assessment and comments. Assessable questions are displayed on the assessment question list screen. The learner who created a question browses the assessment contents on the question assessment confirmation screen and, referring to them, can modify the question.

(3) Question registration function by the teacher
The teacher can register questions from the question-posing bank to the standard Moodle question bank. Questions created by learners can then be used by the quiz module.

Practice of lesson

The authors attempted a lesson practice using the collaborative question-posing environments. The subjects were 39 second-year students of the Electronic information course at A-technical college. After each of the course units of the programming lecture (substitution, operator, input–output, selection, and recursion) was completed, question-posing and assessment activities were performed. Each student was requested to create one question, such as a grammatical question related to the C programming language or a fill-in-the-blank question on a program. In the assessment activities, each student assessed questions created by students whose student numbers end in the same digit.

Results of questionnaire assessment analysis

After the lesson practice, a questionnaire survey consisting of 45 items relating to the question-posing and assessment activities using the collaborative question-posing environments was conducted (Table 1). The questionnaire includes items related to the question-posing activity, wrong answers, assessment of questions, the system, and others. Each item was rated on a five-point scale (5. I think so, 4. I slightly think so, 3. No opinion, 2. I rather do not think so, 1. I do not think so). A t-test against the neutral rating "3. No opinion" was conducted to ascertain whether the average rating of each item leans toward the affirmative side or the negative side. Table 1 presents the items in descending order of t-value. The symbols **, *, and + in the significance probability column signify, respectively, a significant difference at the 1% level, a significant difference at the 5% level, and a significant trend at the 10% level.

According to the questionnaire results, 33 of the 45 items were on the affirmative side at the 1% significance level. Although this is based on the subjective view of the learners, the following matters were identified from the items significant at the 1% level:
(1) Respondents regarded question-posing and assessment activities as helpful for review of the learning contents and for fixing knowledge related to the learning contents.
(2) Respondents regarded question-posing and assessment activities as improving knowledge of the program and grammatical knowledge, thereby improving program creation capability.
(3) Respondents regarded the creation of advisory statements for wrong answers as improving the level of understanding of learning contents.
(4) Respondents regarded question-posing and assessment activities as difficult.
(5) The learners create questions with the intention that they be helpful for other learners.

However, items 43, 44, and 45, for which no significance was found, indicate that the functions of the collaborative question-posing environments should be made more user-friendly. Item 3 further indicates that the question-posing activity has not reached a level at which it improves enthusiasm for learning.
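The test against the neutral rating can be sketched from the summary statistics alone. The code below is a minimal illustration, not the authors' procedure: it assumes that all 39 students answered every item, that the test is two-sided, and that the critical values are the standard t-table values for 38 degrees of freedom. The function names are hypothetical, and because the averages and standard deviations in Table 1 are rounded, the resulting t-values are approximate.

```python
import math

# Two-sided critical values of Student's t for df = 38 (standard t tables).
CRITICAL_VALUES = ((2.712, "**"), (2.024, "*"), (1.686, "+"))


def one_sample_t(mean: float, sd: float, n: int, neutral: float = 3.0) -> float:
    """t statistic for H0: the population mean equals the neutral rating."""
    return (mean - neutral) / (sd / math.sqrt(n))


def significance_mark(t: float) -> str:
    """Return '**', '*', '+', or '' using the symbols of Table 1."""
    for critical, mark in CRITICAL_VALUES:
        if abs(t) >= critical:
            return mark
    return ""


# (item number, average, standard deviation) as listed in Table 1
examples = [(6, 4.33, 0.53), (3, 3.28, 0.94), (34, 3.00, 1.10)]
for item, mean, sd in examples:
    t = one_sample_t(mean, sd, n=39)
    print(f"item {item}: t = {t:.2f} {significance_mark(t)}")
```

For item 6 this gives a t-value of about 15.7, and for item 3 about 1.86, which falls between the 10% and 5% thresholds and so matches the "+" reported for that item.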
Students described good and bad aspects of the question-posing and assessment activities. Notable comments are as follows:
• It results in review.
• I came to know things I was not aware of.
• It is time-consuming.
• I am not sure what question should be created.
• I hesitate to assess because my name is identified.

Respondents were requested to set a priority order (1–6) for the items on which they place importance when posing questions, and the number of responses for each priority of each item was cross-tabulated (Table 2). Treating this table as a 6 × 6 contingency table, a χ² test was performed. The results show that the bias of frequencies was significant (χ²(25) = 111.85, p < .01). Residual analysis was then conducted, and a mark (** or *) was assigned to cells with a positive residual recognized to have a significant difference. This identified that, when posing questions, the learners assign importance to the learning contents and to matters helpful for other learners, and attach less importance to advisory statements for wrong answers and to question originality.

Table 1 Questionnaire survey of question-posing and assessment activities

| No. | Assessment item | Average | Standard deviation | Significance probability |
|---|---|---|---|---|
| 6 | Question-posing activity is useful for review of learning contents. | 4.33 | 0.53 | ** |
| 13 | Question-posing for what one does not understand is not possible. | 4.44 | 0.79 | ** |
| 1 | Question-posing activity is difficult. | 4.31 | 0.73 | ** |
| 9 | Question-posing activity improves knowledge of the program. | 3.97 | 0.58 | ** |
| 4 | Question-posing activity fixes the knowledge of learning contents. | 4.05 | 0.69 | ** |
| 20 | Creating advisory statements for a wrong answer is difficult. | 4.23 | 0.81 | ** |
| 8 | Question-posing activity improves grammatical knowledge of the programming language. | 4.03 | 0.71 | ** |
| 2 | Question-posing activity improves the level of understanding of learning contents. | 4.23 | 0.87 | ** |
| 40 | Assessment contents can be accepted frankly by others. | 4.08 | 0.81 | ** |
| 7 | Question-posing activity improves the program creation capability. | 4.03 | 0.78 | ** |
| 41 | Assessment contents produced by others are helpful for modification of the question. | 4.10 | 0.85 | ** |
| 29 | Blank spaces of questions created by others are appropriate. | 3.95 | 0.76 | ** |
| 25 | Solving the question created by others improves the level of understanding of learning contents. | 4.08 | 0.87 | ** |
| 21 | Creating advisory statements for wrong answers improves the level of understanding of learning contents. | 3.87 | 0.80 | ** |
| 27 | Solving questions created by others fixes the knowledge of learning contents. | 3.85 | 0.81 | ** |
| 11 | Questions are created while being conscious of important matters of learning. | 3.85 | 0.90 | ** |
| 38 | Assessing the question created by others improves knowledge of the program. | 3.67 | 0.74 | ** |
| 30 | Wrong answers of the questions created by others are appropriate. | 3.64 | 0.74 | ** |
| 35 | Assessing questions created by others is useful for review of learning contents. | 3.67 | 0.81 | ** |
| 28 | Questions created by others improve consciousness of important matters of learning. | 3.64 | 0.78 | ** |
| 17 | Creating wrong answers improves the level of understanding of learning contents. | 3.62 | 0.75 | ** |
| 39 | Assessment contents of others are appropriate. | 3.74 | 0.94 | ** |
| 16 | Creating alternative answers (wrong answers) for the problems is difficult. | 3.87 | 1.15 | ** |
| 23 | Creating an advisory statement for wrong answers fixes the knowledge of learning contents. | 3.67 | 0.93 | ** |
| 32 | Assessing questions created by others is difficult. | 3.69 | 1.13 | ** |
| 36 | Assessing questions created by others improves program-creation capability. | 3.56 | 0.94 | ** |
| 10 | The question is created to be helpful for other learners. | 3.59 | 0.99 | ** |
| 42 | Advisory statement for wrong answers by others is appropriate. | 3.44 | 0.79 | ** |
| 37 | Assessing questions created by others improves grammatical knowledge of the programming language. | 3.49 | 0.88 | ** |
| 12 | Questions are created with consciousness of the intention of question setting. | 3.56 | 1.07 | ** |
| 19 | Creating wrong answers fixes the knowledge of learning contents. | 3.51 | 1.05 | ** |
| 5 | Question-posing activity provides more learning effects than solving the question. | 3.46 | 1.00 | ** |
| 31 | Advisory statements for wrong answers by others are appropriate. | 3.46 | 1.02 | ** |
| 26 | Solving questions created by others improves enthusiasm for learning. | 3.46 | 1.10 | * |
| 33 | Assessing questions created by others improves the level of understanding of learning. | 3.38 | 1.02 | * |
| 24 | Questions created by others are difficult. | 3.38 | 1.04 | * |
| 3 | Question-posing activity improves enthusiasm for learning. | 3.28 | 0.94 | + |
| 45 | Question assessment browsing function of coordinated learning environments is easy to use. | 3.28 | 1.10 | |
| 15 | Consulting with other learners aids question-posing. | 2.77 | 1.35 | |
| 43 | The question-posing function of coordinated learning environments is easy to use. | 2.82 | 1.14 | |
| 44 | The question assessment function of coordinated learning environments is easy to use. | 3.15 | 1.11 | |
| 18 | Creating a wrong answer improves enthusiasm for learning. | 2.87 | 0.98 | |
| 22 | Creating an advisory statement for wrong answer improves enthusiasm for learning. | 2.92 | 0.96 | |
| 14 | I studied to create questions. | 2.92 | 1.16 | |
| 34 | Assessing questions created by others improves enthusiasm for learning. | 3.00 | 1.10 | |

**: p < .01, *: p < .05, +: p < .1

Table 2 Cross tabulation of priority items of question-posing and χ² test results

Actual frequency

| Item | Priority 1 | Priority 2 | Priority 3 | Priority 4 | Priority 5 | Priority 6 | Total |
|---|---|---|---|---|---|---|---|
| Difficulty level of question | 1 | 9 | 9 | 10 | 6 | 4 | 39 |
| Option of question (wrong answer) | 2 | 10 | 12 | 7 | 7 | 1 | 39 |
| Advisory statement for wrong answer | 1 | 2 | 5 | 5 | 12 | 14 | 39 |
| Learning contents of question | 20 | 9 | 3 | 5 | 1 | 1 | 39 |
| Originality of question | 4 | 3 | 4 | 3 | 10 | 15 | 39 |
| Helpful for learners | 11 | 6 | 6 | 9 | 3 | 4 | 39 |
| Total | 39 | 39 | 39 | 39 | 39 | 39 | 234 |

Expected frequency: 6.5 in every cell (each row total and each column total is 39; grand total 234).

Adjusted residual and significance test

| Item | Priority 1 | Priority 2 | Priority 3 | Priority 4 | Priority 5 | Priority 6 |
|---|---|---|---|---|---|---|
| Difficulty level of question | -2.6 | 1.2 | 1.2 | 1.6 | -0.2 | -1.2 |
| Option of question (wrong answer) | -2.1 | 1.6 | 2.6 ** | 0.2 | 0.2 | -2.6 |
| Advisory statement for wrong answer | -2.6 | -2.1 | -0.7 | -0.7 | 2.6 ** | 3.5 ** |
| Learning contents of question | 6.4 ** | 1.2 | -1.6 | -0.7 | -2.6 | -2.6 |
| Originality of question | -1.2 | -1.6 | -1.2 | -1.6 | 1.6 | 4.0 ** |
| Helpful for learners | 2.1 * | -0.2 | -0.2 | 1.2 | -1.6 | -1.2 |

**: p < .01, *: p < .05

Conclusion

Aiming to improve the level of understanding in programming education, the authors developed environments for performing collaborative question-posing activities on Moodle and attempted a lesson practice. A questionnaire survey conducted after the lesson practice revealed that the learners consider question-posing and assessment activities useful for review of learning contents, for fixing knowledge of learning contents, and for improving programming skills. From the learners' subjective assessment, the effectiveness of the question-posing activity was therefore confirmed. It was also noted that learners regard the question-posing activity as difficult. An important shortcoming of the question-posing activity is that it is time-consuming. In the future, the authors intend to improve the ease of use of the collaborative question-posing environments, for which the ratings were not significantly affirmative. The authors also intend to identify the aspects of question-posing that learners find difficult and to investigate how to improve enthusiasm for learning.

The authors appreciate the support of the Grant-in-Aid for Scientific Research, foundation study (C 22500955), given by the Ministry of Education, Culture, Sports, Science and Technology of Japan for this research.

Reference

Fu-Yun, Y., Yu-Hsin, L., & Tak-Wai, C. (2005). A web-based learning system for question-posing and peer assessment. Innovation in Education and Teaching International, 42(4), pp.337-348.
Nakano, A., Hirashima, T., & Takeuchi, A. (2000). An Intelligent Learning Environment for Learning by Learning by Problem Posing. IEICE Trans D-Ⅰ, J83-D-Ⅰ(6), pp.539-549 (in Japanese).
Takagi, M., Tanaka, M., & Teshigawara, Y. (2007). A Collaborative WBT System Enabling Students to Create Quizzes and to Review Them Interactively. IPSJ Journal, 48(3), pp.1532-1545 (in Japanese).
Hirai, Y., & Hazeyama, A. (2008). Question-posing Based Collaborative Learning Support System and Its Application to a Distributed Asynchronous Learning Environment. IPSJ Journal, 49(10), pp.3341-3353 (in Japanese).
Shinkai, J., Hayase, Y., & Miyaji, I. (2010). A Study of Generation and Utilization of Fill-in the Blank Questions for Programming Education on Moodle. IEICE Technical Report ET2010-42, pp.7-10 (in Japanese).
Shinkai, J., & Miyaji, I. (2011). Effects of Blended Instruction on C Programming Education. Transactions of Japanese Society for Information and Systems in Education, 28(2), pp.151-162 (in Japanese).
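As a supplementary sketch, the χ² statistic and adjusted residuals reported for Table 2 can be recomputed from the actual frequencies. The code below is a minimal illustration that assumes the usual Pearson χ² test of independence and the standard adjusted (standardized) residual formula. The function name and row labels are hypothetical, and this is not the authors' analysis script.

```python
import math

# Actual frequencies from Table 2: rows are items, columns are priorities 1 to 6.
OBSERVED = {
    "Difficulty level of question": [1, 9, 9, 10, 6, 4],
    "Option of question (wrong answer)": [2, 10, 12, 7, 7, 1],
    "Advisory statement for wrong answer": [1, 2, 5, 5, 12, 14],
    "Learning contents of question": [20, 9, 3, 5, 1, 1],
    "Originality of question": [4, 3, 4, 3, 10, 15],
    "Helpful for learners": [11, 6, 6, 9, 3, 4],
}


def chi_square_and_residuals(table):
    """Return (chi2, degrees of freedom, adjusted residuals) for a 2-D table."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    chi2 = 0.0
    residuals = []
    for i, row in enumerate(table):
        residual_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
            # Adjusted residual: (O - E) / sqrt(E * (1 - r_i/N) * (1 - c_j/N))
            spread = expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            residual_row.append((observed - expected) / math.sqrt(spread))
        residuals.append(residual_row)
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df, residuals


chi2, df, residuals = chi_square_and_residuals(list(OBSERVED.values()))
print(f"chi2({df}) = {chi2:.2f}")
for label, residual_row in zip(OBSERVED, residuals):
    print(label, [round(r, 1) for r in residual_row])
```

Run on these frequencies, the sketch prints χ²(25) = 111.85, in agreement with the value reported in the text. Cells whose adjusted residual exceeds roughly 1.96 or 2.58 in absolute value correspond to the 5% and 1% levels of a two-sided normal test.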