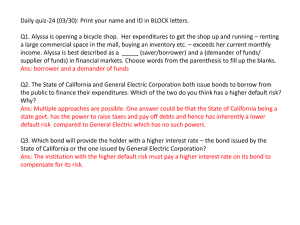



Q#01: Multiple Choice Questions

01: Feed-forward networks have ______ connections from the input layer to the output layer. (A) Two-way (B) One-way (C) None

02: LVQ is a powerful method for classifying patterns that are not ______ separable. (A) Linearly (B) Nonlinearly (C) None

03: ______ recognize and group similar input vectors; using these groups, the network automatically sorts the inputs into categories. (A) Self-organizing maps (B) Recurrent networks (C) Radial basis networks (D) Competitive layers

04: Early stopping is a technique that uses ______ different data sets. (A) 4 (B) 3 (C) 2 (D) None

05: An activation function is a ______ function that takes the argument u and produces the output y. (A) Linear (B) Nonlinear (C) Both A and B (D) None

06: The most commonly used activation function in feed-forward neural networks is: (A) Log-sigmoid transfer function (B) Hyperbolic tangent function (C) Positive linear function (D) Symmetric hard limiter

07: The command x = radbas(u) generates the response of the ______ function. (A) Triangular basis (B) Max transfer (C) Positive linear (D) Gaussian

08: MATLAB's Neural Network Toolbox provides the function satlin to generate the ______ function. (A) Symmetric saturating linear transfer (B) Saturating linear transfer (C) Softmax transfer (D) Log-sigmoid transfer

09: In MATLAB, the trainbfg function runs the ______ back-propagation training algorithm. (A) Quasi-Newton (B) Bayesian regularization (C) Levenberg-Marquardt (D) RPROP

10: Which MATLAB function trains an MLP network using gradient descent back-propagation with momentum? (A) trainlm (B) traingdm (C) traingda (D) traingd

Q#02: Fill in the Blanks

1: A perceptron can be created with the function ______. (Ans: newp)

2: The ______ command brings up the Neural Network Toolbox blockset. (Ans: neural)

3: Training and learning functions are mathematical procedures used to automatically adjust the network's ______ and ______. (Ans: weights, biases)

4: LVQ stands for ______. (Ans: Learning vector quantization)

5: Recurrent networks use feedback to recognize ______ and ______ patterns. (Ans: spatial, temporal)

6: The command y = satlins(u) generates the response of the ______ function. (Ans: symmetric saturating linear transfer)

7: The MATLAB function trainlm trains an MLP network using the ______ back-propagation algorithm. (Ans: Levenberg-Marquardt)

8: Radial basis function networks can be designed with the function ______. (Ans: newrbe)

9: The drawback of newrbe is that it produces a network with as many ______ neurons as there are input vectors. (Ans: hidden)

10: The weight vector of the associator is computed as ______. (Ans: W = dX)

Q#03: True or False

1: The pseudoinverse rule is used with Hebbian learning when the patterns are not orthogonal. (T)

2: MATLAB's Neural Network Toolbox provides four distinct ways to calculate distances from a particular neuron to its neighbors. (T)

3: The hextop function creates a dissimilar set of neurons, but they are in a hexadecimal pattern. (F)

4: The dist function calculates the Euclidean distance from a home neuron to any other neuron. (T)

5: The newsop function is used to create a self-organizing map neural network. (F)

6: The Elman network is commonly a three-layer network with feedback from the first-layer output to the first-layer input. (F)

7: The hyperbolic tangent function is a very popular activation function, widely used in multilayer perceptron networks. (T)

8: Self-organizing maps learn to classify input vectors according to their dissimilarity. (F)

9: Radial basis networks provide an alternative, fast method for designing linear feed-forward networks. (F)

10: The softmax transfer function is usually used with committee machines. (T)
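Several questions above name transfer functions (satlin, satlins, radbas, log-sigmoid, hyperbolic tangent, positive linear, symmetric hard limiter, softmax). As a study aid, the sketch below re-implements their standard formulas in plain Python so the differences are easy to compare. The Python functions only borrow the MATLAB names; they are not the toolbox code, and the scalar-input interface (a list for softmax) is an assumption of this sketch.

```python
import math

# Python re-implementations of the transfer-function formulas named in the
# quiz. The names mirror MATLAB's, but this is NOT the toolbox code.
# Each function takes a scalar net input n (softmax takes a list).

def hardlims(n):
    """Symmetric hard limiter: +1 if n >= 0, otherwise -1."""
    return 1.0 if n >= 0 else -1.0

def poslin(n):
    """Positive linear: max(0, n)."""
    return max(0.0, n)

def satlin(n):
    """Saturating linear: n clipped to [0, 1]."""
    return min(1.0, max(0.0, n))

def satlins(n):
    """Symmetric saturating linear: n clipped to [-1, 1]."""
    return min(1.0, max(-1.0, n))

def logsig(n):
    """Log-sigmoid 1 / (1 + e^-n), output in (0, 1); the branch avoids exp overflow."""
    if n >= 0:
        return 1.0 / (1.0 + math.exp(-n))
    e = math.exp(n)
    return e / (1.0 + e)

def tansig(n):
    """Hyperbolic tangent sigmoid 2 / (1 + e^-2n) - 1, which equals tanh(n)."""
    return math.tanh(n)

def radbas(n):
    """Radial basis (Gaussian) e^(-n^2): peaks at 1 when n = 0."""
    return math.exp(-n * n)

def tribas(n):
    """Triangular basis: 1 - |n| for |n| <= 1, otherwise 0."""
    return max(0.0, 1.0 - abs(n))

def softmax(ns):
    """Softmax e^n_i / sum_j e^n_j; subtracting max(ns) first avoids overflow."""
    m = max(ns)
    exps = [math.exp(x - m) for x in ns]
    total = sum(exps)
    return [e / total for e in exps]

print(satlin(1.5), satlins(-1.5))  # 1.0 -1.0
print(radbas(0.0))                 # 1.0
```

The first print line shows the distinction behind Q#01 item 08 and Q#02 item 6: satlin saturates at 0 and 1, while satlins saturates at -1 and 1.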
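Q#02 item 10 and Q#03 item 1 both concern the linear associator. The sketch below reads the quiz's W = dX as the Hebb-rule outer-product form W = D X^T, where the columns of X are the input patterns and the columns of D are the desired outputs; that reading, and every helper name in the code, is an assumption. Because W X = D (X^T X), the Hebb rule recalls exactly only when the inputs are orthonormal. With two non-orthogonal inputs it misrecalls the first pattern, while the pseudoinverse rule W = D X^+, with X^+ = (X^T X)^-1 X^T, recalls both.

```python
# Linear associator a = W x, studied with two NON-orthogonal input patterns.
# Columns of X are the inputs x_q; columns of D are the desired outputs d_q.
# All helper names below are hypothetical, written for this sketch.

def transpose(A):
    return [list(row) for row in zip(*A)]

def matmul(A, B):
    cols = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def inverse(A):
    """Gauss-Jordan inversion with partial pivoting; A must be nonsingular."""
    n = len(A)
    M = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("matrix is singular")
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]

def hebb_rule(D, X):
    """Hebb rule: W = D X^T, i.e. the sum of outer products d_q x_q^T."""
    return matmul(D, transpose(X))

def pseudoinverse_rule(D, X):
    """W = D X^+ with X^+ = (X^T X)^-1 X^T (columns of X linearly independent)."""
    Xt = transpose(X)
    return matmul(D, matmul(inverse(matmul(Xt, X)), Xt))

X = [[1, 1],
     [0, 1]]   # x_1 = (1, 0), x_2 = (1, 1): x_1 . x_2 = 1, so not orthogonal
D = [[1, -1]]  # targets d_1 = 1, d_2 = -1

print(matmul(hebb_rule(D, X), X))           # [[0, -1]]      x_1 is misrecalled
print(matmul(pseudoinverse_rule(D, X), X))  # [[1.0, -1.0]]  both recalled exactly
```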
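Q#03 items 2 and 4 refer to the toolbox's four neuron-distance functions: dist, boxdist, linkdist and mandist. The sketch below shows in Python what each one measures between two neuron positions on a hand-built 2-by-3 grid. The Python signatures are assumptions (the MATLAB functions take a position matrix and return a full distance matrix), and linkdist is modelled, as this sketch understands the toolbox definition, as a breadth-first search in which neurons at most one unit apart (Euclidean) count as one link.

```python
import math
from collections import deque

# Sketches of the four neuron-distance measures, applied to two positions.
# Signatures are assumptions: the MATLAB functions take a position matrix
# and return a full distance matrix instead.

def dist(a, b):
    """Euclidean distance."""
    return math.dist(a, b)

def mandist(a, b):
    """Manhattan distance: sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def boxdist(a, b):
    """Box distance: largest absolute coordinate difference."""
    return max(abs(x - y) for x, y in zip(a, b))

def linkdist(positions, i, j, tol=1e-9):
    """Link distance: number of steps from neuron i to neuron j, where two
    neurons at most 1 apart (Euclidean) are one link apart (breadth-first search)."""
    steps = {i: 0}
    queue = deque([i])
    while queue:
        k = queue.popleft()
        if k == j:
            return steps[k]
        for m, p in enumerate(positions):
            if m not in steps and math.dist(positions[k], p) <= 1 + tol:
                steps[m] = steps[k] + 1
                queue.append(m)
    return math.inf  # j is not reachable from i

# Hand-built 2-by-3 rectangular grid: (0,0), (1,0), (0,1), (1,1), (0,2), (1,2)
grid = [(x, y) for y in range(3) for x in range(2)]
home, other = grid[0], grid[5]
print(dist(home, other))     # ~2.236, i.e. sqrt(5)
print(mandist(home, other))  # 3
print(boxdist(home, other))  # 2
print(linkdist(grid, 0, 5))  # 3
```

On a rectangular grid the diagonal neighbor is sqrt(2) away, so linkdist must go around it, which is why it agrees with mandist here but not with boxdist.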