Lecture 1 - BYU Department of Economics

advertisement

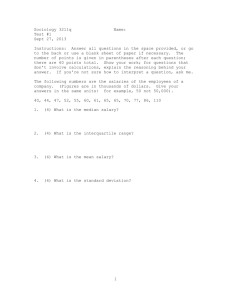

Econ 388, R. Butler, 2014 revisions
Lecture 1

I. Introduction: names, prayers, responsibility for learning, grading and syllabus

II. Simple (Bivariate) Regression: test behavioral relationships or make predictions.

A. Linear Relationships: Some Background

Linear relationship: \(Y = a + bX\), with data variables \(Y\) and \(X\) and parameters \(a\) = intercept (the point where the line crosses the Y-axis) and \(b\) = slope (of the straight line, = rise/run).

B. FHSS salaries and teaching experience: Is there a relationship between salary and teaching experience? For the following data, we can check this by looking at a plot of the data or at the simple correlation between Y (annual salary) and X (experience). FHSS faculty (see the lec4corr.ppt file):

| Annual salary ($) | Experience (years) | male |
|---|---|---|
| 45,000 | 2 | 1 |
| 60,600 | 7 | 0 |
| 70,000 | 10 | 1 |
| 85,000 | 18 | 1 |
| 50,800 | 6 | 0 |
| 64,000 | 8 | 1 |
| 62,500 | 8 | 0 |
| 87,000 | 15 | 1 |
| 92,000 | 25 | 0 |
| 89,500 | 22 | 1 |

(We ignore the dummy variable male for the moment; male = 1 for male faculty and male = 0 for female faculty.)

Probabilistic linear relationships:

\[ y_i = \beta_0 + \beta_1 x_i + \varepsilon_i \]

(this is the "population" regression, our theoretical model), where \(\varepsilon_i\) is the error term.

When we estimate this linear relationship, we get specific estimates for the slope (\(\hat\beta_1\)), the intercept (\(\hat\beta_0\)), and the error term (the estimated error term is called the residual, \(\hat\varepsilon_i\)):

\[ y_i = \hat\beta_0 + \hat\beta_1 x_i + \hat\varepsilon_i \]

(this is the "sample" regression, estimated with data).

Once we pick values for the intercept and slope (respectively \(\hat\beta_0\) and \(\hat\beta_1\)), we have a line from which we can test hypotheses (about the population regression) or make predictions.

*What does the hypothesis that experience leads to higher salaries indicate about what we expect to find for the slope and intercept values?
*What does the hypothesis that new professors (with no teaching experience) make $40,000 a year indicate about the expected slope and intercept values?
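The simple correlation mentioned in B can be computed directly from its definition. The sketch below is a minimal Python version using only the standard library; the helper name `correlation` is illustrative, not part of any course software.

```python
import math

# FHSS faculty data from the table in B: annual salary (dollars) and experience (years)
salary = [45000, 60600, 70000, 85000, 50800, 64000, 62500, 87000, 92000, 89500]
experience = [2, 7, 10, 18, 6, 8, 8, 15, 25, 22]


def correlation(x, y):
    """Simple (Pearson) correlation between two equal-length lists of numbers."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sums of cross-products and of squares of deviations from the means
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    return s_xy / math.sqrt(s_xx * s_yy)


r = correlation(experience, salary)
print(f"correlation(salary, experience) = {r:.3f}")  # prints 0.953
```

A value this close to 1 indicates a strong positive linear relationship in this sample, which is what the hypothesis that experience leads to higher salaries would lead us to expect.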
E. Ordinary least squares criterion for choosing the values of \(\hat\beta_0\) and \(\hat\beta_1\): minimize the following sum of squares (called the "residual sum of squares," "error sum of squares," or "unexplained sum of squares," since these are the deviations in the dependent variable that are unexplained by the regression model):

\[ \min_{\hat\beta_0,\,\hat\beta_1} \sum_{i=1}^{n} (y_i - \hat y_i)^2, \qquad \hat y_i = \hat\beta_0 + \hat\beta_1 x_i \]

where \(\hat y_i\) is the value of y predicted by the regression line. This yields the following estimators (see Wooldridge, chapter 2):

\[ \hat\beta_1 = \frac{\sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y)}{\sum_{i=1}^{n} (x_i - \bar x)^2}, \qquad \hat\beta_0 = \bar y - \hat\beta_1 \bar x \]

For the FHSS data, \(\bar y = 70{,}640\) and \(\bar x = 12.1\), so the slope estimate is

\[ \hat\beta_1 = \frac{(45{,}000 - 70{,}640)(2 - 12.1) + (60{,}600 - 70{,}640)(7 - 12.1) + \cdots}{(2 - 12.1)^2 + (7 - 12.1)^2 + \cdots} \]

Estimators are formulas; estimates are the application of those formulas to specific samples. Using these formulas, we find that \(\hat\beta_0 = 44{,}883\) and \(\hat\beta_1 = 2{,}128.7\). For example, the predicted salary when experience = 12 years is

\[ \hat y_i = \hat\beta_0 + \hat\beta_1 x_i = 44{,}883 + 2{,}128.7(12) \approx 70{,}427 \]

What do these estimates tell us about the structure of wages at FHSS? What is the predicted wage for someone with 12 years of experience? What is the predicted wage for someone with 13 years of experience? What is the difference between these two predicted wages?

Stata code (open the Do-file Editor in Stata, type this, save it as a file (*.do), and execute it using the "do" option on the toolbar at the upper right):

    #delimit ;
    clear;
    input salary experience male;
    45000 2 1;
    60600 7 0;
    70000 10 1;
    85000 18 1;
    50800 6 0;
    64000 8 1;
    62500 8 0;
    87000 15 1;
    92000 25 0;
    89500 22 1;
    end;
    regress salary experience;

To get the predicted salary at 12 years of experience, we can use a trick with a (0, -1) variable: add an extra observation with salary = 0, experience = 12, and pred_trick = -1, and set pred_trick = 0 for all of the actual observations. The pred_trick dummy fits the extra observation exactly, so it does not change the other estimates, and the coefficient on pred_trick equals \(\hat\beta_0 + \hat\beta_1(12)\), the predicted salary at 12 years:

    #delimit ;
    clear;
    input salary experience pred_trick;
    45000 2 0;
    60600 7 0;
    70000 10 0;
    85000 18 0;
    50800 6 0;
    64000 8 0;
    62500 8 0;
    87000 15 0;
    92000 25 0;
    89500 22 0;
    0 12 -1;
    end;
    regress salary experience pred_trick;
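As a check on the hand calculation, the OLS formulas above can be applied directly to the ten FHSS observations. This is a minimal Python sketch using only the standard library; `predict` is a hypothetical helper name used just for illustration.

```python
# FHSS faculty data: annual salary (dollars) and experience (years)
salary = [45000, 60600, 70000, 85000, 50800, 64000, 62500, 87000, 92000, 89500]
experience = [2, 7, 10, 18, 6, 8, 8, 15, 25, 22]

n = len(salary)
x_bar = sum(experience) / n  # mean experience
y_bar = sum(salary) / n      # mean salary

# Slope: sum of cross-deviations divided by sum of squared deviations of x
s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(experience, salary))
s_xx = sum((x - x_bar) ** 2 for x in experience)
beta1_hat = s_xy / s_xx

# Intercept: the OLS line passes through the point of means (x_bar, y_bar)
beta0_hat = y_bar - beta1_hat * x_bar


def predict(x):
    """Salary predicted by the sample regression line at experience x."""
    return beta0_hat + beta1_hat * x


print(f"beta0_hat = {beta0_hat:,.0f}, beta1_hat = {beta1_hat:,.1f}")
print(f"predicted salary, 12 years: {predict(12):,.0f}")
print(f"predicted salary, 13 years: {predict(13):,.0f}")
print(f"difference: {predict(13) - predict(12):,.1f}")
```

The difference between the two predictions is exactly the slope estimate: in a linear model, one more year of experience always changes the predicted salary by \(\hat\beta_1\), whatever the starting level of experience.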
III. Multivariate Regression

This just means we have two or more independent variables (that is, two or more slope coefficients). To run this regression in Stata, we just need to change the last line of the program above to the following:

    regress salary experience male;

and we would get the following sample regression function (for our 10 observations from FHSS faculty):

\[ \widehat{\text{salary}} = 42{,}256 + 2{,}106\,\text{experience} + 4{,}836\,\text{male} \]

Notice that the intercept and the slope coefficient for experience did not change much. The 2,106 coefficient now means "holding gender constant, each additional year of experience (for either males or females) increases predicted salary by $2,106." What does the coefficient on the male variable indicate? What is the expected salary of a female faculty member with 12 years of experience?

Note that the \(\hat\beta\)'s are estimators that depend on y, and since y is a random variable, so are the \(\hat\beta\)'s. Random variables are usefully characterized by their mean, their standard deviation (the square root of the variance), and the shape of their distribution.

IV. Multivariate Regression Application: Difference-in-Difference to measure regime change (such as the effect of a new light rail system on the local economy, or of new laws on the incomes, health, or employment status of the affected individuals)

Econometrics is often used to make causal inferences. When a state builds a new light rail (LR) system, it is often argued that this improves the quality of life of local residents. There are many dimensions to quality of life, but let's suppose that we are interested in the short-run wages (before migration possibly changes the size of the labor market) of those in the metropolitan area where the light rail is installed. We could address this by looking at wages before and after the change, but the danger is that wages may have risen or fallen for other reasons not related to the light rail system.
That is, we need to know what would have happened to these workers in the absence of the light rail system; this is sometimes called the counterfactual. A difference-in-difference approach to estimating the impact of LR on wages picks a "reasonable comparison" group (in this case, workers in a similar labor market that did not adopt an LR system) and compares this group's before-and-after change (with no LR system) to the before-and-after change for those living in the metro area where LR was built. The treatment group is workers living in a metro area with LR; the control group is workers living in a metro area without LR, over the same periods.

Difference-in-difference estimation exploits variation over time between the treatment and control groups: it adjusts the treatment group's change in wage income (incomes after minus incomes before; call this the "a - b" change) using the control group's change. The adjustment assumes that the control group is just like the treatment group, except that it did not get the treatment. So the control group's "incomes after" minus "incomes before" (denoted "c - d") represents what would have happened to the treatment group had it not received the treatment. If the control group is suitable in just this way, the basic strategy is to use the difference in differences, "(a - b) - (c - d)", as the net effect of LR on wages, accounting for other trends in wages (captured in "c - d") that would have occurred in the absence of the treatment.

For concreteness, assume that a mythical place called SLC put in an LR system in 1999, and another mythical place with a similar urban population, call it Boise, did not. Here are the average wages before and after for the two cities:

Real Wages for Two Metro Areas

| Year | SLC (LR, treatment group) | Boise (no LR, control group) |
|---|---|---|
| 1998 | $30,000 | $25,000 |
| 2000 | $34,000 | $27,500 |

The first difference, $4,000, is the increase in wage income in SLC after LR.
However, note that even in places without LR, urban wages were increasing: wages rose $2,500 in Boise. So the real effect of LR in SLC (assuming the Boise data provide the right counterfactual) is $1,500, as indicated by the following difference-in-difference calculation:

Diff-in-diff = ($34,000 - $30,000) - ($27,500 - $25,000) = $1,500.

Since these differences are not the result of a randomized experiment, it is very often useful to embed the difference-in-difference estimator in a regression framework, so that we can additionally control for other factors that might affect the outcomes (in this case, workers' age, marital status, and educational attainment). To see how the difference in differences is estimated with regression coefficients, consider workers' wages as determined by being in the treatment group (a metro area with LR), by being observed after versus before the LR system became operational (a dummy variable that equals 1 after the LR is operational and 0 before), by the interaction of the treatment group and the LR dummy, and by other factors (demographic variables such as those listed above). The model for individual i in year t (β, γ, λ, and δ are parameters; the subscripted variables are data) is:

\[ \text{wage}_{i,t} = X_{i,t}\beta + \gamma\,\text{treatment}_i + \lambda\,\text{after\_LR}_t + \delta\,(\text{treatment}_i \times \text{after\_LR}_t) + \varepsilon_{i,t} \]

Hence the expected wage (with \(E(\varepsilon_{i,t}) = 0\)) for the treatment group before LR is operational (setting treatment = 1 and after_LR = 0) is

\[ E(\text{wage}_{i,t}) = X^T_{i,t}\beta + \gamma \]

and the expected wage for the treatment group after LR is operational (treatment = 1 and after_LR = 1) is

\[ E(\text{wage}_{i,t}) = X^T_{i,t}\beta + \gamma + \lambda + \delta \]

So, holding X fixed, the first difference for the treatment group is \((X^T_{i,t}\beta + \gamma + \lambda + \delta) - (X^T_{i,t}\beta + \gamma) = \lambda + \delta\).
For the control group (treatment = 0), a similar calculation shows that the before-and-after wage difference equals \((X^C_{i,t}\beta + \lambda) - X^C_{i,t}\beta = \lambda\). So the regression equivalent of the difference in differences (controlling for other characteristics, X) is \((\lambda + \delta) - \lambda = \delta\), the coefficient on the "treatment × after_LR" interaction term.

SAS APPENDIX

SAS code (open the SAS editor, type this, save it as a file (*.sas), and execute it by clicking the "run" button, the little running figure, on the toolbar at the upper right):

    data one;
    input salary experience male;
    * you can use 'datalines' instead of 'cards' here;
    cards;
    45000 2 1
    60600 7 0
    70000 10 1
    85000 18 1
    50800 6 0
    64000 8 1
    62500 8 0
    87000 15 1
    92000 25 0
    89500 22 1
    ;
    run;
    proc reg;
    model salary=experience male;
    run;
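The multivariate regression in the SAS appendix (model salary=experience male), and the Section IV claim that the coefficient on the treatment × after_LR interaction equals the difference in differences, can both be checked with a small OLS routine. The sketch below is a minimal Python version using only the standard library. `ols` is a hypothetical helper that solves the normal equations (X'X)b = X'y by Gauss-Jordan elimination; that is adequate for tiny, well-conditioned examples like these, but it is not how statistical packages compute regressions, and it reports coefficients only (no standard errors). For the difference-in-difference part, it treats the four cell means of the SLC/Boise table as four observations with only a constant as the X control, an assumption made purely for illustration.

```python
def ols(rows, y):
    """OLS coefficients from the normal equations (X'X) b = X'y.

    rows: one list of regressor values per observation, including a
    leading 1 for the intercept. Solved by Gauss-Jordan elimination with
    partial pivoting (adequate for tiny, well-conditioned problems).
    """
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        # Swap in the row with the largest pivot in this column
        pivot = max(range(col, k), key=lambda row: abs(a[row][col]))
        a[col], a[pivot] = a[pivot], a[col]
        # Eliminate this column from every other row
        for row in range(k):
            if row != col:
                factor = a[row][col] / a[col][col]
                a[row] = [v - factor * p for v, p in zip(a[row], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Multivariate regression: salary on experience and male (FHSS data)
salary = [45000, 60600, 70000, 85000, 50800, 64000, 62500, 87000, 92000, 89500]
experience = [2, 7, 10, 18, 6, 8, 8, 15, 25, 22]
male = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
rows = [[1, x, m] for x, m in zip(experience, male)]
b0, b_exp, b_male = ols(rows, salary)
print(f"salary-hat = {b0:,.0f} + {b_exp:,.0f} experience + {b_male:,.0f} male")

# Difference in differences: four cell means from the SLC/Boise table,
# coded as (wage, treatment, after_LR); SLC is treated, 1998 is "before"
cells = [(30000, 1, 0), (34000, 1, 1), (25000, 0, 0), (27500, 0, 1)]
did_rows = [[1, t, after, t * after] for _, t, after in cells]
wages = [w for w, _, _ in cells]
const, coef_treatment, coef_after, coef_interaction = ols(did_rows, wages)
print(f"interaction coefficient (diff-in-diff) = {coef_interaction:,.0f}")
```

With four cell means and four coefficients the DiD regression fits exactly, so the interaction coefficient reproduces the hand calculation (34,000 - 30,000) - (27,500 - 25,000), the after_LR coefficient reproduces Boise's change, and the constant is Boise's 1998 mean. With individual-level data you would add the demographic controls to each row.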