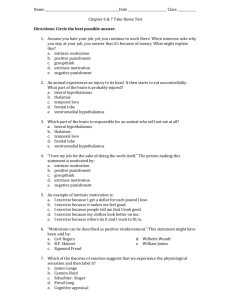

DOC - Robotics Lab


Human-Computer Interface Course – Final Project
Lecturer: Dr. 連震杰
Student ID: P78971252
Student Name: 傅夢璇
Mail address: mhfu@ismp.csie.ncku.edu.tw
Department: Information Engineering
Laboratory: ISMP Lab
Supervisor: Dr. 郭耀煌

Title: Real-time classification of evoked emotions using facial feature tracking and physiological responses
Jeremy N. Bailenson, Emmanuel D. Pontikakis, Iris B. Mauss, James J. Gross, Maria E. Jabon, Cendri A.C. Hutcherson, Clifford Nass, Oliver John. International Journal of Human-Computer Studies, Volume 66, Issue 5, pp. 303-317, May 2008.
Keywords: Facial tracking; Emotion; Computer vision

Abstract
The authors propose real-time models that predict people's emotions from their facial expressions and physiological responses while they watch videotapes. The emotions are genuinely evoked by the videotapes rather than posed. Two emotions are studied, amusement and sadness, and their intensity is also modeled. The experiments address three aspects: overall performance, a comparison of model types, and several specific tests.

Introduction
Camera and image-processing technologies have made many facial-tracking applications possible. In Japan, some cars have cameras installed in the dashboard to improve driving safety. These cameras are also used to detect whether drivers are angry, drowsy, or drunk. One goal of developing facial emotion systems is to support human-computer interface applications. Another goal is to understand people's emotions from their facial expressions.

Related work
There are at least three main ways in which psychologists assess facial expressions of emotion (Rosenberg and Ekman, 2000):
1) Naïve coders view images or videotapes and then make holistic judgments about the degree of emotion shown by the target faces.
The limitation is that the coders may miss subtle facial movements, and that the coding may be biased by idiosyncratic morphological features of different faces.
2) Componential coding schemes, in which trained coders use a highly regulated procedural technique to detect facial actions, such as the Facial Action Coding System (Ekman and Friesen, 1978). The advantage of this technique is the richness of the resulting dataset. The disadvantage is that frame-by-frame coding of the points is extremely laborious.
3) More direct measures of muscle movement via facial electromyography (EMG), with electrodes attached to the skin of the face. This allows sensitive measurement of features. However, placing the electrodes is difficult, and wearing them is relatively constraining for subjects. This approach is also not helpful for coding archival footage.

Approach
Part 1 - Actual facial emotion
The stimuli are videotapes that elicit intense emotions in the people watching them. This gives better access to genuine emotional behavior than studies that used deliberately posed faces. Ekman et al. (1990) describe the Duchenne smile, an automatic smile that involves crinkling of the eye corners. Automatic facial expressions appear to be more informative about underlying mental states than posed ones (Nass and Brave, 2005).
Part 2 - Opposite emotions and intensity
Emotions were coded second by second on a linear scale for the oppositely valenced emotions of amusement and sadness. The learning algorithms are trained both on binary data and on linear data spanning the full scale of emotional intensity.
Part 3 - Three model types
Hundreds of video frames were collected from each person, and each was rated individually for amusement and sadness. This makes it possible to build three model types.
The first type is a "universal model". It predicts how amused any face is by using one set of subjects' faces as training data and another, independent set of subjects' faces as testing data. This model would be useful for HCI applications such as bank automated teller machines, traffic light cameras, and public computers with webcams.
The second type is an "idiosyncratic model". It predicts how amused or sad a person is by using training and testing data from the same subject for each model. It is useful for HCI applications in which the same person uses the same interface. Examples include driving one's own car, using the same computer with a webcam, or any application with a camera in a private home.
Third, a gender-specific model is proposed, trained and tested using only data from subjects of the same gender. This model is useful for HCI applications that target a specific gender. Examples include make-up advertisements directed at female consumers or home repair advertisements targeted at males.
Part 4 - Features
Data are extracted not only from facial expressions but also from the subjects' physiological responses. These include cardiovascular activity, electrodermal responding, and somatic activity. In a driving application, for example, facial features could come from a camera and heart rate from the hands gripping the steering wheel.
Part 5 - Real-time algorithm
A real-time algorithm is proposed that predicts emotion from computer-vision facial feature tracking and from physiological measures extracted in real time. Some applications respond to a user's emotion. For example, cars may seek to avoid accidents caused by drowsy drivers, and advertisements may seek to match their content to the mood of a person walking by. Amusement and sadness are targeted in order to sample one positive and one negative emotion. Fifteen physiological measures were monitored.
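The three model types in Part 3 differ mainly in which subjects' data are used for training and which for testing. The sketch below illustrates those splits under stated assumptions. The record layout (`RatedSecond`) and all function names are hypothetical. The alternating-second split for the idiosyncratic model is also an assumption, since the text does not say how one person's data were divided.

```python
import random
from dataclasses import dataclass


@dataclass
class RatedSecond:
    """One expert-rated second of video (hypothetical record layout)."""
    subject: str     # participant ID
    gender: str      # "F" or "M"
    features: list   # facial and/or physiological feature vector
    rating: float    # expert rating, 0 (neutral) to 8 (strong)


def universal_split(data, seed=0):
    """Universal model: training and testing subjects are disjoint."""
    subjects = sorted({s.subject for s in data})
    random.Random(seed).shuffle(subjects)
    train_ids = set(subjects[: len(subjects) // 2])
    train = [s for s in data if s.subject in train_ids]
    test = [s for s in data if s.subject not in train_ids]
    return train, test


def idiosyncratic_split(data, subject):
    """Idiosyncratic model: train and test on one person's data.

    Assumption: alternate seconds go to training and testing."""
    own = [s for s in data if s.subject == subject]
    return own[0::2], own[1::2]


def gender_specific_split(data, gender, seed=0):
    """Gender-specific model: a subject-disjoint split within one gender."""
    return universal_split([s for s in data if s.gender == gender], seed)
```

Neighbouring seconds are strongly correlated, so an alternating split probably gives optimistic idiosyncratic scores. Splitting one person's recording into contiguous blocks would be a stricter choice.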
The selected films induced dynamic changes in emotional state over the 9-min period, producing varying levels of emotional intensity across participants.

Data collection
Training data: the data were taken from 151 Stanford undergraduates who watched films pretested to elicit amusement and sadness. Their faces were videotaped and their physiological responses were assessed while they watched. In the laboratory session, participants watched a 9-min film clip composed of four segments: an amusing one, a neutral one, a sad one, and another neutral one (each segment was approximately 2 min long). From this larger dataset of 151, 41 participants were randomly chosen to train and test the learning algorithms.

Expert ratings of emotions
Ratings were anchored at 0 for neutral and at 8 for strong laughter (amusement) or a strong sadness expression (sadness). Average inter-rater reliabilities were satisfactory, with Cronbach's α = 0.89 (SD = 0.13) for amusement behavior and 0.79 (SD = 0.11) for sadness behavior.

Physiological measures
The 15 physiological measures monitored included heart rate, systolic blood pressure, diastolic blood pressure, mean arterial blood pressure, pre-ejection period, skin conductance level, finger temperature, finger pulse amplitude, finger pulse transit time, ear pulse transit time, ear pulse amplitude, composite of peripheral sympathetic activation, composite cardiac activation, and somatic activity, as shown in the figure.

System architecture
The videos of the 41 participants were analyzed at 20 frames per second. The level of amusement or sadness of every person was rated for every second, from 0 (less amused/sad) to 8 (more amused/sad). The goal is to predict this level for every person at every individual second. The figure shows the architecture of the emotion recognition system, which takes facial-tracking output and physiological responses as input. The NEVEN Vision Facial Feature Tracker is used to measure the person's facial expression in every frame.
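The text does not say how the 20 tracked frames in each second are reduced to match the one-per-second expert ratings. It also does not say how facial and physiological features are combined into one input. The following is a minimal sketch that assumes simple per-second averaging of the tracker output and plain concatenation of feature vectors. `per_second_face_features`, `build_dataset`, and the input formats are hypothetical.

```python
FPS = 20  # frame rate stated in the text


def per_second_face_features(frames, fps=FPS):
    """Average each complete block of `fps` frames into one feature vector.

    frames: per-frame vectors, e.g. flattened (x, y) coordinates of the
    tracked points (assumed format). A trailing partial second is dropped."""
    seconds = []
    for start in range(0, len(frames) - fps + 1, fps):
        window = frames[start:start + fps]
        seconds.append([sum(col) / fps for col in zip(*window)])
    return seconds


def build_dataset(frames, physio, ratings, mode="both", fps=FPS):
    """Pair one feature vector per second with that second's expert rating.

    physio: one physiological vector per second (assumed format).
    mode: "face", "physio", or "both" (face features followed by physiological ones)."""
    if mode not in ("face", "physio", "both"):
        raise ValueError(f"unknown mode: {mode}")
    face = per_second_face_features(frames, fps)
    n = min(len(face), len(physio), len(ratings))
    rows = []
    for t in range(n):
        x = []
        if mode in ("face", "both"):
            x += face[t]
        if mode in ("physio", "both"):
            x += list(physio[t])
        rows.append((x, ratings[t]))
    return rows
```

Averaging discards within-second motion. Adding per-second minimum, maximum, or spread of each coordinate would be a natural extension of the same layout.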
Twenty-two points are tracked on the face, grouped into four regions: mouth, nose, eyes, and eyebrows.
[Figure: tracked facial points, labeled eyebrow, eyes, nose, mouth]

Predicting emotion intensity
The WEKA software package is used as the statistical tool. Its linear regression function uses the Akaike criterion for model selection. Two-fold cross-validation was performed on each dataset using two non-overlapping sets of subjects. Separate tests were performed for sadness and amusement. Three tests predict the expert ratings using face video alone, physiological features alone, and both together. The table below shows that the intensity of amusement is easier to predict than that of sadness. The correlation coefficients of the sadness neural nets were consistently 20–40% lower than those for the amusement classifiers. The table also shows that classifiers using the facial features performed better than those using only the physiological ones.
The expert ratings were then discretized for amusement and sadness. In the amusement datasets, all expert ratings less than or equal to 0.5 were set to neutral, and ratings of 3 or higher were discretized to amused. In the sadness datasets, all expert ratings less than or equal to 0.5 were discretized to neutral, and ratings of 1.5 or higher were discretized to sad.

Emotion classification
Two classifiers were applied to each dataset using the WEKA machine learning software package (Witten and Frank, 2005). The first is a Support Vector Machine with a linear kernel. The second is LogitBoost with a decision-stump weak learner using 40 iterations (Freund and Schapire, 1996; Friedman et al., 2000). The data were split into two non-overlapping datasets, and two-fold cross-validation was performed on all classifiers. Performance is measured by precision, recall, and the F1 measure, which is defined as the harmonic mean of precision and recall.
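The discretization and the LogitBoost classifier described above can be sketched in plain Python. The thresholds come from the text. Dropping seconds rated between the two thresholds, clipping the working response at 3, and all function names are assumptions. This is a from-scratch binary LogitBoost (Friedman et al., 2000) with regression stumps, not WEKA's implementation, and it leaves out the SVM.

```python
import math

# Thresholds from the text: neutral if rating <= low, emotional if rating >= high.
THRESHOLDS = {"amusement": (0.5, 3.0), "sadness": (0.5, 1.5)}


def discretize(rows, emotion):
    """rows: (features, expert_rating) pairs -> X, y with 0 = neutral, 1 = amused/sad.

    Assumption: seconds rated strictly between the thresholds are dropped."""
    low, high = THRESHOLDS[emotion]
    X, y = [], []
    for features, rating in rows:
        if rating <= low or rating >= high:
            X.append(features)
            y.append(0 if rating <= low else 1)
    return X, y


def fit_stump(X, z, w):
    """Weighted least-squares regression stump -> (feature, threshold, left, right)."""
    n, d = len(X), len(X[0])
    tw = sum(w)
    twz = sum(wi * zi for wi, zi in zip(w, z))
    best = None
    for j in range(d):
        order = sorted(range(n), key=lambda i: X[i][j])
        lw = lwz = 0.0
        for k in range(n - 1):
            i, nxt = order[k], order[k + 1]
            lw += w[i]
            lwz += w[i] * z[i]
            if X[i][j] == X[nxt][j]:
                continue  # split only between distinct feature values
            rw, rwz = tw - lw, twz - lwz
            # Minimizing the weighted squared error is equivalent to maximizing this score.
            score = lwz * lwz / lw + rwz * rwz / rw
            if best is None or score > best[0]:
                best = (score, j, (X[i][j] + X[nxt][j]) / 2, lwz / lw, rwz / rw)
    if best is None:  # every feature is constant: fall back to a constant fit
        return 0, math.inf, twz / tw, twz / tw
    return best[1:]


def stump_value(stump, x):
    j, threshold, left, right = stump
    return left if x[j] <= threshold else right


def probability(f):
    """p = 1 / (1 + exp(-2F)), kept away from 0 and 1 to avoid division by zero."""
    t = max(min(2.0 * f, 50.0), -50.0)
    return min(max(1.0 / (1.0 + math.exp(-t)), 1e-12), 1.0 - 1e-12)


def logitboost(X, y, iterations=40, z_max=3.0):
    """Binary LogitBoost with regression stumps; y holds 0/1 labels."""
    F = [0.0] * len(X)
    stumps = []
    for _ in range(iterations):
        p = [probability(f) for f in F]
        w = [pi * (1.0 - pi) for pi in p]
        # Working response z = (y - p) / (p (1 - p)), clipped to [-z_max, z_max].
        z = [min(1.0 / pi, z_max) if yi == 1 else max(-1.0 / (1.0 - pi), -z_max)
             for yi, pi in zip(y, p)]
        stump = fit_stump(X, z, w)
        stumps.append(stump)
        F = [f + 0.5 * stump_value(stump, x) for f, x in zip(F, X)]
    return stumps


def predict(stumps, x):
    """Return 1 (amused/sad) if the additive score F(x) is positive, else 0 (neutral)."""
    return 1 if sum(0.5 * stump_value(s, x) for s in stumps) > 0 else 0
```

Each boosting round fits a stump to the working response z by weighted least squares and adds half of its output to F. The predicted probability of the emotional class is therefore 1 / (1 + exp(-2F)).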
Consider a multi-class classification problem with classes A_i, i = 1, ..., M, where each class A_i has a total of N_i instances in the dataset. Suppose the classifier correctly predicts C_i instances for A_i. Suppose it also predicts C'_i instances to be in A_i that in fact belong to other classes (misclassifies them). Precision, recall, and F1 for class A_i are then defined as

$$P_i = \frac{C_i}{C_i + C'_i}, \qquad R_i = \frac{C_i}{N_i}, \qquad F1_i = \frac{2 P_i R_i}{P_i + R_i}.$$

The discrete classification results for the all-subject datasets are shown in the table.

Experimental results
The linear classification results for the individual subjects are shown in the table.
The discrete classification results for the individual subjects are shown in the table.
These two tables show that performance for amusement is better than for sadness.
Linear classification results for the gender-specific datasets are shown in the table.
Discrete classification results for the gender-specific datasets are shown in the table.
These two tables indicate better results for male emotional responses than for female ones.

Conclusion
A real-time system for emotion recognition is presented. A relatively large number of subjects watched videos. Their facial and physiological responses were used to recognize whether they felt amused or sad. Trained coders produced second-by-second ratings of the intensity of the expressed amusement and sadness, which were used for training. The results demonstrated better fits in four comparisons:
- for emotion categories than for intensity;
- for amusement ratings than for sadness ratings;
- for a full model using both physiological measures and facial tracking than for either alone;
- for person-specific models than for the other models.

Discussion
• The evidence for emotion recognition from facial expressions while watching videotapes is not strong, because of limitations in the content of the videotapes.
• The accuracy of the devices used to collect the physiological features should also be considered.
• The results for emotion intensity do not show a significant improvement.

References
[1] Bradley, M., 2000. Emotion and motivation. In: Cacioppo, J., Tassinary, L., Berntson, G. (Eds.), Handbook of Psychophysiology. Cambridge University Press, New York.
[2] Ekman, P., Friesen, W.V., 1978. The Facial Action Coding System: A Technique for the Measurement of Facial Movement. Consulting Psychologists Press.
[3] Ekman, P., Davidson, R.J., Friesen, W.V., 1990. The Duchenne smile: emotional expression and brain physiology II. Journal of Personality and Social Psychology 58, 342–353.
[4] el Kaliouby, R., Robinson, P., Keates, S., 2003. Temporal context and the recognition of emotion from facial expression. In: Proceedings of HCI International, vol. 2, Crete, June 2003, pp. 631–635. ISBN 0-8058-4931-9.
[5] Kring, A.M., 2000. Gender and anger. In: Fischer, A.H. (Ed.), Gender and Emotion: Social Psychological Perspectives. Cambridge University Press, New York, pp. 211–231.
[6] Lyons, M.J., Bartneck, C., 2006. HCI and the face. In: CHI '06 Extended Abstracts on Human Factors in Computing Systems. ACM, pp. 1671–1674.
[7] Pantic, M., Patras, I., 2006. Dynamics of facial expression: recognition of facial actions and their temporal segments from face profile image sequences. IEEE Transactions on Systems, Man, and Cybernetics, Part B 36 (2), 433–449.
[8] Witten, I.H., Frank, E., 2005. Data Mining: Practical Machine Learning Tools and Techniques, second ed. Morgan Kaufmann, San Francisco.
[9] Zlochower, A., Cohn, J., Lien, J., Kanade, T., 1998. A computer vision based method of facial expression analysis in parent–infant interaction. In: International Conference on Infant Studies.