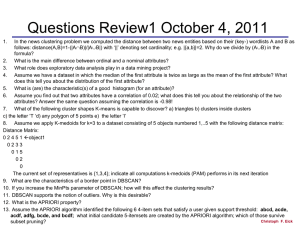



1. How are decision trees used for classification? Explain the decision tree induction algorithm for classification. [Dec-14/Jan 2015][10marks]

To illustrate how classification with a decision tree works, consider a simpler version of the vertebrate classification problem described in the previous section. Instead of classifying the vertebrates into five distinct groups of species, we assign them to two categories. The tree has three types of nodes:

- A root node that has no incoming edges and zero or more outgoing edges.
- Internal nodes, each of which has exactly one incoming edge and two or more outgoing edges.
- Leaf or terminal nodes, each of which has exactly one incoming edge and no outgoing edges.

2. How can the accuracy of classification be improved? Explain. [Dec-14/Jan 2015][5marks]

There are some tricks for increasing classification accuracy; here we focus on ensemble methods. An ensemble for classification is a composite model, made up of a combination of classifiers. The individual classifiers vote, and the ensemble returns a class label prediction based on the collection of votes. Ensembles tend to be more accurate than their component classifiers. We start off by introducing ensemble methods in general. Bagging, boosting, and random forests are popular ensemble methods. Traditional learning models assume that the data classes are well distributed. In many real-world data domains

3. Explain the importance of evaluation criteria for classification methods. [Dec-14/Jan 2015][8marks]

The input data for a classification task is a collection of records. Each record, also known as an instance or example, is characterized by a tuple (x, y), where x is the attribute set and y is a special attribute, designated as the class label. Consider a sample data set used for classifying vertebrates into one of the following categories: mammal, bird, fish, reptile, or amphibian.
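To make the (x, y) view of records concrete, and to show how a tree with the three node types listed under question 1 classifies such records, here is a minimal Python sketch. It assumes a small hand-built tree; the attribute names `body_temperature` and `gives_birth`, the two labels `mammal` and `non-mammal`, the helper `classify`, and the three sample records are hypothetical illustrations, not data from these notes.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Leaf:
    # Leaf (terminal) node: one incoming edge, no outgoing edges; holds a class label.
    label: str


@dataclass
class Node:
    # Root or internal node: tests one attribute; each outgoing edge is one attribute value.
    attribute: str
    children: Dict[str, "Tree"] = field(default_factory=dict)


Tree = Union[Leaf, Node]


def classify(tree: Tree, x: Dict[str, str]) -> str:
    """Start at the root and follow the edge matching x's value until a leaf is reached."""
    while isinstance(tree, Node):
        tree = tree.children[x[tree.attribute]]
    return tree.label


# Hypothetical tree: the root tests body temperature, an internal node tests gives_birth.
tree = Node("body_temperature", {
    "cold": Leaf("non-mammal"),
    "warm": Node("gives_birth", {"yes": Leaf("mammal"), "no": Leaf("non-mammal")}),
})

# Each record is a tuple (x, y): attribute set x and class label y (hypothetical values).
records = [
    ({"body_temperature": "warm", "gives_birth": "yes"}, "mammal"),
    ({"body_temperature": "warm", "gives_birth": "no"}, "non-mammal"),
    ({"body_temperature": "cold", "gives_birth": "no"}, "non-mammal"),
]

for x, y in records:
    print(classify(tree, x), y)
```

Following the matching edge from the root down to a leaf is how an already-built tree is used; inducing the tree from training records is a separate step not shown in this sketch.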
The attribute set includes properties of a vertebrate such as its body temperature, skin cover, method of reproduction, ability to fly, and ability to live in water. The attribute set can also contain continuous features. The class label, on the other hand, must be a discrete attribute. This is a key characteristic that distinguishes classification from regression, a predictive modelling task in which y is a continuous attribute.

4. Explain (a) continuous, (b) discrete, and (c) asymmetric attributes with examples. [June/July 2014] [10marks]

A discrete attribute has a finite or countably infinite set of values. Such attributes can be categorical or numeric. Discrete attributes are often represented by integer values, e.g., zip codes and counts.

A continuous attribute is one whose values are real numbers. Continuous attributes are typically represented as floating-point variables, e.g., temperature and height.

For asymmetric attributes, only presence (a non-zero attribute value) is regarded as important. For example, consider a data set where each object is a student and each attribute records whether or not the student took a particular course at a university. For a specific student, an attribute has a value of 1 if the student took the course and 0 otherwise. Because students take only a small fraction of all available courses, most of the values in such a data set would be 0. Therefore, it is more meaningful and more efficient to focus on the non-zero values.

5. Explain Hunt's algorithm and illustrate its working. [June/July 2015][10 marks] [June/July 2014][10marks]

Data cleaning: This refers to the preprocessing of data in order to remove or reduce noise (by applying smoothing techniques, for example) and the treatment of missing values (e.g., by replacing a missing value with the most commonly occurring value for that attribute, or with the most probable value based on statistics). Although most classification algorithms have some mechanisms for handling noisy or missing data, this step can help reduce confusion during learning.
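As a minimal sketch of the missing-value treatment described under data cleaning, the Python below replaces each missing entry of one attribute with that attribute's most commonly occurring value. It assumes records are dictionaries and that a missing value is stored as `None`; the function name `impute_with_mode` and the `skin_cover` sample values are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]


def impute_with_mode(records: List[Record], attribute: str) -> List[Record]:
    """Return copies of the records in which missing (None) values of `attribute`
    are replaced by the most commonly occurring observed value of that attribute."""
    observed = [r[attribute] for r in records if r[attribute] is not None]
    if not observed:
        # Nothing to learn the mode from; return unchanged copies.
        return [dict(r) for r in records]
    mode = Counter(observed).most_common(1)[0][0]
    return [{**r, attribute: mode} if r[attribute] is None else dict(r) for r in records]


# Hypothetical records; the second one has a missing skin_cover value.
data = [
    {"skin_cover": "hair"},
    {"skin_cover": None},
    {"skin_cover": "scales"},
    {"skin_cover": "hair"},
]
print(impute_with_mode(data, "skin_cover"))
```

The function returns new records rather than editing the input in place, so the original data stays available for comparison; replacing with "the most probable value based on statistics" would need a model and is not covered here.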
Relevance analysis: Many of the attributes in the data may be redundant. Correlation analysis can be used to identify whether any two given attributes are statistically related. For example, a strong correlation between attributes A1 and A2 would suggest that one of the two could be removed from further analysis. A database may also contain irrelevant attributes. Attribute subset selection can be used in these cases to find a reduced set of attributes such that the resulting probability distribution of the data classes is as close as possible to the original distribution obtained using all attributes. Hence, relevance analysis, in the form of correlation analysis and attribute subset selection, can be used to detect attributes that do not contribute to the classification or prediction task. Including such attributes may otherwise slow down, and possibly mislead, the learning step.

6. What is a rule-based classifier? Explain how a rule-based classifier works. [Dec-14/Jan 2015][10marks] [Dec 13/Jan 14][7 marks]

Using IF-THEN Rules for Classification

Rules are a good way of representing information or bits of knowledge. A rule-based classifier uses a set of IF-THEN rules for classification. An IF-THEN rule is an expression of the form

IF condition THEN conclusion.

An example is rule R1:

R1: IF age = youth AND student = yes THEN buys_computer = yes.

The "IF" part (or left-hand side) of a rule is known as the rule antecedent or precondition. The "THEN" part (or right-hand side) is the rule consequent. In the rule antecedent, the condition consists of one or more attribute tests (such as age = youth and student = yes) that are logically ANDed. The rule's consequent contains a class prediction (in this case, we are predicting whether a customer will buy a computer). R1 can also be written as

R1: (age = youth) ∧ (student = yes) ⇒ (buys_computer = yes).
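A minimal Python sketch of rule R1 follows. It checks whether the rule covers a tuple (every attribute test in the antecedent holds) and computes the coverage and accuracy measures that this answer goes on to define. Encoding tuples as dictionaries, the helper names `covers` and `coverage_and_accuracy`, and the four-tuple data set `D` are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

Row = Dict[str, str]

# Rule R1: IF age = youth AND student = yes THEN buys_computer = yes.
# The antecedent is a set of attribute tests that are logically ANDed.
R1_ANTECEDENT: Row = {"age": "youth", "student": "yes"}
R1_CONSEQUENT: Tuple[str, str] = ("buys_computer", "yes")


def covers(antecedent: Row, x: Row) -> bool:
    """The rule covers tuple x when every attribute test in the antecedent holds for x."""
    return all(x.get(attr) == value for attr, value in antecedent.items())


def coverage_and_accuracy(antecedent: Row, consequent: Tuple[str, str],
                          data: List[Row]) -> Tuple[float, float]:
    """coverage = n_covers / |D|; accuracy = n_correct / n_covers."""
    class_attr, predicted = consequent
    covered = [x for x in data if covers(antecedent, x)]
    n_covers = len(covered)
    n_correct = sum(1 for x in covered if x[class_attr] == predicted)
    coverage = n_covers / len(data) if data else 0.0
    accuracy = n_correct / n_covers if n_covers else 0.0
    return coverage, accuracy


# Hypothetical class-labeled data set D with four tuples.
D = [
    {"age": "youth", "student": "yes", "buys_computer": "yes"},
    {"age": "youth", "student": "yes", "buys_computer": "no"},
    {"age": "youth", "student": "no", "buys_computer": "no"},
    {"age": "senior", "student": "yes", "buys_computer": "yes"},
]
print(coverage_and_accuracy(R1_ANTECEDENT, R1_CONSEQUENT, D))  # (0.5, 0.5)
```

R1 covers the first two tuples (coverage 2/4) and classifies one of those two correctly (accuracy 1/2). Returning 0.0 when nothing is covered is an assumption made here to avoid division by zero.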
If the condition (that is, all of the attribute tests) in a rule antecedent holds true for a given tuple, we say that the rule antecedent is satisfied (or simply, that the rule is satisfied) and that the rule covers the tuple.

A rule R can be assessed by its coverage and accuracy. Given a tuple, X, from a class-labeled data set, D, let $n_{\text{covers}}$ be the number of tuples covered by R, $n_{\text{correct}}$ be the number of tuples correctly classified by R, and $|D|$ be the number of tuples in D. We can define the coverage and accuracy of R as

$$\text{coverage}(R) = \frac{n_{\text{covers}}}{|D|}, \qquad \text{accuracy}(R) = \frac{n_{\text{correct}}}{n_{\text{covers}}}.$$

That is, a rule's coverage is the percentage of tuples that are covered by the rule (i.e., whose attribute values hold true for the rule's antecedent). For a rule's accuracy, we look at the tuples that it covers and see what percentage of them the rule can correctly classify.

7. Write the algorithm for k-nearest-neighbour classification. [June/July 2015] [Dec 13/Jan 14][3 marks]

Data clustering is under vigorous development. Contributing areas of research include data mining, statistics, machine learning, spatial database technology, biology, and marketing. Owing to the huge amounts of data collected in databases, cluster analysis has recently become a highly active topic in data mining research. As a branch of statistics, cluster analysis has been extensively studied for many years, focusing mainly on distance-based cluster analysis. Cluster analysis tools based on k-means, k-medoids, and several other methods have also been built into many statistical analysis software packages or systems, such as S-Plus, SPSS, and SAS. In machine learning, clustering is an example of unsupervised learning. Unlike classification, clustering and unsupervised learning do not rely on predefined classes and class-labeled training examples. For this reason, clustering is a form of learning by observation, rather than learning by examples.
In data mining, efforts have focused on finding methods for efficient and effective cluster analysis in large databases. Active research themes include the scalability of clustering methods and the effectiveness of methods for clustering complex shapes.
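For question 7, the k-nearest-neighbour classifier can be sketched in Python as follows. Unlike the clustering methods discussed above, it is supervised: it keeps the class-labeled training tuples and labels a new tuple by majority vote among the k training tuples closest to it. This sketch assumes numeric attributes, Euclidean distance, and unweighted voting; the function name `knn_classify` and the toy training data are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def knn_classify(train: List[Tuple[Sequence[float], str]],
                 query: Sequence[float], k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training tuples
    (Euclidean distance)."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training tuples")
    # 1. Compute the distance from the query to every training tuple.
    distances = [(math.dist(x, query), label) for x, label in train]
    # 2. Keep the k closest training tuples.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # 3. Return the most common class label among those k neighbours.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical two-attribute training tuples with class labels "A" and "B".
train = [
    ((1.0, 1.0), "A"),
    ((1.5, 2.0), "A"),
    ((5.0, 5.0), "B"),
    ((6.0, 5.5), "B"),
    ((5.5, 6.0), "B"),
]
print(knn_classify(train, (1.2, 1.4), k=3))  # A
```

With k = 3, the two "A" tuples and the nearest "B" tuple are the neighbours of (1.2, 1.4), so "A" wins the vote two to one. Choosing an odd k avoids tied votes when there are only two classes; `math.dist` requires Python 3.8 or later.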