Continuous Distribution


Statistics 309

9/2/2011 5:03:00 PM

Sample Space (Ω) : a set of all possible outcomes

1) Toss a die : {1,2,3,…6}

2.) Class grade : {A, AB, B,…F}

3.) Toss 2 dice: {(1,1), (1,2), …, (6,6)} = 36 outcomes

4.) 3 cars, each in one of 4 colors (Red, Blue, Green, Black): 4^3 = 64 outcomes

An event A is a subset of Ω. [A ⊂ Ω]

Ex. – 2 dice: A = {(i,j) : i + j ≥ 0}

1. A = {H}.

2. A = {HH, HT, TH} = at least one head.

3. A = {1, 3, 5} = odd numbers, B = {5, 6}.

4. A = {(i,j) : i = 6 or j = 6} = at least one "6",

B = {(i,j) : i + j ≥ 10} = sum of the two

numbers greater than or equal to 10.

complement: A^c, Ā or A′ = the set of all outcomes in Ω that are not contained in A

intersection: A ∩ B=the event that consists of all outcomes in both A and B

union: A∪B= all outcomes in either A or B or both A and B;

symmetric difference: A∆B= all outcomes in only A or only B;

∅: null set or empty set: the event consisting of no outcomes whatsoever;

A ∩ B = ∅: mutually exclusive or disjoint events;

DeMorgan's law: (∪_i A_i)^c = ∩_i A_i^c

Example: Toss a die: A = {1,2,3,4}, B = {3,4,5,6}, C = {1,3,5}, D = {2,4,6}.

o A∩B = {3,4}, A∪B = {1,2,3,4,5,6} = Ω, A∪C = {1,2,3,4,5}, A∩C = {1,3}, C∩D = ∅,

o A^c = {5,6}, B^c = {1,2}, (A∩B)^c = {1,2,5,6}, A^c ∪ B^c = (A∩B)^c

Definition: Events A_i are said to be pairwise disjoint (or mutually exclusive) if

A_i ∩ A_j = ∅ for any i ≠ j.

Definition: We say A_1, · · · , A_n is a partition of Ω if the A_i are mutually disjoint and

∪_{i=1}^{n} A_i = Ω.

Probability: A probability P is a mapping from subsets of Ω to [0,1] that assigns a unique

number in [0,1] to each event A and satisfies:

1.) P(∅) = 0 and P(Ω) = 1

2.) P(A) ≥ 0

3.) if the A_i are pairwise disjoint, then P(∪_i A_i) = Σ_i P(A_i)

Example: Toss a coin: Ω = {H, T }. Assign P({H}) = p, P({T}) = q, which is a

probability if p + q = 1.

Interpretation: (1). Relative frequency: Toss a coin or a die.

(2) Subjective: good chance for a stock to gain; high probability of a peace

agreement; likely to be awarded a contract; the odds are good for a team to win a ball game.

Properties:

P (Ac) = 1 − P (A);

P(A ∪ B) = P(A) + P(B) − P(A ∩ B), and

P(A1 ∪ A2 ∪ A3)=P(A1)+P(A2)+P(A3)−P(A1 ∩ A2) − P(A1 ∩ A3)

− P(A2 ∩ A3)+P(A1 ∩ A2 ∩ A3)

Example: In a city 60% of all households subscribe to a national newspaper, and

80% of all households subscribe to a local newspaper, and 50% subscribe both.

If a household is selected at random, what is the probability that it subscribes to

(1) at least one of the two newspapers? (2) none of the newspapers? (3) exactly

one of the two papers?

Solution. A = subscribes to a national newspaper, B = subscribes to a local

newspaper. A ∩ B = subscribes to both newspapers. P(A) = 0.6, P(B) = 0.8, P(A ∩ B) = 0.5.

(1). A ∪ B = subscribes to the local newspaper, the national newspaper, or both.

P(A ∪ B) = P(A) + P(B) − P(A ∩ B) = 0.6 + 0.8 − 0.5 = 0.9

(2). (A ∪ B)^c = subscribes to neither newspaper.

P[(A ∪ B)^c] = 1 − P(A ∪ B) = 1 − 0.9 = 0.1

(3). A∆B = subscribes to exactly one newspaper.

P(A∆B) = P(A) − P(A ∩ B) + P(B) − P(A ∩ B) = P(A) + P(B) − 2 P(A ∩ B)

= 0.6 + 0.8 − 2 × 0.5 = 0.4
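The three answers in the newspaper example follow mechanically from the addition rule, so they are easy to check. The sketch below redoes the arithmetic with exact fractions; the variable names are illustrative and not part of the notes.

```python
from fractions import Fraction

# Given probabilities from the newspaper example, kept exact with Fraction.
p_a = Fraction(6, 10)    # P(A): subscribes to the national newspaper
p_b = Fraction(8, 10)    # P(B): subscribes to the local newspaper
p_ab = Fraction(5, 10)   # P(A ∩ B): subscribes to both

p_union = p_a + p_b - p_ab            # (1) at least one newspaper
p_none = 1 - p_union                  # (2) neither newspaper
p_exactly_one = p_a + p_b - 2 * p_ab  # (3) symmetric difference A∆B

print(float(p_union), float(p_none), float(p_exactly_one))
```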

Counting Rules

Equally likely outcomes: Suppose Ω has N outcomes and the probabilities are

equal for all N elements, that is, each element has probability 1/N.

For any event A,

P(A) = #(A)/#( Ω)

*Finding the probability is to count the elements in A and Ω

Multiplication Rule: If a job consists of k separate tasks and the jth task can be done

in n_j ways, j = 1, …, k, then the entire job can be done in n_1 × n_2 × … × n_k ways.

Permutation: (Order Matters) From n distinct objects, select k objects and line

them up. The total number of ways is

P(n,k) = n!/(n − k)!

Combination: (No Order) From n distinct objects, select k objects (as a group).

The total number of ways is

C(n,k) = (n choose k) = n!/[k!(n − k)!]
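As a quick illustration of the two counting formulas, the sketch below implements them from factorials and cross-checks them against Python's standard-library helpers `math.perm` and `math.comb` (available from Python 3.8). The function names are illustrative only.

```python
import math

def permutations_count(n: int, k: int) -> int:
    """Ordered selections of k from n distinct objects: n!/(n-k)!."""
    return math.factorial(n) // math.factorial(n - k)

def combinations_count(n: int, k: int) -> int:
    """Unordered selections of k from n distinct objects: n!/(k!(n-k)!)."""
    return permutations_count(n, k) // math.factorial(k)

# Cross-check against the standard-library helpers.
assert permutations_count(5, 2) == math.perm(5, 2)
assert combinations_count(5, 2) == math.comb(5, 2)
```

Each unordered group of k objects can be lined up in k! ways, which is why the combination count is the permutation count divided by k!.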

Example: Suppose a bridge hand is dealt from a well-shuffled deck, that is, 13

cards are randomly selected from among the 52 possible cards. Find the

probability that:

o (1) the hand consists entirely of spades and clubs with both suits

represented.

o (2) the hand consists of exactly two suits.

Solution: Let the sample space Ω = all hands of 13 cards randomly selected from

52 cards, A = the hand consists entirely of spades and clubs with both suits

represented, B = the hand consists of exactly two suits.

#(Ω) = C(52,13) ≈ 635 billion,

#(A) = C(26,13) − 2 = 10,400,598 (all 13-card hands drawn from the 26 spades and clubs, minus the all-spades hand and the all-clubs hand)

We need two steps to find #(B).

o Step 1: Out of four suits, the number of ways to select two suits = C(4,2)

o Step 2: the number of ways to have a hand with both of the selected two

suits = #(A).

Hence #(B) = C(4,2) × #(A), P(A) = #(A)/#(Ω) and P(B) = #(B)/#(Ω).
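The counts in the bridge example are large enough that a direct computation is a useful check. This is a minimal sketch using `math.comb`; the variable names are illustrative.

```python
import math

n_hands = math.comb(52, 13)      # #(Ω): all 13-card hands
n_a = math.comb(26, 13) - 2      # spades and clubs only, minus the two one-suit hands
n_b = math.comb(4, 2) * n_a      # choose the two suits, then a hand using both

p_a = n_a / n_hands
p_b = n_b / n_hands
print(f"#(Ω) = {n_hands:,}  #(A) = {n_a:,}  #(B) = {n_b:,}")
print(f"P(A) = {p_a:.3e}  P(B) = {p_b:.3e}")
```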

Example: For a certain style of new automobile, the colors blue, white, black and

green are in equal demand. Three successive orders were placed for automobiles

of this style. Find the probabilities

a. One blue, one white and one green are ordered.

b. Two blues are ordered.

c. At least one black is ordered

d. Exactly two of the orders are for the same

color.

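o Solution: Each of the 3 orders can be any of the 4 colors, so #(Ω) = 4^3 = 64.

o a.) A = one green, one white, one blue

#(A) = P(3,3) = 3! (Permutation)

P(A) = 3!/4^3

o b.) B = two cars are blue

#(B): 1. Which two orders are blue: C(3,2). 2. The 3rd car's color (not blue): C(3,1).

#(B) = C(3,2) C(3,1) = 9, P(B) = 9/4^3

o c.) C = at least one black is ordered

(1 black, 2 blacks, or 3 blacks)

C^c = no black

P(C) = 1 − P(C^c)

#(C^c) = 3^3

P(C) = 1 − 3^3/4^3

o d.) D = exactly two of the orders are for the same color

1. Pick the color shared by two cars: C(4,1)

2. Pick which two orders have that color: C(3,2)

3. Pick the color of the 3rd car: C(3,1)

#(D) = C(4,1) C(3,2) C(3,1)

P(D) = C(4,1) C(3,2) C(3,1) / 4^3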
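Because the sample space has only 4^3 = 64 equally likely outcomes, the four answers can be checked by brute-force enumeration. The sketch below is one way to do that; it reads part (b) as "exactly two blues", which is an interpretation, and the helper `prob` is illustrative.

```python
from fractions import Fraction
from itertools import product

COLORS = ("blue", "white", "black", "green")
orders = list(product(COLORS, repeat=3))   # all 4^3 equally likely outcomes

def prob(event) -> Fraction:
    """P(event) = #(event) / #(Ω) under equally likely outcomes."""
    return Fraction(sum(1 for o in orders if event(o)), len(orders))

p_a = prob(lambda o: sorted(o) == ["blue", "green", "white"])  # one of each
p_b = prob(lambda o: o.count("blue") == 2)                     # exactly two blues
p_c = prob(lambda o: "black" in o)                             # at least one black
p_d = prob(lambda o: len(set(o)) == 2)                         # exactly two alike

print(p_a, p_b, p_c, p_d)
```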

Example: A box contains 25 balls, with 10 red and 15 green. Six balls are

selected at random without replacement. Find the probability that:

(1) there are 3 red and 3 green in the 6 selected balls.

(2) there are at least 2 red in the 6 selected balls.

o Solution:

o 1.) A_k = k red balls are selected.

#(Ω) = C(25,6)

To find #(A_3), we divide into two steps. Step 1: select 3 balls from the 10

red balls. Step 2: select 3 balls from the 15 green balls.

#(A_3) = C(10,3) C(15,3)

P(A_3) = C(10,3) C(15,3) / C(25,6) = 0.3083

2.) B = at least two red balls are selected = A_2 ∪ A_3 ∪ A_4 ∪ A_5 ∪ A_6

Refer to Learn@UW notes!
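For part (2), summing five terms can be avoided by taking the complement: at least two red is everything except zero red or one red. The sketch below computes both parts under that approach; the function name `p_red` and its default arguments are illustrative, and the complement shortcut is not necessarily how the referenced notes work it.

```python
import math

def p_red(k: int, red: int = 10, green: int = 15, draws: int = 6) -> float:
    """P(A_k): exactly k red balls among `draws` drawn without replacement."""
    return math.comb(red, k) * math.comb(green, draws - k) / math.comb(red + green, draws)

p_three_red = p_red(3)
# At least two red = complement of (zero red or one red).
p_at_least_two = 1 - p_red(0) - p_red(1)

print(round(p_three_red, 4), round(p_at_least_two, 4))
```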

Example :A 5-card poker hand is said to be a full house if it consists of 3 cards of

the same denomination (triple) and 2 cards of the same denomination (pair).

That is, a full house is three of a kind plus a pair. What is the probability that one

is dealt a full house?

o Solution. Let the sample space Ω = all hands of 5 cards randomly

selected from 52 cards, A = the hand is a full house. #(Ω) = C(52,5). To find

#(A) we divide into steps:

(1) 13 × 12 choices for the denominations of the triple and the pair;

(2) C(4,3) ways to select suits for the triple;

(3) C(4,2) ways to select suits for the pair.

#(A) = 13 × 12 × C(4,2) × C(4,3)

P(A) = 13 × 12 × C(4,2) × C(4,3) / C(52,5) = 0.0014
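The full-house count above is a direct product of the step counts, which the following short sketch reproduces. Variable names are illustrative.

```python
import math

n_hands = math.comb(52, 5)                                   # #(Ω)
n_full_house = 13 * 12 * math.comb(4, 3) * math.comb(4, 2)   # denominations, then suits
p_full_house = n_full_house / n_hands

print(n_full_house, n_hands, round(p_full_house, 4))
```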

Example :A poker hand consists of 5 cards. If the cards have distinct

consecutive values and not all of the same suit, we say that the hand

is a straight, while 5 consecutive cards of the same suits is called a

straight flush. For instance, a hand consisting of the five of spades,

six of spades, seven of spades, and eight of spades, and nine of hearts

is a straight. What is the probability that one is dealt a straight?

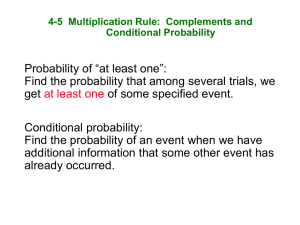

Conditional Probability: the probability that event A occurs when the sample space is

limited to event B.

The conditional probability of A given B is defined as:

o P(A|B) = P(A ∩ B) / P(B) , P(B) > 0

o P(B|A) = P(A ∩ B) / P(A) , P(A) > 0

We say A and B are independent if P(A ∩ B) = P(A) P(B)

Look for “condition” or “given” in the problem!

Properties:

o P (A ∩ B) = P (A) P (B|A) = P (B) P (A|B)

o A and B are independent ⇐⇒ P(A|B) = P(A) ⇐⇒ Ac and B are

independent ⇐⇒ A and Bc are independent ⇐⇒ Ac and Bc are

independent

Example: A box contains 20 red and 30 green balls. Select 2 balls

at random from the box.

(a) If balls are selected without replacement, what is the probability that the first

ball is red and the second ball is green?

o A1 = first ball is red, B2 = second ball is green

o P(A1) = 20/50

o P(A1 ∩ B2) = P(B2|A1) P(A1)

o P(B2|A1) = 30/49

o P(A1 ∩ B2) = (2/5)*(30/49)

(b) If balls are selected without replacement, what is the probability that one is

red and one is green?

o B1 = first ball is green , A2 = second ball is red

o P[(A1 ∩ B2) ∪ (B1 ∩ A2)] = P(A1 ∩ B2) + P(B1 ∩ A2)

o (20/50)*(30/49) + (30/50)*(20/49)

(c) If balls are selected with replacement, what is the probability that the first

ball is red and the second ball is green?

o A = first ball is red, B = second ball is green

o P(A) = 20/50

o P(A ∩ B) = P(B|A) P(A)

o P(B|A) = 30/50 = P(B)

o P(A ∩ B) = (20/50)*(30/50)

(d) If balls are selected with replacement, what is the probability that one is red

and one is green?

(e) If balls are selected without replacement, what is the probability that the

second ball is green?

(f) If balls are selected with replacement, what is the probability that the second

ball is green?
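All six parts of this example use the same two ingredients: the multiplication rule P(A ∩ B) = P(A) P(B|A), and a sum over the color of the first ball. The sketch below is one way to compute them with exact fractions. Parts (d) to (f) are not worked in the notes, so the values here are simply what these formulas give; the function names are illustrative.

```python
from fractions import Fraction

RED, GREEN = 20, 30
TOTAL = RED + GREEN

def p_sequence(first: int, second: int, replace: bool, same_color: bool = False) -> Fraction:
    """P(first ball from a group of size `first`, then second from a group of size `second`)."""
    remaining = TOTAL if replace else TOTAL - 1
    # Without replacement, drawing the same color twice leaves one fewer of that color.
    second_left = second if (replace or not same_color) else second - 1
    return Fraction(first, TOTAL) * Fraction(second_left, remaining)

def p_red_then_green(replace: bool) -> Fraction:
    return p_sequence(RED, GREEN, replace)

def p_one_of_each(replace: bool) -> Fraction:
    return p_sequence(RED, GREEN, replace) + p_sequence(GREEN, RED, replace)

def p_second_green(replace: bool) -> Fraction:
    # Total probability over the color of the first ball.
    return p_sequence(RED, GREEN, replace) + p_sequence(GREEN, GREEN, replace, same_color=True)

for label, replace in (("without", False), ("with", True)):
    print(label, p_red_then_green(replace), p_one_of_each(replace), p_second_green(replace))
```

Note that the probability that the second ball is green comes out the same with or without replacement, since no information about the first ball is given.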

Example: A survey of consumers in a particular community showed that 10%

were dissatisfied with plumbing jobs done in their town. Half the complaints

dealt with plumber A, who does 40% of the plumbing jobs in the town.

(a) Find the probability that a consumer will obtain an unsatisfactory plumbing

job, given that the plumber was A.

o A = plumbing jobs done by A, B = unsatisfactory plumbing jobs

o P(A) = 40%, P(B) = 10%, P(A|B) = 50%

o P(A ∩ B) = P(B) P(A|B) = 0.1 × 0.5 = 0.05

o P(B|A) = P(A ∩ B) / P(A) = 0.05 / 0.4 = 0.125

(b) Find the probability that a consumer will obtain a satisfactory plumbing job,

given that the plumber was A.

o P(B^c|A) = 1 − P(B|A) = 1 − 0.125 = 0.875

Example: A box contains three cards. One card is red on both sides, one card is

green on both sides, and one card is red on one side and green on the other. One

card is selected from that box at random, and the color on one side is observed. If

this side is green, what is the probability that the other side of the card is also

green ?

o Front : [R] [G] [R]

o Back: [R] [G] [G]

o Case 1: Back of G/G card

o Case 2: Front of G/G card

o Case 3: Front of R/G card

o Probability that the other side of the card is also green = 2/3
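The key to the three-card example is that the equally likely outcomes are the six card sides, not the three cards. A small enumeration makes this concrete; the sketch below is illustrative and the variable names are not from the notes.

```python
from fractions import Fraction

# Each card is a pair of side colors; each of the 6 (card, side-up) cases is equally likely.
CARDS = [("R", "R"), ("G", "G"), ("R", "G")]
sides = [(card[i], card[1 - i]) for card in CARDS for i in (0, 1)]  # (seen, hidden)

# Condition on the observed side being green, then look at the hidden side.
hidden_given_green = [hidden for seen, hidden in sides if seen == "G"]
p_other_green = Fraction(hidden_given_green.count("G"), len(hidden_given_green))

print(p_other_green)
```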

Total Probability

Law of Total Probability: suppose H_1, · · · , H_n are a partition of Ω. Then for any

event B,

o P(B) = P(B ∩ H_1) + P(B ∩ H_2) + · · · + P(B ∩ H_n)

= P(H_1)P(B|H_1) + P(H_2)P(B|H_2) + · · · + P(H_n)P(B|H_n)

Bayes' Theorem: suppose A_1, …, A_n are a partition of Ω. Then

P(A_j|B) = P(A_j ∩ B)/P(B) = P(A_j)P(B|A_j) / [P(A_1)P(B|A_1) + · · · + P(A_n)P(B|A_n)]
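To see the two formulas work together, the sketch below applies them to the plumber example, using the partition "job done by plumber A" and "job done by someone else". The conditional probability for the second group, 0.05/0.6, is derived here from the example's numbers rather than stated in the notes, and the function names are illustrative.

```python
def total_probability(priors, likelihoods):
    """P(B) = sum_i P(H_i) P(B | H_i) for a partition H_1, ..., H_n."""
    return sum(p * l for p, l in zip(priors, likelihoods))

def bayes_posterior(priors, likelihoods):
    """Return [P(H_i | B)] for every H_i in the partition."""
    p_b = total_probability(priors, likelihoods)
    return [p * l / p_b for p, l in zip(priors, likelihoods)]

# Partition: job done by plumber A / job done by someone else.
priors = [0.4, 0.6]
# P(unsatisfactory | A) = 0.125; P(unsatisfactory | not A) = 0.05 / 0.6 (derived).
likelihoods = [0.125, 0.05 / 0.6]

p_unsatisfactory = total_probability(priors, likelihoods)
posterior = bayes_posterior(priors, likelihoods)
print(round(p_unsatisfactory, 4), [round(x, 4) for x in posterior])
```

The result recovers the inputs of the example: 10% of jobs are unsatisfactory overall, and half of the complaints involve plumber A.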

Independence

Events A and B are independent if

P(A ∩ B) = P(A) × P(B) which is equivalent

to

P(A|B) = P(A), P(B|A) = P(B)

Events A1, · · · , An are mutually independent if for every k and every subset of

indices i1, · · · , ik, P(Ai1 ∩Ai2 ∩···∩Aik)=P(Ai1)P(Ai2)···P(Aik)

Example: Mutual independence of A1, A2, A3 is different from (and stronger than) pairwise

independence, which only requires that each pair of A1, A2, A3 be independent.
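A standard counterexample shows the gap between the two notions. The sketch below uses two fair coin tosses with three events chosen for illustration (they are not from the notes): every pair is independent, yet the product rule fails for all three together.

```python
from fractions import Fraction
from itertools import product

outcomes = list(product("HT", repeat=2))   # two fair tosses, 4 equally likely outcomes

def prob(event) -> Fraction:
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))

a1 = lambda w: w[0] == "H"     # first toss is heads
a2 = lambda w: w[1] == "H"     # second toss is heads
a3 = lambda w: w[0] == w[1]    # both tosses agree

pairs = [(a1, a2), (a1, a3), (a2, a3)]
pairwise = all(prob(lambda w: e(w) and f(w)) == prob(e) * prob(f) for e, f in pairs)

p_all_three = prob(lambda w: a1(w) and a2(w) and a3(w))
mutual = pairwise and p_all_three == prob(a1) * prob(a2) * prob(a3)

print(pairwise, mutual)
```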

Binomial Probability:

There is a large number of items. Suppose that each item is defective with

probability p and non-defective with probability q = 1 − p, and that items are

defective or not independently of each other. Select n items at random

with replacement and inspect them. Let X = the number of defective items in the

n selected items. Find the probability P(X = k) for k = 0, · · · , n.

P(X = k) = C(n,k) p^k q^(n−k)

Geometric Probability

There is a large number of items. Suppose that each item is defective with

probability p and non-defective with probability q = 1 − p, and that items are

defective or not independently of each other. Select item by item at

random (with replacement) until the first defective item is found. Let Y = the

number of items selected. Find the probability P(Y = k) for k = 1, 2, · · · .

P(Y = k) = p q^(k−1)

Negative Binomial Probability:

There is a large number of items. Suppose that each item is defective with

probability p and non-defective with probability q = 1 − p, and that items are

defective or not independently of each other. Select item by item at

random (with replacement) until the r-th defective item is found. Let W = the number

of items selected. Find the probability P(W = k) for k = r, r + 1, · · · .

P(W = k) = C(k−1, r−1) p^r q^(k−r)

r = the required number of "successes" (here, defective items found)

Example: Certain devices can be sent back to the manufacturer for repair while under

warranty. Of these, 60% can be repaired and 40% must be replaced with new ones.

(a) What is the probability that exactly one device must be replaced among the ten

devices that were sent back?

(b) What is the probability that the first device that must be replaced was the

tenth sent-back device?

(c) What is the probability that exactly three devices must be replaced among the twenty

devices that were sent back?

(d) What is the probability that the third device that must be replaced was the

twentieth sent-back device?

Solution: Take "success" = the device must be replaced, so p = 0.4 and q = 0.6.

a.) Binomial Probability

n = 10, k = 1, p = 0.4, q = 0.6

C(10,1)(0.4)(0.6)^9

b.) Geometric Probability

k = 10

(0.4)(0.6)^9

c.) Binomial Probability

n = 20, k = 3

C(20,3)(0.4)^3(0.6)^17

d.) Negative Binomial Probability

r = 3, k = 20

C(20−1, 3−1)(0.4)^3(0.6)^17 = C(19,2)(0.4)^3(0.6)^17
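The four answers differ only in the counting factor in front of p^r q^(k−r), which the sketch below makes explicit by evaluating each expression numerically. The function names are illustrative.

```python
import math

def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(exactly k successes in n independent trials)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def geometric_pmf(k: int, p: float) -> float:
    """P(first success occurs on trial k)."""
    return p * (1 - p) ** (k - 1)

def negative_binomial_pmf(k: int, r: int, p: float) -> float:
    """P(r-th success occurs on trial k)."""
    return math.comb(k - 1, r - 1) * p**r * (1 - p) ** (k - r)

P_REPLACE = 0.4
answers = {
    "a": binomial_pmf(1, 10, P_REPLACE),
    "b": geometric_pmf(10, P_REPLACE),
    "c": binomial_pmf(3, 20, P_REPLACE),
    "d": negative_binomial_pmf(20, 3, P_REPLACE),
}
for part, value in answers.items():
    print(part, f"{value:.6f}")
```

Comparing (a) with (b), and (c) with (d), shows the effect of fixing the position of the last success: the binomial counts all C(n,k) arrangements, while the geometric and negative binomial count only those ending in a success.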

Discrete Random Variable

A random variable X is a mapping from the sample space Ω to the real line R. If X takes only finitely or countably many values, X is called a discrete random variable.

Probability Distribution

The probability mass function (pmf or pf) of X is given by:

f(x) = P(X = x)

o Suppose X takes values x_1, x_2, …; then f(x_j) = P(X = x_j) = p_j and f(x) = 0 for

every other x.

o Properties of pmf:

p_i ≥ 0

Σ_i p_i = 1

Cumulative Distribution

The (cumulative) distribution function (df or cdf) of X:

$F(x) = P(X \le x) = \sum_{x_j \le x} f(x_j)$

The graph of cdf F (x) is a step function.

Expectation

X ∼ f(x), the expectation (mean or average) of X is

$\mu_X = E(X) = \sum_x x\, f(x)$

Suppose H(x) is a function. Then H(X) is a random variable, and

$E[H(X)] = \sum_x H(x)\, f(x)$

Variance

μ = E(X).

$\sigma_X^2 = \mathrm{Var}(X) = E[(X-\mu)^2] = \sum_x (x-\mu)^2 f(x)$

Standard deviation:

$\sigma_X = \sqrt{\mathrm{Var}(X)}$

$\mathrm{Var}(X) = E(X^2) - [E(X)]^2$
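The definitions of the cdf, expectation and variance translate directly into sums over a pmf. The sketch below stores a pmf as a dictionary and applies it to a fair die, an example chosen here for illustration; the function names are not from the notes.

```python
from fractions import Fraction

def expectation(pmf, h=lambda x: x):
    """E[H(X)] = sum over x of H(x) f(x); H defaults to the identity."""
    return sum(h(x) * p for x, p in pmf.items())

def variance(pmf):
    """Var(X) = E[(X - mu)^2]."""
    mu = expectation(pmf)
    return expectation(pmf, lambda x: (x - mu) ** 2)

def cdf(pmf, x):
    """F(x) = P(X <= x): sum of f(x_j) over all x_j <= x."""
    return sum(p for xj, p in pmf.items() if xj <= x)

die = {face: Fraction(1, 6) for face in range(1, 7)}   # fair die
mean = expectation(die)
var = variance(die)
shortcut = expectation(die, lambda x: x * x) - mean**2  # E(X^2) - [E(X)]^2

print(mean, var, shortcut, cdf(die, 3))
```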

Discrete Distribution:

Binomial distribution:

(i). n independent trials;

(ii). each trial has two possible outcomes: success and failure, with probability p

and 1 − p, respectively;

(iii). X = the total number of ‘success’ in the n trials.

$f(x) = \binom{n}{x} p^x (1-p)^{n-x}, \quad x = 0, 1, 2, \ldots, n$

E(X) = np, V(X) = npq, where q = 1 − p

Geometric distribution:

(i). independent trials;

(ii). each trial has two possible outcomes: success and failure, with probability p

and 1 − p, respectively;

(iii). X = the number of trials needed to produce the first success.

$f(x) = p\,(1-p)^{x-1}, \quad x = 1, 2, \ldots$

E(X) = 1/p, V(X) = q/p^2

Negative Binomial distribution:

(i). independent trials;

(ii). each trial has two possible outcomes: success and failure, with probability p

and 1 − p, respectively;

(iii). X = the number of trials needed to produce the rth success.

$f(x) = \binom{x-1}{r-1} p^r (1-p)^{x-r}, \quad x = r, r+1, r+2, \ldots$

E(X) = r/p, V(X) = rq/p^2

Hypergeometric distribution:

Of N items, k are defective and N − k are non-defective. Randomly select n items

from the N items without replacement. Let X = the number of selected defective

items. X takes values between max{0, n − (N − k)} and min{n, k} and has

probability function:

$f(x) = \binom{k}{x}\binom{N-k}{n-x} \Big/ \binom{N}{n}$

Poisson Distribution:

Has pmf:

$f(x) = P(X = x) = \frac{\lambda^x e^{-\lambda}}{x!}, \quad x = 0, 1, 2, \ldots$

E(X) = λ, V(X) = λ
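The mean and variance formulas quoted for these distributions can be checked numerically by summing over the support. The sketch below does this for the binomial, geometric and Poisson cases; the parameter values are hypothetical, and the infinite supports are truncated where the remaining tail is negligible.

```python
import math

def binomial_pmf(x, n, p):
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

def geometric_pmf(x, p):
    return p * (1 - p) ** (x - 1)

def poisson_pmf(x, lam):
    return lam**x * math.exp(-lam) / math.factorial(x)

def mean_var(pmf, support):
    """Mean and variance of a pmf summed over the given (possibly truncated) support."""
    support = list(support)
    mean = sum(x * pmf(x) for x in support)
    var = sum((x - mean) ** 2 * pmf(x) for x in support)
    return mean, var

n, p, lam = 10, 0.3, 2.5   # hypothetical parameters
bin_mean, bin_var = mean_var(lambda x: binomial_pmf(x, n, p), range(n + 1))
geo_mean, geo_var = mean_var(lambda x: geometric_pmf(x, p), range(1, 400))
poi_mean, poi_var = mean_var(lambda x: poisson_pmf(x, lam), range(0, 100))

print(bin_mean, bin_var, geo_mean, geo_var, poi_mean, poi_var)
```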


Continuous Random Variable

A continuous random variable X takes values in a subinterval of the real

line ℝ, or in all of ℝ.

Definition: The probability density function (pdf) of X

is a function f(x) satisfying:

o f(x) ≥ 0;

o $\int_{-\infty}^{\infty} f(x)\,dx = 1$;

o $P(X \in D) = \int_D f(x)\,dx$.

P(X = a) = 0, P(a < X ≤ b) = F(b) − F(a)

Definition: The (cumulative) distribution function (df or cdf) of X:

$F(x) = P(X \le x) = \int_{-\infty}^{x} f(u)\,du, \qquad f(x) = \frac{dF(x)}{dx}$

Theorem 1

o F(x) is non-decreasing: F(x1) ≤ F(x2) for x1 ≤ x2;

o F(x) is right continuous: F(x + h) ↓ F(x) as h ↓ 0;

o lim x→−∞ F(x) = 0 and lim x→∞ F(x) = 1.

Expectation:

o Definition: X ∼ f(x), the expectation (mean or average) of X is

defined by:

$\mu_X = E(X) = \int_{-\infty}^{\infty} x\, f(x)\,dx$

Variance: Let μ = E(X).

$\sigma_X^2 = \mathrm{Var}(X) = E[(X-\mu)^2] = \int_{-\infty}^{\infty} (x-\mu)^2 f(x)\,dx$

Standard deviation:

$\sigma_X = \sqrt{\mathrm{Var}(X)}$

Var(X) = E(X^2) − [E(X)]^2

Var(aX + b) = a^2 Var(X)

Percentile: Given a continuous random variable X with pdf f(x), its

(100p)-th percentile η(p) satisfies

$p = \int_{-\infty}^{\eta(p)} f(x)\,dx$

Common Continuous Distributions

Uniform Distribution:

o X follows a uniform distribution on [a,b] with p.d.f.:

f(x) = 1/(b − a), a ≤ x ≤ b

E(X) = (a + b)/2, Var(X) = (b − a)^2/12

$F(x) = P(X \le x) = \int_{-\infty}^{x} f(u)\,du = \int_{a}^{x} \frac{1}{b-a}\,du = \frac{x-a}{b-a}, \quad a \le x \le b$

F(x) = 0, x < a

= (x − a)/(b − a), a ≤ x ≤ b

= 1, x > b

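Exponential Distribution: X ∼ Exp(β)

o λ = 1/β

o $f(x) = \frac{1}{\beta}\exp\left(-\frac{x}{\beta}\right), \quad x > 0$

o $F(x) = \int_0^x \frac{1}{\beta}\exp\left(-\frac{u}{\beta}\right)du = \left[-\exp\left(-\frac{u}{\beta}\right)\right]_0^x = 1 - \exp\left(-\frac{x}{\beta}\right), \quad x > 0$

o F(x) = 1 − exp(−x/β), x > 0

= 0, x ≤ 0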
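Since the exponential cdf has a closed form, the percentile equation p = F(η(p)) can be solved directly, giving η(p) = −β ln(1 − p). The sketch below computes a percentile this way and confirms it by numerically integrating the pdf. The value of β and the helper `integrate` (a simple midpoint rule) are assumptions made for illustration.

```python
import math

def exp_pdf(x: float, beta: float) -> float:
    return math.exp(-x / beta) / beta if x > 0 else 0.0

def exp_cdf(x: float, beta: float) -> float:
    return 1.0 - math.exp(-x / beta) if x > 0 else 0.0

def exp_percentile(p: float, beta: float) -> float:
    """Solve p = F(eta) for eta, which gives eta = -beta * ln(1 - p)."""
    return -beta * math.log(1.0 - p)

def integrate(f, lo: float, hi: float, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    width = (hi - lo) / steps
    return width * sum(f(lo + (i + 0.5) * width) for i in range(steps))

beta = 2.0                                  # hypothetical scale parameter
median = exp_percentile(0.5, beta)          # 50th percentile
area = integrate(lambda x: exp_pdf(x, beta), 0.0, median)

print(round(median, 4), round(area, 4), round(exp_cdf(median, beta), 4))
```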

Location Exponential Distribution:

o $f(x) = \frac{1}{\beta}\exp\left(-\frac{x-\mu}{\beta}\right), \quad x > \mu$

Double Exponential Distribution:

o $f(x) = \frac{1}{2\beta}\exp\left(-\frac{|x-\mu|}{\beta}\right), \quad x \in (-\infty, \infty)$

Normal (or Gaussian) Distribution:

o $f(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right), \quad x \in (-\infty, \infty)$

o Standard normal: Z ∼ N(0, 1), a normal distribution

with parameters (μ, σ) = (0, 1). Denote by Φ and φ its df and

pdf:

$\varphi(z) = \frac{1}{\sqrt{2\pi}}\exp\left(-\frac{z^2}{2}\right)$

$\Phi(z) = \int_{-\infty}^{z} \varphi(u)\,du$

Φ(z) are tabulated. The pdf of Z is symmetric, thus

P(Z < −a) = P(Z > a) or Φ(−a) = 1 − Φ(a).

Suppose X ∼ N(μ, σ^2). Then

$Z = \frac{X-\mu}{\sigma} \sim N(0,1), \qquad X = \sigma Z + \mu$

Find Probability:

$a \le X \le b \iff \frac{a-\mu}{\sigma} \le Z \le \frac{b-\mu}{\sigma}$

Find Percentile:

Percentile for X = σ × (percentile for Z) + μ

Normal Approximation:

o Suppose Y ∼ Bin(n,p). For np ≥ 10 and nq ≥ 10, the binomial

probability for Y can be approximated by a normal distribution

with mean np and variance npq. That is, for integers a and b,

$P(a \le Y \le b) \approx P\left(\frac{a - 0.5 - np}{\sqrt{npq}} \le Z \le \frac{b + 0.5 - np}{\sqrt{npq}}\right)$
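To get a feel for how good the continuity-corrected approximation is, the sketch below compares it with the exact binomial sum. Φ is computed from the error function via Φ(z) = ½[1 + erf(z/√2)] instead of a table; the values of n, p, a and b are hypothetical.

```python
import math

def std_normal_cdf(z: float) -> float:
    """Φ(z) expressed through the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def binomial_exact(a: int, b: int, n: int, p: float) -> float:
    """Exact P(a <= Y <= b) for Y ~ Bin(n, p)."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(a, b + 1))

def binomial_normal_approx(a: int, b: int, n: int, p: float) -> float:
    """Normal approximation with the 0.5 continuity correction."""
    mean, sd = n * p, math.sqrt(n * p * (1 - p))
    return std_normal_cdf((b + 0.5 - mean) / sd) - std_normal_cdf((a - 0.5 - mean) / sd)

n, p = 100, 0.4   # hypothetical; np = 40 and nq = 60 both exceed 10
exact = binomial_exact(35, 45, n, p)
approx = binomial_normal_approx(35, 45, n, p)
print(round(exact, 4), round(approx, 4))
```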

Cauchy Distribution

o $f(x) = \frac{1}{\pi}\left(\frac{1}{1+x^2}\right), \quad x \in (-\infty, \infty)$

o $F(x) = \frac{1}{\pi}\arctan(x) + \frac{1}{2}$

Gamma Distribution

o X ∼ Gamma(α, β)

o $f(x) = \frac{1}{\beta^{\alpha}\,\Gamma(\alpha)}\, x^{\alpha-1} \exp\left(-\frac{x}{\beta}\right), \quad x > 0$

o where $\Gamma(\alpha) = \int_0^{\infty} u^{\alpha-1} e^{-u}\,du$

o E(X) = αβ

o Var(X) = αβ^2

Log-normal Distribution

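o log X ∼ N(μ, σ^2)

o $f(x) = \frac{1}{x\,\sigma\sqrt{2\pi}}\exp\left(-\frac{(\log x-\mu)^2}{2\sigma^2}\right), \quad x > 0$

o $P(X \le x) = P(\log X \le \log x) = \Phi\left(\frac{\log x - \mu}{\sigma}\right)$

o $E(X) = \exp\left(\mu + \frac{\sigma^2}{2}\right)$

o $\mathrm{Var}(X) = \exp(2\mu + \sigma^2)\,[e^{\sigma^2} - 1]$

Weibull Distribution

o $\left(\frac{X}{\beta}\right)^{\alpha} \sim \mathrm{Exp}(1)$

o $f(x) = \alpha\,\beta^{-\alpha}\,x^{\alpha-1}\exp\left(-\left[\frac{x}{\beta}\right]^{\alpha}\right), \quad x > 0$

o $E(X) = \beta\,\Gamma\left(1 + \frac{1}{\alpha}\right)$

o $\mathrm{Var}(X) = \beta^2\left[\Gamma\left(1 + \frac{2}{\alpha}\right) - \left[\Gamma\left(1 + \frac{1}{\alpha}\right)\right]^2\right]$

o $P(X \le c) = P\left(\left(\frac{X}{\beta}\right)^{\alpha} \le \left(\frac{c}{\beta}\right)^{\alpha}\right) = 1 - \exp\left(-\left(\frac{c}{\beta}\right)^{\alpha}\right), \quad c > 0$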
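The Weibull mean and cdf formulas can be sanity-checked against direct numerical integration of the pdf. The sketch below does so using `math.gamma` for Γ; the parameter values and the midpoint-rule helper `integrate` are assumptions chosen for illustration.

```python
import math

def weibull_pdf(x: float, alpha: float, beta: float) -> float:
    if x <= 0:
        return 0.0
    return alpha * beta ** (-alpha) * x ** (alpha - 1) * math.exp(-((x / beta) ** alpha))

def weibull_cdf(c: float, alpha: float, beta: float) -> float:
    return 1.0 - math.exp(-((c / beta) ** alpha)) if c > 0 else 0.0

def weibull_mean(alpha: float, beta: float) -> float:
    return beta * math.gamma(1 + 1 / alpha)

def weibull_var(alpha: float, beta: float) -> float:
    return beta**2 * (math.gamma(1 + 2 / alpha) - math.gamma(1 + 1 / alpha) ** 2)

def integrate(f, lo: float, hi: float, steps: int = 200_000) -> float:
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    width = (hi - lo) / steps
    return width * sum(f(lo + (i + 0.5) * width) for i in range(steps))

alpha, beta = 2.0, 3.0   # hypothetical shape and scale
# The upper limit 40 is far enough out that the remaining tail is negligible here.
numeric_mean = integrate(lambda x: x * weibull_pdf(x, alpha, beta), 0.0, 40.0)
numeric_cdf = integrate(lambda x: weibull_pdf(x, alpha, beta), 0.0, 2.0)

print(round(numeric_mean, 5), round(weibull_mean(alpha, beta), 5))
print(round(numeric_cdf, 5), round(weibull_cdf(2.0, alpha, beta), 5))
```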

Beta Distribution

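o X ∼ Beta(α, β)

o $f(x) = \frac{1}{B(\alpha,\beta)}\, x^{\alpha-1}(1-x)^{\beta-1}, \quad 0 \le x \le 1$

o where $B(\alpha,\beta) = \int_0^1 u^{\alpha-1}(1-u)^{\beta-1}\,du = \frac{\Gamma(\alpha)\,\Gamma(\beta)}{\Gamma(\alpha+\beta)}$

o $E(X) = \frac{\alpha}{\alpha+\beta}$

o $\mathrm{Var}(X) = \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}$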

Discrete Distributions

Let X1 and X2 be two discrete random variables. The joint pf of

(X1, X2):

o f(x1,x2) = P(X1 = x1,X2 = x2)

Theorem 1 (a). f(x1, x2) ≥ 0;

o (b). $\sum_{x_1}\sum_{x_2} f(x_1, x_2) = 1$

Marginal distribution: the marginal pf of X1:

o $f_1(x_1) = \sum_{x_2} f(x_1, x_2)$

o the marginal pf of X2:

$f_2(x_2) = \sum_{x_1} f(x_1, x_2)$

o We say X1 and X2 are independent if:

f(x1, x2) = f1(x1) f2(x2) for all x1, x2

Conditional distribution: From the definition of conditional

probability we have:

o $P(X_1 = x_1 \mid X_2 = x_2) = \frac{P(X_1 = x_1, X_2 = x_2)}{P(X_2 = x_2)}$

o The conditional pf of X1 given X2 = x2:

$f_{1|2}(x_1 \mid x_2) = \frac{f(x_1, x_2)}{f_2(x_2)}$

o Similarly, the conditional pf of X2 given X1 = x1:

$f_{2|1}(x_2 \mid x_1) = \frac{f(x_1, x_2)}{f_1(x_1)}$

o The conditional probability of X1 ∈ D1 given X2 = x2:

$P(X_1 \in D_1 \mid X_2 = x_2) = \sum_{x_1 \in D_1} f_{1|2}(x_1 \mid x_2)$

o Similarly, the conditional probability of X2 ∈ D2 given X1 = x1:

$P(X_2 \in D_2 \mid X_1 = x_1) = \sum_{x_2 \in D_2} f_{2|1}(x_2 \mid x_1)$

o The conditional expectation of H1(X1) given X2 = x2:

$E[H_1(X_1) \mid X_2 = x_2] = \sum_{x_1} H_1(x_1)\, f_{1|2}(x_1 \mid x_2)$

x1

Theorem 2: Law of total probability

o $P(E) = \sum_{x_2} P(E \mid X_2 = x_2)\, f_2(x_2)$

Theorem 3:

o $E[H(X_1, X_2)] = \sum_{x_2} E[H(X_1, x_2) \mid X_2 = x_2]\, f_2(x_2) = E_2\{E_{1|2}[H(X_1, X_2) \mid X_2]\}$

Covariance:

o Cov(X1, X2) = E(X1 X2) − E(X1) E(X2)

Variance:

o Var(X1) = E(X1^2) − [E(X1)]^2,

o Var(X2) = E(X2^2) − [E(X2)]^2

Correlation:

o ρ = Cov(X1, X2) / [√Var(X1) √Var(X2)]
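The marginal, conditional, covariance and correlation formulas are all sums over a joint pf table, as the sketch below illustrates. The joint table is hypothetical, chosen only so that the arithmetic is easy to follow, and the function names are not from the notes.

```python
import math
from fractions import Fraction

# Hypothetical joint pf f(x1, x2) on {0,1} x {0,1,2}; the values are illustrative only.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}

def marginal(joint, axis):
    """Marginal pf of X1 (axis=0) or X2 (axis=1): sum the joint pf over the other variable."""
    out = {}
    for xs, p in joint.items():
        out[xs[axis]] = out.get(xs[axis], 0) + p
    return out

def conditional_x1_given_x2(joint, x2):
    """f_{1|2}(x1 | x2) = f(x1, x2) / f_2(x2)."""
    f2_at = marginal(joint, 1)[x2]
    return {a: p / f2_at for (a, b), p in joint.items() if b == x2}

def expect(joint, h):
    """E[H(X1, X2)] = sum of H(x1, x2) f(x1, x2)."""
    return sum(h(a, b) * p for (a, b), p in joint.items())

f1, f2 = marginal(joint, 0), marginal(joint, 1)
e1, e2 = expect(joint, lambda a, b: a), expect(joint, lambda a, b: b)
cov = expect(joint, lambda a, b: a * b) - e1 * e2
var1 = expect(joint, lambda a, b: a * a) - e1**2
var2 = expect(joint, lambda a, b: b * b) - e2**2
rho = float(cov) / math.sqrt(float(var1 * var2))
independent = all(joint[(a, b)] == f1[a] * f2[b] for (a, b) in joint)

# Theorem 3 with H(X1, X2) = X1: averaging E[X1 | X2 = x2] over f2 recovers E[X1].
tower = sum(
    sum(a * p for a, p in conditional_x1_given_x2(joint, b).items()) * f2[b] for b in f2
)
print(f1, f2, cov, round(rho, 4), independent, tower)
```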

Multinomial Distribution:

Model: (i). n independent trials;

(ii). each trial has k possible outcomes: the i-th outcome occurs

with probability p_i, i = 1, · · · , k, where $\sum_{i=1}^{k} p_i = 1$;

(iii). X_i = the total number of times the i-th outcome appeared in the n

trials.

(X1, …, Xk) takes values (x1, …, xk) with x_i ≥ 0 and $\sum_{i=1}^{k} x_i = n$, and

(X1, …, Xk) has joint pf:

o $f(x_1, \ldots, x_k) = \binom{n}{x_1, \ldots, x_k}\, p_1^{x_1} \cdots p_k^{x_k}$

o where $\binom{n}{x_1, \ldots, x_k} = \frac{n!}{x_1!\, x_2! \cdots x_k!}$

Denote by (X1,··· ,Xk) ∼ Multk(n,p1,··· ,pk).

Suppose X ∼ Bin(n, p). Then (X, n − X) ∼ Mult2(n, p, q).

Theorem 4 If (X1,··· ,Xk) ∼ Multk(n, p1,··· , pk), then

Xi ∼ Bin(n, pi).

Theorem 5 If (X1,··· ,Xk) ∼ Multk(n, p1,··· , pk), then

(X1,··· , Xr, n−X1−···−Xr) ∼ Mult r+1(n, p1,··· , pr, 1−p1−···−pr).

Theorem 6 If (X1,··· ,Xk) ∼ Multk(n, p1,··· , pk), then the conditional

distribution of (X1, · · · , Xr) given Xr+1 + · · · + Xk = t is

Multr(n − t, p*1, ··· , p*r), where:

o $p_i^* = \frac{p_i}{p_1 + \cdots + p_r}, \quad i = 1, \ldots, r$

Theorem 7 Suppose (X1,··· ,Xk) ∼ Multk(n, p1,··· , pk). Then

o E(Xi) = n pi,

o Var(Xi) = n pi (1 − pi),

o Cov(Xi, Xj) = −n pi pj, i ≠ j
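Theorem 7 can be verified for a small case by enumerating every count vector with x1 + … + xk = n and summing against the multinomial pf. The sketch below does that; the values of n and the probabilities are hypothetical, and `math.prod` requires Python 3.8 or later.

```python
import math
from itertools import product

def multinomial_pmf(counts, probs):
    """f(x1..xk) = n!/(x1!...xk!) * p1^x1 ... pk^xk with n = sum of the counts."""
    coef = math.factorial(sum(counts))
    for x in counts:
        coef //= math.factorial(x)   # each division is exact
    return coef * math.prod(p**x for p, x in zip(probs, counts))

n, probs = 4, (0.2, 0.3, 0.5)   # hypothetical parameters
support = [c for c in product(range(n + 1), repeat=len(probs)) if sum(c) == n]

total = sum(multinomial_pmf(c, probs) for c in support)
mean_1 = sum(c[0] * multinomial_pmf(c, probs) for c in support)
mean_2 = sum(c[1] * multinomial_pmf(c, probs) for c in support)
var_1 = sum((c[0] - mean_1) ** 2 * multinomial_pmf(c, probs) for c in support)
cov_12 = sum(c[0] * c[1] * multinomial_pmf(c, probs) for c in support) - mean_1 * mean_2

print(round(total, 6), round(mean_1, 6), round(var_1, 6), round(cov_12, 6))
```

The negative covariance reflects the constraint that the counts sum to n: more occurrences of one outcome leave fewer trials for the others.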

Continuous Distribution

Let X1 and X2 be two continuous random variables.

The joint pdf of (X1, X2): f(x1, x2).

Property: a.) f(x1, x2) ≥ 0

o b.) $\iint f(x_1, x_2)\,dx_1\,dx_2 = 1$

Find Probability:

o $P((X_1, X_2) \in D) = \iint_{(x_1, x_2) \in D} f(x_1, x_2)\,dx_1\,dx_2$

Mean and Variance:

o $\mathrm{Var}(X) = E(X^2) - [E(X)]^2$

Marginal Distribution:

o Marginal distribution of X1

$f_1(x_1) = \int f(x_1, x_2)\,dx_2$

o Marginal distribution of X2

$f_2(x_2) = \int f(x_1, x_2)\,dx_1$

o The conditional pdf of X1 given X2 = x2:

$f_{1|2}(x_1 \mid x_2) = \frac{f(x_1, x_2)}{f_2(x_2)}$

o Similarly, the conditional pdf of X2 given X1 = x1:

$f_{2|1}(x_2 \mid x_1) = \frac{f(x_1, x_2)}{f_1(x_1)}$

The conditional expectation of H1(X1) given X2 = x2:

$E[H_1(X_1) \mid X_2 = x_2] = \int H_1(x_1)\, f_{1|2}(x_1 \mid x_2)\,dx_1$

Polar coordinate (r,θ) ←→ Cartesian coordinate (x,y)

x = r cosθ

y = r sin θ

The Jacobian

o $J = \begin{pmatrix} \cos\theta & \sin\theta \\ -r\sin\theta & r\cos\theta \end{pmatrix}$

o $\det(J) = r\cos^2\theta + r\sin^2\theta = r$

o dx dy = r dr dθ

More Than Two Variables:

X1,…,Xn are discrete random variables. Their joint probability mass

function is:

o f(x1,…,xn) = P(X1 = x1, …, Xn = xn)

o Example: Multinomial Distribution

o X1,…,Xn are continuous random variables. Their joint

probability density function is:

f(x1,…,xn)

Probabilities are computed with an n-fold integral:

$P((X_1, \ldots, X_n) \in D) = \int \cdots \int_D f(x_1, \ldots, x_n)\,dx_1 \cdots dx_n$

o Suppose the marginal pmfs or pdfs are f1(x1),…, fn(xn). We say

X1,…,Xn are independent if

f(x1,…,xn) = f1(x1) f2(x2) … fn(xn)

For independent random variables X1,…,Xn:

o P(X1 ∈ D1, X2 ∈ D2, X3 ∈ D3) = P(X1 ∈ D1) P(X2 ∈ D2) P(X3 ∈ D3)

The independence concept for the bivariate case: Suppose (X1,Y1)

and (X2,Y2) are independent:

o P((X1,Y1) ∈ D1,(X2,Y2) ∈ D2) = P((X1,Y1) ∈ D1)P((X2,Y2) ∈

D2)

Exam 2 Exclusions:

Example 5.10 on book page 201

Lecture Notes

o The distribution of X1+X2

o Max (X1,…Xn)

Chapter 4

X pdf

o $P(X \in D) = \int_D f(x)\,dx$

o E(X), E(X^2), Var(X)

o $E[H(X)] = \int H(x)\, f(x)\,dx$

Normal Table:

o $N(\mu, \sigma^2)$

o $Z = \frac{X-\mu}{\sigma}$

Exponential:

o $f(x) = \frac{1}{\beta}\exp\left(-\frac{x}{\beta}\right) = \lambda \exp(-\lambda x)$

o $\lambda = \frac{1}{\beta}$

Gamma: (α, β)

o $\Gamma(\alpha) = \int_0^{\infty} t^{\alpha-1} e^{-t}\,dt$

o Γ(k) = (k − 1)!

Beta: (α, β)

o Alpha and Beta are integers

Weibull

Poisson

Chapter 5:

Marginal pf

Conditional pf

o Conditional probability

o Conditional Expectation

o Conditional Variance

E(X), Var(X), Cov(X1,X2), ρ = correlation

Continuous Case:

o f(x1, x2) joint pdf

o $P((X_1, X_2) \in D) = \iint_D f(x_1, x_2)\,dx_1\,dx_2$

o Marginal pdf: f1(x1), f2(x2)

o Conditional pdf = f(x1, x2) / (marginal pdf)

o Multinomial
