Mid2-Review

advertisement

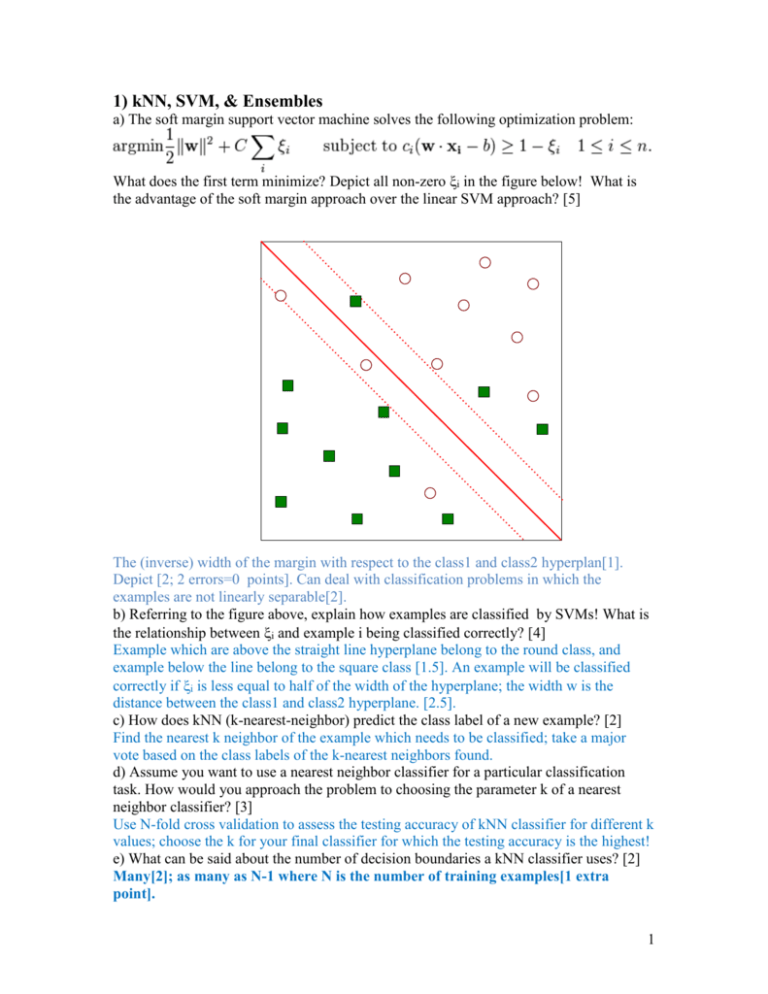

1) kNN, SVM, & Ensembles a) The soft margin support vector machine solves the following optimization problem: What does the first term minimize? Depict all non-zero i in the figure below! What is the advantage of the soft margin approach over the linear SVM approach? [5] The (inverse) width of the margin with respect to the class1 and class2 hyperplan[1]. Depict [2; 2 errors=0 points]. Can deal with classification problems in which the examples are not linearly separable[2]. b) Referring to the figure above, explain how examples are classified by SVMs! What is the relationship between i and example i being classified correctly? [4] Example which are above the straight line hyperplane belong to the round class, and example below the line belong to the square class [1.5]. An example will be classified correctly if i is less equal to half of the width of the hyperplane; the width w is the distance between the class1 and class2 hyperplane. [2.5]. c) How does kNN (k-nearest-neighbor) predict the class label of a new example? [2] Find the nearest k neighbor of the example which needs to be classified; take a major vote based on the class labels of the k-nearest neighbors found. d) Assume you want to use a nearest neighbor classifier for a particular classification task. How would you approach the problem to choosing the parameter k of a nearest neighbor classifier? [3] Use N-fold cross validation to assess the testing accuracy of kNN classifier for different k values; choose the k for your final classifier for which the testing accuracy is the highest! e) What can be said about the number of decision boundaries a kNN classifier uses? [2] Many[2]; as many as N-1 where N is the number of training examples[1 extra point]. 1 f) Assume you use an ensemble approach. What properties should base classifiers have to obtain a highly accurate ensemble classifier? [3] The classifiers should be diverse; that is, they make different errors.[2] The classifier should be somewhat accurate; at least above 50%!.[1] g) What role do example weights play for the AdaBoost algorithm? Why does AdaBoost modify weights of training examples? [4] The weight determines with what probability an example is picked as a training example.[1.5] The key idea to modify weights is to obtain high weights for examples that are misclassified ultimately forcing the classification algorithms to learn a different classifier that classifies those examples correctly, but likely misclassifies other examples.[2.5] 4) Decision Trees/Classification Compute the GINI-gain1 for the following decision tree split (just giving the formula is fine!)[3]: (12,4,6) (3,3,0) (9,1,0) (0,0,6) G(6/11,2/11,3/11) – (6/22*G(0.5,0.5,0) + 10/22* G(0.9,0,1,0) + 0) G(6/11,2/11.3/11)= 1-(6/11**2 + 2/11**2+3/11**2)= 1- ((36+4+9)/121)= 72/121=0.595 The worst Gini for 3 classes is: G(1/3.1/3.1/3)=0.667 a) Assume there are 3 classes and 50% of the examples belong to class1, and 25% of the examples belong to class2 and class3, respectively. Compute the entropy of this class distribution, giving the exact number not only the formula! [2] H(1/2,1/4,1/4)= ½*log2(2)+ 2*1/4log2(4)=3/2=1.5 b) The decision tree learning algorithms is a greedy algorithm—what does this mean? [2] Seeks the shortest path from the current state to a goal state/makes local decisions[1]; does not backtrack[1]; frequently, does not find the optimal solution[1]. c) Assume you learn a decision tree for a dataset that only contains numerical attributes (except the class attribute). What can be said about the decision boundaries that decision trees use to separate the classes? [1] Axis parallel lines/hyperplanes (of the form att=value where att is one attribute of the dataset and value is a floating point number) where each axis corresponds 1 (GINI before the split) minus (GINI after the split) 2 d) Why is pruning important when using decision trees? What is the difference between pre-pruning and post pruning? [4] To come up with a decision tree that uses the correct amount of model complexity to avoid under and overfitting. [2] Prepruning: directly prevents a tree from growing too much by using stricter termination conditions for the decision tree induction algorithm Postpruning; Grows a large tree and then reduces it in size by replacing subtrees by leaf nodes. 3