Biography


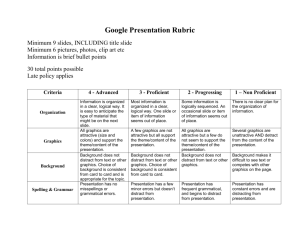

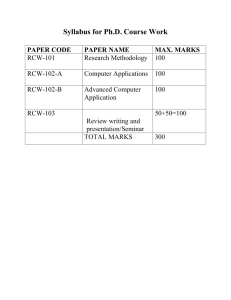

WRIT 340 Dr. Ramsey Illumin Paper 10/19/2011 Alexander Silkin

Biography

Alex is a junior majoring in Computer Science Video Games. He was born in Moscow but lived in China from the age of 10. After he graduates, Alex hopes to stay in the US and work for a software development firm.

Contact and Email

Phone: (213) 400-2585
Email: alex.silkin@gmail.com

Key Words

Video Games, 3D Graphics, 3D rendering, polygon mesh, shader

Multimedia Suggestions

A basic, 10-minute tutorial that demonstrates how to model a human hand: http://www.youtube.com/watch?v=J1mExXURsWk

Euclideon’s Unlimited Detail presentation and comparison to traditional polygonal graphics: http://www.youtube.com/watch?v=5dWHsrRqUx4&feature=fvst

A demonstration of how a skeleton-based rig can move and animate a character’s limbs: http://www.youtube.com/watch?v=X2Uk-r0BNJE&feature=related

Abstract

Video games are becoming an increasingly mainstream form of art and entertainment. At the same time, the medium’s visuals have evolved at a similarly rapid rate. Modern video games provide an extremely immersive experience, with detailed graphics that are progressively closing in on photorealistic quality. Despite these rapid improvements, the foundation of real-time 3D graphics has remained relatively constant. The 3D production pipeline still consists of 6 core stages: pre-production, 3D modeling, shading & texturing, animation, lighting, and rendering & post-production. This paper explores the modern methodologies employed during these stages to give an overview of how today’s video games deliver their incredible visuals.

Introduction to Real-Time 3D Computer Graphics

Have you ever wondered how today’s amazing 3D graphics in games are possible? Modern real-time 3D computer graphics have advanced enormously since the first primitive 3D games at the end of the 20th century.
However, the fundamentals of real-time 3D graphics have stayed relatively constant, because the evolution of 3D technology has been driven mostly by improvements in computer hardware and the integration of new algorithms within the traditional computer graphics pipeline. The computer graphics pipeline refers to the process of creating 3D assets and delivering them to the audience in their finished form. This process is broken down into 6 stages: pre-production, 3D modeling, shading & texturing, animation, lighting, and rendering & post-production [1]. We need to explore these stages to fully understand and appreciate the technology behind the incredible visuals in 3D games.

During the pre-production stage, the vision for a 3D asset is formed and finalized. Artists and engineers involved in the project brainstorm ideas to produce a design of the asset. The design’s aesthetics must be homogeneous with the style of the whole project. At the same time, the design must be feasible within the project’s limited resources, such as time, personnel and technology. The design must also be fairly detailed, as it will guide the creation of the asset through the next 5 stages of its production. Consequently, the finished design usually contains concept art of the 3D asset, which is used as a reference to create the model of the 3D asset.

3D models are usually constructed using a modeling package, which is software specifically designed for creating 3D models for movies and games. There are many ways to represent models in 3D space, but the oldest and one of the most popular is using polygons. Because of their relatively low processing requirements, 3D polygon graphics are especially prevalent in projects that rely on real-time rendering, or on-the-fly graphics processing, such as video games and simulations. A polygon is defined as a “bounded region of a plane” [2].
These bounds are formed by lines that join together at points called vertices. Vertices are defined by their positions in 3D space, expressed as position vectors relative to a predetermined origin. Consequently, a collection of all the vertices is the most basic way to express a 3D model constructed out of polygons, also known as a mesh [2]. This system allows 3D modelers to create meshes that represent any object by approximating its shape with flat polygons (see Figure 1).

Figure 1: A polygonal representation of a table
Source: Mark Allen, 2007, http://www.playtool.com/pages/basic3d/basics.html

A model’s appearance can be improved with greater detail by using more polygons, thus raising the model’s polygon count. However, rendering more polygons requires more processing resources, which is particularly problematic for real-time rendering [3]. Consequently, artists are usually given explicit poly count limits for each 3D asset and for the whole 3D scene.

The improvements in 3D game graphics have been driven significantly by the increased poly counts of objects within games. This increased detail is made possible by improvements in computer hardware, which allow computers and gaming consoles to process more polygons at a rate sufficient for real-time animation, which is at least 30 frames per second. Below that rate the animation will seem to lag, because there is enough time between frames for an observer to distinguish each individual frame. In practice, however, real-time simulations must aim for much higher frame rates, because frame rates fluctuate depending on the amount of detail on screen and may consequently fall below the desired level. At the same time, game developers are constantly thinking of new tricks to creatively overcome poly count limits.
An example of this is swapping high-polygon versions of models for low-polygon versions when the models are far away from the camera. Similarly, objects very far away can simply be 2D planes with images, usually scenery, effectively serving as cardboard cutouts surrounding the scene. These tricks conserve the polygon count of the entire scene and thereby allow higher polygon detail on objects closer to the camera. Consequently, the race for better graphics in games consists of the discovery and improvement of such techniques, in addition to the traditional rise of poly count limits on faster hardware.

However, such tricks are far from perfect. In the first example, a player can notice the model swap if he or she pays attention. At the same time, many modern games allow players to use tools, such as binoculars, to look into the distance of virtual worlds, which can expose both the low-poly versions of models and the aforementioned fake scenery. Consequently, programmers are constantly trying to invent ways to overcome the problems plaguing these tricks and polygonal graphics in general. In fact, several individuals have proposed alternatives to polygons, such as voxels, which are 3D versions of pixels. However, while all proposed alternatives overcome some drawbacks of polygonal graphics, none has been found adequate to replace polygons completely. This is because, after many years, hardware, algorithms and artistic techniques have been heavily optimized for polygonal graphics.

The debate surrounding the Unlimited Detail technology embodies this conflict between traditional and new 3D methodologies. Unlimited Detail is currently under development by Euclideon, a Brisbane-based company. Euclideon promises that its technology will revolutionize graphics in 3D games using an innovative application of voxels called point clouds.
According to Euclideon, Unlimited Detail technology “can process unlimited point cloud data in real time, giving the highest level of geometry ever seen” [4]. Furthermore, Bruce Robert Dell, Euclideon’s CEO, has stated that Unlimited Detail can “do all this with modest, rather than excessive, processing power” to deliver graphics more detailed than traditional polygonal graphics “by a factor of 100,000 times” [5].

Naturally, industry experts have responded to Dell’s grandiose claims with much skepticism. One of these experts is John Carmack, the creator of the original Wolfenstein, Doom, and Quake 3D engines, who is consequently considered one of the “fathers” of 3D games. Carmack has expressed relatively moderate skepticism towards Euclideon’s claims, stating his belief that there is “no chance of a [Unlimited Detail] game on current gen systems, but maybe several years from now. Production issues will be challenging” [6]. On the other hand, Markus Alexej Persson, another influential individual in the game industry, has been much more critical of the new technology, going so far as to proclaim that “[Unlimited Detail is] a scam” [7]. Persson has backed up his claim by stating that essentially “[Euclideon] made a voxel renderer”, which suffers from the typical drawbacks of voxel technology: a limited number of unique objects in a scene and difficulties in applying traditional animation techniques to voxels [7]. Persson is known as “Notch” and is famous for developing Minecraft, which uses stylistically low-detail voxel technology for terrain alongside traditional polygonal graphics for characters. Persson’s voxel-related experience lends authority to his criticisms and to his conclusion that “[Euclideon is] carefully avoiding to mention any of the drawbacks” because the company is seeking funding [7].
Indeed, during an interview with Eurogamer, Dell showed an inability to directly confront the criticisms of Unlimited Detail, “often choosing to be humorously defensive in answering even basic technological questions, or simply evading them altogether” [8]. Dell has further eroded his credibility with contradictory statements, saying that Euclideon has learned from its past mistakes and will no longer show unfinished features, and yet “[attempting] to put the new video into context, saying that the decision to go ahead with a new demo only came a few weeks ago” [8]. This lack of credibility and lack of support from the games industry exemplify the issues that challenge the development of alternative 3D graphics technologies. Consequently, in the last twenty years, polygonal modeling has become thoroughly entrenched within the traditional 3D production pipeline for games.

During the next stage in the 3D production pipeline, called shading and texturing, game developers add texture maps to a finished 3D model to give it color and more detail. A texture map is essentially a 2D image that is projected onto the surface of a 3D model [9]. Texture maps influence lighting algorithms during rendering, not only to display color on a model’s surface, but also to create lighting effects and even visual illusions that change the appearance of a model’s geometry. However, in order to create an effective texture map that will properly cover a model’s surface, artists first need to create a custom UV layout for the model. A UV layout is “a visual representation of a 3D model flattened onto a two dimensional plane”, where U and V stand for the coordinates on a 2D map that covers the 3D surface of a model [9]. The section of the image enclosed by a polygon in the UV layout is mapped to the corresponding face on the model.
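To make the UV idea concrete, here is a minimal sketch of how a texel might be looked up from a pair of UV coordinates. It is an illustration only: the function name `sample_texture`, the list-of-rows texture layout, and the choice of nearest-texel lookup with clamping are all assumptions, not something taken from the sources cited in this paper.

```python
def sample_texture(texture, u, v):
    """Return the texel nearest to (u, v), with u and v in the range [0, 1].

    `texture` is a list of rows, each row a list of (r, g, b) tuples.
    Row 0 is treated as the top of the image (an assumption; conventions differ).
    """
    height = len(texture)
    width = len(texture[0])
    # Clamp so that out-of-range coordinates reuse the edge texels.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # Scale the normalized coordinates to texel indices, so the same UVs
    # can address a texture of any resolution.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


# A hypothetical 2x2 texture: red and green on top, blue and white below.
checker = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

# One triangle of a mesh: each vertex carries a UV pair alongside its position.
triangle_uvs = [(0.1, 0.1), (0.9, 0.1), (0.1, 0.9)]
vertex_colors = [sample_texture(checker, u, v) for u, v in triangle_uvs]
print(vertex_colors)  # [(255, 0, 0), (0, 255, 0), (0, 0, 255)]
```

Real renderers interpolate UVs across every pixel of a face and usually filter between neighboring texels; the sketch only shows the coordinate-to-texel step.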
The process for creating a UV layout is called UV unwrapping, in which an artist lays out flattened UV sections of the model within a 2D square. A texture map is created using the UV layout as a reference, allowing artists to affect specific regions on the model. When a texture map is applied to a model, it is automatically scaled to fit within the 2D UV space, thus giving artists the freedom to choose the resolution of texture maps. However, much like a model’s poly count, higher-resolution textures require more memory, and consequently the resolution of texture maps is restricted by memory limitations.

There are also different types of texture maps, such as color, specular, bump, reflection and transparency maps [10]. Color, or diffuse, maps allow artists to add different colors or textures to different regions of a model. Specular maps, also known as gloss maps, manipulate the lighting algorithm to give a shiny appearance to specific regions of a model’s surfaces. Bump, displacement, or normal maps effectively change the appearance of a model’s geometry by making the lighting algorithm render surfaces as if they were at displaced positions. This allows bump maps to add 3D detail at a much lower processing cost than raising a model’s polygon count (see Figure 2). Lastly, reflection and transparency maps, as their names imply, make surfaces reflective and transparent respectively. All these texture maps can be used in conjunction to achieve the appearance of any material that artists desire.

Figure 2: The top model of the Earth only has a diffuse map attached. The bottom model makes use of a bump map to create the illusion of more detail
Source: Mark Allen, 2007, http://www.playtool.com/pages/basic3d/basics.html

A fully textured 3D asset is ready to be animated if necessary.
Traditional computer animation uses key frames: the object is posed at specific frames, and it then transitions smoothly, or tweens, between those frames as the animation is played. A skeleton-based system has been developed to complement traditional animation techniques and allow 3D character animation. In this system, an artist first “rigs” a model to attach it to a skeleton with appropriately positioned joints. Then, the artist paints “skin weights” to distribute the influence of each joint over the various portions of the mesh’s skin, so that the skin deforms realistically during movement. Finally, the model is ready for animation, and the character’s joints are rotated at specific key frames. When the animation is played, the joints rotate smoothly between the predetermined angles at the key frames. This is the fundamental methodology behind all the moving characters in games today.

However, it would be impossible to see all the action within games without a source of light. Consequently, during the lighting phase of production, artists position lights around the scene. The four types of lights used in 3D graphics are ambient, directional, point, and spot lights. The different properties of these lights allow artists to emulate the different kinds of environments in real life. However, for real-time simulations like games, it would be very resource-expensive to calculate real-time light interactions, known as dynamic lights, for all the objects in a scene. Consequently, developers use static lights, which allow them to calculate the shadows in the scenery during development. These shadows can then be applied as a light map, a type of texture map, to surfaces on objects to display the shadows without real-time calculations (see Figure 3).
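The light-map idea can be sketched in a few lines. The example below is a simplified illustration, not a description of any particular engine: the name `apply_light_map` is hypothetical, the light map is assumed to have the same resolution as the color texture, and intensities are assumed to be plain values between 0 and 1 that were computed ahead of time.

```python
def apply_light_map(diffuse, light_map):
    """Darken each diffuse texel by the precomputed light intensity at that texel.

    diffuse:   rows of (r, g, b) tuples with channels in 0..255
    light_map: rows of floats in 0..1, same dimensions as `diffuse` (assumed)
    """
    lit = []
    for color_row, light_row in zip(diffuse, light_map):
        lit.append([
            tuple(round(channel * intensity) for channel in color)
            for color, intensity in zip(color_row, light_row)
        ])
    return lit


# A flat, evenly colored "brick" texture (hypothetical values).
brick = [
    [(200, 80, 60), (200, 80, 60)],
    [(200, 80, 60), (200, 80, 60)],
]

# Baked lighting: fully lit at the top left, fully shadowed at the bottom right.
baked = [
    [1.0, 0.5],
    [0.5, 0.0],
]

lit_brick = apply_light_map(brick, baked)
print(lit_brick[0])  # [(200, 80, 60), (100, 40, 30)]
print(lit_brick[1])  # [(100, 40, 30), (0, 0, 0)]
```

The expensive part, working out the values in `baked`, happens once during development; at run time only the cheap multiplication remains.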
Figure 3: This illustration shows the effect of a light map on what is otherwise a bland brick wall
Source: Mark Allen, 2007, http://www.playtool.com/pages/basic3d/basics.html

This allows the developers to conserve computational resources and calculate the effects of dynamic lights only on certain objects, particularly moving characters. However, this technique cannot match the quality of a scene lit entirely by dynamic lights.

After all the hard work in the previous stages, developers are finally ready to show the fruits of their efforts during the rendering stage. However, unlike 3D movies, 3D games are rendered in real time, which means that this stage effectively occurs for every frame while the game is being played. In order to display the 3D contents of a scene on a 2D screen, the game must effectively flatten all the objects, projecting them into what is known as screen space [11]. However, further steps must be taken to improve the quality of this 2D representation on the screen, because the limited number of pixels on a display causes texture artifacts and aliasing, known as “jaggies”. Texture artifacts are minimized using texture filtering, which sets a pixel’s color to the average color of the region of the texture that the pixel covers. Similarly, anti-aliasing is a process that involves calculating an average of multiple samples of an image (see Figure 4) [3]. Both these techniques can be performed at various levels at the expense of computational resources, which will impact the frame rate of the simulation.

Figure 4: The images show various levels of anti-aliasing from left to right: 0xAA, 2xAA, 4xAA
Source: http://www.cdrinfo.com/Sections/Reviews/Specific.aspx?ArticleId=14349
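As a rough illustration of averaging multiple samples, the sketch below uses supersampling: the image is assumed to have been rendered at a higher resolution, and each block of samples is averaged down to one displayed pixel. This is only one way to anti-alias, and hardware methods differ in detail; the function name `downsample` and the grayscale coverage values are assumptions made for the example.

```python
def downsample(samples, factor):
    """Average each factor x factor block of grayscale samples into one pixel."""
    height = len(samples) // factor
    width = len(samples[0]) // factor
    pixels = []
    for py in range(height):
        row = []
        for px in range(width):
            # Gather every sample that falls inside this output pixel.
            block = [
                samples[py * factor + dy][px * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        pixels.append(row)
    return pixels


# A hard diagonal edge sampled on a 4x4 grid: 1.0 is covered, 0.0 is empty.
edge = [
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]

# Four samples per displayed pixel.
smoothed = downsample(edge, 2)
print(smoothed)  # [[0.75, 0.0], [1.0, 0.75]]
```

Pixels that straddle the edge end up with intermediate values such as 0.75 instead of a hard 0 or 1, which is what softens the “jaggies”. The cost is visible too: four times as many samples had to be computed for the same displayed image.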
The underlying technology behind the amazing 3D graphics in games today is deeply rooted in the techniques mastered during the birth of 3D games. In the past twenty years, 3D graphics in games have been pushed forward mostly by rapid improvements in hardware, which allow greater polygon counts in scenes. However, no matter how high the poly count limits rise, they still constrain artists. Consequently, game developers have to rely on various engineering and artistic tricks, which are inherently flawed in one way or another. At the same time, no effective alternatives to polygonal graphics have been found.

However, one great development in the area of 3D game graphics is the growing popularity of 3D displays. Because 3D games are inherently 3D, it takes little effort to display them on 3D displays. There is still uncertainty about the technology’s impact on visual health, and a report by the American Optometric Association admits that visual health can be influenced by “poorly made 3D products”, poor equipment, or an individual’s existing eye problems [12]. In spite of this, the technology is becoming increasingly popular, and soon the trend will bring consumers affordable, large, glasses-free 3D screens. These developments seem like a natural evolution of 3D graphics in games. 3D screens will allow us all to finally experience the worlds depicted in 3D games the way they are meant to be experienced: in 3D.

Works Cited

[1] Justin Slick. (2011) About.com. [Online]. http://3d.about.com/od/3d-101-TheBasics/tp/Introducing-The-Computer-Graphics-Pipeline.htm
[2] Bob Pendleton. (2008, November) Game Programmer. [Online]. http://gameprogrammer.com/5poly.html
[3] Rene Froeleke. (2011) CDRinfo. [Online]. http://www.cdrinfo.com/Sections/Reviews/Specific.aspx?ArticleId=14349
[4] Euclideon. (2010, September) Unlimited Detail Technology. [Online]. http://unlimiteddetailtechnology.com/home.html
[5] Matthew Murray. (2011, August) Extreme Tech. [Online]. http://www.extremetech.com/gaming/91854-unlimited-detail%E2%80%99s-non-polygon-3d-getsmore-unlimited
[6] John Carmack. (2011, August) Twitter. [Online]. https://twitter.com/#!/ID_AA_Carmack/statuses/98127398683422720
[7] Markus Alexej Persson. (2011, August) The Word of Notch. [Online]. http://notch.tumblr.com/post/8386977075/its-a-scam
[8] Richard Leadbetter. (2011, August) Euro Gamer. [Online]. http://www.eurogamer.net/articles/digitalfoundry-vs-unlimited-detail?page=2
[9] Justin Slick. (2011) About.com. [Online]. http://3d.about.com/od/3d-101-The-Basics/a/Surfacing101-Creating-A-UV-Layout.htm
[10] Justin Slick. (2011) About.com. [Online]. http://3d.about.com/od/3d-101-The-Basics/a/Surfacing101-Texture-Mapping.htm
[11] Thierry Tremblay. (1999, August) GameDev.net. [Online]. http://www.gamedev.net/page/resources/_/technical/graphics-programming-and-theory/3dbasics-r673
[12] American Optometric Association, "3D In The Classroom," American Optometric Association, St. Louis, Public Health Report 2011.
[13] Mark Allen. (2007, July) PlayTool. [Online]. http://www.playtool.com/pages/basic3d/basics.html