2013 Final Exam


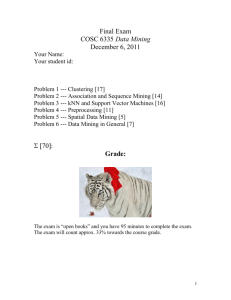

Final Exam

COSC 6335 Data Mining

December 17, 2013

Your Name:

Your student id:

Problem 1 --- Clustering and Density Estimation [13]

Problem 2 --- Association and Sequence Mining [12]

Problem 3 --- Data Mining in General [5]

Problem 4 --- Classification [17]

Problem 5 --- Spatial Data Mining [4]

Problem 6 --- PageRank [8]

Problem 7 --- Write an Essay! [11]

Total:

Grade:

The exam is “open books” and you have 120 minutes to complete the exam.

The exam will count approx. 32% towards the course grade.


1) Clustering [13]

a) How do hierarchical clustering algorithms, such as AGNES, form dendrograms? [3]

The algorithm starts with a clustering in which each object forms its own cluster. It then repeatedly merges the two clusters that are closest to each other, recording each merge in the dendrogram as a link connecting the two merged clusters, and continues this process until a single cluster containing all objects of the dataset is obtained.
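The merge loop can be sketched in a few lines. This is a minimal illustration, not the course's reference implementation: it assumes single-link distance between clusters (AGNES can also use other linkages), and the function name `agnes` and the 1D sample points are hypothetical. Each recorded merge corresponds to one link in the dendrogram, drawn at the height of the merge distance.

```python
def agnes(points, dist):
    """Single-link AGNES sketch: returns the merge history as (cluster_a, cluster_b, height)."""
    clusters = [frozenset([i]) for i in range(len(points))]  # every object starts alone
    merges = []

    def single_link(a, b):
        # distance between the closest pair of objects taken from the two clusters
        return min(dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > 1:
        # find the closest pair of clusters (ties broken by cluster position)
        d, ia, ib = min((single_link(a, b), ia, ib)
                        for ia, a in enumerate(clusters)
                        for ib, b in enumerate(clusters) if ia < ib)
        a, b = clusters[ia], clusters[ib]
        merges.append((sorted(a), sorted(b), d))  # one link in the dendrogram
        clusters = [c for i, c in enumerate(clusters) if i not in (ia, ib)] + [a | b]
    return merges


points = [0, 1, 5, 6, 20]  # hypothetical 1D objects, referred to by index
for a, b, h in agnes(points, lambda p, q: abs(p - q)):
    print(f"merge {a} + {b} at height {h}")
```

For these points the sketch first merges objects 0 and 1, then 2 and 3 (both at height 1), then the two pairs at height 4, and finally object 4 at height 14.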

b) The Top Ten Data Mining Algorithms article suggests that “K-means will falter whenever the data is not well described by reasonably separated spherical balls”, as we witnessed in Project 2 when clustering the Complex8 dataset. What could be done to alleviate the problem? [3 + up to 2 extra points]

One possible answer: use kernels that map the dataset into a different space, and cluster the data with k-means in the mapped space. This allows obtaining clusters that are no longer convex polygons in the original space: the convex polygons in the mapped space will have other shapes when mapped back into the original space. A similar effect can be obtained by using non-traditional distance functions in conjunction with k-means.

Other answers exist that also deserve credit.
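A minimal sketch of the mapping idea, assuming an explicit, hypothetical feature map φ(x, y) = x² + y² instead of an implicit kernel. Two concentric rings cannot be separated by k-means in the original space (each k-means cluster is a convex cell, and the outer ring's cell would have to contain the inner ring), but after the mapping the rings become two compact groups. Plain k-means (Lloyd's algorithm with a deterministic farthest-point initialisation, also an assumption) then separates them, and the resulting clusters are rings, i.e. non-convex shapes, in the original space.

```python
import math


def feature_map(p):
    """Hypothetical explicit map phi(x, y) = (x^2 + y^2,): squared distance from the origin."""
    x, y = p
    return (x * x + y * y,)


def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))


def kmeans(vectors, k, iters=100):
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centroids = [vectors[0]]
    while len(centroids) < k:
        # next centroid: the vector farthest from all centroids chosen so far
        centroids.append(max(vectors, key=lambda v: min(sq_dist(v, c) for c in centroids)))
    labels = None
    for _ in range(iters):
        new = [min(range(k), key=lambda c: sq_dist(v, centroids[c])) for v in vectors]
        if new == labels:  # assignments stopped changing
            break
        labels = new
        for c in range(k):  # recompute each centroid as the mean of its members
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels


def ring(radius, n):
    return [(radius * math.cos(2 * math.pi * i / n), radius * math.sin(2 * math.pi * i / n))
            for i in range(n)]


points = ring(1.0, 8) + ring(3.0, 8)  # inner ring first, then outer ring
labels = kmeans([feature_map(p) for p in points], k=2)
print(labels)
```

A real kernel k-means would work with a kernel matrix instead of an explicit map; the explicit map is used here only because it keeps the example short and deterministic.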

c) Assume we have a 2D dataset X containing 4 objects: X={(1,0), (0,1), (1,2), (4,4)}; moreover, we use the Gaussian kernel density function to measure the density of X. Assume we want to compute the density at point (2,2); you can also assume h=1 (σ=1) and that we use Manhattan distance as the distance function. Give a sketch of how the Gaussian kernel density estimation approach determines the density for point (2,2). Be specific! [3]

$$f_X((2,2)) = e^{-\frac{9}{2}} + e^{-\frac{9}{2}} + e^{-\frac{1}{2}} + e^{-\frac{16}{2}}$$
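The sum above can be reproduced numerically. This sketch assumes the unnormalized Gaussian kernel e^(-d²/(2σ²)) used in the answer, with Manhattan distance d; the helper names `manhattan` and `gaussian_kde` are hypothetical. The Manhattan distances from (2,2) to the four objects are 3, 3, 1, and 4, which gives the exponents -9/2, -9/2, -1/2, and -16/2.

```python
import math


def manhattan(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))


def gaussian_kde(x, data, sigma=1.0, dist=manhattan):
    """Unnormalized Gaussian kernel density: sum of exp(-d(x, p)^2 / (2 sigma^2)) over the data."""
    return sum(math.exp(-dist(x, p) ** 2 / (2 * sigma ** 2)) for p in data)


X = [(1, 0), (0, 1), (1, 2), (4, 4)]
density = gaussian_kde((2, 2), X)
print(round(density, 4))  # 0.6291
```

Calling `gaussian_kde` with a larger `sigma` lets distant objects contribute more, which is the smoothing effect discussed in part d).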

One error at most 1.5 points; two errors: no points.

d) What role does the parameter σ play in the above approach? What is its impact on the density computations? [2]

It determines how quickly the density decreases with distance. [1] Larger values of σ yield smoother density surfaces, whereas smaller values of σ yield spikier density functions. [1]

e) How are density attractors used in DENCLUE’s clustering approach? [2]

Density attractors are local maxima of the employed density function. [0.5] DENCLUE forms clusters by associating each object in the dataset with a density attractor using a hill-climbing procedure; objects that are associated with the same density attractor belong to the same cluster. [1.5]
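The attractor-based clustering can be sketched as follows. Several assumptions apply: Euclidean distance, a Gaussian kernel, a hill-climbing step implemented as a mean-shift style fixed-point update (the variant proposed for DENCLUE 2.0) rather than a fixed-step gradient ascent, and a hypothetical tolerance `merge_tol` for deciding when two climbs reached the same attractor. The noise threshold of the original algorithm is omitted.

```python
import math


def climb(x, data, sigma, tol=1e-6, max_iter=500):
    """Hill climbing toward a local maximum of the Gaussian kernel density."""
    for _ in range(max_iter):
        w = [math.exp(-math.dist(x, p) ** 2 / (2 * sigma ** 2)) for p in data]
        total = sum(w)
        # kernel-weighted mean of the data: moves x uphill on the density surface
        new = tuple(sum(wi * p[d] for wi, p in zip(w, data)) / total for d in range(len(x)))
        if math.dist(new, x) < tol:
            return new
        x = new
    return x


def denclue(data, sigma, merge_tol=1e-2):
    """Objects whose climbs end at the same density attractor form one cluster."""
    attractors, labels = [], []
    for p in data:
        a = climb(p, data, sigma)
        for idx, b in enumerate(attractors):
            if math.dist(a, b) < merge_tol:  # same attractor as an earlier object
                labels.append(idx)
                break
        else:
            attractors.append(a)
            labels.append(len(attractors) - 1)
    return attractors, labels


DATA = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]  # hypothetical objects
attractors, labels = denclue(DATA, sigma=1.0)
print(labels)
```

With two well-separated groups, each group's objects climb to a single attractor, so the sketch yields two clusters.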


2) Association Rule and Sequence Mining [12]

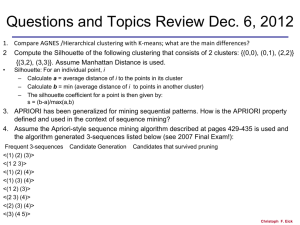

a) Assume the Apriori-style sequence mining algorithm described on pages 429-435 of our textbook is used and the algorithm generated the frequent 3-sequences listed below:

| Frequent 3-sequences | Candidate Generation | Candidates that survived pruning |
|---|---|---|
| <(1) (2 3)> | | |
| <(1) (3 4)> | | |
| <(1) (2 4)> | | |
| <(1 2) (3)> | | |
| <(2 3 4)> | | |
| <(2 3) (4)> | | |
| <(2) (3) (4)> | | |
| <(2) (3 4)> | | |
| <(3 4 5)> | | |

What candidate 4-sequences are generated from this 3-sequence set? Which of the generated 4-sequences survive the pruning step? Use the format of Figure 7.6 in the textbook on page 435 to describe your answer! [5]
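Candidate generation and pruning can be sketched as follows, assuming the merge rule of the textbook's Apriori-style sequence algorithm. Sequence s1 is merged with s2 when dropping the first item of s1 gives the same sequence as dropping the last item of s2. The last item of s2 then either joins the last element of s1 (if it shared an element with the item before it in s2) or is appended as a new element. A candidate survives pruning only if every 3-subsequence obtained by deleting one item is frequent. The sketch assumes items within an element are sorted ascending; all function names are hypothetical.

```python
# A sequence is a tuple of elements; each element is a sorted tuple of items.
FREQ_3 = {
    ((1,), (2, 3)), ((1,), (3, 4)), ((1,), (2, 4)),
    ((1, 2), (3,)), ((2, 3, 4),), ((2, 3), (4,)),
    ((2,), (3,), (4,)), ((2,), (3, 4)), ((3, 4, 5),),
}


def drop_first_item(s):
    rest = s[0][1:]  # remove the first item; drop the element if it becomes empty
    return ((rest,) if rest else ()) + s[1:]


def drop_last_item(s):
    rest = s[-1][:-1]  # remove the last item; drop the element if it becomes empty
    return s[:-1] + ((rest,) if rest else ())


def merge(s1, s2):
    """Extend s1 with the last item of s2."""
    last = s2[-1][-1]
    if len(s2[-1]) == 1:  # last item formed its own element in s2 -> new element
        return s1 + ((last,),)
    return s1[:-1] + (s1[-1] + (last,),)  # otherwise it joins s1's last element


def generate_candidates(frequent):
    return sorted({merge(s1, s2) for s1 in frequent for s2 in frequent
                   if drop_first_item(s1) == drop_last_item(s2)})


def drop_one_item(s):
    """All (k-1)-subsequences obtained by deleting exactly one item."""
    for i, element in enumerate(s):
        for j in range(len(element)):
            rest = element[:j] + element[j + 1:]
            yield s[:i] + ((rest,) if rest else ()) + s[i + 1:]


def prune(candidates, frequent):
    return [c for c in candidates if all(sub in frequent for sub in drop_one_item(c))]


def fmt(s):
    return "<" + "".join("(" + " ".join(map(str, e)) + ")" for e in s) + ">"


candidates = generate_candidates(FREQ_3)
survivors = prune(candidates, FREQ_3)
print([fmt(c) for c in candidates])
print([fmt(c) for c in survivors])
```

For the nine sequences in the table, this sketch generates seven candidates; only <(1)(2 3 4)> has all of its 3-subsequences in the frequent set. For example, <(1)(2 3)(4)> is pruned because <(1)(3)(4)> is not frequent.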

b) Assume you run APRIORI with a given support threshold on a supermarket

transaction database and you receive exactly 2 disjoint 8-item sets. What can be said

about the total number of itemsets that are frequent in this case? [4]

Because all subsets of a frequent itemset are frequent, and because the 2 sets are disjoint (so their non-empty subsets are also disjoint and we do not need to worry about double counting), we obtain:

$$2 \times (2^8 - 1) = 2^9 - 2 = 510$$
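The counting argument can be checked by brute force. The sketch below uses two hypothetical disjoint 8-itemsets (items 1 to 8 and items 9 to 16) and enumerates their non-empty subsets with `itertools.combinations`. Because the sets are disjoint, the union of the two subset families has no overlap.

```python
from itertools import combinations


def nonempty_subsets(itemset):
    items = sorted(itemset)
    return {frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)}


A = set(range(1, 9))   # hypothetical first frequent 8-itemset
B = set(range(9, 17))  # hypothetical second, disjoint 8-itemset
frequent = nonempty_subsets(A) | nonempty_subsets(B)
print(len(frequent))  # 510
```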

Correct except accidentally counting the empty set: 3.5 points; other errors at most 1.5

points.

c) Assume the association rule if smoke then cancer has a support of 3%, a confidence

of 80% and a lift of 2.0. What do those numbers tell us about the occurrence of cancer

and smoking and their association? [3]
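Using the standard definitions support(A → B) = P(A ∧ B), confidence(A → B) = P(B | A), and lift(A → B) = confidence / P(B), the three given numbers determine the marginal probabilities of smoking and cancer. A small worked computation (variable names are illustrative):

```python
support_rule = 0.03  # P(smoke and cancer)
confidence = 0.80    # P(cancer | smoke)
lift = 2.0           # P(cancer | smoke) / P(cancer)

p_smoke = support_rule / confidence  # P(smoke) = P(smoke and cancer) / P(cancer | smoke)
p_cancer = confidence / lift         # P(cancer) = P(cancer | smoke) / lift

print(f"P(smoke) = {p_smoke:.4f}, P(cancer) = {p_cancer:.2f}")  # P(smoke) = 0.0375, P(cancer) = 0.40
```

Under these definitions, 3% of the records involve both smoking and cancer, about 3.75% involve smoking, 40% involve cancer, and cancer occurs twice as often among smokers as in the data overall (a lift above 1 indicates a positive association).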

3) Data Mining in General [5]

Data Mining has become quite popular in the last 15 years. What are the main reasons for

this development?


4) Classification [17]

a) The soft margin support vector machine solves the following optimization problem:

What role does C play in the soft margin approach? [1.5] What does ξᵢ measure (be precise; you can refer to the figure below!)? [2] Based on the figure below, how many examples are misclassified by the support vector machine? [1] What is the advantage of the soft margin approach over the linear SVM approach? [1.5] [6]
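The slack variables can be illustrated numerically, assuming the common soft-margin formulation: minimize ½‖w‖² + C·Σξᵢ subject to yᵢ(w·xᵢ + b) ≥ 1 − ξᵢ and ξᵢ ≥ 0 (the exam's own formula may differ in detail). At the optimum, ξᵢ = max(0, 1 − yᵢ(w·xᵢ + b)): ξᵢ = 0 for examples on or outside the margin on the correct side, 0 < ξᵢ ≤ 1 for examples inside the margin but still correctly classified, and ξᵢ > 1 for misclassified examples. C weighs the total slack against the margin width. The hyperplane and the four examples below are hypothetical.

```python
def slack_variables(X, y, w, b):
    """xi_i = max(0, 1 - y_i * (w . x_i + b)) for each example."""
    return [max(0.0, 1.0 - yi * (sum(wj * xj for wj, xj in zip(w, x)) + b))
            for x, yi in zip(X, y)]


def soft_margin_objective(X, y, w, b, C):
    xi = slack_variables(X, y, w, b)
    return 0.5 * sum(wj * wj for wj in w) + C * sum(xi)


# hypothetical hyperplane x1 = 0 with margin boundaries at x1 = -1 and x1 = +1
w, b = (1.0, 0.0), 0.0
X = [(2.0, 0.0), (0.5, 0.0), (-0.5, 0.0), (-3.0, 0.0)]
y = [1, 1, 1, -1]

xi = slack_variables(X, y, w, b)
misclassified = sum(1 for s in xi if s > 1)  # xi > 1 means the example is on the wrong side
print(xi, misclassified, soft_margin_objective(X, y, w, b, C=1.0))
```

The second example lies inside the margin (ξ = 0.5) but is classified correctly; only the third (ξ = 1.5) is misclassified.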

b) Assume you use the kNN approach for a classification problem. Devise a procedure to

select a good value for the parameter k—the number of nearest neighbors used to classify

an example! [4]
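One common procedure is to estimate the accuracy of each candidate k with cross-validation and keep the best one. The sketch below assumes leave-one-out cross-validation, Euclidean distance, plain majority voting, and ties between equally accurate k values broken toward the smallest candidate; `knn_predict`, `select_k`, and the toy dataset are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Majority vote among the k training examples closest to query."""
    neighbors = sorted(train, key=lambda t: math.dist(t[0], query))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


def select_k(data, candidates):
    """Leave-one-out accuracy for each candidate k; returns (best_k, scores)."""
    scores = {}
    for k in candidates:
        correct = 0
        for i, (x, label) in enumerate(data):
            rest = data[:i] + data[i + 1:]  # hold out example i
            correct += knn_predict(rest, x, k) == label
        scores[k] = correct / len(data)
    best = max(candidates, key=lambda k: scores[k])  # first maximum = smallest k on ties
    return best, scores


data = [((0,), "a"), ((1,), "a"), ((2,), "a"), ((10,), "b"), ((11,), "b"), ((12,), "b")]
best_k, scores = select_k(data, [1, 3, 5])
print(best_k, scores)
```

On this toy data, k = 5 always lets the other class outvote the held-out example, so its estimated accuracy drops to 0; in practice k-fold cross-validation on a larger dataset gives a more reliable estimate.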

c) Compare kNN, decision trees, and SVMs with respect to the shape and number of decision boundaries they use when solving classification problems. [3]

SVM: one decision boundary, a single line (hyperplane); decision trees: n decision boundaries, axis-parallel lines; kNN: n decision boundaries, edges of Voronoi polygons.


d) Describe two methods to enhance the classical kNN approach to obtain better

accuracies. [4]
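One frequently used enhancement is distance-weighted voting, where closer neighbors count more than distant ones. The sketch below assumes weights of 1/d² with a small `eps` to avoid division by zero; the function names and the 1D training data are hypothetical. It contrasts weighted voting with plain majority voting on a query whose single closest neighbor disagrees with the two farther ones.

```python
import math
from collections import Counter, defaultdict


def nearest(train, query, k):
    return sorted(train, key=lambda t: math.dist(t[0], query))[:k]


def majority_knn_predict(train, query, k):
    return Counter(label for _, label in nearest(train, query, k)).most_common(1)[0][0]


def weighted_knn_predict(train, query, k, eps=1e-12):
    votes = defaultdict(float)
    for x, label in nearest(train, query, k):
        votes[label] += 1.0 / (math.dist(x, query) ** 2 + eps)  # closer neighbors weigh more
    return max(votes, key=votes.get)


train = [((1,), "A"), ((-2,), "B"), ((2,), "B"), ((100,), "A")]
print(majority_knn_predict(train, (0,), k=3), weighted_knn_predict(train, (0,), k=3))  # B A
```

With k = 3, the neighbors of 0 are at distances 1 (A), 2 (B), and 2 (B): majority voting returns B, while the weights 1 for A versus 0.25 + 0.25 for B make weighted voting return A.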

5) Spatial Data Mining [4]

What are the main challenges in mining spatial datasets? How does mining spatial datasets differ from mining business datasets?

Autocorrelation/Tobler’s first law [1], attribute space is continuous [0.5], no clearly defined transactions [0.5], complex spatial data types such as polygons [1], separation between spatial and non-spatial attributes [0.5], importance of putting results on maps [0.5], regional knowledge [0.5], a lot of objects/a lot of patterns [0.5], importance of scale/granularity of patterns [0.5], need to identify arbitrarily shaped objects [0.5].

At most 4 points!

6) PageRank [8]

a) Give the equation system that PAGERANK would set up for the webpage structure

given below. [4]

One error at most 3 points; two errors at most 1 point.

[Figure: link structure among the web pages P1, P2, P3, and P4]

b) What does the parameter d (the damping factor) model in PageRank’s equation systems? How does it impact the solution of the equation system? [4]

d models the probability that a random surfer follows an outgoing link rather than jumping to a random page; if d is high, the variance of the websites’ PageRanks is higher.
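The PageRank equation system can be solved by power iteration. This is a sketch under stated assumptions: it uses the normalized form PR(p) = (1 − d)/N + d · Σ PR(q)/out(q) over the pages q linking to p (some texts use (1 − d) instead of (1 − d)/N), assumes every page has at least one outgoing link, and runs on a hypothetical four-page link structure, since the exam's figure is not reproduced here. It also compares the spread of the ranks for two values of d.

```python
from statistics import pvariance


def pagerank(links, d=0.85, tol=1e-12, max_iter=1000):
    """Power iteration for PR(p) = (1 - d)/N + d * sum(PR(q) / out(q) for q linking to p)."""
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    in_links = {p: [q for q in pages if p in links[q]] for p in pages}
    for _ in range(max_iter):
        new = {p: (1 - d) / n + d * sum(rank[q] / len(links[q]) for q in in_links[p])
               for p in pages}
        if max(abs(new[p] - rank[p]) for p in pages) < tol:
            return new
        rank = new
    return rank


# hypothetical link structure: page -> set of pages it links to
LINKS = {"P1": {"P2", "P3"}, "P2": {"P3"}, "P3": {"P1"}, "P4": {"P3"}}

for d in (0.5, 0.85):
    ranks = pagerank(LINKS, d=d)
    print(d, {p: round(r, 4) for p, r in ranks.items()}, round(pvariance(ranks.values()), 5))
```

For this graph, raising d from 0.5 to 0.85 widens the gap between the page without in-links (P4) and the most-linked page (P3), so the variance of the ranks increases.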

7) Essay Question [11]

Assume you are working for a major restaurant chain, such as P.F. Chang’s or Olive

Garden. Write an essay to convince the owner of the restaurant chain to use data mining

to enhance the success of the restaurant chain! Limit your discussion to 10-18 sentences!
