

Flinders University

School of Computer Science, Engineering and Mathematics

Bleeding Effects for Flinders Endoscopic Sinus Surgery Simulator

by

Lee-Ying Wu

A Progress Report for the Degree of Bachelor of Engineering - Software (Honours)

March 2013

Supervisor: Greg Ruthenbeck, Flinders University, Adelaide, Australia


CONTENTS

Abstract
1. Introduction
1.1 Endoscopic sinus surgery
1.1.1 Issues in the ESS training
1.2 Virtual reality surgical simulators
1.2.1 Existing ESS models/simulators
1.3 Related studies of bleeding effects
1.3.1 Temporal bone surgical simulation
1.3.2 Layered surface fluid simulation
1.3.3 Laparoscopic adjustable gastric banding simulator
1.3.4 Smoothed particle hydrodynamics
1.3.5 Fast prototyping simulators with PhysX-enabled GPU
1.3.6 Bleeding for virtual hysteroscopy
1.4 Flinders ESS simulator
1.5 Aim of the project
2. Methodology
2.1 Tool
2.1.1 DirectX 11 API
2.1.1.1 DirectX 11
2.1.1.2 Rendering pipeline
2.1.1.3 HLSL
2.1.2 Parallel computing architecture – CUDA
2.1.3 Haptic device
2.2 Approach
2.2.1 Texture-based blood simulation technique
2.2.1.1 Creation of CUDA 2D texture structure
2.2.1.2 Interoperability between Direct3D and CUDA
2.2.1.3 CUDA kernel computation and HLSL program
2.2.2 Bleeding simulation - particle system
2.2.2.1 Direct3D initialisation and interoperability with CUDA
2.2.2.2 Emission and destruction of particles
2.2.2.3 CUDA kernel call to update particle details
2.2.2.4 Render-to-texture
2.2.2.5 CUDA kernel call to blur the off-screen texture
2.2.2.6 Alpha blending
2.2.2.7 Haptic force feedback
3. Progress result
3.1 Texture-based blood simulation
3.2 Bleeding simulation
4. Future work
5. Conclusion
Appendix
A.1 Acronyms
References


FIGURES

Figure 1: A doctor performing endoscopic sinus surgery (Perkes, 2008).
Figure 2: Images of sinus anatomy before and after performing ESS. The BEFORE image circles the area where the thin, delicate bone and mucous membranes will be removed. The circled area blocks the drainage pathways of the sinuses. The AFTER image shows the removal of the circled area (http://www.orclinic.com/information/ente_PostOpSinusSurgery, 2012).
Figure 3: (A) Real patient and (B) virtual endoscopic close-up showing similarities such as the mucosal band lateral to the middle turbinate, indicated by arrows (Parikh et al., 2009).
Figure 4: The endoscopic sinus surgery simulator built by Lockheed Martin, Akron, Ohio (Fried et al., 2007).
Figure 5: Fluid rendering of the blood from the drilled area during a mastoidectomy simulation (Kerwin et al., 2009).
Figure 6: The left side of a real patient’s turbinate (left); the middle turbinate model rendered in the ESS simulator (right) (Ruthenbeck et al., 2011).
Figure 7: Two haptic devices are used to provide control over the surgical instrument and the endoscopic camera (Ruthenbeck, 2010).
Figure 8: Spreading of the bleeding area during ESS, shown from left to right (the video is provided by the Ear, Nose and Throat (ENT) services at Flinders Private Hospital (FPH)).
Figure 9: The progression (left to right) of blood flow (circled by the blue line) during ESS (the video is provided by the ENT services of FPH).
Figure 10: The stages of the rendering pipeline, with GPU memory resources on the side (Luna, 2012).
Figure 11: Novint Falcon haptic device (Block, 2007).
Figure 12: The activity diagram of the texture-based simulation. The dash-line-circled area is the do-while looping part of the simulation.
Figure 13: The activity diagram of building interoperability between Direct3D and CUDA. The dash-line-circled area is the rendering looping part of the simulation (Sanders and Kandrot, 2010).
Figure 14: The activity diagram of the bleeding simulation. The dash-line-circled area is the do-while looping part of the simulation.
Figure 15: a) Resultant texture calculated by a CUDA kernel. b) Final texture after blending the tissue texture with the CUDA-computed texture (Figure 15a).
Figure 16: The C# particle simulation.
Figure 17: The Direct3D bleeding simulation.

TABLES

Table 1: Description of D3D11_BUFFER_DESC flags (NVIDIA, 2013).


Abstract

This progress report presents two approaches to simulating bleeding effects for the endoscopic sinus surgery (ESS) simulator. The first approach is a texture-based blood simulation, which models the growth and radial spread of the blood pattern from a central cut point. The second approach uses a particle system to simulate blood flow when the user clicks the left mouse button. Gravity accelerates the blood, which flows downward and accumulates at the bottom of the simulation panel. Because blood aspiration is not implemented, the accumulated blood eventually disappears after a period of time. A CUDA kernel computes the velocity and position of the particles on the Graphics Processing Unit (GPU). This parallel computation keeps the simulation responsive and interactive.
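The particle behaviour described above can be illustrated with a minimal C++ sketch. The particles are emitted at a cut point, accelerated by gravity, collected at the bottom of the panel and removed after a fixed time. This is not the project's CUDA implementation. The names `BloodParticle`, `emitBlood` and `updateParticles`, the gravity value, the 5-second lifetime and the explicit Euler integration are all assumptions made for illustration. Each pass of the update loop stands in for the work one CUDA thread would do for one particle.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical 2D blood particle; y increases upwards and the panel bottom is y = 0.
struct BloodParticle {
    float x, y;    // position (panel units)
    float vx, vy;  // velocity (panel units per second)
    float age;     // seconds since emission
};

constexpr float kGravity = -9.8f;  // assumed downward acceleration
constexpr float kLifetime = 5.0f;  // assumed seconds before a particle is destroyed

// Emit `count` particles at the cut point (cx, cy), e.g. on a left mouse click.
void emitBlood(std::vector<BloodParticle>& ps, float cx, float cy, int count) {
    for (int i = 0; i < count; ++i) {
        // Small horizontal spread so the particles do not all follow the same path.
        const float vx = 0.1f * static_cast<float>(i - count / 2);
        ps.push_back({cx, cy, vx, 0.0f, 0.0f});
    }
}

// One explicit Euler step over all particles. In a CUDA version, each particle
// would be updated by its own thread instead of by this sequential loop.
void updateParticles(std::vector<BloodParticle>& ps, float dt) {
    assert(dt > 0.0f);
    for (auto& p : ps) {
        p.vy += kGravity * dt;
        p.x += p.vx * dt;
        p.y += p.vy * dt;
        if (p.y <= 0.0f) {  // reached the bottom of the panel: accumulate there
            p.y = 0.0f;
            p.vx = 0.0f;
            p.vy = 0.0f;
        }
        p.age += dt;
    }
    // No aspiration is modelled, so particles older than kLifetime are removed.
    ps.erase(std::remove_if(ps.begin(), ps.end(),
                            [](const BloodParticle& p) { return p.age > kLifetime; }),
             ps.end());
}
```

With a fixed time step such as 0.1 s per frame, emitted particles fall and come to rest on the bottom edge. They disappear once their age exceeds the assumed lifetime.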

The Future work section describes the techniques to be implemented in the remainder of the project. On completion of the project, users should be able to use the Falcon haptic device to interact with the bleeding simulation in real time.


1. Introduction

This study aims to develop bleeding effects for the endoscopic sinus surgery simulator built by Ruthenbeck et al. (2011). Many studies indicate that a surgical simulator is needed for endoscopic sinus surgery (ESS) because surgical training lacks teaching resources (Ooi and Witterick, 2010, Wiet et al., 2011). Ruthenbeck et al. (2013) have successfully developed a new computer-based training tool for practising ESS, which provides a virtual-reality environment of the ESS procedure. The simulator is intended as an educational tool for teaching paranasal sinus anatomy and basic endoscopic sinus surgery techniques to ear, nose, and throat surgeons and residents (Ooi and Witterick, 2010). Its precision force feedback (haptic) devices let trainees use movements that closely mimic those of the actual procedure (Ruthenbeck et al., 2013). Advances in graphics technology and growing computational power have made many surgical simulation techniques achievable (Kerwin et al., 2009, Halic et al., 2010). Simulating bleeding effects is expected to increase user engagement with the ESS simulator and to bring the simulation closer to the real surgery.

The rest of the report is organised as follows. Section 1 introduces ESS, virtual reality surgical simulators, previous studies of bleeding effects, the Flinders ESS simulator and the project aim. Section 2 describes the simulation methodology and implementation details. Section 3 gives the progress results of the bleeding simulation. Section 4 outlines future work, and Section 5 draws conclusions.

1.1 Endoscopic sinus surgery

Endoscopic sinus surgery is a technique used to remove blockages in the sinuses (Slowik, 2012). Sinuses are air-filled spaces within some of the bones of the skull (Slowik, 2012). Blockages cause sinusitis, a condition in which the sinuses swell and become clogged, causing pain and impaired breathing (Slowik, 2012).

Occasionally, infections are recurrent or do not respond to medication (Laberge, 2007). In such cases, surgery to enlarge the openings that drain the sinuses is an alternative. Figure 1 shows ESS being performed. The procedure involves inserting an endoscope, a very thin fibre-optic tube, into the nose to examine the openings into the sinuses directly. The surgeon then locates the middle turbinate, the most important landmark for the ESS procedure (Laberge, 2007). The uncinate process lies on the side of the nose at the level of the middle turbinate. The surgeon removes the uncinate process together with abnormal and obstructive tissue (Laberge, 2007). In most cases, the procedure is performed entirely through the nostrils and leaves no external scars. Figure 2 (AFTER) shows the sinus anatomy after removal of the thin, delicate bone and mucous membranes that block the drainage pathways of the sinuses.


Figure 1: A doctor performing endoscopic sinus surgery (Perkes, 2008).

Figure 2: Images of sinus anatomy before and after performing ESS. The BEFORE image circles the area where the thin, delicate bone and mucous membranes will be removed. The circled area blocks the drainage pathways of the sinuses. The AFTER image shows the removal of the circled area (http://www.orclinic.com/information/ente_PostOpSinusSurgery, 2012).

However, ESS also carries substantial risks, and the key to safe surgery lies in adequate training (McFerran et al., 1998). These surgical techniques are traditionally learned by performing them on cadaveric specimens in a dissection laboratory. This allows residents to integrate knowledge of regional anatomy with surgical procedural techniques (Kerwin et al., 2009).


1.1.1 Issues in the ESS training

Because of the need to improve patient safety and reduce the threat of litigation, trainees have few opportunities to learn by operating on real patients (Ooi and Witterick, 2010). Ooi and Witterick (2010) also indicate that restrictions on working hours may leave trainees without enough cases to feel competent in performing ESS by the end of their training period. Trainees who have not acquired sufficient skills in basic ESS may then be unable to progress to more advanced sinus surgery cases during training (Ooi and Witterick, 2010). Increasing the training time is an alternative, but it requires the supervision of junior consultants for an additional period of time (Ooi and Witterick, 2010). Furthermore, once a training procedure is completed on a cadaver or a live subject, it cannot be repeated. The tissue has been permanently altered, so the procedure cannot be erased and restarted (Fried et al., 2007). Because of these issues, Ooi and Witterick (2010) have identified the need to use surgical simulators to improve surgical training.

1.2 Virtual reality surgical simulators

Multiple factors have made surgical simulators an attractive option for teaching, training and assessment (Ooi and Witterick, 2010). These factors include the move toward competency-based training and pressure arising from medical litigation and patient safety concerns. Surgical simulators offer significant advantages over traditional teaching and training on patients (Ooi and Witterick, 2010). The advantages of using surgical simulators are as follows (Kerwin et al., 2009, Bakker et al., 2005, Ooi and Witterick, 2010):

a. Can be used as a teaching tool
b. Have the potential to facilitate learning
c. Increase exposure to difficult scenarios
d. Reduce cost
e. Shorten learning curves
f. Cause no harm to patients
g. Allow learners to make mistakes
h. Enable standardised training
i. Allow repeated assessment of technical skills

Many studies have shown improvements in technical skills after training with surgical simulators (Fitzgerald et al., 2008, Ooi and Witterick, 2010). For example, Verdaasdonk et al. (2008) report positive results for specific in-theatre operative skills after simulator training. Ahlberg et al. (2007) show that competency-based virtual reality (VR) training significantly reduced the error rate when residents performed their first 10 laparoscopic cholecystectomies. Gurusamy et al. (2008) indicate that simulators can shorten the learning curve for novice trainees. Simulators also allow trainees to reach a predefined level of competency before operating on patients.


1.2.1 Existing ESS models/simulators

Endoscopic sinus surgery is a sub-specialty well suited to surgical simulation (Ooi and Witterick, 2010). Bakker et al. (2005) found that residents considered two tasks the most difficult: recognising anatomy and learning to form a three-dimensional mental representation of it. Manual skills such as manipulating an endoscope were much easier. Consequently, models that simulate realistic endoscopic anatomy may be the more important element in developing ESS simulators (Ooi and Witterick, 2010).

Parikh et al. (2009) present a new tool for producing three-dimensional (3D) reconstructions of computed tomographic (CT) data. The tool allows the rhinologic surgeon to interact with the data in an intuitive, surgically meaningful manner. Creating and manipulating sinus anatomy from CT data provides a means of exploring patient-specific anatomy (Parikh et al., 2009). Figure 3 shows the virtual surgical environment (VSE) created by Parikh et al. (2009). The VSE can potentially provide an intuitive experience that mimics the views and access expected during ESS. Its tactile (haptic) feedback adds a further dimension of realism. Surgeons can use the system to rehearse patient-specific operations before operating on the real patient, with room to make mistakes and experiment without harming anyone. However, Parikh et al. (2009) acknowledge several limitations. These include a lack of tissue manipulation, such as bleeding effects, and unrealistic rendering of sinus instruments.

Figure 3: (A) Real patient and (B) virtual endoscopic close-up showing similarities such as the mucosal band lateral to the middle turbinate, indicated by arrows (Parikh et al., 2009).

Fried et al. (2007) report that the Department of Defense contractor Lockheed Martin, Inc. (Akron, Ohio) has developed a training device, the ESS simulator (ES3). As shown in Figure 4, it provides both visual and haptic (force) feedback in a VR environment. The Lockheed Martin simulation development team built a physical space with embedded VR elements that allows single or multiple tasks to be analysed (Fried et al., 2007). The ES3 simulates bleeding and an image-blurring effect similar to that of a dirty endoscope during the procedure. ES3 simulations offer three modes: novice, intermediate and advanced. Force feedback and bleeding effects are introduced in the advanced mode. Several studies have tested and validated the ES3 (Ooi and Witterick, 2010). Medical students felt that using the ES3 gave them significant training benefits (Glaser et al., 2006), and the ES3 can be an effective tool for teaching sinonasal anatomy (Solyar et al., 2008).

Figure 4: The endoscopic sinus surgery simulator built by Lockheed Martin, Akron, Ohio (Fried et al., 2007).

1.3 Related studies of bleeding effects

Multiple studies have identified the need to incorporate bleeding effects into surgical simulators (Halic et al., 2010, Kerwin et al., 2009, Borgeat et al., 2011, Wiet et al., 2011, Pang et al., 2010). The following sub-sections summarise some important simulators that implement bleeding effects, including each study's motivation and approach.

1.3.1 Temporal bone surgical simulation

As in many surgical procedures, temporal bone surgery involves bleeding, in this case from vessels inside bony structures (Kerwin et al., 2009). Drilling into a major blood vessel causes heavy bleeding, and the error must be corrected immediately (Kerwin et al., 2009). However, traditional training uses either cadaver bone or a synthetic bone substitute, and neither contains blood, so drilling produces no blood flow. In the mastoidectomy simulator of Kerwin et al. (2009), blood flow is introduced into the fluid during drilling. A real-time fluid flow algorithm is integrated with the volume rendering to simulate the movement of blood.


The simulator lets users experience haptic feedback and appropriate sound cues while handling virtual bone-drilling and suction/irrigation devices. The fluid rendering technique includes bleeding effects, meniscus rendering and refraction. The simulator demonstrates that two-dimensional fluid dynamics can be implemented in a surgical simulator. Graphics Processing Unit (GPU) technology computes the fluid dynamics efficiently in real time, so the simulator maintains the high frame rate required for effective user interaction (Kerwin et al., 2009). The rendering speed consistently exceeds the target of 20 frames per second (FPS).

Kerwin et al. (2009) identify the following advantages of including bleeding effects:

a. It encourages engagement between the simulation and users.

b. It brings the simulation closer to the real surgery.

c. It lets users make mistakes that have immediate consequences, which makes errors easy to observe. This could be an essential means of maximising the transfer of skills from simulation to practice.

Figure 5: Fluid rendering of the blood from the drilled area during a mastoidectomy simulation (Kerwin et al., 2009).

1.3.2 Layered surface fluid simulation

Borgeat et al. (2011) present an approach to layered surface fluid simulation integrated into a simulator for brain tumour resection. The method combines a surface-based water-column fluid simulation model with multi-layer depth peeling. This allows realistic and efficient simulation of bleeding on complex surfaces undergoing topology modification (Borgeat et al., 2011). The simulator provides full haptic feedback for both hands, and blood propagates and accumulates quickly over the entire scene. In the brain tumour resection simulation, users can perform blood aspiration, tissue cauterisation and tissue cleaning.


1.3.3 Laparoscopic adjustable gastric banding simulator

In actual surgical procedures, smoke and bleeding caused by cauterisation give the surgeon important visual cues (Halic et al., 2010). Trainee surgeons must recognise these cues and make time-critical decisions. Smoke and bleeding cues, including the handling of bleeding, are therefore used as assessment factors in surgical education (Halic et al., 2010). Halic et al. (2010) have developed a low-cost method to generate realistic bleeding and smoke effects for surgical simulators. The method offloads the computation to the GPU, which frees the central processing unit (CPU) for other critical tasks.

In this method, animation variables determine how fast bleeding spreads over a surface (Halic et al., 2010). These variables are then passed to the vertex and pixel shaders, which perform the rendering on the GPU. Bleeding starts when tissue is cut, and its initial amount depends on the density of blood vessels at the cautery tool tip.

1.3.4 Smoothed particle hydrodynamics

Müller et al. (2004) propose an approach based on Smoothed Particle Hydrodynamics (SPH) to simulate blood with free surfaces. The force density fields are derived from the Navier-Stokes equations, and a term modelling surface tension is added to the SPH calculations. Their approach uses 3000 particles to simulate bleeding effects in interactive surgical training systems.
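The following C++ sketch shows the density estimate at the core of SPH, as background for the approach above. Each particle's density is a kernel-weighted sum of the masses of nearby particles: rho_i = sum_j m_j W(|x_i - x_j|, h), where h is the smoothing radius. The sketch uses the poly6 kernel, a common choice in interactive SPH. It is illustrative only and is not Müller et al.'s implementation. It omits the pressure, viscosity and surface-tension forces and uses a brute-force neighbour loop. The names `Particle`, `poly6` and `computeDensities` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical SPH particle: 3D position, mass, and the density computed below.
struct Particle {
    double x, y, z;
    double mass;
    double density;
};

// Poly6 smoothing kernel evaluated from the squared distance r2:
// W(r, h) = 315 / (64 * pi * h^9) * (h^2 - r^2)^3 for r <= h, and 0 otherwise.
double poly6(double r2, double h) {
    assert(h > 0.0);
    const double kPi = 3.14159265358979323846;
    const double h2 = h * h;
    if (r2 > h2) return 0.0;
    const double d = h2 - r2;
    return 315.0 / (64.0 * kPi * std::pow(h, 9)) * d * d * d;
}

// Density estimate rho_i = sum_j m_j * W(|x_i - x_j|, h), including the particle itself.
// Brute force O(n^2); a practical solver would find neighbours with a spatial grid.
void computeDensities(std::vector<Particle>& particles, double h) {
    for (auto& a : particles) {
        double rho = 0.0;
        for (const auto& b : particles) {
            const double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
            rho += b.mass * poly6(dx * dx + dy * dy + dz * dz, h);
        }
        a.density = rho;
    }
}
```

Particles closer together than h raise each other's density. In a full SPH solver, these densities then determine the pressure forces that push the fluid apart.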

1.3.5 Fast prototyping simulators with PhysX-enabled GPU

Pang et al. (2010) describe their experience of fast prototyping a bleeding simulation on a PhysX-enabled GPU. The PhysX engine provides an Application Programming Interface (API) that supports fast physics processing and calculation. The bleeding simulation takes patient behaviour and mechanical dynamics into account. Realistic bleeding effects are achieved with the built-in SPH-based fluid solver of PhysX and appropriately assigned parameters. The results show that the approach achieves interactive frame rates and convincing visual effects.

1.3.6 Bleeding for virtual hysteroscopy

Zátonyi et al. (2005) present graphical fluid solvers for simulating bleeding in a hysteroscopic simulator. The bleeding simulation must respond to the actions of the operating surgeon. The solvers work best in the two-dimensional domain. Zátonyi et al. (2005) have extended them to three-dimensional fluid solving, using parallelisation to meet the real-time requirements.


1.4 Flinders ESS simulator

Ruthenbeck et al. (2013) have developed an endoscopic sinus surgery simulator. It aims to be a realistic computer-based training tool with which medical trainees can develop surgical skills. The simulator can accurately reproduce the interactions between surgical instruments and sinus tissue. X-ray computed tomography (CT) scan images were used to create accurate 3D models of patients’ sinuses, as shown in Figure 6 (right) (Ruthenbeck et al., 2011). The tissue simulation models real-time tissue deformation, such as cutting and resection. The deformable tissue is rendered using parallel computation on the GPU.

Figure 6: The left side of a real patient’s turbinate (left); the middle turbinate model rendered in the ESS simulator (right) (Ruthenbeck et al., 2011).

The simulator achieves high-fidelity tactile interaction and provides eight surgical instruments. Two haptic devices control the surgical instrument and the endoscopic camera (Figure 7). The interaction between the instrument and the tissue produces the haptic force feedback. While performing endoscopic sinus surgery tasks in the simulator, trainees must both navigate the sinus anatomy and cut tissue.


Figure 7: Two haptic devices are used to provide control over the surgical instrument and the endoscopic camera (Ruthenbeck, 2010).

1.5 Aim of the project

The project aims to develop a method for simulating bleeding effects in the Flinders endoscopic sinus surgery simulator. Two approaches have been investigated. The first approach, texture-based blood simulation, models how the blood pattern spreads when the user cuts the tissue surface. Figure 8 shows blood spreading after a cut on real tissue, which is the behaviour this approach aims to reproduce. Integrated into the ESS simulator, it could generate a blurred, reddish cut edge. The second approach simulates blood flow with a particle system. The circled areas in Figure 9 show the progression of blood flow that the particle system aims to reproduce. The simulation will model bleeding effects on a 2D tissue model, with potential extension to a 3D model. Haptic feedback will also be incorporated, so the user can cut the tissue model with the stylus of a haptic device as the surgical instrument. Bleeding and blood flow will then appear around the cut area. In future work, the bleeding simulation will be integrated into the ESS simulator.
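The first approach, a blood pattern spreading outward from a cut, can be pictured as a per-texel computation over a 2D texture. The C++ sketch below illustrates this under assumed rules. The radius of the blood front grows linearly with time at a hypothetical `spreadSpeed`. Each texel's intensity falls off linearly from 1 at the cut point to 0 at the front. The function name `bloodTexture` and this fall-off model are assumptions, not the project's CUDA kernel. Every texel is computed independently, so the double loop maps naturally onto one GPU thread per texel.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch: returns a width x height single-channel texture (row-major)
// of blood intensity in [0, 1], spreading radially from the cut point (cutX, cutY).
std::vector<float> bloodTexture(int width, int height, float cutX, float cutY,
                                float time, float spreadSpeed) {
    assert(width > 0 && height > 0 && spreadSpeed > 0.0f);
    std::vector<float> tex(static_cast<std::size_t>(width) * height, 0.0f);
    const float radius = spreadSpeed * time;  // assumed linear growth of the blood front
    if (radius <= 0.0f) return tex;           // nothing has spread yet
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float d = std::hypot(static_cast<float>(x) - cutX,
                                       static_cast<float>(y) - cutY);
            // Full intensity at the cut point, fading linearly to zero at the front.
            tex[static_cast<std::size_t>(y) * width + x] = std::max(0.0f, 1.0f - d / radius);
        }
    }
    return tex;
}
```

Calling the function with increasing `time` values produces a red region that grows from the cut point. The resulting texture could then be blended with the tissue texture.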


Figure 8: Spreading of the bleeding area during ESS, shown from left to right (the video is provided by the Ear, Nose and Throat (ENT) services at Flinders Private Hospital (FPH)).

Figure 9: The progression (left to right) of blood flow (circled by the blue line) during ESS (the video is provided by the ENT services of FPH).


2. Methodology

The major goal of this study is to create realistic visual bleeding effects without placing excessive additional load on the CPU. Halic et al. (2010) emphasise that virtual-reality-based surgical simulators must be interactive, so computation must be performed in real time. To be interactive, the visual display must be updated at least at 30 Hz, and haptic information must be refreshed at 1 kHz (Halic et al., 2010). Achieving these refresh rates is challenging (Halic et al., 2010). A surgical simulator usually includes multiple components, such as surgical scene rendering, collision detection, computation of tissue response and generation of haptic feedback. Together, these components consume significant computational effort. As a result, bleeding simulation is often ignored or highly simplified. Performing the bleeding computation on the GPU instead leaves the CPU free for these more critical tasks. The following sections describe the tools and approaches used to maximise utilisation of the GPU and so achieve the study goal.
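The refresh-rate requirements above translate directly into time budgets. A 30 Hz display leaves about 1000/30 ≈ 33.3 ms per frame, while a 1 kHz haptic loop leaves 1 ms per update. Roughly 33 haptic updates therefore occur during each visual frame. The small C++ sketch below makes this arithmetic explicit. The function `fitsFrameBudget` and the per-component costs used with it are hypothetical. They only show why the CPU-side work in each frame must stay within the budget.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Time available per update, in milliseconds, for a refresh rate in Hz.
double budgetMs(double rateHz) {
    assert(rateHz > 0.0);
    return 1000.0 / rateHz;
}

// Hypothetical check: do the per-frame costs (in ms) of the simulator's components,
// e.g. scene rendering, collision detection and tissue response, fit in one frame?
bool fitsFrameBudget(const std::vector<double>& componentCostsMs, double rateHz = 30.0) {
    const double total =
        std::accumulate(componentCostsMs.begin(), componentCostsMs.end(), 0.0);
    return total <= budgetMs(rateHz);
}
```

For example, components costing 10, 12 and 8 ms fit within the 33.3 ms frame, but adding a 5 ms CPU-side bleeding computation would not. Moving that computation to the GPU keeps it out of this CPU-side total.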

2.1 Tool

The bleeding simulation is developed with C++, Microsoft Visual Studio, C#, Subversion, DirectX 11, High Level Shader Language (HLSL), CUDA and the Novint Falcon haptic device. Sections 2.1.1 to 2.1.3 briefly introduce the DirectX 11 API, parallel computing with CUDA, and haptic devices.

Most of the simulation is written in C++ with the DirectX 11 (Direct3D) graphics library, in the Microsoft Visual Studio Integrated Development Environment (IDE). The object-oriented language C# is used for fast prototyping, because as a Java-like language it makes rapid application development relatively straightforward. In contrast, creating graphical effects with DirectX 11 is relatively complicated. It involves handling both low- and high-level graphics libraries as well as HLSL. Each effect planned for the bleeding simulation is therefore first created and tested in a C# application. Once that application reproduces the effect, it serves as a prototype and is converted into the C++ codebase. For example, the fluid dripping effect was first created and tested in C#. Once the C# prototype could simulate plausible fluid dripping, it was converted into the Direct3D application.

The Direct3D applications are regularly backed up using Subversion. Subversion is a software versioning and revision control system that maintains current and historical versions of files such as source code and documentation.


2.1.1 DirectX 11 API

2.1.1.1 DirectX 11

DirectX 11 is a C++ rendering library for building high-performance 3D graphics applications (Luna, 2012). The terms DirectX 11 and Direct3D are used interchangeably in this study. DirectX 11 exploits the capabilities of modern graphics hardware on the Windows platform. As a low-level library, it can closely manipulate the underlying graphics hardware. Its main consumer is the games industry. DirectX 11 also provides a compute shader API for creating general-purpose GPU (GPGPU) applications, which offload work to the GPU for parallel computation (Luna, 2012). In addition, DirectX 11 supports tessellation and multi-threading. Multi-threading lets developers create applications that better utilise multi-core processors (Hanners, 2008).

2.1.1.2 Rendering pipeline

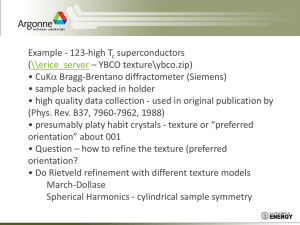

Luna (2012) describes the DirectX 11 rendering pipeline, shown in Figure 10, as the sequence of stages that generates a 2D image from what the virtual camera sees. An arrow from the GPU resource pool to a stage means that the stage can read those resources as input; for example, the input assembler reads the vertex and index buffers stored in GPU memory. An arrow from a stage to the GPU resources means that the stage writes to GPU memory; for example, the output merger stage writes to the back buffer and the depth/stencil buffer. In general, most stages do not write to GPU resources (Luna, 2012). The output of each stage is fed as input to the following stage. A brief description of each stage follows (Luna, 2012):

a. The input assembler stage reads geometry data (vertices and indices) from GPU memory and uses it to assemble geometric primitives such as triangles.

b. The vertices assembled by the input assembler are fed into the vertex shader stage. The vertex shader is a function that receives vertices as input and outputs vertices and other per-vertex data. To help readers new to shading languages, the following sequential code conceptually represents the work performed in the vertex shader stage:

    for (UINT i = 0; i < vertexNum; i++)
    {
        oVertex[i] = VertexShader(iVertex[i]);
    }

In practice, however, the GPU executes the shader for many vertices concurrently, which makes the vertex shader stage very fast.

c. The tessellation stages (hull shader, tessellator and domain shader) are optional and subdivide the triangles of a mesh into new triangles, which are used to create finer mesh detail. Tessellation stages are new to Direct3D 11; they provide a new approach for performing tessellation on the GPU, so it is very fast as well.

Figure 10: The stages of the rendering pipeline, with GPU memory resources on the side (Luna, 2012). [Stages: Input Assembler, Vertex Shader, Hull Shader, Tessellator, Domain Shader, Geometry Shader, Stream Output, Rasteriser, Pixel Shader, Output Merger; GPU resources: buffers, textures.]

d. The geometry shader stage is optional. It receives entire primitives as input; for example, if triangle lists are being rendered, the input to the geometry shader is the three vertices that define a triangle. The geometry shader can create and destroy geometry: an input primitive can be expanded into one or more primitives. This differs from the vertex shader, which cannot create vertices. A common use of the geometry shader is expanding a point into a quad. Figure 10 shows that, through the stream output stage, the geometry shader can output vertex data into a buffer in GPU memory.

e. The rasteriser stage takes primitives described in a vector format and converts them into a raster image made of pixels for display on the screen.

f. The pixel shader stage runs a function written by the developer on the GPU. The pixel shader is executed for each pixel fragment and uses the interpolated vertex attributes as input to calculate a colour.

g. Once the pixel shader has processed the pixel fragments, the output merger (OM) stage processes them further. Some pixel fragments may be rejected by the depth or stencil test; the remaining fragments are written to the back buffer. Blending is also performed in the OM stage: instead of simply overwriting the pixel currently in the back buffer, a new pixel can be blended with it, which allows effects such as transparency.
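To make the sequential loop in item b concrete, the following CPU sketch applies a per-vertex function to every vertex of an array. The types Float4 and Float4x4, the row-vector convention (translation stored in the last matrix row, as in the D3DX math library) and the function names are assumptions for illustration; an actual vertex shader is written in HLSL and executed on the GPU.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-ins for shader vector/matrix types (assumption: row vectors).
using Float4 = std::array<float, 4>;
using Float4x4 = std::array<std::array<float, 4>, 4>;

// Row vector times matrix: out[j] = sum_i v[i] * m[i][j].
Float4 Mul(const Float4& v, const Float4x4& m) {
    Float4 out{0.0f, 0.0f, 0.0f, 0.0f};
    for (int j = 0; j < 4; ++j)
        for (int i = 0; i < 4; ++i)
            out[j] += v[i] * m[i][j];
    return out;
}

// Hypothetical "vertex shader": transforms one position by a combined
// world-view-projection matrix. It reads only its own vertex.
Float4 VertexShader(const Float4& position, const Float4x4& worldViewProj) {
    return Mul(position, worldViewProj);
}

// Sequential equivalent of the vertex stage; a GPU runs these calls concurrently.
std::vector<Float4> RunVertexStage(const std::vector<Float4>& in,
                                   const Float4x4& worldViewProj) {
    std::vector<Float4> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i)
        out[i] = VertexShader(in[i], worldViewProj);
    return out;
}
```

Because VertexShader() reads only its own input vertex, the loop iterations are independent, which is what allows the GPU to process vertices concurrently.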

2.1.1.3 HLSL

The vertex shader, geometry shader and pixel shader described in the previous section are written in the High Level Shading Language (HLSL) (Luna, 2012), a shading language developed by Microsoft (St-Laurent, 2005) for use with the Direct3D API. Shading languages give developers a programming language for defining custom shading algorithms.

Direct3D passes the vertex information to the graphics hardware, where a vertex shader is executed for each vertex. The vertex shader transforms the vertex from object space to view space, generates texture coordinates, and computes lighting coefficients such as the vertex's tangent, binormal and normal vectors. Once the vertex shader has finished, it passes the results to the rasteriser, which determines the screen pixels covered by each polygon, interpolates the vertex information and handles occlusion (St-Laurent, 2005). After the rasteriser has determined pixel coverage, the pixel shader is invoked for each screen pixel drawn (St-Laurent, 2005). The output of the pixel shader is blended into the frame buffer, which is then presented on the display.
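The rasteriser's coverage test and vertex-attribute interpolation can be illustrated with edge functions and barycentric weights, a common textbook formulation. The sketch below is hypothetical and simplified: it is not Direct3D's internal implementation, and it ignores perspective-correct interpolation and fill rules.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Twice the signed area of triangle (a, b, p); its sign says which side
// of the edge a->b the point p lies on.
float EdgeFunction(const Vec2& a, const Vec2& b, const Vec2& p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Returns true if pixel centre p is covered by triangle (v0, v1, v2) and,
// if so, writes the interpolated per-vertex attribute (a0, a1, a2) to out.
bool InterpolateAttribute(const Vec2& v0, const Vec2& v1, const Vec2& v2,
                          float a0, float a1, float a2,
                          const Vec2& p, float& out) {
    float area = EdgeFunction(v0, v1, v2);
    if (area == 0.0f) return false;              // degenerate triangle
    float w0 = EdgeFunction(v1, v2, p) / area;   // barycentric weight of v0
    float w1 = EdgeFunction(v2, v0, p) / area;   // barycentric weight of v1
    float w2 = EdgeFunction(v0, v1, p) / area;   // barycentric weight of v2
    if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f)     // pixel outside triangle
        return false;
    out = w0 * a0 + w1 * a1 + w2 * a2;           // weighted attribute blend
    return true;
}
```

Dividing by the signed area makes the weights sum to one for either triangle winding, so the interpolated value equals a vertex's attribute exactly at that vertex.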

2.1.2 Parallel computing architecture – CUDA

CUDA is a platform and programming model that facilitates parallel computing (Cook, 2012). It is a parallel programming interface created by NVIDIA in 2007 (Cook, 2012) that allows developers to program GPUs without learning complex shader languages, using GPUs to increase computing performance (Cook, 2012). GPUs have a parallel throughput architecture that executes many threads concurrently, whereas CPUs are designed to execute a single thread at very high speed (Cook, 2012).


CUDA supports industry-standard languages such as C++ and Fortran (Cook, 2012). It is an extension to the C language that allows developers to program GPUs in regular C. Third-party wrappers are also available for Python, Perl, Java, Ruby, Lua, Haskell, MATLAB and IDL, and Mathematica offers native support (Cook, 2012).

The computer game industry uses CUDA not only for graphics rendering but also for physics computations such as smoke, fire and fluid effects (Cook, 2012). CUDA is also used in other fields such as computational biology and cryptography (Cook, 2012). GPUs are well suited to algorithms that process large blocks of data in parallel. Applications of this parallel nature include fast sorting of large lists, the two-dimensional fast wavelet transform and molecular dynamics.

2.1.3 Haptic device

A Novint Falcon haptic device (Figure 11) is used in this study so that users can interact with the bleeding simulation in real time. The Novint Falcon, developed by Novint, is described as the world's first 3D touch device (Neal, 2008). It has been used in two primary industries: computer games and medical simulation. Novint Falcon devices provide users with a more immersive experience (Neal, 2008), because haptic devices let users feel objects and events through the sense of touch.

The Falcon's internal components include several motors and multiple sensors that work in conjunction with three external arms (Neal, 2008). The three moving arms join at a centralised grip (Figure 11), and each arm can move in and out of the Falcon's body. The removable grip, also called the stylus, is used to control the device. The 3 degree-of-freedom (3 DOF) grip allows users to move the stylus in three dimensions: left-right, forwards-backwards and up-down. To generate a realistic sense of touch, the sensors track the grip position to sub-millimetre resolution and the motors are updated at a 1 kHz rate (Block, 2007). These internal and external components work together to deliver a realistic sense of touch (Neal, 2008). As each of the three arms moves, an optical sensor attached to each motor tracks the arm's movement (Block, 2007). A mathematical Jacobian function is used to determine the position of a cursor in Cartesian coordinates, so the cursor position displayed on screen is determined by the positions of the arms. Developers can write an algorithm to calculate the force feedback that the user should experience; the calculated force is then applied to the grip by sending currents to the motors at the 1 kHz servo rate, producing an accurate sense of touch. The Falcon can generate a maximum force of 2 pounds at a frequency of 1 kHz (NOVINT, 2012).
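A common way to compute the force feedback mentioned above is a penalty (spring) model: when the grip penetrates a virtual surface, a restoring force proportional to the penetration depth is applied. The sketch below assumes a horizontal tissue surface, an illustrative stiffness value and a 2 lbf (about 8.9 N) limit; it does not use the HDAL or HEX APIs, and all names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Illustrative values only; not taken from the Falcon SDK.
constexpr float kMaxForceNewtons = 8.9f;  // about 2 lbf
constexpr float kStiffness = 500.0f;      // N/m, assumed tissue stiffness

struct Vec3 { float x, y, z; };

// Penalty force for a horizontal surface at height surfaceY: if the grip is
// below the surface, push it back up in proportion to the penetration depth
// (Hooke's law), clamped to the device's maximum force.
Vec3 ComputeContactForce(const Vec3& gripPos, float surfaceY) {
    float depth = surfaceY - gripPos.y;            // > 0 when below the surface
    if (depth <= 0.0f) return {0.0f, 0.0f, 0.0f};  // no contact, no force
    float f = std::min(kStiffness * depth, kMaxForceNewtons);
    return {0.0f, f, 0.0f};                        // force points upwards
}
```

In a haptic application such a function would be evaluated once per servo tick, that is about every 1 ms at the 1 kHz rate.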

The Novint Falcon software consists of a low-level layer and a software layer (Block, 2007). The low-level driver, the Haptic Device Abstraction Layer (HDAL), handles communication between the device and the computer. The software layer, Haptic Effects (HEX), is responsible for creating force effects. With the support of these layers, the weight and physics of objects can be reproduced so that users can sense an object's inertia and momentum (Block, 2007).


Figure 11: Novint Falcon haptic device (Block, 2007).

2.2 Approach

Two approaches to simulating blood effects are investigated. The texture-based blood simulation models the spreading of blood: it uses CUDA to update the pixel values of a texture and the Direct3D rendering pipeline to render the final texture. The second approach uses a particle system to produce blood flow when users cut the tissue. In the simulation, bleeding starts where the mouse click contacts the tissue and flows rapidly down the tissue surface. By design, the bleeding stops and the generated blood cluster disappears after a period of time, since blood aspiration is not implemented in this study. Future work will integrate the bleeding simulation into the ESS simulator.

The Smoothed Particle Hydrodynamics (SPH) method used by Müller et al. (2004) is not appropriate for this study. Müller et al. (2004) use SPH to simulate blood circulation in an artery, where the Navier-Stokes equations are well suited to modelling the rapid changes in advection and diffusion of circulating blood. However, blood is much stickier in the sinuses than in vessels. The ESS video provided by the Ear, Nose and Throat (ENT) services of Flinders Private Hospital (FPH) shows that the blood flows very slowly and quickly comes to a standstill in the scene until the instrument stirs it or the surgeon performs blood aspiration.

2.2.1 Texture-based blood simulation technique

The texture-based blood simulation aims to generate a blood effect in which the blood appears at the centre of the image and slowly spreads out as a growing pattern with a blurred edge. The blood pattern keeps spreading until its radius reaches a designed threshold, as shown in Figure 15b. Figure 12 shows the activity diagram of the texture-based simulation. The activities consist of initialising Direct3D, creating the CUDA 2D texture, building interoperability between Direct3D and CUDA, computing pixel values in a CUDA kernel, blending the CUDA 2D texture with the tissue texture, and rendering the scene. The dash-line-circled area in Figure 12 is the do-while loop of the simulation. The following sub-sections describe these activities in detail, and the texturing results are shown in Figure 15. The simulation is based on the CUDA C sample code named Simple D3D11 Texture, bundled with the NVIDIA GPU Computing SDK. The major modification to the source code is the computation of the pixel values of the CUDA 2D texture, detailed in Section 2.2.1.3.

Figure 12: The activity diagram of the texture-based simulation. The dash-line-circled area is the do-while looping part of the simulation. [Activities: Direct3D initialisation; create CUDA 2D texture structure; interoperate Direct3D with CUDA; CUDA kernel computes pixel values of the CUDA 2D texture; blend the tissue texture with the CUDA 2D texture; render the tissue texture with the spreading blood pattern.]

2.2.1.1 Creation of CUDA 2D texture structure

A data structure for the 2D texture shared between Direct3D and CUDA is created at the beginning of the program, as shown in the following code block. The variables in this structure facilitate building the interoperability between Direct3D and CUDA. The variables g_texture_2d.pTexture and g_texture_2d.cudaResource are used in the cudaGraphicsD3D11RegisterResource() function described in the following section: g_texture_2d.pTexture is a 2D texture interface that manages texel data, and g_texture_2d.cudaResource is a CUDA graphics resource. The variables g_texture_2d.cudaLinearMemory, g_texture_2d.pitch, g_texture_2d.width and g_texture_2d.height are prepared as parameters of the cudaMallocPitch() function, also described in the next section.

    struct
    {
        ID3D11Texture2D          *pTexture;
        ID3D11ShaderResourceView *pSRView;
        cudaGraphicsResource     *cudaResource;
        void                     *cudaLinearMemory;
        size_t                    pitch;
        int                       width;
        int                       height;
        int                       offsetInShader;
    } g_texture_2d;

2.2.1.2 Interoperability between Direct3D and CUDA

CUDA supports interoperability with Direct3D (Sanders and Kandrot, 2010). CUDA C extends the C language by allowing programmers to define C functions called kernels. When a kernel is called, it is executed N times in parallel by N different CUDA threads, unlike a regular C function, which executes once per call. Figure 13 shows the steps to build the interoperability between Direct3D and CUDA (Sanders and Kandrot, 2010); the dash-line-circled area is the rendering loop of the simulation. The texture-based simulation activities shown in Figure 12 apply these interoperability steps. The following paragraphs describe some of the important steps in Figure 13.
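The statement that a kernel runs N times in parallel by N threads can be illustrated on the CPU. In the sketch below, a hypothetical kernel body scales one array element; each invocation derives its global index from its block and thread indices (mirroring CUDA's blockIdx, blockDim and threadIdx), and a sequential loop stands in for the kernel launch. On a GPU the invocations would run concurrently.

```cpp
#include <cassert>
#include <vector>

// Minimal stand-in for CUDA's dim3 (x dimension only).
struct Dim { int x; };

// Body of a hypothetical kernel: each invocation handles one element.
void ScaleKernelBody(Dim blockIdx, Dim blockDim, Dim threadIdx,
                     float* data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n)                                      // grid may exceed n
        data[i] *= factor;
}

// Sequential stand-in for a launch such as ScaleKernel<<<grid, block>>>(...).
void LaunchScale(float* data, int n, float factor, int threadsPerBlock) {
    Dim blockDim{threadsPerBlock};
    Dim gridDim{(n + threadsPerBlock - 1) / threadsPerBlock};  // round up
    for (int b = 0; b < gridDim.x; ++b)
        for (int t = 0; t < blockDim.x; ++t)
            ScaleKernelBody(Dim{b}, blockDim, Dim{t}, data, n, factor);
}
```

The bounds check matters because the number of launched threads is rounded up to whole blocks, so some threads in the last block may have no element to process.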

Figure 13: The activity diagram of building interoperability between Direct3D and CUDA. The dash-line-circled area is the rendering looping part of the simulation (Sanders and Kandrot, 2010). [Steps: get a CUDA-enabled adapter; create a swap chain and device; register device with CUDA; register Direct3D resource with CUDA; map Direct3D resource for writing from CUDA; execute CUDA kernel; unmap Direct3D resource; draw the final result on the screen.]

To begin building the interoperability, the function cudaD3D11SetDirect3DDevice() records g_pd3dDevice as the Direct3D device to be used for Direct3D interoperability with the CUDA device, as shown in the following code segment (NVIDIA, 2013).

cudaD3D11SetDirect3DDevice(g_pd3dDevice);


The following code block first uses cudaGraphicsD3D11RegisterResource() to register the Direct3D resource g_texture_2d.pTexture for access by CUDA (NVIDIA, 2013). cudaMallocPitch() then allocates at least g_texture_2d.width * sizeof(float) * 4 * g_texture_2d.height bytes of linear memory on the device, returns a pointer to the allocated memory in g_texture_2d.cudaLinearMemory, and returns the padded row width in bytes in g_texture_2d.pitch. Finally, cudaMemset() fills the first g_texture_2d.pitch * g_texture_2d.height bytes of the memory area pointed to by g_texture_2d.cudaLinearMemory with the constant byte value 1.

    cudaGraphicsD3D11RegisterResource(&g_texture_2d.cudaResource,
                                      g_texture_2d.pTexture, cudaGraphicsRegisterFlagsNone);
    cudaMallocPitch(&g_texture_2d.cudaLinearMemory, &g_texture_2d.pitch,
                    g_texture_2d.width * sizeof(float) * 4, g_texture_2d.height);
    cudaMemset(g_texture_2d.cudaLinearMemory, 1,
               g_texture_2d.pitch * g_texture_2d.height);
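cudaMallocPitch() may pad each row so that rows start at aligned addresses, which is why the pitch, not width * 4 * sizeof(float), is used as the row stride when addressing the linear memory. The sketch below shows this addressing for an RGBA float image. RoundUpPitch() and its 512-byte alignment are assumptions that only mimic the idea; the actual pitch is chosen by the CUDA driver and must be taken from the value returned by cudaMallocPitch().

```cpp
#include <cassert>
#include <cstddef>

// Mimics row padding by rounding the row size up to an assumed alignment.
std::size_t RoundUpPitch(std::size_t rowBytes, std::size_t alignment) {
    return (rowBytes + alignment - 1) / alignment * alignment;
}

// Returns a pointer to the first float (red) of pixel (x, y) in an RGBA
// float image whose rows are 'pitch' bytes apart.
float* PixelAddress(void* base, std::size_t pitch, int x, int y) {
    unsigned char* row = static_cast<unsigned char*>(base)
                         + static_cast<std::size_t>(y) * pitch;  // row start (bytes)
    return reinterpret_cast<float*>(row) + 4 * x;                // 4 floats per pixel
}
```

For a 100-pixel-wide RGBA float row (1600 bytes) and the assumed 512-byte alignment, the pitch becomes 2048 bytes, so pixel (2, 1) starts 2048 + 32 bytes from the base pointer.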

The function cudaGraphicsMapResources() maps the registered resources so that they can be accessed by CUDA. It is efficient to map and unmap all resources in a single call, and to place the map/unmap calls at the boundary between using the GPU for Direct3D and for CUDA (NVIDIA, 2013).

cudaGraphicsMapResources( nbResources, ppResources, stream);

The call to cudaGraphicsSubResourceGetMappedArray() returns, in cuArray, an array through which the sub-resource of the mapped graphics resource g_texture_2d.cudaResource corresponding to array index 0 and mipmap level 0 may be accessed (NVIDIA, 2013).

    cudaGraphicsSubResourceGetMappedArray(&cuArray, g_texture_2d.cudaResource, 0, 0);

The program then calls the CUDA kernel cuda_texture_2d(), passing the staging buffer g_texture_2d.cudaLinearMemory as an argument so that the kernel can write to it:

    cuda_texture_2d(g_texture_2d.cudaLinearMemory, g_texture_2d.width,
                    g_texture_2d.height, g_texture_2d.pitch, t);

Then cudaMemcpy2DToArray() copies g_texture_2d.cudaLinearMemory into the Direct3D texture via the previously mapped CUDA array cuArray:

    cudaMemcpy2DToArray(
        cuArray,                                 // dst array
        0, 0,                                    // offset
        g_texture_2d.cudaLinearMemory,           // src
        g_texture_2d.pitch,                      // source pitch in bytes
        g_texture_2d.width * 4 * sizeof(float),  // width of the copy in bytes
        g_texture_2d.height,                     // extent
        cudaMemcpyDeviceToDevice);               // kind


The function cudaGraphicsUnmapResources() unmaps the resources:

cudaGraphicsUnmapResources( nbResources, ppResources, stream);

2.2.1.3 CUDA kernel computation and HLSL program

A blank texture is passed to the CUDA kernel as an input argument. The red, green and blue (RGB) values of the pixels in the texture are calculated concurrently by multiple CUDA threads. The red value is always set to zero. The green and blue values of a pixel decrease as the distance between the pixel and the centre of the circle increases, and increase with time, as shown in the following code block. Figure 15a shows the resulting texture after the CUDA computation. The variable dist holds the distance between the pixel and the texture centre, and radius sets the maximum radius of the spreading blood pattern. Only pixels whose dist value is less than or equal to radius are updated. The variables finalGreenBluePixelValue and currentGreenBluePixelValue hold the final pixel value and the per-step increment respectively: the green and blue values are incremented by currentGreenBluePixelValue until they reach finalGreenBluePixelValue.

    float dist = sqrt((float)((pixelIndexX - centreX) * (pixelIndexX - centreX)) +
                      (float)((pixelIndexY - centreY) * (pixelIndexY - centreY)));
    if (dist <= radius)
    {
        // Target intensity: 1 at the centre, falling steeply (6th power) to 0 at the rim.
        float finalGreenBluePixelValue = pow((radius - dist) / radius, 6.0f);
        // Per-step increment, which grows with the elapsed time t.
        float currentGreenBluePixelValue = finalGreenBluePixelValue * t * 1E-5f;
        if (pixelByteAddress[1] < finalGreenBluePixelValue)
        {
            // Still growing: red stays 0, green and blue are incremented.
            pixelByteAddress[0] = 0.0f;
            pixelByteAddress[1] += currentGreenBluePixelValue;
            pixelByteAddress[2] += currentGreenBluePixelValue;
            pixelByteAddress[3] = 1.0f;
        }
        else
        {
            // Target reached: clamp green and blue to the final value.
            pixelByteAddress[0] = 0.0f;
            pixelByteAddress[1] = finalGreenBluePixelValue; // green
            pixelByteAddress[2] = finalGreenBluePixelValue; // blue
            pixelByteAddress[3] = 1.0f;
        }
    }
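To check the behaviour of the code above without a GPU, the per-pixel logic can be ported to a plain C++ loop in which each (x, y) iteration plays the role of one CUDA thread. The function name, the unpadded row-major RGBA layout and the integer centre are assumptions of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// CPU port of the per-pixel spreading logic; 'image' holds RGBA floats,
// row-major, without pitch padding (simplification).
void SpreadBloodPattern(std::vector<float>& image, int width, int height,
                        float radius, float t) {
    const int centreX = width / 2;
    const int centreY = height / 2;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {        // one "thread" per pixel
            float dx = static_cast<float>(x - centreX);
            float dy = static_cast<float>(y - centreY);
            float dist = std::sqrt(dx * dx + dy * dy);
            if (dist > radius) continue;          // outside the pattern
            float* pixel = &image[4 * (y * width + x)];
            float finalValue = std::pow((radius - dist) / radius, 6.0f);
            float step = finalValue * t * 1e-5f;  // increment grows with t
            if (pixel[1] < finalValue) {          // still growing
                pixel[1] += step;
                pixel[2] += step;
            } else {                              // clamp to the target
                pixel[1] = finalValue;
                pixel[2] = finalValue;
            }
            pixel[0] = 0.0f;                      // red is always zero
            pixel[3] = 1.0f;                      // opaque
        }
    }
}
```

As in the kernel, the increment is added before the comparison is repeated, so a pixel can exceed finalGreenBluePixelValue by up to one increment for a single frame before the else branch clamps it.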

The CUDA-calculated texture values are then passed to the HLSL program, which computes the final blended values for the 2D texture. The HLSL program subtracts the CUDA-calculated texture from the tissue texture values to produce a blood pattern that grows and spreads from the centre of the tissue.
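The subtraction can be written per pixel as follows. This C++ version sketches the arithmetic only; the clamp to [0, 1] (the effect HLSL's saturate() would have) and the names are assumptions, not the project's HLSL code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Rgb { float r, g, b; };

// Subtract the CUDA-computed pattern from the tissue colour, clamped to [0, 1].
Rgb SubtractBlend(const Rgb& tissue, const Rgb& pattern) {
    auto clamp01 = [](float v) { return std::min(std::max(v, 0.0f), 1.0f); };
    return {clamp01(tissue.r - pattern.r),
            clamp01(tissue.g - pattern.g),
            clamp01(tissue.b - pattern.b)};
}
```

Since the CUDA texture has a red value of zero, only green and blue are removed, shifting the tissue colour towards red where the pattern is strongest.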

2.2.2 Bleeding simulation - particle system

Particle systems are used to simulate the behaviour of blood flow. Particles are very small objects that have been used to simulate a variety of 3D graphics effects such as fire, smoke, rain and fluid (Luna, 2012). The bleeding simulation models blood flow on a 2D tissue texture. When a user clicks an area of the tissue model, blood starts flowing from the clicked area and is accelerated by gravity towards the bottom of the simulation panel. The bleeding simulation is built upon source code provided by Greg Ruthenbeck, which originally simulated deformable objects and particle systems for applications such as astrophysics. The deformation of objects has been switched off, and the particle systems have been further modified to accommodate the render-to-texture technique and CUDA kernel calls for updating the particle details and blurring the particle texture. The HLSL program will also be modified to blend the blurred texture with the tissue texture.

Figure 14 shows the activity diagram of the bleeding simulation. The simulation starts with Direct3D initialisation, then builds the interoperability with CUDA, checks for mouse clicks and performs the corresponding rendering activities. If no mouse click is detected, the rendered scene is the same as in the previous time step. Otherwise, a new particle is added to the dynamic vertex buffer, and the program updates the particle details, renders to texture, blurs the particle texture, and alpha-blends the blurred texture with the tissue texture. Finally, the program renders the 2D model with the blended texture. The dash-line-circled area in Figure 14 is the do-while loop of the simulation, which repeats until the user exits the program. Sub-sections 2.2.2.1 to 2.2.2.6 describe the activities designed for the simulation framework in detail, and Section 2.2.2.7 describes the integration of the haptic device that lets users interact with the simulation in real time.

Figure 14: The activity diagram of the bleeding simulation. The dash-line-circled area is the do-while looping part of the simulation. [Activities: Direct3D initialisation; interoperate Direct3D with CUDA; check if mouse is clicked; if no, render the same scene; if yes, generate a new particle and add it to the vertex buffer; CUDA kernel updates the position and velocity of particles; render to texture; CUDA kernel performs the blurring of the texture; perform alpha blending of the blurred texture with the tissue texture; render the 2D model with the blended texture.]

2.2.2.1 Direct3D initialisation and interoperability with CUDA

A dynamic vertex buffer is used to hold the particle details. The particle structure holds the particle attributes: position and velocity. The HLSL effect program renders the particles. Particles should be able to have various sizes, and an entire texture may even be mapped onto them. To extend the capability of the particle system, each point that marks a particle's location is expanded in the geometry shader into a quad that faces the camera (Luna, 2012). The following C++ code shows the position and velocity attributes of the particle structure.

    struct PosVel
    {
        D3DXVECTOR4 pos; // position
        D3DXVECTOR4 vel; // velocity
    };

To build the interoperability between CUDA and Direct3D, the steps specified in Section 2.2.1.2 are followed. The Direct3D device is specified by cudaD3D11SetDirect3DDevice() (Cook, 2012). The Direct3D resources that can be mapped into the address space of CUDA are Direct3D buffers, textures and surfaces. The particle vertex buffer g_pParticlePosVelo is registered using cudaGraphicsD3D11RegisterResource(), and the map flag for the graphics resource is set with cudaGraphicsResourceSetMapFlags() to cudaGraphicsMapFlagsWriteDiscard. This flag specifies that CUDA will not read from the resource positionsVB_CUDA and will write over its entire contents, so none of the data previously stored in positionsVB_CUDA will be preserved (NVIDIA, 2013). Because the kernel described in Section 2.2.2.3 reads the current positions and velocities before updating them, cudaGraphicsMapFlagsNone, which assumes the resource is both read and written by CUDA, would describe this access pattern more accurately. The following code builds the interoperability:

    cudaD3D11SetDirect3DDevice(pd3dDevice);
    cudaGraphicsD3D11RegisterResource(&positionsVB_CUDA, g_pParticlePosVelo,
                                      cudaGraphicsRegisterFlagsNone);
    cudaGraphicsResourceSetMapFlags(positionsVB_CUDA,
                                    cudaGraphicsMapFlagsWriteDiscard);

The following code creates the particle buffer description D3D11_BUFFER_DESC. The

descriptions of D3D11_BUFFER_DESC flags are listed in Table 1.

    D3D11_BUFFER_DESC desc;
    ZeroMemory(&desc, sizeof(desc));
    desc.Usage = D3D11_USAGE_DYNAMIC;
    desc.BindFlags = D3D11_BIND_SHADER_RESOURCE;
    desc.ByteWidth = mMaxNumParticles * sizeof(PosVel);
    desc.CPUAccessFlags = D3D11_CPU_ACCESS_WRITE;
    desc.MiscFlags = D3D11_RESOURCE_MISC_BUFFER_STRUCTURED;
    desc.StructureByteStride = sizeof(PosVel);

Table 1: Description of D3D11_BUFFER_DESC flags (NVIDIA, 2013).

| D3D11_BUFFER_DESC flag | Description |
|---|---|
| D3D11_USAGE_DYNAMIC | A dynamic resource is a good choice for a resource that will be updated by the CPU at least once per frame. |
| D3D11_CPU_ACCESS_WRITE | The resource is to be mappable so that the CPU can change its contents. |
| D3D11_RESOURCE_MISC_BUFFER_STRUCTURED | Enables a resource as a structured buffer. |

2.2.2.2 Emission and destruction of particles

Particles are generally emitted and destroyed over time. The dynamic vertex buffer created in the previous section is used to keep track of spawning and killing particles on the CPU (Luna, 2012); it contains the currently living particles, and the particle spawning cycle is carried out on the CPU. The following AddParticle() function adds a particle to the particle vertex buffer. While the user holds down the mouse button, a particle is added to the buffer at each time step until the button is released. D3D11_MAPPED_SUBRESOURCE provides access to the sub-resource data of the particle buffer g_pParticlePosVelo. The maximum number of particles that the buffer can hold is specified by mMaxNumParticles. Each new particle is written at the buffer position indicated by mNextWriteToId. Once mNextWriteToId equals mMaxNumParticles, it is reset to zero and the next new particle is written at the first location of the buffer, overwriting the oldest particle. The mNextWriteToId-related code segments were written by Greg Ruthenbeck.

    template <class T>
    void ParticleRenderer::AddParticle(const T& particle, ID3D11DeviceContext* pContext)
    {
        D3D11_MAPPED_SUBRESOURCE mappedSubRes;
        // Note: D3D11_MAP_WRITE_DISCARD leaves the previous contents of the
        // whole buffer undefined, not only the slot written below.
        if (SUCCEEDED(pContext->Map(g_pParticlePosVelo, 0, D3D11_MAP_WRITE_DISCARD, 0,
                                    &mappedSubRes)))
        {
            // Copy the new particle into slot mNextWriteToId.
            memcpy(static_cast<T*>(mappedSubRes.pData) + mNextWriteToId,
                   &particle, sizeof(T));
            pContext->Unmap(g_pParticlePosVelo, 0);
        }
        ++mNextWriteToId; // initialised to 0 in the ParticleRenderer constructor
        if (mNextWriteToId == mMaxNumParticles)
        {
            mNextWriteToId = 0; // wrap around: the oldest slot is reused next
        }
    }

However, using a dynamic vertex buffer incurs some overhead: at each time step in which a mouse event is triggered, the newly added particle is transferred from the CPU to the GPU. In future work, the particle update cycle should be moved from the CPU to the GPU. Offloading this workload to the GPU would free the CPU for other important tasks, such as computing force feedback for the haptic device.

The following function, updatePos(), maps the graphics resource positionsVB_CUDA for access by CUDA. The call to cudaGraphicsResourceGetMappedPointer() returns a pointer through which the mapped resource positionsVB_CUDA may be accessed (NVIDIA, 2013). The CUDA kernel cuda_calculatePositions() is then called to update the position and velocity of the particles.


    void ParticleRenderer::updatePos(float bound)
    {
        static float t = 0.0f;
        t += 0.0001f;
        float4* positions;
        cudaGraphicsMapResources(1, &positionsVB_CUDA, 0);
        size_t num_bytes;
        cudaGraphicsResourceGetMappedPointer((void**)&positions, &num_bytes,
                                             positionsVB_CUDA);
        // Execute kernel
        cuda_calculatePositions(positions, t, cudaWidth, cudaHeight, bound,
                                mMaxNumParticles);
        // Unmap vertex buffer
        cudaGraphicsUnmapResources(1, &positionsVB_CUDA, 0);
    }

2.2.2.3 CUDA kernel call to update particle details

The velocity and position of each particle are calculated by a CUDA kernel call at every time step. Particles are affected by gravity only, so released particles fall downwards until they reach the bottom of the simulation panel. When a kernel is called, it is executed N times in parallel by N different CUDA threads; as a result, the velocities and positions of the particles are updated concurrently.

Collision detection is performed between neighbouring particles and against the boundary. As a result, falling particles are able to accumulate at the bottom of the simulation panel.
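A per-particle update under gravity, with the bottom of the panel acting as a floor, can be sketched on the CPU as follows; in the kernel, each loop iteration would be one CUDA thread. The semi-implicit Euler integration, the y-up axis, and the gravity and time-step values are assumptions, and collisions between neighbouring particles are omitted here.

```cpp
#include <cassert>
#include <vector>

struct Particle { float x, y, vx, vy; };

// One time step for every particle; the loop body is what one thread would do.
void StepParticles(std::vector<Particle>& ps, float dt, float gravity, float floorY) {
    for (Particle& p : ps) {
        p.vy -= gravity * dt;   // accelerate downwards (y points up)
        p.x += p.vx * dt;       // semi-implicit Euler: use the updated velocity
        p.y += p.vy * dt;
        if (p.y < floorY) {     // reached the bottom of the panel
            p.y = floorY;       // rest on the floor so particles accumulate
            p.vy = 0.0f;
        }
    }
}
```

A particle released above the floor gains downward speed each step until it lands, after which it stays at floorY with zero vertical velocity.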

A synchronisation mechanism is applied after collision detection: the synchronisation function __syncthreads() is placed between the collision detection code block and the code block that updates the velocity and position of the particles, as shown below:

    // collision detection
    .....
    // synchronise threads in this block
    __syncthreads();
    // updating velocity and position of particles
    ....

The synchronisation ensures that collision detection has completed before the positions and velocities of the particles are updated. __syncthreads() guarantees that every thread in the block has completed the instructions preceding it before the hardware executes the next instruction on any thread of that block (Sanders and Kandrot, 2010). This is important because different threads are likely to be executing different instructions at any given moment. Synchronisation therefore ensures that collision detection uses the positions and velocities from the current time step, not the updated ones. Note that __syncthreads() synchronises only the threads within a single block; particles handled by different blocks are not synchronised by this call.
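The reason for the barrier can be illustrated with a two-phase CPU sketch: in phase one every particle reads the current positions of its neighbours and records a correction, and only in phase two are positions written. On the GPU, __syncthreads() would separate the two phases within a block. The one-dimensional stacking and the symmetric half-overlap push are simplified assumptions, not the project's collision rule.

```cpp
#include <cassert>
#include <vector>

// y holds particle centres along one axis, sorted bottom to top; particles
// closer than 2 * radius are pushed apart by half the overlap each.
void ResolveOverlaps(std::vector<float>& y, float radius) {
    const int n = static_cast<int>(y.size());
    std::vector<float> correction(n, 0.0f);
    // Phase 1: detect collisions using positions from the current time step.
    for (int i = 0; i < n; ++i) {
        if (i > 0) {
            float overlap = 2.0f * radius - (y[i] - y[i - 1]);
            if (overlap > 0.0f) correction[i] += 0.5f * overlap;  // push up
        }
        if (i + 1 < n) {
            float overlap = 2.0f * radius - (y[i + 1] - y[i]);
            if (overlap > 0.0f) correction[i] -= 0.5f * overlap;  // push down
        }
    }
    // Barrier point: all corrections are known before any position changes.
    // Phase 2: apply the corrections.
    for (int i = 0; i < n; ++i) y[i] += correction[i];
}
```

If the two phases were merged into one in-place loop, the second particle in a two-particle overlap would see its neighbour's already-moved position and receive a smaller push (0.25 instead of 0.5 for centres 1 apart with radius 1), so the result would depend on execution order.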


2.2.2.4 Render-to-texture

The render-to-texture technique, also known as render-to-off-screen-texture, is used to draw the blood-flow scene generated by the particle system described above to an off-screen texture, which is updated at each time step.

Render-to-texture draws into a different, off-screen texture instead of the back buffer (Luna, 2012). When the C++ program instructs Direct3D to render to the back buffer, it binds a render target view of the back buffer to the output merger stage of the rendering pipeline. The back buffer is simply a texture in the swap chain (Luna, 2012) whose contents are eventually displayed on screen. The same approach can therefore be applied to create another texture and bind it to the OM stage, so that this texture is drawn to instead of the back buffer. The only difference is that, because the texture is not a back buffer, it is not displayed on screen during presentation (Luna, 2012). A drawback of render-to-texture is its cost, since the scene is rendered multiple times (Rastertek, 2012a).

2.2.2.5 CUDA kernel call to blur the off-screen texture

The previous activity renders the blood flow created by the particle system to an off-screen texture. This texture is used as the input of a blurring algorithm executed with CUDA parallel computing. The blurring effect makes the blood clusters look like bleeding patterns. Rastertek (2012b) details an approach to implementing the blurring effect: down-sampling the texture to half size or less, performing horizontal and vertical blurring on the down-sampled texture, and up-sampling the texture back to the original screen size.
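The three steps can be sketched on a single-channel CPU image as follows. The 2x2 averaging, the 3-tap box filter and nearest-neighbour up-sampling are assumed choices for illustration and need not match Rastertek (2012b); all names are hypothetical, and in the simulation each output pixel would be computed by one CUDA thread.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Single-channel image stored row-major (one float per pixel).
struct Image {
    int w, h;
    std::vector<float> px;
    // Clamp-to-edge read, so filters can sample outside the image safely.
    float at(int x, int y) const {
        x = std::min(std::max(x, 0), w - 1);
        y = std::min(std::max(y, 0), h - 1);
        return px[y * w + x];
    }
};

// Halve each dimension by averaging 2x2 blocks (assumes even width and height).
Image Downsample(const Image& in) {
    Image out{in.w / 2, in.h / 2, std::vector<float>((in.w / 2) * (in.h / 2))};
    for (int y = 0; y < out.h; ++y)
        for (int x = 0; x < out.w; ++x)
            out.px[y * out.w + x] =
                0.25f * (in.at(2 * x, 2 * y) + in.at(2 * x + 1, 2 * y) +
                         in.at(2 * x, 2 * y + 1) + in.at(2 * x + 1, 2 * y + 1));
    return out;
}

// One 3-tap box-blur pass: (dx, dy) = (1, 0) is horizontal, (0, 1) vertical.
Image BlurPass(const Image& in, int dx, int dy) {
    Image out{in.w, in.h, std::vector<float>(in.px.size())};
    for (int y = 0; y < in.h; ++y)
        for (int x = 0; x < in.w; ++x)
            out.px[y * in.w + x] = (in.at(x - dx, y - dy) + in.at(x, y) +
                                    in.at(x + dx, y + dy)) / 3.0f;
    return out;
}

// Double each dimension using nearest-neighbour sampling.
Image Upsample(const Image& in) {
    Image out{in.w * 2, in.h * 2, std::vector<float>(in.px.size() * 4)};
    for (int y = 0; y < out.h; ++y)
        for (int x = 0; x < out.w; ++x)
            out.px[y * out.w + x] = in.at(x / 2, y / 2);
    return out;
}

// Down-sample, blur horizontally then vertically, and up-sample back.
Image BlurTexture(const Image& in) {
    Image half = Downsample(in);
    half = BlurPass(half, 1, 0);
    half = BlurPass(half, 0, 1);
    return Upsample(half);
}
```

Blurring at half resolution touches a quarter of the pixels per pass, and splitting the 2D filter into horizontal and vertical passes reduces the samples per pixel from 9 to 6 for a 3x3 box filter.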

2.2.2.6 Alpha blending

After the off-screen texture is blurred, the tissue texture is blended with the blurred texture to create the blood-flow effect on the cut area of the 2D tissue model. The shader program will implement alpha blending of these two textures, analogous to the blending in the texture-based blood simulation. The alpha value of the particle patterns in the texture is set to one, while the rest of the texture has an alpha value of zero. The tissue texture's alpha channel is also set to one. As a result, only the pixel values of the particle patterns and the whole tissue texture contribute to the final pixel values. After alpha blending, the final activity of the simulation is to render the blood flowing on the 2D tissue model.
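The blend described above corresponds to the standard source-over formula, result = alpha * blood + (1 - alpha) * tissue, evaluated per pixel. The C++ sketch below assumes that the blurred particle texture supplies the alpha value and uses an illustrative blood colour; it shows the arithmetic only and is not the project's shader code.

```cpp
#include <cassert>
#include <cmath>

struct Rgba { float r, g, b, a; };

// Source-over blend of a particle (blood) texel onto an opaque tissue texel.
Rgba AlphaBlend(const Rgba& src, const Rgba& dst) {
    float a = src.a;                         // alpha from the particle texture
    return {a * src.r + (1.0f - a) * dst.r,
            a * src.g + (1.0f - a) * dst.g,
            a * src.b + (1.0f - a) * dst.b,
            1.0f};                           // tissue alpha is one: result is opaque
}
```

With alpha equal to one on particle patterns and zero elsewhere, the tissue is replaced only where particles were drawn; fractional alpha produced by the blur gives a soft edge.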

2.2.2.7

Haptic force feedback

The haptic device will be integrated into the bleeding simulation. With the Novint Falcon haptic device, users can interact with the bleeding simulation in real time. Users should feel force feedback when touching and cutting the tissue surface with the device, whose stylus acts as the surgical instrument. When the user cuts the tissue with the stylus, blood starts flowing from the cut point and accumulates at the bottom of the simulation panel.


3.

Progress result

Figures 15 to 17 show the results of the texture-based blood simulation and the bleeding simulation. These simulations were tested on a machine with a third-generation Intel quad-core i7-3610QM 2.3 GHz processor (with Turbo Boost up to 3.3 GHz), 8 GB of physical random-access memory (RAM) and an NVIDIA® GeForce® GT 640M graphics card with 2 GB of dedicated video RAM (VRAM).

3.1

Texture-based blood simulation

The texture-based blood simulation generates a growing blood pattern with a blurred edge, as shown in Figure 15b. Figure 15a shows the texture colour calculated with CUDA, using the technique described in Section 2.2.1. The colour is produced from the computed green and blue values of each pixel. These values decrease as the distance from the centre of the circle increases, and increase over time. Figure 15b shows the final texture after blending the tissue texture with the CUDA-calculated texture (Figure 15a).

Figure 15: a) Resultant texture calculated by a CUDA kernel. b) Final texture after blending the tissue

texture with the CUDA-computed texture (Figure 15a).
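The per-pixel behaviour described above can be sketched as a small function. The linear formula, the parameter names and the values are assumptions chosen only to reproduce the stated properties (the value decreases with distance from the cut point and increases with time); the kernel described in Section 2.2.1 may use a different expression. The loop in `computePattern` stands in for the CUDA kernel launch, in which each thread would compute one pixel.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical parameters for the growing blood pattern.
struct BloodPatternParams {
    float centreX, centreY;  // cut point, in pixels
    float growthRate;        // rise of the value per second
    float falloff;           // drop of the value per pixel of distance
};

// Value for one pixel: decreases with the distance r from the cut point and
// increases with the elapsed time t, clamped to [0, 1].
float bloodValue(float x, float y, float t, const BloodPatternParams& p) {
    const float r = std::hypot(x - p.centreX, y - p.centreY);
    return std::clamp(p.growthRate * t - p.falloff * r, 0.0f, 1.0f);
}

// CPU stand-in for the CUDA kernel (one loop iteration = one CUDA thread).
// The returned value would drive the green and blue channels of each pixel.
std::vector<float> computePattern(int w, int h, float t, const BloodPatternParams& p) {
    std::vector<float> values(static_cast<std::size_t>(w) * h);
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            values[static_cast<std::size_t>(y) * w + x] =
                bloodValue(static_cast<float>(x), static_cast<float>(y), t, p);
    return values;
}
```

Because the value reaches zero at r = growthRate * t / falloff, the pattern spreads radially as time passes, and the linear ramp just inside that contour produces a soft edge similar to the blurred edge described for Figure 15b.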

3.2

Bleeding simulation

Figure 17 shows the result of the bleeding simulation. When the user clicks anywhere on the simulation panel, a cluster of particles is generated and pulled by gravity toward the bottom of the panel. This simulates the bleeding that occurs when a surgeon cuts tissue: once the tissue is cut, the blood, represented by particles, starts flowing from the cut area toward the bottom edge of the panel, where the blood cluster accumulates.
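The particle behaviour described above can be sketched with semi-implicit Euler integration under gravity. The names, the screen-space convention (y increasing downward, panel bottom at `panelHeight`) and the simple rule of stopping particles at the bottom so that they accumulate are assumptions. The simulator performs these updates in a CUDA kernel, where the body of the loop in `step` would correspond to one thread per particle.

```cpp
#include <cstddef>
#include <random>
#include <vector>

struct Particle { float x, y, vx, vy; };

// Spawn a cluster of particles scattered uniformly around the click point.
std::vector<Particle> spawnCluster(float cx, float cy, int count, float spread, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> offset(-spread, spread);
    std::vector<Particle> ps;
    ps.reserve(static_cast<std::size_t>(count));
    for (int i = 0; i < count; ++i)
        ps.push_back({cx + offset(rng), cy + offset(rng), 0.0f, 0.0f});
    return ps;
}

// One semi-implicit Euler step: update velocity from gravity, then position
// from the new velocity. Particles reaching the bottom stop there and pile up.
void step(std::vector<Particle>& ps, float dt, float gravity, float panelHeight) {
    for (Particle& p : ps) {
        p.vy += gravity * dt;
        p.x += p.vx * dt;
        p.y += p.vy * dt;
        if (p.y >= panelHeight) {
            p.y = panelHeight;
            p.vx = 0.0f;
            p.vy = 0.0f;
        }
    }
}
```

Because each particle is updated independently, the loop maps directly onto one GPU thread per particle, which is the property the CUDA version exploits.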

Figure 16 shows the C# version of the particle simulation. The C# application was built on source code provided by Greg Ruthenbeck, with modifications for testing a variety of bleeding effects. The major difference between the C# and Direct3D versions is that the C# application uses no parallel computing technique: the velocities and positions of the particles are updated sequentially. Although a wrapper could be used to call CUDA from C#, the API provided for the Falcon haptic device is written in C++. This makes the Direct3D version a better choice than the C# version.

Figure 16: The C# particle simulation.

Figure 17: The Direct3D bleeding simulation.


4.

Future work

This progress report presents the approaches and results of the texture-based blood simulation and the particle system, detailed in the Approach and Progress result sections. The remaining work for the honours project is listed below; these techniques will be implemented in the bleeding simulation:

a. Add the render-to-texture technique described in Section 2.2.2.4.

b. Add the blurring effect for the off-screen texture described in Section 2.2.2.5.

c. Add alpha blending to blend the blurred texture with the tissue texture, as described in Section 2.2.2.6.

d. Integrate the haptic device into the bleeding simulation, as described in Section 2.2.2.7.

e. Implement a 3D tissue model in the bleeding simulation. The bleeding simulation will migrate from a 2D model to a 3D model once the haptic feedback implementation has been completed. Calculating the u and v texture coordinates at the point on the model where the cutting is performed is expected to be challenging.

Due to time constraints, the project aims to implement the techniques described in the Approach section. The bleeding simulation built in this project will serve as a prototype to investigate a variety of approaches that best meet the bleeding requirements of the ESS simulator. More work should be carried out to further improve the bleeding simulation. Recommended future work includes:

a. Integrate the bleeding simulation into the ESS simulator.

b. Implement blood aspiration. During ESS, blood aspiration is one of the tasks the surgeon has to perform to increase the visibility of the surgical scene (Borgeat et al., 2011).

c. Initialise the amount of bleeding based on the density of blood vessels at the point where the instrument tip contacts the tissue (Halic et al., 2010). It is logical to assume that a tissue region with higher vessel density will bleed more than one with lower density (Halic et al., 2010).
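Item c could be prototyped by sampling a vessel-density texture at the contact point and scaling the number of emitted particles by the sampled value. The `DensityMap` type, the nearest-texel lookup and the linear density-to-particle mapping are illustrative assumptions, not the method of Halic et al. (2010).

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical vessel-density map: w x h values in [0, 1], stored row-major.
struct DensityMap {
    int w, h;
    std::vector<float> d;

    // Nearest-texel lookup at texture coordinates (u, v) in [0, 1].
    float sample(float u, float v) const {
        const int x = std::clamp(static_cast<int>(u * w), 0, w - 1);
        const int y = std::clamp(static_cast<int>(v * h), 0, h - 1);
        return d[static_cast<std::size_t>(y) * w + x];
    }
};

// Number of blood particles to emit at the contact point, scaled linearly
// by the local vessel density and rounded to the nearest integer.
int particlesToEmit(const DensityMap& map, float u, float v, int maxParticles) {
    const float density = std::clamp(map.sample(u, v), 0.0f, 1.0f);
    return static_cast<int>(density * static_cast<float>(maxParticles) + 0.5f);
}
```

A linear mapping is the simplest form of the assumption that denser regions bleed more; a non-linear curve could be substituted once clinical guidance is available.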


5.

Conclusion

The texture-based blood simulation and the bleeding simulation are presented in this progress report. The texture-based blood simulation is able to model the growth and radial spreading of the blood pattern from the central cut point. The bleeding simulation shows the blood flow, represented by the particle system, when the user clicks the left mouse button. The velocity and position of the particles are computed by a CUDA kernel running on the GPU. Offloading this workload to the GPU frees CPU resources for other important tasks. On completion of the project, users should be able to use the Falcon haptic device to interact with the bleeding simulation in real time. When the user cuts the tissue with the haptic device, the generated blood flows downward and accumulates at the bottom of the simulation panel.

The Future work section describes the techniques to be implemented in the remainder of the project. Beyond the project, the most important future work is to integrate the bleeding simulation with the ESS simulator. After the integration, optimisation will need to be carried out to meet the refresh-rate requirement of the ESS simulator.

This study will serve as a prototype to investigate the techniques that best meet the bleeding-effect requirements of the ESS simulator. Once completed, the ESS simulator may bring benefits to surgical training (Ooi and Witterick, 2010). However, further research is needed to validate the use of the ESS simulator with the bleeding effect. Various validation studies should be carried out before the simulator can be widely and effectively adopted in surgical training programs (Ooi and Witterick, 2010).


Appendix

A.1

Acronyms

3D     3-Dimensional

API    Application Programming Interface

CPU    Central Processing Unit

CT     X-ray Computed Tomography

ESS    Endoscopic Sinus Surgery

FPS    Frames Per Second

GPU    Graphics Processing Unit

MRI    Magnetic Resonance Imaging

VR     Virtual Reality


References

Ahlberg, G., Enochsson, L., Gallagher, A. G., Hedman, L., Hogman, C., McClusky III, D. A.,

Ramel, S., Smith, C. D. & Arvidsson, D. 2007. Proficiency-based virtual reality

training significantly reduces the error rate for residents during their first 10

laparoscopic cholecystectomies. American Journal of Surgery, 193, 797-804.

Bakker, N. H., Fokkens, W. J. & Grimbergen, C. A. 2005. Investigation of training needs for

Functional Endoscopic Sinus Surgery (FESS). Rhinology, 43, 104-108.

Block, G. 2007. Novint Falcon Review: Is this long-awaited 3D-touch controller the future of PC-gaming? Find out. [Online]. IGN. Available: http://www.ign.com/articles/2007/11/06/novint-falcon-review [Accessed 12/02 2013].

Borgeat, L., Massicotte, P., Poirier, G. & Godin, G. 2011. Layered surface fluid simulation

for surgical training. Medical image computing and computer-assisted intervention :

MICCAI ... International Conference on Medical Image Computing and Computer-Assisted Intervention, 14, 323-330.

Cook, S. 2012. CUDA Programming: A Developer's Guide to Parallel Computing with

GPUs, USA, Morgan Kaufmann.

Fitzgerald, T. N., Duffy, A. J., Bell, R. L., Berman, L., Longo, W. E. & Roberts, K. E. 2008.

Computer-Based Endoscopy Simulation: Emerging Roles in Teaching and Professional

Skills Assessment. Journal of Surgical Education, 65, 229-235.

Fried, M. P., Sadoughi, B., Weghorst, S. J., Zeltsan, M., Cuellar, H., Uribe, J. I., Sasaki, C.

T., Ross, D. A., Jacobs, J. B., Lebowitz, R. A. & Satava, R. M. 2007. Construct validity

of the endoscopic sinus surgery simulator II. Assessment of discriminant validity and

expert benchmarking. Archives of Otolaryngology - Head and Neck Surgery, 133, 350-357.

Glaser, A. Y., Hall, C. B., Uribe, J. I. & Fried, M. P. 2006. Medical students' attitudes toward

the use of an endoscopic sinus surgery simulator as a training tool. American Journal of

Rhinology, 20, 177-179.

Gurusamy, K., Aggarwal, R., Palanivelu, L. & Davidson, B. R. 2008. Systematic review of

randomized controlled trials on the effectiveness of virtual reality training for

laparoscopic surgery. British Journal of Surgery, 95, 1088-1097.

Halic, T., Sankaranarayanan, G. & De, S. 2010. GPU-based efficient realistic techniques for

bleeding and smoke generation in surgical simulators. International Journal of Medical

Robotics and Computer Assisted Surgery, 6, 431-443.

Hanners. 2008. What's next for DirectX? A DirectX 11 overview [Online]. Elite Bastards.

Available:

http://www.elitebastards.com/?option=com_content&task=view&id=611&Itemid=29

[Accessed 11/02 2013].

http://www.orclinic.com/information/ente_PostOpSinusSurgery. 2012. Endoscopic Sinus

Surgery: A Patient's Guide - ENT East Division [Online]. Available:

http://www.orclinic.com/information/ente_PostOpSinusSurgery [Accessed 03/02

2013].

Kerwin, T., Shen, H. W. & Stredney, D. 2009. Enhancing realism of wet surfaces in temporal

bone surgical simulation. IEEE Transactions on Visualization and Computer Graphics,

15, 747-758.