DIP failure on 09/09/2012


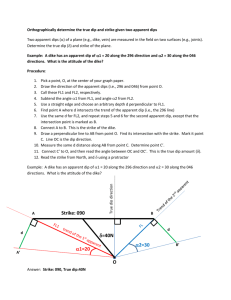

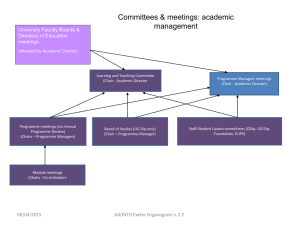

On Sunday 9 September at 08h26, the Secondary DIP Name Server monitoring process (DIPNS2) stopped due to a memory shortage. At 18h05 the same day, the TIM team reported to the EN-ICE Standby Service that both the Primary and the Secondary DIP Name Servers were unavailable. After a restart, the Primary DIP Name Server became operational again. The Secondary Name Server was only rebooted the next day, Monday 10 September, at 10h31, thereby restoring the service to its normal state (i.e. active Primary name server with active monitoring, and inactive Secondary name server with active monitoring).

Background information

The current operational version of the DIM Name Server binaries used to support the DIP Name Server GPN and TN infrastructures is version 19r37, which was deployed on 29 June 2012. The TN DIP name servers CS-CCR-DIPTN1 and CS-CCR-DIPTN2 are two HP ProLiant G7 servers running SLC5, dedicated to running the primary DNS process and its associated monitoring and redundancy processes. CS-CCR-DIPTN1 also runs the DIP Contract Monitoring (DIPG) agent, which subscribes to a large number of DIP publications in order to monitor their availability and data quality.

At runtime, the DIP Name Server monitoring package is composed of:

- A Tanuki Service Wrapper installation: a service wrapper specialized in Java processes. This watchdog automatically restarts the Java virtual machine in case of anomalies (note that it is not currently configured to restart the VM in case of OUT OF MEMORY errors).
- A Java process that monitors the DIP name server process (either the primary or the secondary name server), evaluates its response time, pipes its standard output to a logfile and restarts it in case of anomalies.
- The DIP name server binary, which is in fact a DIM name server configured to operate on port 2506.

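The monitoring behaviour described above (measure the name server's response time, restart it in case of anomalies) can be illustrated with a minimal sketch. This is not the actual Java monitoring process: the function names `check_once` and `needs_restart`, the three states, and all thresholds are assumptions chosen for illustration. The point it shows is that each probe is bounded by a hard deadline, so a server that accepts a connection but never answers is classified as unresponsive rather than blocking the monitor.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def check_once(probe, slow_after_s, give_up_after_s):
    """Run one probe and classify it as 'ok', 'slow' or 'unresponsive'.

    `probe` is any callable that queries the name server (hypothetical;
    the real DIM query is not shown here).
    """
    pool = ThreadPoolExecutor(max_workers=1)
    started = time.monotonic()
    future = pool.submit(probe)
    try:
        # Bound the wait so that a server which accepts the connection
        # but never answers cannot block the monitor itself.
        future.result(timeout=give_up_after_s)
    except Exception:
        # Deadline exceeded or probe error (e.g. connection refused).
        return "unresponsive"
    finally:
        # Do not wait for a probe that may still be hanging.
        pool.shutdown(wait=False)
    elapsed = time.monotonic() - started
    return "slow" if elapsed > slow_after_s else "ok"


def needs_restart(history, max_consecutive_bad):
    """True when the last `max_consecutive_bad` checks were all not 'ok'."""
    recent = history[-max_consecutive_bad:]
    return len(recent) == max_consecutive_bad and all(s != "ok" for s in recent)
```

In a real monitor the probe itself should also use its own socket timeouts, since a worker thread that never returns cannot be cancelled from the outside in this sketch.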
Sequence of failure

Author: Fernando Varela (on Standby Duty at the time)

18h45 Call to the CCC to find out whether they had seen the problem. Yes, they detected the problem around 15h and called Kris Kostro, who asked them to restart some gateways, with no success. I told them that we would need to restart the name server to fix the problem. They needed to check whether this was possible, as they were in operation.

19h00 Call to Enzo to check whether I could reboot the machine or whether he preferred to do it himself. He will do it.

19h05 Call to the CC to inform them that we are about to reboot the server, cs-ccr-diptn1.

19h07 Call to Enzo and reboot of the machine.

19h15 Server is back in business and publications are now listed in the DIP browser. We thank Enzo and apologize for having called him during the weekend.

19h20 Call to the CCC to inform them about the end of the intervention. They tell me they are too busy to take my call and ask me to call back.

19h25 Call to Mark Brightwell to inform him about the intervention. He checks and can also see the DIP publications.

19h45 Call to the CCC again to check whether everything is OK. They do not know yet because they need to reboot several processes, but things look better than before. The operator says that they will need some more time to check. I give them my phone number and ask them to call again in case of problems.

21h00 A second call from the CCC. After the restart of the DIP name servers, not all publications were properly reconnected. The CCC mention the following systems as having problems:

- GCS in ATLAS and CMS
- ALICE beam monitoring

Checks done: Open the DIP browser and check the publication list. Publications could not be found for any of the three systems.

Actions taken:

GCS: Call the GCS expert -> No answer. Call the CMS GCS and ask them to call the PH-DT piquet, as they are supposed to provide the first-line support.
Anyway, the GCS is not a system covered by our piquet service, and neither CMS nor ATLAS agreed to have their systems monitored by MOON. I told the CMS shift leader that my suspicion is that the DIP API manager in the GCS projects has to be restarted, but I do not have access to the machines and I do not know the consequences of such an action. Call back to the CCC to inform them about my conversation with the CMS shift leader. The CCC operators will also call the PH-DT Gas piquet about the problem with the ATLAS GCS.

ALICE Luminosity: Ask the CCC to call the ALICE DCS team, as the project that publishes this information is under their responsibility.

21h30 End of the intervention.

What are the reasons, are the failures understood

The Secondary DIPNS monitoring reported slow behavior from the primary Name Server on Sunday 9 September between 07h27 and 08h08. This caused the secondary name server to start up, which was reported by email to the DIP service experts. However, the wrapper / watchdog process of the secondary name server died "gracefully" at 08h26 that morning with an OUT OF MEMORY ERROR (this incident was not reported by email, as the secondary DIP name server monitoring process stopped altogether). All publishers were therefore forcefully redirected to the Primary DIP name server, where the performance disruptions stopped shortly after.

From this point, the primary name server remained active and functional until 18h05, when it froze without any support from the secondary name server: the DNS process remained alive, but would not reply to any queries, without any timeouts or error messages, as reported by Fernando Varela. At the time of the incident, the Primary DIP name server process was active, but somehow not replying to any DIM requests (the DID tool could not query the DNS for its internal status: a connection was established, but no data was ever obtained).
It is not understood why the primary DIP name server process was not simply killed by the monitoring process. Fernando Varela rebooted the primary server machine, as indicated in the documented piquet procedure, asking BE-CO administrators to intervene. This resolved the problem, as the primary monitoring restarted and immediately instantiated a DNS process.

Following this incident, there have been further disruptions to the Primary DIP Name Server, which had to be killed due to a slow response time on the following dates:

- 10th September (14h15 to 15h07)
- 11th September (23h05 to 00h12)
- 17th September (14h28 to 14h30)
- 18th September (08h42 to 08h44)

The redundancy mechanisms worked as expected every time. Once the primary name server was restarted, all publications were accounted for again within 5 to 10 minutes.

Action taken to warn the EN/ICE SBS piquet in case of similar failure

The following actions have been taken to avoid future problems:

- DIP-101: deploy FMC Agents and integrate DIP Name Server monitoring into EN-ICE MOON.
- DIP-104: reorganise DIP name server maintenance procedures in the EN-ICE Standby Duty manual.

Short term actions (Christmas 2012 technical stop)

- Integration of the DIP Name Server infrastructure in the EN-ICE MOON monitoring infrastructure and DIP Contract Monitoring facility.
- Configure the DIP name server monitoring to restart automatically in case of OUT OF MEMORY ERROR (this error caused the secondary DIP name server monitoring process to stop on Sunday 9 September).
- Upgrade of the DIM Name Server binaries to version 19r38, which introduces a random reconnection time, helping to scatter the load upon DIP NS restart.

After discussion with Clara Gaspar:

◦ Modification of the DIP monitoring process so as to avoid piping the DNS standard output: it should flow freely to a rotating log file.

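The effect of the random reconnection time listed among the short term actions can be illustrated with a small sketch. This is not the DIM 19r38 implementation, whose actual delay distribution and parameters are not given here: `reconnect_delay`, `peak_load` and all numeric values are assumptions for illustration only. The idea shown is that adding a uniform random extra delay per client spreads the reconnections over time, which lowers the number of clients hitting the restarted name server in any short window.

```python
import random


def reconnect_delay(base_s, spread_s, rng=random):
    """Return base_s plus a uniform random extra delay in [0, spread_s]."""
    return base_s + rng.uniform(0.0, spread_s)


def peak_load(delays, window_s):
    """Largest number of reconnections falling into any window of window_s seconds."""
    ordered = sorted(delays)
    best = 0
    start = 0
    for end, t in enumerate(ordered):
        # Shrink the window from the left until it spans at most window_s.
        while t - ordered[start] > window_s:
            start += 1
        best = max(best, end - start + 1)
    return best


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the comparison repeatable
    clients = 1000
    fixed = [1.0] * clients  # every client reconnects after exactly 1 s
    jittered = [reconnect_delay(1.0, 10.0, rng) for _ in range(clients)]
    print("peak per 100 ms, fixed delay:   ", peak_load(fixed, 0.1))
    print("peak per 100 ms, jittered delay:", peak_load(jittered, 0.1))
```

With a fixed delay all clients reconnect in the same instant, whereas with the jittered delay the peak is a small fraction of the client count; the trade-off is that full recovery takes up to `spread_s` longer.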
Long term actions

- Investigate the possibility of gaining more visibility into the DIM DNS process that underpins the DIP name server activity, for instance by implementing on-demand statistics that could be consulted without having to go through the DIM protocol (the DNS incidents showed that when the DNS is unresponsive, the DID tool cannot be used to connect to it and investigate problems).
- Potential separation of infrastructure and accelerator publications, requiring a separate subscription process for each.

After discussion with Clara Gaspar:

◦ Testing for long disconnection situations: Clara has observed that, after a long disconnection, some DIM clients / servers may not reconnect to the name server at all.

Annexes

These diagrams explain the sequence of events during a typical DIP Name Server failure. This incident (ENS-6034) was different because the Primary DIP Name Server could not be shut down, and the secondary DIP name server was unavailable to take over, as its monitoring had crashed on the morning of Sunday 9 September.